We are all waiting for you Mr. Disk….are you there?

1. Introduction

As more I deal with Performance issue on ISA Server (or TMG), more I realize that there are not really a lot of new things on this area to be explored. The reason why I say that there are not much of new things on this area is because I can easily map the top five core causes of ISA/TMG stop responding requests and causing the “server hanging” symptom, which are:

· DNS – a wrong DNS configuration or a lack of response from the DNS Server can definitely cause issues on ISA. Please see the following related articles:

https://blogs.technet.com/b/isablog/archive/2009/01/12/isa-server-2006-stops-answering-requests.aspx

· Authentication – if the DC doesn’t answer, ISA can’t authenticate and as result new authentication requests will start to accumulate. The infamous 5783/5719 scenario is a good example of that. Please see the following related articles:

· Logging – if we can’t log we will eventually stop responding. On ISA we go to lockdown mode, on TMG we start to write the LLQ files in the disk, which can fill up the disk and the server runs out of disk space, which will end up causing ISA/TMG stop responding. Please see the following related articles:

· Disk – this is one key element, because if we have disk bottleneck, everything else will fall apart. Please see the following related articles:

· Antivirus – well, yeah…this is true. There are many elements here that can go wrong, for example: some antivirus also introduces firewall modules and cause conflicts with ISA/TMG firewall kernel engine, which is something that I already explained in here. Please see the following related articles:

As you can see this is a long list and the scenario that I’m about to describe on this post is a combination of all elements above and I like to call it: the perfect storm. What’s the symptom? The usual: ISA Server stop responding request and to fix ISA Admin have to restart Firewall Service.

2. Data Gathering

On this type of scenario the most common action plan is to gather perfmon, dump of the wspsrv.exe process and ISA Data Packager in repro mode. Here are the core steps:

a. Install ISABPA from www.isabpa.com

b. Configure Performance Monitoring with the following objects:

> ISA Server Firewall Packet Engine/*

> ISA Server Firewall Service/*

> ISA Server Web Proxy/*

> Memory/*

> Processor/*

> Network Interface/*

> Process/*

> Physical Disk/*

> Threads/*

Note: configure the maximum size file for 200MB, the refresh time to 15 seconds and configure Perfmon to stop when the log is full and create a new file (Schedule Tab).

c. Install the DebugDiag (download from the link below):

When the issue happens, the following steps needs to be done in order to gather the correct data:

a. Go to Start / Programs / Microsoft ISA Server / ISA Tools / ISA Data Packager

b. On the option "Collect data using one of the following repro scenarios", select "Web Proxy and Web Publishing" and click Next;

c. Click in Modify Options;

d. In addition to the options that are already selected please select also:

- ISA BPA

- ISA Info

e. Click in Start data collection

f. The Data Packager will start to run. When the option "Press spacebar to start the capture" appears, press the spacebar and repro the issue by trying to connect from the client workstation.

g. After you finishing testing then press space bar again in the ISA Data Packager console.

h. When ISA Data Packager finishes collecting data, open DebugDiag.

i. On the first Debugdiag screen (Select Rule Type) click cancel.

j. Go to Processes tab and look for the wspsrv.exe process.

l. While this window is open, go back to the workstation and try to connect again.

m. While the workstation is trying to connect, go back to debugdiag window, right click on wspsrv.exe process and choose Create Full User Dump.

n. Stop Perfmon counter.

3. Data Analysis

Having the correct data in hands you can start looking for obvious issues on the ISA BPA report. If there is nothing there that is relevant for this type of issue, move forward to analyze the perfmon or dump. In this particular case I’m going to review the dump first.

First step was to check if there was any thread locked in Critical Section:

0:000> !cs -l

-----------------------------------------

DebugInfo = 0x000c0fe8

Critical section = 0x011c9024 (+0x11C9024)

LOCKED

LockCount = 0x0

WaiterWoken = No

OwningThread = 0x0000113c

RecursionCount = 0x1

LockSemaphore = 0x0

SpinCount = 0x00000000

-----------------------------------------

Notice that this thread is locked and we have the address of the owning thread; let’s see which thread is that:

45 Id: 1c38.113c Suspend: 1 Teb: 7ffa0000 Unfrozen

ChildEBP RetAddr Args to Child

36d8f354 7c827d29 77e61d1e 00000d28 00000000 ntdll!KiFastSystemCallRet

36d8f358 77e61d1e 00000d28 00000000 36d8f39c ntdll!ZwWaitForSingleObject+0xc

36d8f3c8 77e61c8d 00000d28 00004e20 00000000 kernel32!WaitForSingleObjectEx+0xac

36d8f3dc 74cd2e3f 00000d28 00004e20 00004e20 kernel32!WaitForSingleObject+0x12

36d8f408 6d56ddde 002fb5b0 0138d2a0 00000009 DBmsLPCn!ConnectionRead+0xaf

36d8f428 6d5687fc 0138f2c0 0138d2a0 00000009 dbnetlib!WrapperRead+0x2c

36d8f480 4e2597ce 0138f2c0 0138d2a0 0138d2a0 dbnetlib!ConnectionRead+0x519

36d8f4b4 4e25982d 0138f2c0 0138d2a0 00000009 sqloledb!CDataSource::ConnectionRead+0x35

36d8f500 4e252358 0138d06e 00000001 00000000 sqloledb!CDBConnection::GetBytes+0x269

36d8f54c 4e2555c4 011da180 00000088 0000001e sqloledb!CDBConnection::ProcessTDSStream+0x157

36d8f608 4e255691 011c5680 0000003d 011fb198 sqloledb!CStmt::ExecDirect+0x786

36d8f620 4e254d32 011c5680 0000003d 00000000 sqloledb!CStmt::SQLExecDirect+0x28

36d8f650 4e25517d 00000000 4e25321c 0000003d sqloledb!CCommand::ExecuteHelper+0x157

36d8f6d4 4e254c4b 011d4888 00000000 4bbea778 sqloledb!CCommand::Execute+0x76b

36d8f70c 4bbea64d 011e8550 00000000 4bbea778 sqloledb!CImpICommandText::Execute+0xdd

36d8f74c 4bc0c79b 011c8d78 011fb22c 011f8738 msado15!CConnection::Execute+0x9d

36d8f91c 4bbea4a7 011cfed8 00000000 011d16c8 msado15!_ExecuteAsync+0x19f

36d8f930 4bbea385 011cfed8 ffffffff 00000000 msado15!ExecuteAsync+0x23

36d8fa18 4bbea258 00000000 7c828200 00000000 msado15!CQuery::Execute+0xa5e

36d8fa84 4bc21717 011d16c8 00000000 7c828200 msado15!CCommand::_Execute+0x153

The yellow line in the second stack shows that the machine is submitting a SQL statement using the SQLExecDirect function. Now let’s see what SQL command is being executed:

0:000> du 011c5680

011c5680 "SELECT RTRIM(filename) FROM ISAL"

011c56c0 "OG_20101107_FWS_000..sysfiles"

Logs starting with FWS suffix represent the Firewall log; in this case ISA was querying the SQL database for this log. Now where is SQL located? According to ISAInfo collected by ISA Data Packager the Log was located on the D: drive, which was actually part of the same disk as C:, only in a different partition. Now it is time to review perfmon and see if we can match this with something going on from the disk perspective.

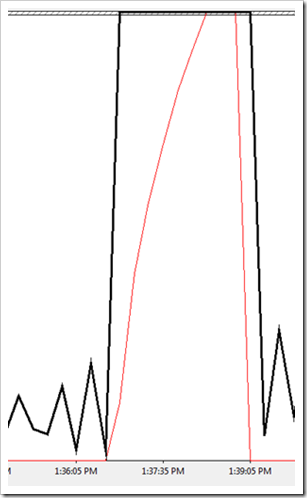

Here it is sample of the time where the issue was happening:

The black line represents the Average Disk Queue Length that goes from zero to 26 (maximum should be 2 per spindle - in this case we just have 1 spindle) and got stuck there from 1:36PM to 1:39PM. During the same time we see the ISA Server Firewall Packet Engine\Backlogged Packets goes from zero to 113 (maximum should never be higher than 10). The logic here is the following:

1. ISA is trying to query a firewall log located on the SQL (MSDE in this case) database. ISA is waiting on SQL.

2. SQL is performing a reading operation for a piece of information located in disk. SQL is waiting on Disk.

3. Disk is having bottleneck and it is queuing up requests.

4. Since ISA can’t proceed (since is waiting on disk), ISA starts to accumulate requests (backlog starts to grow). ISA stop answering new requests.

5. Clients can’t browse.

You might be thinking, but this is only for 3 minutes, I can live with that. Really? I doubt your helpdesk will not overflow of calls if nobody can browse Internet for 3 minutes.

4. Conclusion

I hope this post gives you a big picture of what goes behind an ISA (or TMG) performance issue in scenarios where ISA/TMG stops responding. There are much more elements that needs to be investigated other than ISA/TMG itself.