The App-V Client does not upload reporting data, resulting with Event 3144 and error code 0A-200001F4

Hi everyone, Madelinde here again. Some time ago I was working on a reporting issue for App-V and because this issue keeps popping up every once in a while I thought I’d write a blog post about it so you would be aware of it as well.

Hi everyone, Madelinde here again. Some time ago I was working on a reporting issue for App-V and because this issue keeps popping up every once in a while I thought I’d write a blog post about it so you would be aware of it as well.

NOTE Before applying the actions in this article, make sure to back up your SQL database at any time before making changes!

The problem

The issue that occurs here is that the App-V client fails to upload its reporting cache back to the server. In this reporting cache we store the application usage data so if you have this issue you will not be able to run proper reports on App-V’s application usage. Not all clients have to have this issue, so don’t be surprised if some clients do work, but if some clients have the issue your reports will be incomplete and not reliable.

The symptoms

If your App-V server is configured to request reporting data from your clients, but if you do not use App-V reports, you will likely not even know if you have this issue. A remedy would then be to turn off reporting data collection.

In general, the symptoms of this issue would be:

· Warnings in the logs (Application Event log and App-V Client log).

· Missing or incomplete data in the Management server reports.

· Increasing disk space usage on the App-V clients, because the XML files will never be cleared.

In your App-V client log (sftlog.txt), you will find an entry similar to the one below if you have the issue.

[07/08/2011 07:56:28:947 REPT WRN] {tid=744:usr=USERNAME}

The Application Virtualization Client report data cache could not be sent to Publishing Server URL 'rtsp://APPVSERVER:554/' (rc = 1690640A-200001F4).

A corresponding warning event with ID 3144 will be logged in the Application Event log, but you should make sure that you check the details of this event.

The Event ID 3144 is a general ID for all reporting warnings and it is possible that you see another actual return code (rc) in the details. If you have Event 3144s and if your description matches RC 0A-200001F4 together with the symptoms listed earlier, you are very likely to experience the issue we are talking about here.

An important thing we should keep in mind for this issue is that the complete resolution consists of two parts. After we resolve the issue, reporting data will be uploaded again from that moment on but the reporting cache from before that time will not be uploaded automatically. This is where it gets interesting, because if you need the history data as well, you need to import all the history data manually into the SQL database which can be a somewhat complex procedure.

Background information on App-V reporting

Since the release of App-V 4.5, we improved the way the reporting works in general. We use XML files on the App-V client to store the application usage information between DC refreshes and these files will be uploaded to the server when a DC refresh happens. Because of the changes we made compared to SoftGrid 4.1, clients with App-V 4.5 or newer will not be able to upload their reporting to a SoftGrid 4.1 VAS. When this is the case, an entry similar to the below will be logged in the App-V Client log (sftlog.txt):

[08/24/2010 14:55:18:179 MIME VRB] {tid=BA4:usr=Administrator}

DC server does not want to receive v4.5+ reporting

In addition to this, when a 4.5+ client reports against a SoftGrid 4.1 VAS, you will notice that the below registry value is set to 0 and when it is reporting against a 4.5 VAS, it is set to 1.

On x86:

HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\SoftGrid\4.5\Client\DC Servers\Reporting

On x64:

HKEY_LOCAL_MACHINE\SOFTWARE\Wow6432Node\Microsoft\SoftGrid\4.5\Client\DC Servers\Reporting

If the reporting key mentioned above is set to 1, you will have the following registry key as well. This key holds the information on the latest reporting cache XML file.

On x86:

HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\SoftGrid\4.5\Client\Reporting\LastCacheFile

On x64:

HKEY_LOCAL_MACHINE\SOFTWARE\Wow6432Node\Microsoft\SoftGrid\4.5\Client\Reporting\LastCacheFile

This XML file is a physical file on the App-V client. It contains all the application usage information amongst some additional data in XML format. When a DC refresh occurs, we upload this to the server, the XML gets parsed and it will be inserted into the SQL database after which the local file will be flushed. This way, we can use the data in our reports.

NOTE The default location for the XML file is the Global Data Directory, but you have to select “Show hidden files and folders” in Windows folder options before you can actually see it.

Below is an example of how this XML looks like when we have launched applications and before it is uploaded. When we would now do a refresh, the data will be uploaded.

After a DC refresh, the XML file is empty until applications are launched again. An example of this is below as well.

When we use NTA logging to verify this, we can see that when we do a DC Refresh we will get a request for the reporting to be uploaded as well.

[10/31/2011 14:54:12.344, 0x03144] SWRTSPRequest::CreateRequest: Method(SET_PARAMETER), Url(rtsp://madelw-vas:554/), Header(Content-Type: text/xml

AppV-Op: Report

)

In a verbose NTA log you can verify what goes over the wire. When we upload the reporting data, the local XML file will be parsed and it will be sent to the server. In the frame that contains the information on this, you will see a section called “CLIENT_DATA”, and this section contains the information from the XML file.

When we strip an NTA log, in a good situation, the part per application would look like this:

<PKG_DATA.Name="{2920C30C-FB29-4E28-842D-34934585C7E0}" .Guid="{2920C30C-FB29-4E28-842D-34934585C7E0}".Ver="0".VerGuid="{00000000-0000-0000-0000-000000000000}".Source="RTSP://%SFT_SOFTGRIDSERVER%:554/APPNAME.sft".PctCached="0"/>"

In a situation where we experience the 0A-200001F4 issue, it would look like the below:

<PKG_DATA.Name="" .Guid="{2920C30C-FB29-4E28-842D-34934585C7E0}".Ver="0".VerGuid="{00000000-0000-0000-0000-000000000000}".Source="RTSP://%SFT_SOFTGRIDSERVER%:554/APPNAME.sft".PctCached="0"/>"

As you can see, the PKG_DATA.Name has no value. And this is exactly the issue!

The resolution

With the above information kept in mind, the reason for the issue is the fact that the PKG_DATA.Name does not have a value. And this needs to be imported into a column in SQL that is by default set not to accept NULL values.

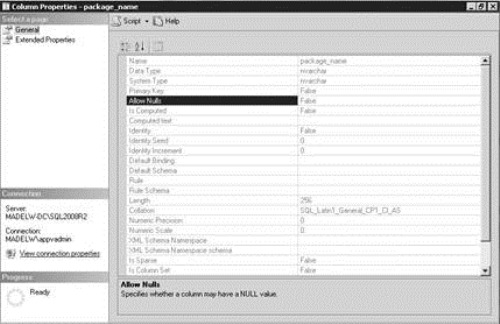

You can verify this in SQL Server Management Studio, by opening the column properties for the package_name column in the dbo.REPORTING_PACKAGE_INFORMATION table.

Allow Nulls is set to False here, as shown in the example below:

When you execute the following SQL statement in a new query window, you will notice that the column will be able to accept NULL values from now on.

You have to make sure that the right database is selected when you execute the SQL statement. Otherwise, it will fail!

alter table REPORTING_PACKAGE_INFORMATION

alter column package_name nvarchar(256) null

When this SQL statement has been executed successfully, the issue will be resolved and the XML reporting cache will be uploaded to the App-V VAS again.

How to import the history data?

Now, the issue is resolved and all new App-V reporting data will be uploaded from now on. But the history data from before that time will remain orphaned on the App-V clients. It is possible to gather and import this “old” data as well, but this is a bit of a tricky, complex procedure.

We will use a SQL Stored Procedure to bulk import the data from the XML files into the SQL database. Below is a text version of the Stored Procedure.

NOTE: This stored procedure has been tested on SQL 2008. On other versions, it might need modification. Please test carefully!

You have to save this to a file called “proc_ImportReportingCacheFilesv3.sql”

SET NOCOUNT ON if exists( select 1 from sysobjects where id = object_id('proc_ImportReportingCacheFilev3') and OBJECTPROPERTY(id, N'IsProcedure') = 1 ) begin drop procedure proc_ImportReportingCacheFilev3 end go create procedure proc_ImportReportingCacheFilev3 ( @client_host_name nvarchar(256), @cache_file_name nvarchar(256) ) as if @client_host_name is null begin raiserror (N'Client host name cannot be NULL', 16, 1) return end if @cache_file_name is null begin raiserror (N'Reporting cache file name cannot be NULL', 16, 1) return end -- First, ensure that there is a row in the table that tracks basic -- metadata for all reporting clients. If there isn't one, we will -- synthesize a placeholder row with just enough information to allow -- the app usage data to import correctly if not exists ( select 1 from REPORTING_CLIENT_INFORMATION where host_name = @client_host_name ) begin insert into REPORTING_CLIENT_INFORMATION ( host_name, version, cache_size, cache_used ) values ( @client_host_name, N'4.6.0.1523', 1, 0 ) end declare @hostid uniqueidentifier select @hostid = host_id from REPORTING_CLIENT_INFORMATION where host_name = @client_host_name print N'Mapped host_id for client ' + @client_host_name + N' as ' + rtrim(cast(@hostid as nvarchar(50))) -- Load the reporting cache document declare @doc xml declare @sql nvarchar(1024) set @sql = N'select @doc_out = ( select * from openrowset ( bulk ''' + @cache_file_name + N''', single_blob ) as data )' execute sp_executesql @sql, N'@doc_out xml output', @doc_out = @doc output if @@ERROR = 0 begin if NOT exists( select 1 from sysobjects where id = object_id('imported_records') ) begin create table imported_records ( row_id int not null identity, start_time datetime not null, end_time datetime null, app_name nvarchar(64) not null, app_version nvarchar(16) not null, username nvarchar(256) not null, server_name nvarchar(256) not null ) Print 'Table Created for 1st Iteration: imported_records' end Print 'Table : imported_records Exists' insert into imported_records ( start_time, end_time, app_name, app_version, username, server_name ) select cache.start_time, case when cache.end_time = '' then null else cache.end_time end, cache.app_name, cache.app_version, cache.username, cache.server_name from ( select parsed.content.query('data(@Launched)').value('.', 'datetime') as start_time, parsed.content.query('data(@Shutdown)').value('.', 'datetime') as end_time, parsed.content.query('data(@Name)').value('.', 'nvarchar(64)') as app_name, parsed.content.query('data(@Ver)').value('.', 'nvarchar(16)') as app_version, parsed.content.query('data(@User)').value('.', 'nvarchar(256)') as username, parsed.content.query('data(@Server)').value('.', 'nvarchar(256)') as server_name from @doc.nodes('/REPORT_DATA_CACHE/APP_RECORDS/APP_RECORD') as parsed(content) ) as cache print N'Loaded ' + rtrim(cast(@@rowcount as nvarchar(30))) + N' records from file ' + @cache_file_name -- Prune any records that look like they have already been loaded delete from imported_records where exists ( select 1 from APPLICATION_USAGE where imported_records.start_time = APPLICATION_USAGE.start_time and imported_records.app_name = APPLICATION_USAGE.app_name and imported_records.app_version = APPLICATION_USAGE.app_version and imported_records.username = APPLICATION_USAGE.username and APPLICATION_USAGE.host_id = @hostid ) print N'Removed ' + rtrim(cast(@@rowcount as nvarchar(30))) + N' records that look to have been already uploaded.' -- Prune duplicate records in the import list. Since they're duplicates, it -- doesn't really matter which one we keep, so keep the one with the smallest -- row_id delete from imported_records where exists ( select 1 from imported_records as imported_records_inner where imported_records_inner.start_time = imported_records.start_time and imported_records_inner.app_name = imported_records.app_name and imported_records_inner.app_version = imported_records.app_version and imported_records_inner.username = imported_records.username group by start_time, app_name, app_version, username having count(0) > 1 and min(imported_records_inner.row_id) != imported_records.row_id ) print N'Removed ' + rtrim(cast(@@rowcount as nvarchar(30))) + N' records that are duplicates within the file itself.' -- Prune records in the import list that indicate that an app launch ended before -- it started. delete from imported_records where end_time is not null and start_time > end_time print N'Removed ' + rtrim(cast(@@rowcount as nvarchar(30))) + N' records with an incongruous end_time.' -- Now the new records can be fed to the app usage table insert into APPLICATION_USAGE ( start_time, end_time, shutdown_state, app_name, app_version, app_id, username, server_name, host_id ) select imported_records.start_time, imported_records.end_time, case when imported_records.end_time is null or imported_records.end_time = '' then 0 else 1 end, imported_records.app_name, imported_records.app_version, APPLICATIONS.app_id, imported_records.username, imported_records.server_name, @hostid from imported_records, APPLICATIONS where APPLICATIONS.name = imported_records.app_name and APPLICATIONS.version = imported_records.app_version print N'Added the remaining ' + rtrim(cast(@@rowcount as nvarchar(30))) + N' records with matching applications to the APPLICATION_USAGE table.' print N'Cleaning up... : imported_records' truncate table imported_records print N'Table : imported_records truncated' end go |

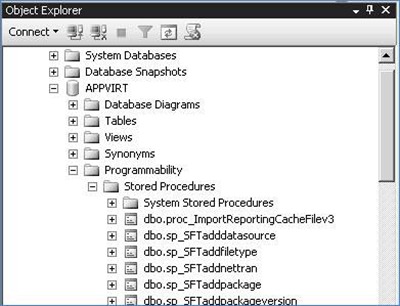

In order to add this Stored Procedure to SQL you have to do the following:

· Open SQL Server Management Studio.

· Select your App-V database.

· Open a new query window and copy the Stored Procedure in there.

· Execute it.

· You will now see that it is added:

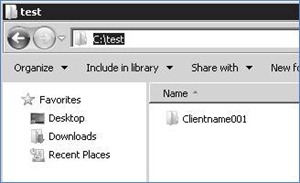

If we have a lot of orphaned XML files spread across our clients, we need to gather them to one location. When all XML files are gathered in one folder, for example on your SQL server, you can use the following command to generate TSQL output.

With this TSQL output, you can start the bulk import automatically, and all XML files will be inserted in the database.

for /d %d in (C:\location where the orphaned XML files are gathered\*) do @for /f %f in ('dir /b /a:h %d\*.xml') do @echo exec proc_ImportReportingCacheFilev3 N'%~nd.fulldomainname', N'%~fd\%~f' >>c:\output.sql

When you have gathered the files like the below example, make sure that you do not include the subfolder name, in this case “Clientname001” in your command.

The /d option for the FOR loop will make sure it goes through all subfolders. So if you have created a folder “C:\Test”, containing subfolders for each client you gathered the orphaned files from, you only have to specify “C:\Test” in the command. An example command is outlined below.

Example:

for /d %d in (C:\test\*) do @for /f %f in ('dir /b /a:h %d\*.xml') do @echo exec proc_ImportReportingCacheFilev3 N'%~nd.domain.com', N'%~fd\%~f' >>c:\output.sql

NOTEIt can take some minutes before this will finish, depending on the amount of files and folders to go through.

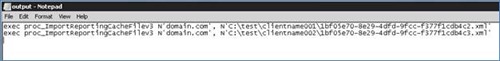

When the command is working properly, we will have a new output file created. This file will look like the below, and it will contain one line per XML file.

NOTE We should verify that the amount of lines in this file corresponds to the number of XML files before executing the TSQL.

Now we can copy this output to SQL Server Management Studio and when we execute it, all XML files will be imported to the SQL database.

We should keep in mind here that when we are talking about 100s or 1000s of files, this can be quite intensive. Therefore we should always do this outside office hours.

In addition to this, we can also consider to load a few files at a time to catch potential issues early.

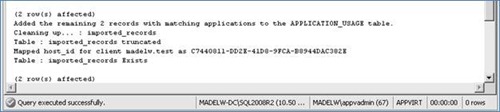

When we execute the TSQL, we will receive a message that the query was executed successfully, as shown in the example below. Now we can continue to verify that the data has been imported properly, because if we now run an Application Usage report from our App-V Management Server, we should get the expected results

As soon as the results are confirmed, we have to do one final step, which is to remove the temporary table that SQL used to import the data.

Therefore, we have to execute the following command:

drop table imported_records

Now everything is working after all this work, I would also like to say to make a new backup of the database, so that it does not have to be repeated again.

Hopefully this will solve all the “0A-200001F4” errors out there!

Madelinde Walraven | Senior App-V Support Engineer

App-V Team blog: https://blogs.technet.com/appv/

AVIcode Team blog: https://blogs.technet.com/b/avicode

ConfigMgr Support Team blog: https://blogs.technet.com/configurationmgr/

DPM Team blog: https://blogs.technet.com/dpm/

MED-V Team blog: https://blogs.technet.com/medv/

OOB Support Team blog: https://blogs.technet.com/oob/

Opalis Team blog: https://blogs.technet.com/opalis

Orchestrator Support Team blog: https://blogs.technet.com/b/orchestrator/

OpsMgr Support Team blog: https://blogs.technet.com/operationsmgr/

SCMDM Support Team blog: https://blogs.technet.com/mdm/

SCVMM Team blog: https://blogs.technet.com/scvmm

Server App-V Team blog: https://blogs.technet.com/b/serverappv

Service Manager Team blog: https://blogs.technet.com/b/servicemanager

System Center Essentials Team blog: https://blogs.technet.com/b/systemcenteressentials

WSUS Support Team blog: https://blogs.technet.com/sus/