Effective cost saving in Azure without moving to PaaS

By Chris Taylor, DevOps Engineer at DevOpsGuys

Recently we were asked if we could help a company reduce the costs of their infrastructure with Microsoft Azure. They wanted to move rapidly, so working on a plan to re-architect their system to make use of the Platform-as-a-Service (PaaS) features that Azure provides wasn't a viable option - we had to find another way.

Naturally, our first recommendation would have been to resize the servers in all non-Production environments to bring the compute charges down. However, this wasn't an option either, as they used these environments to gauge the system's performance before rolling changes out to Production.

Using the Azure Automation feature to turn VMs on and off on a schedule

We noticed that their non-Production environments (development, test, UAT and Pre-Production) were not in use all the time, yet they were powered on in Azure around the clock, so the company was continually being charged for compute. Most of the people using these environments were based in the UK, so the core hours of operation were between 7 am and 10 pm GMT (which accommodated all the nightly processes).

Using this knowledge, and our experience with PowerShell and Azure Automation, we wrote a custom runbook that turns off all the servers in an environment at a given time, and a second runbook that does the opposite: turns them back on again.

We wanted to follow best practices when writing the code, so hard-coded values were out and parameters and tags were in: a parameter was used to pass in the resource group, and a tag (server24x7 set to Yes) marked any servers that had to stay on. Tags were especially useful here, as in some resource groups we couldn't power down all the servers.

Below is some of the PowerShell used to turn the VMs off:

```powershell
workflow Stop-ResGroup-VMs {
    param (
        [Parameter(Mandatory=$true)][string]$ResourceGroupName
    )

    # The name of the Automation Credential Asset this runbook uses to authenticate to Azure.
    $CredentialAssetName = 'CredentialName'
    $Cred = Get-AutomationPSCredential -Name $CredentialAssetName

    # Sign in to Azure Resource Manager and select the subscription for the AzureRM cmdlets below.
    # (Select-AzureSubscription only changes the classic Service Management context.)
    $AccountRM = Add-AzureRmAccount -Credential $Cred
    Select-AzureRmSubscription -SubscriptionName "MySubscription"

    # Get all the VMs in the target resource group
    $VMs = Get-AzureRmVM -ResourceGroupName $ResourceGroupName

    if (!$VMs) {
        Write-Output "No VMs were found in resource group $ResourceGroupName."
    } else {
        # Stop the VMs in parallel, skipping any tagged server24x7 = Yes
        Foreach -parallel ($VM in $VMs) {
            if ($VM.Tags['server24x7'] -ne 'Yes') {
                Stop-AzureRmVM -ResourceGroupName $ResourceGroupName -Name $VM.Name -Force -ErrorAction SilentlyContinue
            }
        }
    }
}
```
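Because the tag check is plain PowerShell logic, it can be tried out locally before the runbook is published. The sketch below assumes a hypothetical helper, `Get-VMsToStop`, that applies the same rule as the runbook (stop everything not tagged server24x7 = Yes) to a list of objects. The `[pscustomobject]` VMs are stand-ins that mimic only the Name and Tags properties of `Get-AzureRmVM` output, and the helper additionally guards against a VM with no tags at all, which the runbook does not check explicitly.

```powershell
# Hypothetical helper: returns the VMs that should be stopped, i.e. every VM
# that is NOT tagged server24x7 = 'Yes'. VMs with no tags at all are stopped.
function Get-VMsToStop {
    param (
        [Parameter(Mandatory = $true)][object[]]$VMs
    )

    foreach ($vm in $VMs) {
        # Guard against a null Tags property before indexing into it
        $keepRunning = $vm.Tags -and $vm.Tags['server24x7'] -eq 'Yes'
        if (-not $keepRunning) {
            $vm
        }
    }
}

# Stand-in objects with the same Name/Tags shape as Get-AzureRmVM output (assumption)
$sampleVMs = @(
    [pscustomobject]@{ Name = 'dev-web01'; Tags = @{ environment = 'dev' } }
    [pscustomobject]@{ Name = 'dev-sql01'; Tags = @{ server24x7 = 'Yes' } }
    [pscustomobject]@{ Name = 'dev-app01'; Tags = $null }
)

(Get-VMsToStop -VMs $sampleVMs).Name   # -> dev-web01, dev-app01
```

Keeping the rule in one small function like this makes it easy to check which servers a schedule would affect before pointing the runbook at a real resource group.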

The ability to store secrets as encrypted assets in the Automation account was useful too, as we needed somewhere safe to keep the credentials used to connect to the subscription (the runbook retrieves them with Get-AutomationPSCredential).

Once authored and pushed to Azure, the runbooks had schedules set up as follows:

- For development and test, the servers would turn off at 10 pm and on again at 7 am on weekdays, leaving them off all weekend.

- For UAT, the servers would turn off at midnight as the weekend began and back on again at midnight on Monday.

- For Pre-Production, the servers would turn off at 10 pm every day (and stay off at weekends), but no schedule started them up again. Instead, we made starting the environment an on-demand task in TeamCity (their CI system). Because the 10 pm shutdown still ran every night, Pre-Production was never left running overnight when it wasn't needed.

- For the CI agents, we followed the same pattern as the development and test servers, as we didn't want the CI server trying to deploy changes to servers that were switched off.
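To make the schedules above concrete, here is a sketch that encodes them as a hypothetical `Test-ScheduledOn` function ("should this environment be running at this time?") plus a hypothetical `Get-WeeklyOnHours` helper that counts scheduled running hours in one week. It assumes UK local times and hourly granularity, and treats Pre-Production as never started by a schedule. In Azure Automation itself, the schedules are assets on the Automation account linked to the start and stop runbooks; nothing computes them like this.

```powershell
# Hypothetical helper encoding the schedules described above (UK local time assumed).
function Test-ScheduledOn {
    param (
        [Parameter(Mandatory = $true)]
        [ValidateSet('Dev', 'Test', 'CI', 'UAT', 'PreProd')]
        [string]$Environment,

        [Parameter(Mandatory = $true)][datetime]$At
    )

    $isWeekend = $At.DayOfWeek -in @([DayOfWeek]::Saturday, [DayOfWeek]::Sunday)

    switch ($Environment) {
        # Development, test and CI agents: on from 07:00 until 22:00, weekdays only
        { $_ -in 'Dev', 'Test', 'CI' } {
            return ((-not $isWeekend) -and ($At.Hour -ge 7) -and ($At.Hour -lt 22))
        }
        # UAT: off from midnight at the start of Saturday until midnight on Monday
        'UAT' { return (-not $isWeekend) }
        # Pre-Production: only ever stopped by a schedule; started on demand instead
        'PreProd' { return $false }
    }
}

# Count the scheduled running hours in one week, sampling on the hour from a Monday
function Get-WeeklyOnHours {
    param ([Parameter(Mandatory = $true)][string]$Environment)

    $monday = [datetime]'2017-01-02'   # 2 January 2017 was a Monday
    $hours = 0
    for ($h = 0; $h -lt 168; $h++) {
        if (Test-ScheduledOn -Environment $Environment -At ($monday.AddHours($h))) {
            $hours++
        }
    }
    $hours
}

Get-WeeklyOnHours -Environment Test   # 75 of 168 hours
Get-WeeklyOnHours -Environment UAT    # 120 of 168 hours
```

On these assumptions, development and test servers run for 75 of the 168 hours in a week (about 45%), which is where most of the compute saving comes from.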

Alerts were set up so that if these runbooks failed, someone was notified and could act on it. If they had failed unnoticed for several days, the cost saving wouldn't have been very good!
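The runbook above stops VMs with `-ErrorAction SilentlyContinue`, so errors from `Stop-AzureRmVM` may be suppressed rather than surfacing as a failed job that an alert could pick up. One way to keep going past individual failures while still failing the job overall is sketched below: a hypothetical helper, `Invoke-ForEachVM`, that collects per-VM errors and throws a single terminating error at the end. It is plain PowerShell rather than workflow syntax, so it assumes a PowerShell (non-workflow) runbook or an `InlineScript` block.

```powershell
# Hypothetical helper: run an action against each VM name, carry on if one
# fails, then throw one terminating error listing every failure so the job
# ends in a failed state instead of completing silently.
function Invoke-ForEachVM {
    param (
        [Parameter(Mandatory = $true)][string[]]$VMNames,
        [Parameter(Mandatory = $true)][scriptblock]$Action
    )

    $failures = @()
    foreach ($name in $VMNames) {
        try {
            # The action should use -ErrorAction Stop so cmdlet errors become catchable exceptions
            & $Action $name
        }
        catch {
            $failures += "$name : $($_.Exception.Message)"
        }
    }

    if ($failures.Count -gt 0) {
        throw "Failed to process $($failures.Count) VM(s): $($failures -join '; ')"
    }
}
```

In a runbook, the action would be a script block that takes the VM name and calls `Stop-AzureRmVM` with `-ErrorAction Stop`, so that each failure becomes an exception the helper can record.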

This approach proved very effective. As an example, according to the Azure pricing calculator (at the time of writing), a standard Windows D12 VM without additional disks left on all month (744 hours) would cost around £322.17. Using a schedule like the ones above to turn it off and on again would bring the cost down to £140.73, well over a 50% saving. Across hundreds of servers, that mounts up to a hefty saving.
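Working backwards from the two quoted prices shows where the saving comes from. The 325-hour figure below is inferred from the prices rather than quoted by the calculator, and it is consistent with the development and test schedule of 15 hours a day on an average of 260/12 (about 21.7) weekdays a month:

$$
r = \frac{\text{£}322.17}{744\ \text{h}} \approx \text{£}0.433\ \text{per hour}
$$

$$
\frac{\text{£}140.73}{r} \approx 325\ \text{h} = 15\ \text{h/day} \times \frac{260\ \text{weekdays}}{12\ \text{months}}
$$

$$
\text{saving} = 1 - \frac{140.73}{322.17} \approx 56.3\%
$$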

The company was very happy with this, as they could see costs coming down straight away when they viewed the billing details in the portal.

Using the Azure Advisor to guide us on servers that might be underutilised

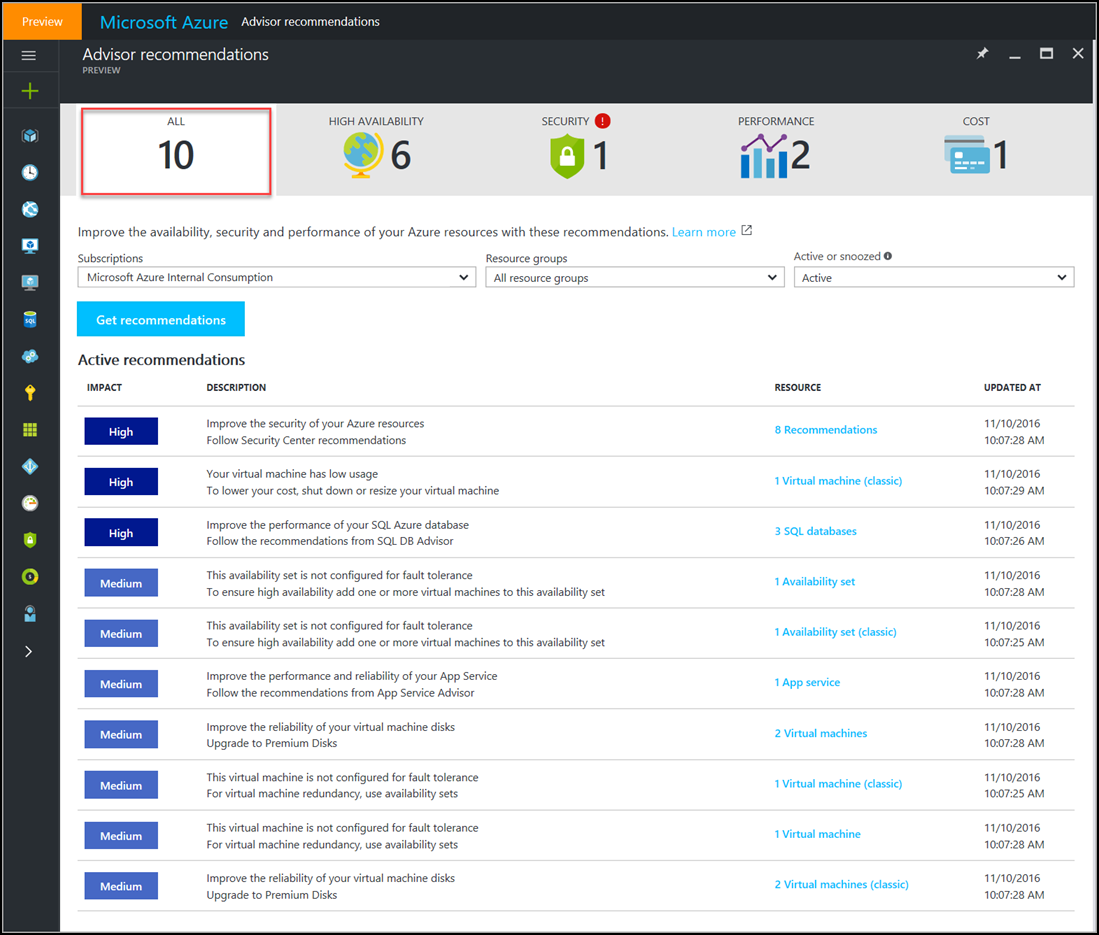

A useful tool that has been recently released in Azure is the Advisor blade.

This is designed to look over the resources you have in a subscription, and give you recommendations around areas such as under-utilisation and cost, availability, performance and security. We decided this would be useful to run against the subscription, so we logged into the portal and switched the blade on. This registers the Advisor with the subscription, and kicks off the process straight away.

It took about 5 minutes in total for it to work its magic and then the results were ready.

One of the best features of the Advisor tool is that it continually works in the background like a virtual consultant looking at your resources. Recommendations are updated every hour so you can go in and search through the content to see what it says. If you choose not to act on a recommendation, you can snooze it so it doesn’t appear under the active list. The blade is extremely easy to use and navigate to.

The first time it ran, it identified several VMs that were either underutilised or not used at all, and recommended resizing them, turning them off or removing them completely. Each recommendation also shows the potential cost saving from acting on it, which allowed us to very quickly put together a rough total of what could be saved.

After a quick check with the company's IT director to discuss the highlighted servers, we found they were in fact not being used; they had been provisioned for a project that hadn't started yet. Having the cost on screen showed them instantly what could be saved, and the decision was made to turn the machines off in the meantime.

The Advisor is a great tool: you turn it on, and it reviews the resources in a subscription and generates feedback without anyone having to click through numerous screens in the portal or write any code. It's certainly a must if you have lots of departments who can create resources in your subscription but might not be particularly good at cleaning up afterwards!

Conclusions

There are many other ways to save costs in Azure, but if you are limited in what you can change, Azure Automation and the Advisor can help.

The Advisor is a tool we'd especially recommend for gaining insight into how your resources are being used, not just for cost. We trialled a few third-party tools, but they either only worked against an Enterprise subscription (which the company didn't have) or simply didn't work. The Azure Advisor was certainly the best of the bunch and the least painful to use.

---

Chris Taylor is a DevOps Engineer at DevOpsGuys. The DevOpsGuys are a DevOps consultancy who are leading the charge for WinOps. CEO and CTO James Smith and Steve Thair co-founded WinOps London with Alex Dover and Sam Gorvin from Prism Digital.

For more on Azure and DevOps methodologies, check out the WinOps meetup. Every month, like-minded people come together to listen to talks and discuss the use of DevOps methodologies on the Windows stack. If you or your company would be interested in hosting a meetup for between 100 and 200 people, get in touch with the organisers.

For more information, including sponsorship and speaking opportunities, contact sponsorship@winops.org. Follow the meetup on Twitter at @WinOPsLDN and on meetup.com/winops.