Intelligence Patterns of AI Supercomputer @ Future Decoded

What are the underlying patterns that make up Cloud Intelligence? Here's a quick sneak preview of the upcoming Future Decoded keynote with Joseph Sirosh.

By Joseph Sirosh, Corp VP for the Data Group at Microsoft

I am incredibly excited to keynote on the Technical Day of Future Decoded 2016, which kicks off this week in London. To pique your curiosity, I want to share some of the AI themes, patterns, and demos that I will present during my keynote. The key theme is the “Intelligent Cloud”.

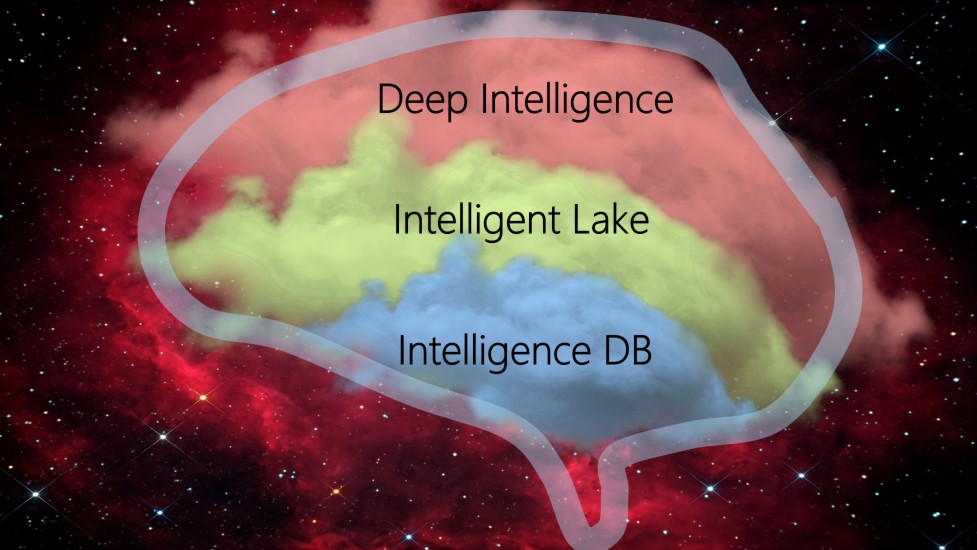

The three patterns I will present in my keynote resemble the anatomy of a human brain as shown below:

The patterns are really about ways to bring data and intelligence together in software services in the cloud to build intelligent applications, and here’s what they mean:

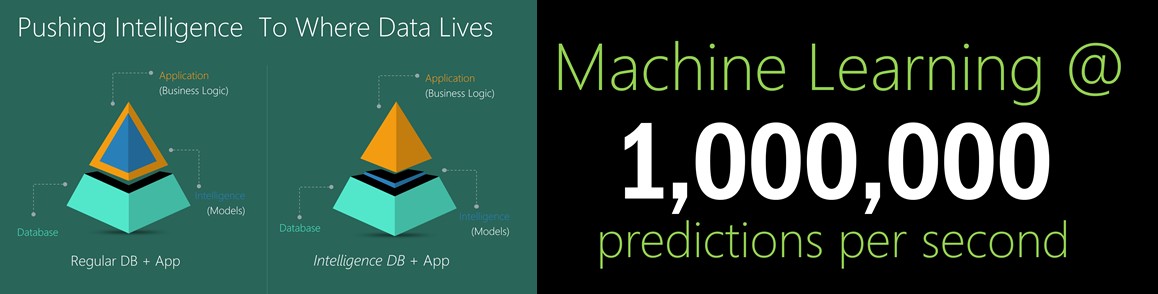

- Intelligence DB: This is the pattern where intelligence lives with the data in the database. Imagine a core transactional enterprise application built on a database like SQL Server. What if you could embed intelligence (that is, advanced analytics algorithms and intelligent data transformations) within the database itself to make every transaction intelligent in real time? This is now possible for the first time with R and Machine Learning built into SQL Server 2016.

At the event, I will present a case study on “Machine Learning @ 1,000,000 predictions per second”, showcasing real-time predictive fraud detection and scoring in SQL Server. By combining SQL Server's in-memory OLTP technology and in-memory columnstore with R and Machine Learning, applications can achieve remarkable performance in production, along with the throughput, parallelism, security, reliability, compliance certifications, and manageability of an industrial-strength database engine. To put it simply, intelligence (models) becomes just like data, so models can be managed in the database using all of the sophisticated capabilities of the database engine. The performance of models can be reported, access to them can be controlled, and, because models live in a database, they can be shared by multiple applications. No more intelligence “locked up” in an application.

For details, see the blog post about SQL Server-as-a-Scoring Engine @ 1,000,000 transactions per second. I also highly encourage all of you to try the Machine Learning Templates for SQL Server 2016 R Services, which include the Online Fraud Detection, Customer Churn Prediction, and Predictive Maintenance templates.
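
To make the “models are just like data” idea concrete, here is a minimal sketch in Python that uses the standard-library `sqlite3` module as a stand-in for SQL Server. It is not SQL Server R Services and not how the fraud detection case study is implemented; the `models` and `transactions` tables, the `fraud_score` function, and the model weights are all hypothetical. The model's parameters are stored as an ordinary row, and a scoring function registered with the database lets a plain SQL query score and filter transactions right where the data lives.

```python
import json
import math
import sqlite3

# Hypothetical logistic-regression weights, chosen for illustration only (not trained).
FRAUD_MODEL_V1 = {"bias": -4.0, "w_amount": 0.002, "w_night": 1.5}


def build_db() -> sqlite3.Connection:
    """Create an in-memory database holding both data and a model."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE models (name TEXT PRIMARY KEY, params TEXT)")
    conn.execute(
        "CREATE TABLE transactions (id INTEGER PRIMARY KEY, amount REAL, hour INTEGER)"
    )
    # The model is stored as an ordinary row, just like any other data.
    conn.execute(
        "INSERT INTO models VALUES (?, ?)", ("fraud_v1", json.dumps(FRAUD_MODEL_V1))
    )
    conn.executemany(
        "INSERT INTO transactions (amount, hour) VALUES (?, ?)",
        [(25.0, 14), (1800.0, 3), (600.0, 23)],
    )
    return conn


def register_scorer(conn: sqlite3.Connection, model_name: str) -> None:
    """Load a stored model and expose it to SQL as fraud_score(amount, hour)."""
    row = conn.execute(
        "SELECT params FROM models WHERE name = ?", (model_name,)
    ).fetchone()
    if row is None:
        raise KeyError(f"no model named {model_name!r}")
    params = json.loads(row[0])

    def score(amount: float, hour: int) -> float:
        night = 1.0 if hour < 6 or hour >= 22 else 0.0
        z = params["bias"] + params["w_amount"] * amount + params["w_night"] * night
        return 1.0 / (1.0 + math.exp(-z))  # logistic function -> probability

    conn.create_function("fraud_score", 2, score)


conn = build_db()
register_scorer(conn, "fraud_v1")

# Scoring happens inside the query, next to the data.
scores = conn.execute(
    "SELECT id, fraud_score(amount, hour) FROM transactions ORDER BY id"
).fetchall()
flagged = [
    r[0]
    for r in conn.execute(
        "SELECT id FROM transactions WHERE fraud_score(amount, hour) > 0.5 ORDER BY id"
    )
]
```

In this sketch, a second application could load `fraud_v1` from the same `models` table and compute identical scores, and replacing the model is an `UPDATE` on a row rather than an application redeploy.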

- Intelligent Lake: I will describe an “Intelligent Lake” pattern, which allows you to do bulk intelligence processing on petabytes of data: for instance, speech recognition, machine translation, and image recognition in bulk, as well as image captioning, text processing and relevance, language understanding, and language modeling. There are several ways to implement an extensible Intelligent Lake solution in Azure: Azure Data Lake with Cognitive Services, Spark on Azure with Microsoft R Services, and HDInsight with Microsoft R Services. I will demonstrate several Cognitive Services running in the Intelligent Kiosk and in Azure Data Lake at scale: detecting emotions, identifying age and gender from images, OCR processing in bulk, sentiment analysis and key phrase extraction from text, and image tagging, including invoking R and Python at scale. For example, we can do automated image tagging on 10 million images (from ImageNet) in about 10 minutes, that is, at a rate of about a million images per minute. This demo will illustrate the powerful extensibility of Azure Data Lake. With R Server on Spark in Azure or R Server on HDInsight, users can now run R functions over Spark nodes to train models on data 1000 times larger than before, at 125 times the speed of open source R with CRAN algorithms, thanks to R Server's parallelized algorithms combined with Spark's in-memory architecture.
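
The core idea of bulk processing, applying one intelligence function to a very large collection of items in parallel, can be sketched locally in Python. This is only an illustration of the fan-out pattern under stated assumptions, not how Azure Data Lake or the Cognitive Services extensions work: `tag_image` is a hypothetical placeholder for a real tagging call, and the worker count is an arbitrary choice.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Iterable, List


def tag_image(path: str) -> List[str]:
    """Hypothetical stand-in for a call to an image-tagging service.

    A real pipeline would send the image to the service and parse its reply;
    here we fake a result from the file name so the sketch runs offline.
    """
    return ["cat"] if "cat" in path else ["unknown"]


def tag_in_bulk(
    paths: Iterable[str],
    tagger: Callable[[str], List[str]],
    workers: int = 8,
) -> Dict[str, List[str]]:
    """Fan a tagging function out over many images in parallel."""
    paths = list(paths)
    # Threads suit I/O-bound work such as waiting on remote service calls.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map yields results in input order, so they line up with paths.
        return dict(zip(paths, pool.map(tagger, paths)))


def images_per_minute(n_images: int, minutes: float) -> float:
    """Average throughput of a bulk run."""
    return n_images / minutes


results = tag_in_bulk(["img_cat_001.jpg", "img_dog_002.jpg"], tag_image)
# The figures quoted in the text: 10 million images in about 10 minutes.
rate = images_per_minute(10_000_000, 10)
```

Here `rate` works out to 1,000,000 images per minute, matching the throughput quoted for the ImageNet tagging demo.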

- Deep Intelligence: I will also discuss Deep Intelligence solutions in Azure that let users perform extremely sophisticated deep learning with ease using GPU VMs. These VMs combine powerful hardware (NVIDIA Tesla K80 or M60 GPUs) with cutting-edge, high-efficiency integration technologies such as Discrete Device Assignment, bringing a new level of deep learning capability to public clouds. We’ll also show a Data Science VM with the Microsoft Cognitive Toolkit. The demo will feature Connected Drones from one of our partners, eSmart Systems, inspecting electric power lines for faults.

eSmart Systems is a small, dynamic, young company from Norway that made an early decision to build all of their products entirely on the Microsoft Azure platform. Their mission is to bring big data analytics to utilities and smart cities, and Connected Drone is the next step in how they deliver value to their customers through Azure. As they put it, “Connected drone is a way of making Azure intelligence mobile.”

Today, to implement the Deep Intelligence pattern, you can use the Data Science VM, which comes pre-loaded with deep learning toolkits, or use the Batch Shipyard toolkit, which enables easy deployment of batch-style Dockerized workloads to Azure Batch compute pools. Both are well suited to deep learning, so give them a try.

We’re also planning a few “surprises” to show you the full power of a rich cloud platform enabled with intelligence.

See you at Future Decoded!

Future Decoded features high-profile speakers from Microsoft and thought leaders, and is a unique opportunity for developers and IT Pros to learn more about using artificial intelligence to solve real-world problems. I invite you to join us on Twitter (watch the #FutureDecoded hashtag).

I look forward to seeing you all at this amazing event!