DevOps Technology in a Windows World

By Elton Stoneman. Elton spoke at WinOps (24th-25th May) on DevOps Technology in a Windows World

The DevOps movement is quickly gaining ground in the IT industry because it delivers on its promise of helping us ship better software, more quickly. 'Better software' means products which are reliable and scalable, easy to manage, easy to change, and which give value to the business.

DevOps is based on cultural change, putting developers and ops closer together, sharing pain points and working as one delivery team to overcome them. There is a growing suite of tools which support the move to DevOps and establish the foundations for better delivery by making it easier to do things right, and harder to do things wrong.

Much of the supporting technology for DevOps emerged from Linux and the open source world, but the migration to Windows is happening fast. The technology providers recognise the huge market potential in the Microsoft world, where large enterprises, as much as new startups, are taking steps towards DevOps.

In this article I'll look at some of the major DevOps technologies which work well on the Windows platform, in the space that's becoming known as 'WinOps'.

Infrastructure as Code

Supporting DevOps means having a technology stack with a smooth, automated deployment pipeline. You should be able to deploy a part or the whole of your solution to a completely new environment using a single process, knowing that the end result will be a fully configured, tested and functional release.

The deployment process should do three things:

- Create all the components the environments need - including virtual networks, subnets and VMs

- Run all the setup tasks your VMs need - installing Windows features, configuring the OS and installing third-party libraries

- Deploy and configure your own application stack, so the solution is ready to work as soon as the deployment is done

To automate all these things, you need a platform that lets you set up your components through scripts - which is the practice of Infrastructure as Code. It's an important part of ensuring the quality of your pipeline, because scripts are all kept centrally in version control and every step of the setup is encapsulated in the scripts.

There are no manual tweaks or config changes, so there's a much smaller surface area for human error, or for 'secret knowledge' which stops deployments happening unless certain people are there to do the magic. If someone makes a manual change to an application host without updating the scripts, it will get wiped out at the next deployment - which encourages the correct practice, of putting everything in the script.

The approach may sound like an unattainable nirvana, but there are technologies in the WinOps space which mean you can do this now. Popular tools like Chef, Puppet and Ansible all work with Windows; we have PowerShell Desired State Configuration, and with Windows Server 2016 we'll be able to natively use one of the most capable and straightforward options - Docker.

There's a lot of commonality in the tools, so instead of doing a full comparison and covering the same ground several times, I'm going to focus on two opposites: PowerShell DSC and Docker.

PowerShell DSC

PowerShell Desired State Configuration is the native Windows option for scripting your deployments. It covers the setup and deployment steps for VMs, not the initial provisioning - although you can combine DSC with other PowerShell packages, like Azure PowerShell, which do the infrastructure provisioning in your platform.

DSC works with scripts which declaratively specify what you want the final state of the machine to be, rather than having you script out every step yourself. It's a modular system, with different types of components in different packages, which you can get from Microsoft and the community.

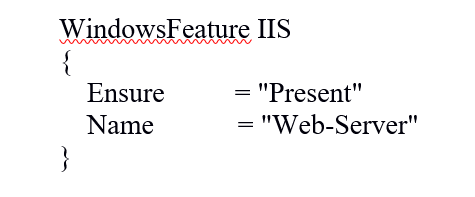

With PowerShell DSC you write a single script to define the state of a particular type of machine - so you may have a .NET Web Server script which installs IIS and the .NET framework on the VM. The code is straightforward and follows a set pattern - this snippet shows how to deploy IIS:
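A minimal sketch of that kind of script, assuming a configuration named `WebServer` applied to `localhost` (both names are placeholders, not taken from the original):

```powershell
# Hypothetical configuration name; the node name is also an assumption
Configuration WebServer
{
    # Built-in resources, including WindowsFeature, live in this module
    Import-DscResource -ModuleName PSDesiredStateConfiguration

    Node 'localhost'
    {
        # 'IIS' is only a friendly label for this resource instance
        WindowsFeature IIS
        {
            Ensure = 'Present'      # the feature must be installed
            Name   = 'Web-Server'   # Windows feature name for IIS
        }
    }
}
```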

This says to ensure that the Windows feature called 'Web-Server' is present on the machine, and the 'IIS' tag is just a friendly name so I know what the component is. There are modules available to script a full Windows Web server - including setting up IIS Websites and App Pools, configuring the metastore, and downloading your app code from a shared location.

DSC won't provision servers for you, but if you're running in the cloud (or on-premises in a private Azure Stack cloud), you can integrate it with Azure PowerShell, which you use to create the virtual networks and VMs. Microsoft have a DSC extension for VMs which lets you run a DSC script on an Azure VM from a remote machine - your local PC while you're building out the scripts, and the build server once the scripts are incorporated in the deployment pipeline.
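As a rough illustration of pushing a DSC script to an Azure VM from a remote machine, the sketch below uses the `Publish-AzVMDscConfiguration` and `Set-AzVMDscExtension` cmdlets from the current Az PowerShell module, which may differ from the tooling available when this was written. The resource group, storage account, VM name, script file `WebServer.ps1`, configuration name `WebServer` and extension version are all assumptions made for the example.

```powershell
# Assumes the Az module is installed and Connect-AzAccount has already been run.
# Every name below is a hypothetical placeholder.
$resourceGroup  = 'winops-demo-rg'
$storageAccount = 'winopsdemostorage'
$vmName         = 'web-01'

# Package the DSC script and upload it to blob storage as WebServer.ps1.zip
Publish-AzVMDscConfiguration -ConfigurationPath '.\WebServer.ps1' `
    -ResourceGroupName $resourceGroup `
    -StorageAccountName $storageAccount `
    -Force

# Ask the DSC extension on the VM to fetch the archive and apply the configuration.
# The extension version is an assumption; check which versions are current.
Set-AzVMDscExtension -ResourceGroupName $resourceGroup `
    -VMName $vmName `
    -ArchiveStorageAccountName $storageAccount `
    -ArchiveBlobName 'WebServer.ps1.zip' `
    -ConfigurationName 'WebServer' `
    -Version '2.76' `
    -AutoUpdate
```

The same two commands could run from a build server once the script is part of the pipeline.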

PowerShell DSC can be used for one-time configuration, or it can be used in a more complex setup which periodically checks machines are still set up correctly. If the current state of the VM has drifted from the desired state, then the script will automatically re-apply the missing parts (or remove any extra parts).
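The periodic check is controlled by the Local Configuration Manager (LCM) on each machine. A minimal sketch of switching it to auto-correct mode, assuming WMF 5 or later and a 30-minute check interval chosen only for illustration (the configuration name `LcmAutoCorrect` is hypothetical):

```powershell
# Meta-configuration for the Local Configuration Manager on the local machine
[DSCLocalConfigurationManager()]
Configuration LcmAutoCorrect
{
    Node 'localhost'
    {
        Settings
        {
            RefreshMode                    = 'Push'
            # Re-apply the configuration whenever drift is detected
            ConfigurationMode              = 'ApplyAndAutoCorrect'
            # How often to check for drift, in minutes (assumed value)
            ConfigurationModeFrequencyMins = 30
        }
    }
}

# Compile the meta-configuration to a .meta.mof file and apply it
LcmAutoCorrect -OutputPath .\LcmAutoCorrect
Set-DscLocalConfigurationManager -Path .\LcmAutoCorrect -Verbose
```

For one-time configuration you would leave the mode at `ApplyOnly` or `ApplyAndMonitor` instead.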

Desired State Configuration is a good fit if you have a complex setup process to run on your VMs, and you build VMs as long-lived components. Provisioning Azure VMs and running DSC scripts is not a fast procedure - it can take tens of minutes to go from zero to fully configured - but if your deployments are regularly scheduled that shouldn't be a problem.

Docker

At the other end of the scale is Docker. With Docker you keep your host server (physical or VM) as light as possible, just installing the base OS and the Docker tools, and then your applications run as Docker containers on the host. Containers make use of Linux kernel features (namespaces and control groups) that isolate processes running on the OS, so your containers all share the same kernel and you don't need a heavy and expensive hypervisor between your apps and the host's compute resources.

Docker wraps that up in a simple, DevOps-friendly packaging system. With Docker you script out the setup of a container using a text file called a Dockerfile, which has a custom but very easy to use language. Docker is the opposite of PowerShell DSC: rather than specifying the end state and letting the engine decide how to get there, you explicitly script out every step.

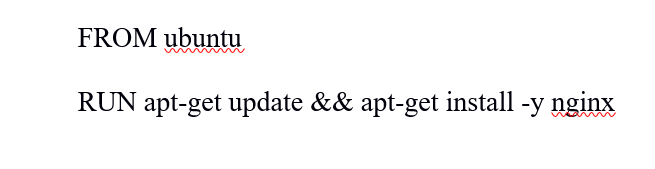

So the Dockerfile for your web server might start like this:
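A minimal sketch, assuming the untagged `ubuntu` base image and the distribution's own `nginx` package:

```dockerfile
# Base the image on the existing Ubuntu image
FROM ubuntu

# Refresh the local package index and install the Nginx web server
RUN apt-get update && apt-get install -y nginx
```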

That says your container is going to be based on the existing Ubuntu image, and when the image is built the first part of the process runs a script to update the local package repository and install the Nginx web server package. The Dockerfile would go on to download any dependencies, configure the software, copy your own application files in, and it would end with a statement telling Docker what app to run when the container starts.

You use the Docker tools to build an image from that Dockerfile, and the build process executes all the steps to create a binary container image. That image is complete and self-contained: it has a thin OS layer and all the other software you configure in your Dockerfile. You can start a container from the image on any compatible Docker host and it will run in exactly the same way, so the app you deploy in production is the exact same component you tested.
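In practice the build and run steps are one Docker command each. A sketch from a PowerShell prompt, assuming a Dockerfile in the current directory, a hypothetical image name `my-web` with tag `1.0`, and an app that listens on port 80 inside the container:

```powershell
# Build an image from the Dockerfile in the current directory
# ('my-web' and the '1.0' tag are hypothetical names)
docker build -t my-web:1.0 .

# Start a container from the image in the background,
# publishing container port 80 on host port 8080
docker run -d --name web -p 8080:80 my-web:1.0

# List running containers to confirm it started
docker ps
```

The same image tag can then be run unchanged on a test host and a production host.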

Docker images are much smaller and more lightweight than VMs - tens or hundreds of megabytes rather than tens of gigabytes - which means they're easy to move around and quick to start, typically in seconds rather than minutes. They don't require a fixed allocation of CPU or memory, so you can run hundreds or thousands of containers on the same host. They'll compete for resources, but assuming they have different usage patterns, they can all co-exist happily.

Windows Server 2016 will have native support for running Docker containers, and Windows Server Nano is a lightweight OS you can use inside your containers. Because containers are so fast to build and provision, they make your deployment pipeline very slick. Your process builds a new version of the image and deploys it by starting a container from the new image - and killing any containers from the old image.
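A deployment step along those lines might look like the sketch below. The image names and tags are hypothetical, and it assumes the container publishes a fixed host port, which is why the old containers are removed before the new one starts.

```powershell
# Hypothetical image names and tags for the old and new releases
$oldImage = 'my-web:1.0'
$newImage = 'my-web:1.1'

# Build the new version of the image from the updated Dockerfile
docker build -t $newImage .

# Find the IDs of running containers started from the old image
$oldContainers = docker ps -q --filter "ancestor=$oldImage"

# Remove the old containers first so the host port is free
if ($oldContainers) {
    docker rm -f $oldContainers
}

# Start a container from the new image
docker run -d -p 8080:80 $newImage
```

This leaves a short gap between stopping the old container and starting the new one; avoiding that gap would need something in front of the containers to switch traffic, which is outside the scope of this sketch.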

Docker is great for enabling you to move away from long-lived hosts, encouraging you to think of your apps as a whole unit - OS, dependencies, app configuration and the app itself - which is defined in code and version-controlled. Over the next year, as Docker gets integrated into Windows and .NET Core lets us build lean, lightweight apps, I'm confident that we'll see containers becoming the preferred model of compute, with heavy VMs reserved for complex or demanding components.

Summary

This is a great time to be in IT. The whole culture of DevOps is about addressing shortcomings that we know exist in the way we build software today and making sure we can deliver better software more quickly, with fewer headaches all round. The technologies behind DevOps are as exciting as the culture itself, and promise to radically change the way we build, deploy and design software.

Windows and the Microsoft stack are catching up fast with the open source Linux tools, and when Windows Server 2016 lands we'll have a rich set of tools to support Infrastructure as Code and Immutable Infrastructure. Here I've looked at two of those tools - PowerShell DSC which is great for automating the setup of complex components, and Docker which is great for building small, lean components.

Now is the time to start working with these tools, to get yourself familiar with what they can do, and how they can improve what we do. There are DevOps and WinOps meetups and conferences happening all over the world, so it's easy to join in and get started.

Resources

- Getting started with PowerShell Desired State Configuration - Microsoft Virtual Academy

- Building Linux based solutions in Azure - Microsoft Virtual Academy

- DevOps: An IT Pro guide - Microsoft Virtual Academy

Elton Stoneman is an MVP, Docker Captain and Pluralsight author. He has worked in IT since 2000, primarily as an Architect connecting systems using Microsoft technologies, although in recent years he has found a lot of value in going cross-platform with Linux.
