Bringing Predictability to Cloud Server Storage

In theory, the logic behind cloud computing seems undeniable: lots of data-center servers providing lots of computing power and storage to lots of customers. It’s the beauty of scale: Everybody wins—right?

In practice, as you might guess, things get a bit more complicated. Separate parties jostle for the same resources at the same time. Confusion ensues. Things become unpredictable, and scale needs predictability.

Soon, though, a collaboration between Microsoft researchers and members of Microsoft product teams will deliver that much-needed predictability. Researcher Eno Thereska (@enothereska) explains.

“We started to investigate a puzzling behavior of most cloud systems today,” he says. “Their performance is great when a few customers are running at a time, but as soon as the number of customers increases, performance usually suffers. Sometimes it suffers a lot—a client’s job that used to take a few minutes to complete could suddenly take hours.

“The bottom line is that performance is unpredictable. This is a serious problem, because we expect thousands—if not hundreds of thousands—of customers to make use of cloud systems simultaneously, and each customer expects predictable performance. Our research attempts to get to the root cause of performance unpredictability.”

That research, embraced by Microsoft’s Windows Server, Hyper-V, and System Center teams, has resulted in End-to-End Storage QoS (quality of service), a technology announced on Oct. 28 at TechEd Europe 2014 in Barcelona, Spain, as a key feature in the Windows Server Technical Preview.

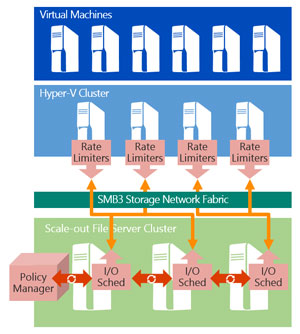

The technology enables the shaping of storage traffic in private cloud deployments. The End-to-End Storage QoS work enforces the minimum and maximum numbers of input/output operations per second for virtual machines (VMs) or groups of virtual machines. Those who host data on the cloud thus can increase their VM deployment while not starving others of storage performance.
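To make the idea of capping operations per second concrete, here is a minimal token-bucket sketch. It is an illustration only, not the Windows Server implementation: the class name `TokenBucket`, the method `try_issue`, and the `burst` and `clock` parameters are all hypothetical. It covers only the maximum side of the policy; guaranteeing a minimum would also require scheduling or admission control, which is not shown.

```python
import time


class TokenBucket:
    """Caps I/O operations at `max_iops` per second (hypothetical helper)."""

    def __init__(self, max_iops, burst=None, clock=time.monotonic):
        self.rate = float(max_iops)
        # Burst capacity defaults to one second's worth of operations.
        self.capacity = float(burst if burst is not None else max_iops)
        self.tokens = self.capacity
        self.clock = clock
        self.last = clock()

    def try_issue(self, ops=1):
        """Return True if `ops` operations may be issued now, else False."""
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond the burst capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= ops:
            self.tokens -= ops
            return True
        return False
```

A caller would create one bucket per VM (or per group of VMs) and call `try_issue()` before each request, queueing or delaying any request for which it returns `False`.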

The vision for this approach, something the researchers refer to as “software-defined storage architecture,” is for storage QoS to be used as a mechanism to enforce tenant service-level agreements (SLAs). Microsoft’s software-defined storage vision is delivered using the SMB3 protocol and highly available scale-out file servers, and research findings help to deliver consistent end-to-end performance. The technology enables per-tenant aggregate SLAs, specified across a set of VMs and multiple storage servers.
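One way to picture a per-tenant aggregate SLA is as a single IOPS budget that must be divided among the tenant's VMs. The article does not say how that division is computed, so the sketch below assumes a max-min fair split as one plausible policy. The function name `split_aggregate_limit` and the shape of the `demands` mapping are hypothetical.

```python
def split_aggregate_limit(aggregate_iops, demands):
    """Max-min fair division of a tenant's aggregate IOPS among its VMs.

    `demands` maps VM name -> requested IOPS. No VM receives more than it
    asks for, and capacity left over by light VMs is shared among the rest.
    """
    allocation = {}
    remaining = float(aggregate_iops)
    # Visit VMs from smallest to largest demand (water-filling).
    pending = sorted(demands.items(), key=lambda item: item[1])
    while pending:
        fair_share = remaining / len(pending)
        vm, demand = pending.pop(0)
        granted = min(float(demand), fair_share)
        allocation[vm] = granted
        remaining -= granted
    return allocation
```

For example, `split_aggregate_limit(1000, {"a": 100, "b": 400, "c": 900})` returns `{"a": 100.0, "b": 400.0, "c": 500.0}`: the two lighter VMs get what they ask for, and the heaviest VM is held to whatever is left of the tenant's budget.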

“The key contribution was to re-architect key components of the system, namely the network and storage resources, to provide performance isolation,” Thereska says. “It turned out that the root cause of the problem was uncontrolled performance interference: A customer’s workload would interfere with another’s at shared resources, such as the network and disks.

“Performance isolation is about reducing that interference to the point where a customer gets the same performance from a shared system that they’d get from a dedicated system.”

The work is explored in detail on the Predictable Data Centers (PDC) webpage and in the paper IOFlow: A Software-Defined Storage Architecture, written by Thereska and fellow researchers Hitesh Ballani, Greg O’Shea, Thomas Karagiannis, Antony Rowstron, Richard Black and Timothy Zhu, along with Tom Talpey, a Windows Server architect.

The collaboration began before 2012, when the researchers started developing the networking QoS concepts.

“When we started doing the networking aspects of the PDC project,” Rowstron says, “there was no request from a product group. We just did it because we felt it was the right thing to do. We then got good feedback from the Windows Server team, and the opportunity opened for expanding the approach to storage.”

For much of 2012 and 2013, the collaborating teams worked together to develop and incubate a new storage-layer QoS architecture. In fall 2013, the teams developed an IO rate limiter that was shipped in the server-message-block (SMB) stack in Windows Server 2012 R2. Meanwhile, the collaboration broadened to include the Hyper-V and System Center teams to build a policy manager to orchestrate the behavior of rate limiters in a distributed system.

Program managers Jose Barreto and Patrick Lang took the lead in delivering the feature to the Windows Server Technical Preview.

“This contribution,” Ballani says, “is relevant to several different groups in Microsoft. As such, the solution needed to meet a high bar and satisfy several diverse requirements. For example, it was a goal for the solution to be immediately deployable without requiring customers to change their applications or how they deploy them. That led to a design decision to place part of the solution, the rate limiters, in the hypervisor.

“It was also highly desirable to move steps ahead of the competition and provide customers with a richer set of SLAs than what’s currently out there. That led to design decisions to provide aggregate SLAs and manage a set of VMs as a whole.”

It wasn’t easy, but it worked.

“Something on this scale,” Rowstron observes, “takes a lot of skilled people across all of Microsoft.”

Talpey can only agree—and look forward to more of the same.

“This has been an incredibly productive engagement from the Windows Server side,” he says. “I foresee significant additional engagement on this technology. We have only scratched the surface of its potential.”

This article was originally written for Inside Microsoft Research.