Microsoft Server Virtualisation

By Alan Richards, Senior Consultant at Foundation SP and SharePoint MVP.

Overview

All businesses are constantly looking for ways to save money or become more efficient, and one of the largest drains on funds is normally the IT department, a necessary evil in most businesses’ eyes. Without IT, businesses wouldn’t be able to run their core services and definitely wouldn’t be able to have an online presence, which in this age of online shopping would sound the death knell for any business. There comes a time in the life of all CIOs when the board asks, ‘How can you reduce your budget?’ Of course, what they actually want is for you to reduce your budget while still providing exactly the same service, if not more. Virtualisation is one of those technologies that can help reduce not only what you spend on actual hardware but also your operating costs. For a moment in time you may even become the board’s blue-eyed boy.

The School had recently invested large amounts of money in the network infrastructure and, at the time of the review, was about to embark on a program of server replacement. At the time server virtualisation was in its infancy, with Microsoft just starting to make inroads into the market, and to be perfectly honest they were sceptical. They could not see any benefit to users in taking services that ran on physical servers and putting them onto one large host to share resources. Surely this would reduce the functionality and responsiveness of the servers, especially file servers, which in any educational environment are among the most heavily used?

So after reading many articles, a bit like this one, they took the decision to run a test environment. Their server replacement program was put on hold for a year, apart from 4 servers that could in no way carry on any longer. A high specification server was purchased and the 4 servers that required replacement were transferred to the new virtual host. This was run for a year with constant monitoring of all the key elements:

- Disk usage

- Network utilization

- CPU load

- End user experience

The results of the year-long test were very pleasing and the decision to move to a fully virtualised environment was taken.

They now run a fully virtualised server environment using Windows Server 2008 R2 and Hyper-V, and this eBook will lead you through all the decisions they took, from the initial planning phase all the way through to the day to day running of the systems. This eBook is not designed to be a how-to, more an aid to help you make the right decisions when moving to server virtualisation.

Running A Trial

When you first look at starting out on the virtualisation road map, running a trial is advisable. You may be more than happy with researching what other people have done and pulling together all of the information available in blogs, white papers and videos, but a trial will be able to tell you details about your own network and how your infrastructure can cope with the demands of virtualisation.

How you run the trial will depend on the information you want to discover and to a certain extent how much money you want to spend on the hardware.

A simple trial would consist of a single server with enough on board storage to cope with 3 or 4 virtual servers and network connectivity to service the virtual servers. This type of test will allow you to monitor the loads on your network infrastructure, the actual network cards on the servers and also allow you to monitor the end user experience.

If you have decided on virtualisation and your trial is going to be used simply to collect data to help in the planning and installation phases, then you could go for a trial setup with a single server and the storage system of your choice. This setup will allow you to collect all the required data and will also make the transition to a fully virtualised environment a lot easier.

Whichever method of trial you decide on, the data collected will never go to waste as it can be used to inform your decision making throughout the process of virtualisation.

Planning

Fail to prepare…Prepare to fail

When implementing server virtualisation the above phrase fully applies. The planning phase should not be overlooked or entered into lightly. IT is core to any business from high street banks to the smallest of primary schools and if that IT fails or is not fit for purpose then the business could fail.

What Have We Got

The first part of the planning phase should be finding out what you have got and how it is being used. Before you can plan how many virtual hosts you need, you need to know which servers you are going to virtualise and the load that they are currently under. The load on servers is an important factor in deciding on your virtualisation strategy; for instance, it would not be advisable to place all your memory hungry servers on the same virtual host.
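The point about not stacking memory hungry servers on one host can be illustrated with a minimal Python sketch. The server names and memory figures are hypothetical, and the greedy "largest first onto the least loaded host" rule is just one simple approach, not something Hyper-V does for you.

```python
def spread_by_memory(servers, host_count):
    """Assign servers to hosts, largest memory first, always onto the
    host that currently has the least memory allocated."""
    hosts = [{"servers": [], "memory_gb": 0} for _ in range(host_count)]
    by_size = sorted(servers.items(), key=lambda item: item[1], reverse=True)
    for name, memory_gb in by_size:
        target = min(hosts, key=lambda host: host["memory_gb"])
        target["servers"].append(name)
        target["memory_gb"] += memory_gb
    return hosts


# Hypothetical audit results: memory in use per server, in GB.
measured = {"sql": 16, "mail": 12, "file": 8, "web": 4}
plan = spread_by_memory(measured, host_count=2)
for number, host in enumerate(plan, start=1):
    print(f"Host {number}: {host['servers']} ({host['memory_gb']} GB)")
```

With these made-up figures the two largest servers end up on different hosts and each host carries 20 GB of virtual server memory.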

Finding out what you have can include:

- A simple list of servers

- Disk utilisation monitoring

- Network monitoring tools to map out the network load and utilization

- CPU load

All of this information will help you answer some key questions. Answering them without the right information could seriously affect the way your virtualised environment performs. These key questions can include:

- How many virtual hosts do I need

- What size storage should I purchase

- What type of storage (iSCSI, Fiber Channel etc)

What To Virtualise

A major question you will have to ask yourself is what services you are going to virtualise. Theoretically you can virtualise any Windows based server and also any non-Windows based server, but whether you should virtualise everything is a question that has produced some very heated debates.

Let’s take, for example, one of the core services of any Windows based domain: Active Directory. At its most basic level it provides the logon functionality for users to access computers and therefore their network accounts. If this service fails your network is fundamentally compromised, so one argument for virtualising it would be that you are providing a level of fault tolerance by it not being a physical server. However, a downside to this is what happens if your virtual hosts are members of the domain and your virtualised domain controller fails – how do you log in to your host if it requires a domain account?

Obviously this is taking it to the extreme and there are ways around this scenario but the point this argument is trying to make is that you need to carefully consider what services you virtualise.

As a rule it is best practice to keep at least one physical domain controller to provide Active Directory functionality. You can then have as many virtualised domain controllers as you like for fault tolerance.

Other factors to weigh when deciding what to virtualise include:

- Physical connection (there is no way to connect to external SCSI devices, such as tape drives, in Hyper-V).

- Security, both physical and logical (is it, for example, a company requirement to have your root certificate authority server locked away somewhere secure?).

Microsoft Assessment & Planning Toolkit

While there is no substitute for ‘knowing your network’ there is a tool provided by Microsoft that will make life slightly easier for you. The Microsoft Assessment & Planning (MAP) toolkit will look at your current servers and provide you with suggested setups for your virtualised environment.

I would suggest running the tool and using the suggested solutions as a starting point for your own planning, but I wouldn’t treat the solutions from the MAP toolkit as gospel.

You can download the tool from the following location.

https://www.microsoft.com/download/en/details.aspx?displaylang=en&id=7826

Virtualisation Scenario

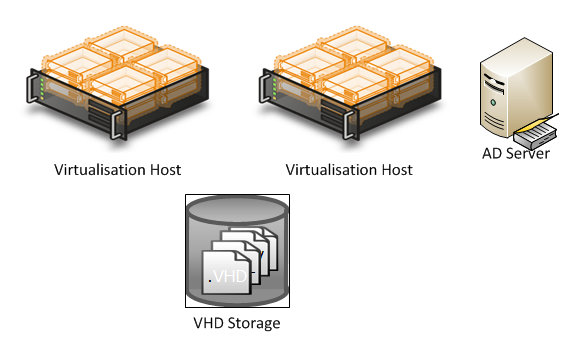

Now that we have completed our planning step, let’s look at a typical small scale scenario. Imagine you are a business with only 4 servers, so not very big at all really, one of which is your Active Directory server, maybe a setup like the one below.

For this scenario you could quite easily use one server with lots of disk space as your virtualisation host and end up reducing your servers from 4 to 2, as shown below.

While this scenario would work and be relatively low cost to implement, it does fall down in two key areas:

- Future Growth – As your need for more servers increases, it will put more strain on the resources of this single host, and ultimately this may have a detrimental effect on your end user experience.

- Redundancy – If your host fails then all of your virtual servers will fail as well, because all the virtual hard drives are stored on your single server. This would not be ideal.

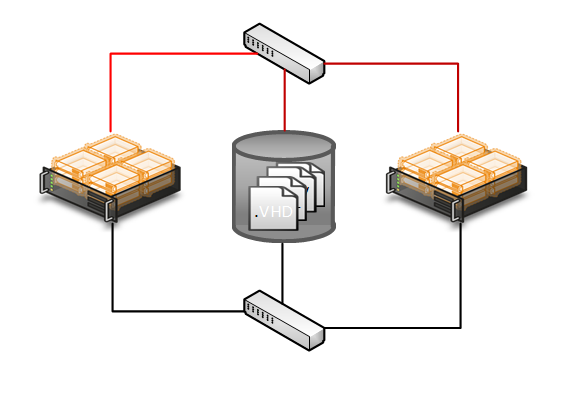

A preferred solution is shown below. It gives you redundancy and the ability to grow your virtualisation environment as your needs grow.

So how is this scenario so much better than our first one?

- Future Growth – While initially this scenario may seem a bit of an overkill, it allows you to grow your environment as your needs change without having to completely change your setup.

- Redundancy – Because all the virtual hard drives are stored on a central storage system, the failure of one host will not stop your virtual servers from running. Failover clustering will take care of the transfer of services between hosts automatically and your users’ experience will not be affected.

Virtualisation Technology Decision Factors

With the planning phase now over another question you should be asking yourself is which virtualisation technology you are going to implement. There are a number of players in the market but the two key ones are:

- VMware

- Microsoft Hyper-V

Both products will fulfil your needs when it comes to virtualising your servers, but there are two key factors you should consider before deciding on your chosen technology:

Cost

VMware will cost you; that is a given, and be under no illusions, it is not cheap. Hyper-V, on the other hand, is built into versions of Windows Server at no extra licensing cost.

There is also a free standalone version of Hyper-V Server which can be downloaded using the link below. This version is purely for use as a Hyper-V server and does not have the same management GUI as the Hyper-V version in Windows Server.

https://www.microsoft.com/download/en/details.aspx?displaylang=en&id=3512

Licensing

Licensing can be expensive, so when undertaking virtualisation you need to consider how you are going to license both your hosts and your virtual servers.

Your hosts will need a version of Windows Server installed to run Hyper-V, and which version you install can have a dramatic effect on how you license your virtual servers. The table below illustrates a typical large environment and how the version of Windows installed on the hosts affects its licensing requirements.

| Number of Hosts | Windows Version Installed on Host | Included VM Licenses | VMs Installed | Licenses Purchased by Business |
| --- | --- | --- | --- | --- |
| 5 | Standard | 1 | 30 (6 per host) | 30 |
| 5 | Enterprise | 4 | 30 (6 per host) | 15 |
| 5 | Datacentre | Unlimited | 30 (6 per host) | 5 |

As you can see, paying a little bit extra for the datacentre version of Windows Server can help save you money by reducing the number of server licenses you have to purchase. This may not fit every implementation, but for large Hyper-V implementations licensing can play a huge part both in the choice of technology provider and in your on-going costs. Of course with VMware, as well as paying for the actual software, there are no ‘included’ Windows licenses, so you will have to license every copy of Windows you install, whether it is physical or virtual.

Hardware Decision Factors

So far we have looked at planning, a virtualisation scenario and what you should think about before deciding on which technology provider to choose for your implementation. The final step in this process is looking at what hardware to purchase, and how much.

Working out what specification and how many host servers you will purchase should centre on a number of factors:

- Number of virtual servers

- Network bandwidth required

- Memory requirements of virtual servers

- CPU requirements of virtual servers

- Storage

All of the above are going to have an impact on your virtualisation design and purchasing, so let’s look at each one in more detail.

Number of Virtual Servers

As we saw in the virtualisation scenario, the number of virtual servers you plan on hosting can have a large effect on your hardware decisions. If you only ever plan on hosting a small number of servers then you may very well get away with just one virtualisation host, but remember that this will not give you any redundancy if your host dies, or any room for future growth.

If you plan on hosting a large number of virtual servers then this will force you down certain paths about the number of hosts and the storage of the virtual hard drives.

Network Bandwidth Required

When you are designing your host servers, the network resources required by each virtual server will have an impact on the number of network interface cards (NICs) that you need built into the host server. If you host 5 servers on a host and only have 1 NIC then all data traffic will be going down that one network card, which may have a detrimental effect on your users’ experience.

One item that will be discussed later in this book is the setting up of the management side of virtualisation which will take up at least one NIC for management traffic across the network.
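To put rough numbers on the NIC question, here is a minimal Python sketch. It assumes the worst case where every virtual server is busy at the same time and shares the data NICs evenly, and it reserves one NIC for management traffic as described above. The function name and the 1000 Mbps NIC speed are illustrative assumptions, and storage traffic is deliberately left out.

```python
def per_server_share_mbps(nic_count, nic_speed_mbps, virtual_servers,
                          management_nics=1):
    """Worst-case bandwidth per virtual server when all are busy at once.

    NICs reserved for management traffic are excluded from the pool.
    """
    data_nics = nic_count - management_nics
    if data_nics < 1:
        raise ValueError("no NIC left for virtual server traffic")
    return data_nics * nic_speed_mbps / virtual_servers


# 5 virtual servers on a host with 2 NICs versus 4 NICs (1000 Mbps each).
print(per_server_share_mbps(2, 1000, 5))
print(per_server_share_mbps(4, 1000, 5))
```

The real figure depends on how bursty each server's traffic is, which is exactly what the monitoring data from your trial should tell you.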

Memory Requirements

As anyone who works with computers knows, memory has a massive effect on how computers perform. This is no different in servers, and in some ways it is more important. When designing the memory requirements for your host servers you will need to consider the memory requirements of the host server plus those of all of the virtual servers.

Let’s look again at the setup from earlier in the book.

Let’s assume that each of these servers has 10 GB of memory and we are going to virtualise all of them except the AD server. A simple calculation shows us that for the 3 virtual servers we will need 30 GB of memory, and if we give the host the minimum of 4 GB then the host server will need 34 GB of memory.

Sounds simple, and to a certain extent it is, but as we will discuss later in the book, if you are planning on setting up your virtualisation environment to have redundancy you will need to allow enough memory on your hosts to cope with the failure of 1 or 2 hosts and the failover of virtual servers to the remaining hosts.

In Hyper-V this is called failover clustering and is the best way to ensure that your users are not affected if you suffer the failure of one or more virtualisation hosts.
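The memory calculation above, extended with failover headroom, can be sketched in a few lines of Python. This is an estimate under stated assumptions: the function name is hypothetical, the 4 GB host allowance is the figure used above, and the even split across surviving hosts is a lower bound because a virtual server cannot be divided between hosts.

```python
import math


def memory_per_host_gb(vm_memory_gb, host_count, host_overhead_gb=4,
                       tolerated_failures=0):
    """Estimate the memory each host needs so the surviving hosts can
    carry every virtual server after `tolerated_failures` hosts are lost.

    The even split is a lower bound: whole virtual servers cannot be
    divided, so check the result against your largest servers.
    """
    surviving = host_count - tolerated_failures
    if surviving < 1:
        raise ValueError("at least one host must survive")
    return math.ceil(sum(vm_memory_gb) / surviving) + host_overhead_gb


vms = [10, 10, 10]  # the three virtualised servers from the example

# Single host, no redundancy: 30 GB for the servers plus 4 GB for the host.
print(memory_per_host_gb(vms, host_count=1))

# Two clustered hosts that must survive the loss of one: each host has to
# be able to run everything on its own.
print(memory_per_host_gb(vms, host_count=2, tolerated_failures=1))
```

Both cases come out at 34 GB per host, which shows why a two-host cluster roughly doubles the memory you buy compared with a single host.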

CPU Requirements

Designing your CPU requirements for your host servers is done in a very similar way to the memory requirements. You need to consider how much CPU activity and load each of your virtual servers will take plus the load that will be required by the host operating system.

You also need to give consideration to what will happen in the event of a host failure and the failover of other virtual servers to the remaining hosts.

Storage

In a virtualised environment storage plays a major role. In a simple one server environment this can be just a large amount of hard disk space on the actual host. In larger environments the storage is normally a NAS, SAN or other form of centralised storage.

Some of the factors you need to consider when looking at your storage design are:

- Size

- Redundancy

- Connectivity

- Expandability

Let’s look at each of these factors one by one.

Size

The size of your storage will depend entirely on how many virtualised servers you are going to run and what they are going to store. For example, a web server is not likely to take up a lot of hard drive space, whereas a large SQL server is going to take up a large amount of space.

You also need to consider your growth plans. While you can design your storage for your current selection of servers, you also need to allow for how those servers will grow and for the addition of more servers.
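A rough way to turn growth plans into a number is sketched below in Python. The compound annual growth model, the function name and all of the figures are assumptions for illustration; use the disk utilisation data from your own monitoring in their place.

```python
def projected_storage_gb(current_gb, annual_growth_rate, years,
                         planned_new_servers_gb=0):
    """Project storage need assuming compound growth of existing data,
    plus a fixed allowance for servers you plan to add."""
    grown = current_gb * (1 + annual_growth_rate) ** years
    return grown + planned_new_servers_gb


# Hypothetical: 2000 GB in use today, growing 20% a year for 3 years,
# plus 500 GB set aside for new servers.
need = projected_storage_gb(2000, 0.20, 3, planned_new_servers_gb=500)
print(f"Plan for roughly {need:.0f} GB")
```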

Redundancy

With centralised storage you could introduce a single point of failure. With Microsoft Hyper-V and failover clustering you are taking a decision to implement a system that can cope with the failure of virtualisation hosts. If you don’t take similar steps with your storage solution then you are introducing a fail point: if the SAN or NAS fails, access to your virtual server hard drives will fail and therefore your servers will not run.

There are various ways you can mitigate the failure of your storage system.

Duplication

One of the ways to ensure your storage system is not a single point of failure is to have a duplicate system. This method is by far the most reliable. By setting up a duplicate storage system that is constantly synchronised with the live data you remove the single point of failure. However this method is expensive and requires storage solutions that are capable of failover, hence narrowing your field of choice when it comes to your storage.

RAID

Setting up your storage solution for RAID 5 or 10 will mitigate the effects of a single drive failure. This requires a storage solution capable of RAID (which is probably all of them), but above all your storage solution must support hot swappable drives, so that you will not need to shut down the storage system to replace the faulty drive.
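The RAID level you pick also affects how much of the purchased disk space you can actually use, which feeds back into the sizing decision. A minimal Python sketch follows, using the standard rules that RAID 5 gives up one drive's worth of space to parity and RAID 10 mirrors every drive. The function name and the drive counts and sizes are illustrative.

```python
def usable_capacity_tb(level, drive_count, drive_size_tb):
    """Usable capacity of an array of identical drives."""
    if level == 5:
        if drive_count < 3:
            raise ValueError("RAID 5 needs at least 3 drives")
        # One drive's worth of capacity is used for parity.
        return (drive_count - 1) * drive_size_tb
    if level == 10:
        if drive_count < 4 or drive_count % 2:
            raise ValueError("RAID 10 needs an even number of drives, 4 or more")
        # Every drive is mirrored, so half the raw capacity is usable.
        return drive_count // 2 * drive_size_tb
    raise ValueError("only RAID 5 and RAID 10 are covered here")


# Eight 2 TB drives: 16 TB raw.
print(usable_capacity_tb(5, 8, 2))   # RAID 5
print(usable_capacity_tb(10, 8, 2))  # RAID 10
```

RAID 5 leaves more usable space from the same drives, while RAID 10 spends half the raw capacity on mirroring.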

Backup

Finally, ensure your data and your virtual hard drives are backed up. Backing up your VHDs will mean that if your storage solution fails you will only need to recover the VHDs to return your system to a functional state. Most of the backup software on the market can perform backups of virtual servers, but an obvious choice would be Microsoft System Center Data Protection Manager, which has full Hyper-V support.

Connectivity

How you connect to your storage solution is also a key factor. Two of the main choices are iSCSI and Fiber Channel, and which you choose can be affected by a number of factors.

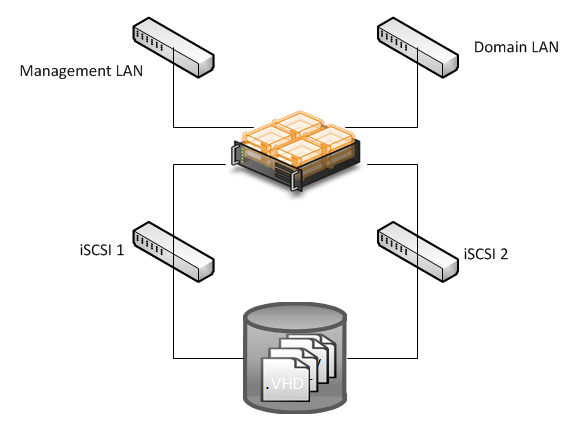

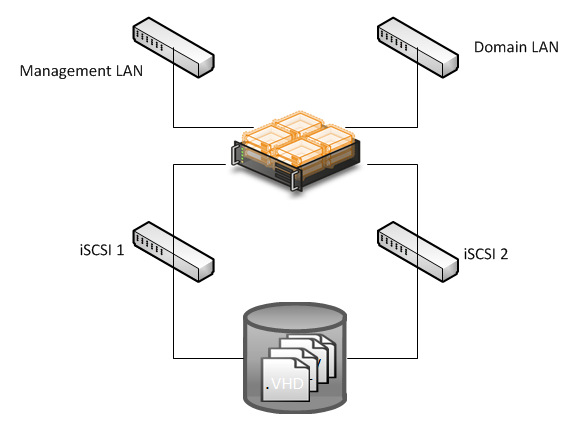

Once you have chosen your system of connectivity you need to consider how much traffic will be travelling between your hosts and the storage system. You also need to consider redundancy again: a single point of failure could be introduced if you use a single network cable and switch to connect all your hosts to your SAN or NAS.

A simple scenario is shown in the diagram below. It shows two routes for each host to access the storage system, which removes the connectivity single point of failure.
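The "two routes per host" rule is easy to check against a cabling plan. The Python sketch below assumes a hypothetical plan that records which switch each storage cable from a host plugs into; the host and switch names are made up.

```python
def hosts_without_redundant_paths(paths):
    """Return hosts that reach the storage through fewer than two
    distinct switches, i.e. hosts with a connectivity single point of
    failure."""
    return [host for host, switches in paths.items()
            if len(set(switches)) < 2]


# Hypothetical cabling plan: host -> switch used by each storage cable.
cabling = {
    "host1": ["switch-a", "switch-b"],
    "host2": ["switch-a", "switch-a"],  # two cables, but the same switch
}
print(hosts_without_redundant_paths(cabling))
```

In this made-up plan host2 is flagged: it has two cables, but both depend on the same switch.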

Expandability

As you consider your storage solutions you should also think about the future. When setting up Microsoft Hyper-V you will need to connect your failover cluster to your storage system and the LUNs you set up on it. If you do not think about how your environment will grow in the future, you could lock yourself into a situation where, if your storage system becomes full, you need to remove all the current LUNs before you can increase the amount of available storage space.

So let’s explain that in a bit more detail. When you purchase your storage solution you will purchase two distinctly different items: the physical hard drives and the ‘housing’ for them to go in. The housing is the piece of equipment that will manage your hard drives, the iSCSI connections and the partitioning of the hard drives into what are called LUNs, which you can then connect to your hosts.

What you need to consider when purchasing the ‘housing’ is the complexity of the management system. Some of the low end storage solutions will have the basics, such as RAID array ability, dual controllers and dual iSCSI connections, but what they won’t have is dynamic LUN expansion. This basically means that once you set the size of the LUN on the storage box it is fixed, and if you ever want to increase the size you will need to back up all the data, delete the LUN and then rebuild it with the increased size. Some of the more expensive storage solutions have the ability to increase the size of a LUN as you insert more physical hard drives.

A major factor in this decision is obviously cost; the more features in a storage solution the higher the cost.

Installation

So you have run your trial, planned your virtualisation setup and decided on the specifications for your hardware. All you need to do now is install the system, which sounds simple and to a certain extent is.

The basic setting up of the hardware and storage system is relatively straightforward. This book is not designed to be a how-to for virtualisation, more a thought provoker, but the basic steps for setting up your hardware include:

- Connecting your hosts to your network

- Setting up your storage LUNs

- Connecting your hosts to your storage solution

- Installing Windows Server to your hosts

- Enabling the Hyper-V role

- Enabling the failover clustering role

- Installing your virtual servers

While these are the basic steps for installing a virtualised infrastructure there are, as you have probably guessed, a number of factors you have to consider.

Network Connection

Network connectivity can play a large part in your end user experience; if you do not assign enough network bandwidth to both the host server and the virtual machines you could create bottlenecks which will then affect how fast data is returned to your users. You also have to consider the management of the host and connection to your storage solution. In an ideal world your host server would have separate connections for each of the networks it has to service. The simple diagram below shows a host server with a series of network connections.

In this scenario the server would require 4 network interfaces; however, this only gives the virtual servers on the host a single connection to the domain LAN. A much better scenario would be to increase the number of network interfaces so that once Hyper-V is installed you can assign more interfaces to the domain LAN.

Setting Up Your Storage LUNs

A LUN on a storage unit is a logical space that can then be assigned to the virtualisation infrastructure. How you set up your LUNs and assign them depends heavily on the manufacturer of your chosen storage solution. When setting up your LUNs you need to consider how much data you are likely to store and how much this data may expand. These two factors will affect how many LUNs you create and the size you assign to each.

Failover Clustering

Failover clustering is the technology used to allow your virtual servers to ‘failover’ to another host if their parent host goes down for any reason. Failover clustering is relatively simple to set up, and running the wizard on a host will guide you through the steps for enabling the technology.

The first step in setting up failover clustering is to validate the cluster. To do this you will need all your hosts set up, the Hyper-V role installed and the connection to your storage solution complete and working. Validation of the cluster carries out checks on the system and simulates failed hosts. While you can create the cluster even if elements of the validation fail, it is worth noting that if you place a support call with Microsoft in the future, they will ask to see the original validation report to verify that the system was fully functional when set up.

It is also worth making sure validation passes, if only to give you peace of mind that your system is ‘up to the job’.

Management

So you now have a fully functioning virtualisation infrastructure and all is going well. The management of virtualisation is a relatively simple job; you manage your virtual servers as you would any physical server, with updates and patches all installed to a schedule that you as the Network Manager have decided upon.

The management of the actual Hyper-V hosts is also fairly simple in that, as with any physical server, updates and patches are scheduled as per your company or network team policies. The only key difference is that any restart of a host server will affect a number of virtual servers, so it requires a little more thought. While it is true that with failover clustering installed the virtual servers will failover once the host restarts, it would be a much better plan to move the virtual servers to another host before any updates take place. This way the end users will not get any interruption of service.

The main management tool for a Hyper-V environment with failover clustering installed is the failover cluster manager.

From this management suite you can

- Create New Virtual Servers

- Stop / Shutdown / Restart Virtual Servers

- View the health of network connections / storage connections / networks

- Move / Migrate Virtual Servers

The moving of virtual servers between hosts can be done in a number of ways, but if you want your end users’ work not to be interrupted then live migration is the service to use. Live migration moves a virtual server from one host to another without interrupting network access to it, so your end users will not notice any interruption in network connectivity.

The failover cluster manager is a simple to use application that provides all the necessary tools to effectively manage your virtualisation environment and, because it’s part of the Windows management suite, it will be very familiar to anyone who works with Windows Servers.

Conclusions

The aim of this eBook article has always been to help you in the decision making process with regards to virtualisation. You need to decide:

a) Whether virtualisation is right for your environment

b) Which technology provider to use

c) Which storage solution to choose

d) The specification of your hardware

While we can’t tell you if virtualisation is right for your environment, we hope this eBook article helps you in making those other decisions.

The key points you can take from this book should help you in your virtualisation journey. Microsoft Hyper-V is a powerful virtualisation technology and, as it is built into Windows Server 2008 R2, you don’t have to pay any more for it. The licensing model that Microsoft provides for virtual servers can actually save you money on your licensing costs.

The free standalone Hyper-V Server gives you the option of installing a cut down version of Windows that is dedicated to virtualisation, ideal for environments that don’t currently run Windows.

Combine Microsoft’s Hyper-V and Failover Clustering technology and you have a solution that is cost effective, resilient and an extremely powerful tool in managing your virtualised environment.

And finally, as they used to say on all good news programs in the UK, did the School in question actually save any money?

Well, the answer to that is yes: the reduction in the number of servers to maintain, cool and generally look after has given them savings of approximately £12,000 a year.

The School in question was a very forward thinking one and took on Hyper-V just as it was making inroads into the market. The next step for them is to look at upgrading their current virtualisation farm to Windows Server 2012 and start to take advantage of all the great new features that it brings.