WS2012 Designed for the cloud - real benefits for Physical Embedded Servers

Several key goals of the WS2012 rewrite were driven by the requirements of the cloud business. Datacenters that provide cloud capability are generally massive, and their requirements are normally a superset of those found in a smaller environment. These requirements include uptime, resiliency, flexibility, cost savings, manageability, speed and security.

In this article, I would like to explain some of the new features that dramatically improve the speed of the WS2012 servers sold by our OEMs. These features matter most in environments that depend on throughput, such as the storage, financial, industrial control, gaming, printing, communications and broadcast verticals.

SPEED

In order to expand performance on a server, many things must be in place.

Multiple processors, and the more the better.

More memory with dynamic allocation.

Higher bandwidth through the networks.

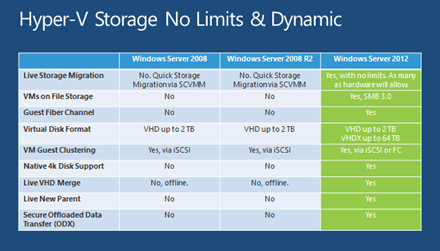

An unbounded connection to disk farms.

Self healing capability.

To increase the performance of datacenters servicing the cloud, Microsoft implemented support for up to 640 logical processors.

More memory was needed to service all these processors and the virtualized instances running on them. So not only were the upper limits for memory increased (to 4TB), but the way in which memory is allocated and used was also changed. Support for SSDs is incorporated as well.

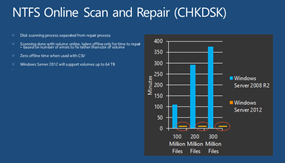

Storage Spaces allows multiple storage technology types to be combined into a single storage pool. And this is no ordinary storage pool. WS2012 can run ReFS (Resilient File System), which performs file checks on the fly. Imagine running a file system check of 300 million files in seconds, a direct result of the files having been checked in advance. In essence, this provides self-healing data that does not have to go through cleansing and correction at the storage level.

Also implemented was support for off-loading storage transfers from the server computer to the storage arrays of vendors like EMC, Hitachi and Network Appliance. (This is not only for SAN environments; support for NFS was added as well.) This optimizes usage of the pipe, and expands our ability to work in heterogeneous environments and service existing disk farms.

In order to maximize the speed of the disks, the use of 4K blocks has become the standard. This is what disk drives want to see.
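To make the 4K point concrete, here is a minimal sketch in Python of what "aligned to 4K blocks" means for an I/O offset or buffer size. The function names and the 4096-byte constant are illustrative assumptions for this example, not part of any WS2012 API.

```python
# Illustrative only: BLOCK_SIZE and both helpers are hypothetical names
# chosen for this sketch, not WS2012 interfaces.
BLOCK_SIZE = 4096  # bytes in one 4K block


def is_aligned(offset: int, block: int = BLOCK_SIZE) -> bool:
    """Return True when offset falls exactly on a block boundary."""
    return offset % block == 0


def align_up(n_bytes: int, block: int = BLOCK_SIZE) -> int:
    """Round n_bytes up to the next multiple of block."""
    return ((n_bytes + block - 1) // block) * block


if __name__ == "__main__":
    for size in (512, 4096, 5000):
        print(size, is_aligned(size), align_up(size))
```

A request that is not a multiple of the block size is rounded up here so that it covers whole blocks, which is the general idea behind sizing I/O to match the drive.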

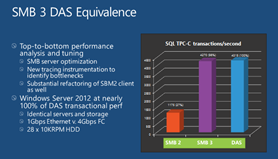

SMB 3.0 adds multichannel support, and together with a refactoring of SMB 2.0 this allows network transfers to reach 97% of DAS (direct-attached storage) speed. Additionally, files are always opened in a “write through” mode.

(As an aside, de-duplication at the file level was incorporated. This not only saves space, but also helps reduce time spent searching for files. Support for 256 iSCSI targets was added as well.)

And finally, NIC teaming was incorporated, with the capability of creating a failover cluster of 16 channels or aggregating 32 ports (with load balancing in both scenarios) to maximize throughput. NIC products from multiple vendors can even be blended together. With this kind of throughput, and support for networked storage, you can see how an IP SAN can be built with throughputs of up to 320Gb/sec (using 10GbE network cards). What used to be hard is now easy.
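The 320Gb/sec figure follows from simple arithmetic: 32 aggregated ports at 10Gb/sec each. A short Python sketch of that calculation is below. The function names are made up for this example, and the result is a theoretical ceiling that ignores protocol overhead.

```python
def aggregate_gbps(ports: int, link_gbps: float) -> float:
    """Theoretical aggregate bandwidth of a NIC team, in gigabits per second."""
    return ports * link_gbps


def gbps_to_gigabytes_per_sec(gbps: float) -> float:
    """Convert gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbps / 8


if __name__ == "__main__":
    total = aggregate_gbps(ports=32, link_gbps=10)
    print(f"{total} Gb/sec is about {gbps_to_gigabytes_per_sec(total)} GB/sec")
```

Real throughput would depend on the workload, the switches and the storage behind the network, so treat this as an upper bound rather than a measurement.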

We measured 1 million IOPS in some of our tests, and we did not even reach the upper bound of WS2012. (I spelled out “million” because I was certain some would think that number was a typographical error.) This is due to the previously mentioned features, as well as SMB Multichannel with SMB 3.0. It means that very powerful storage can be built for a tenth of the price companies pay today to EMC or NetApp.

If these performance enhancements are of interest to you, go to https://www.microsoft.com/windowsembedded/en-us/evaluate/windows-embedded-server.aspx, download a demo copy of WS2012, and begin testing.