Exchange 2010: The mystery of the 9223372036854775766 copy queue…

Database Copy Self-Protection Mode

What would you say if I told you that a copy queue length (CQL) of 9 quintillion log files was not a bad thing? By the way, if you are wondering, that works out to a CQL of 9,223,372,036,854,775,766, a number so big that it cannot be entered into Windows Calculator, and so big that if it represented actual transaction log files, it would require 8 yottabytes of storage, and sadly, as of this year, no storage or network system on the planet has reached even one thousandth of a yottabyte (which would be a zettabyte, by the way) of information.

But fear not, as you don’t need to start collecting all of the storage on the planet to plan for a CQL this large, but it is a real and valid value for a passive copy’s CQL, and in recent weeks, I’ve worked with a few customers who have experienced this.

Background

During regular operations, the Microsoft Exchange Information Store service (store.exe) and the Microsoft Exchange Replication service (msexchangerepl.exe) on DAG members hosting an active database copy write two values to the cluster registry at HKLM\Cluster\ExchangeActiveManager\LastLog:

- DatabaseGUID with a decimal value representing the last log generated by the active copy

- DatabaseGUID_TIME with the system time of when that log file was generated

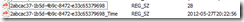

Here is an example of these entries in the cluster registry:

To decipher these entries, you can use Get-MailboxDatabase to get the GUID for a mailbox database:

[PS] C:\Windows\system32>Get-MailboxDatabase dag-db0 | fl name,*guid*

Name : DAG-DB0

Guid : 2abcac37-1b5d-4b9c-8472-e33c65379698

These values are written to the cluster registry on the server hosting the active copy, and native cluster registry replication is used to propagate this information to all other DAG members. DAG members that host a passive copy of the database use this information (the last log file generated by the active copy) along with information about the last log file replicated to the passive copy, to determine the CQL for the database copy. Thus, it is critical that all DAG members have up-to-date values, as the CQL is used by Active Manager to evaluating whether or not to mount a database in response to a failover.

A Larger-than-Life CQL

In Exchange 2010 SP1, we changed how we determine the CQL. In addition to tracking the last log generated by the active copy, we also track the last time that log file was generated. This was done specifically to prevent situations in which a passive copy is not aware of log files generated by the active copy and makes automount decisions based on stale data.

We use the DATABASEGUID_Time entry for this purpose. If the difference between the timestamp recorded in the cluster registry and the system time on the server hosting the passive copy is off by more than 12 minutes, the Microsoft Exchange Replication service on the server hosting the passive copy places the database copy into a self-protection mode. This is done by setting the CQL for that passive copy to 9223372036854775766. Because a passive copy can be activated and automatically mounted only when its CQL is equal to or less than the configured value for AutoDatabaseMountDial, this has the effect of preventing the passive copy from ever mounting automatically. After all, a value of 9223372036854775766 will always be higher than any possible value for AutoDatabaseMountDial.

Where Did the Time Go?

So why would a condition exist that causes the time discrepancy to be greater than 12 minutes in the first place? This can actually happen for a few reasons:

- The Cluster service on the server hosting the active copy might be having a problem writing updates even though the node remains in cluster membership.

- The Cluster service on the server hosting the passive copy might be having a problem receiving updates even though they remain in cluster membership.

- The Information Store service and Exchange Replication service could be stopped on the server hosting the active copy. (Remember that a copy that is “active” simply signifies the node that owns the copy not the actual mount / dismount state of the database).

- A datacenter switchover is being performed and more than 12 minutes have elapsed between the time when the failed DAG members were stopped and when the remote DAG members were activated.

What to Do When CQL Reaches 9223372036854775766?

To recover from this situation, an administrator can simply perform a database switchover. Note, though, that the switchover will need to be performed using the Exchange Management Shell, as the administrator will need to force the move by using multiple switches and the MountDialOverride parameter. Because the following command skips all of the built-in safety checks for a passive copy, it should be used only when you know that the copies to be activated were healthy prior to the copy going into self-protection mode.

Attempted move without overrides:

Move-ActiveMailboxDatabase DAG-DB0 -ActivateOnServer DAG-2

Confirm

Are you sure you want to perform this action?

Moving mailbox database "DAG-DB0" from server "DAG-1.domain.com" to server "DAG-2.domain.com".

[Y] Yes [A] Yes to All [N] No [L] No to All [?] Help (default is "Y"): y

Identity ActiveServerAtS ActiveServerAtE Status NumberOfLogsLost RecoveryPoint MountStatus MountStatus

tart nd Objective AtMoveStart AtMoveEnd

-------- --------------- --------------- ------ ---------------- ------------- ----------- -----------

DAG-DB0 dag-1 dag-1 Failed Dismounted Dismounted

An Active Manager operation failed. Error The database action failed. Error: An error occurred while trying to validate the specified database copy for possible activation. Error: Database copy 'DAG-DB0' on server 'DAG-2.domain.com' has a copy queue length of 9223372036854725486 logs, which is too high to enable automatic recovery. You can use the Move-ActiveMailboxDatabase cmdlet with the -SkipLagChecks and -MountDialOverride parameters to move the database with loss. If the database isn't mounted after successfully running Move-ActiveMailboxDatabase, use the Mount-Database cmdlet to mount the database.. [Database: DAG-DB0, Server: DAG-2.domain.com]

+ CategoryInfo : InvalidOperation: (DAG-DB0:ADObjectId) [Move-ActiveMailboxDatabase], AmDbActionWrapperException

+ FullyQualifiedErrorId : 3F936D4B,Microsoft.Exchange.Management.SystemConfigurationTasks.MoveActiveMailboxDatabase

Successful move with overrides:

Move-ActiveMailboxDatabase DAG-DB0 -ActivateOnServer DAG-2 -SkipHealthChecks -SkipActiveCopyChecks -SkipClientExperienceChecks -SkipLagChecks -MountDialOverride:BESTEFFORT

Confirm

Are you sure you want to perform this action?

Moving mailbox database "DAG-DB0" from server "DAG-1.domain.com" to server "DAG-2.domain.com".

[Y] Yes [A] Yes to All [N] No [L] No to All [?] Help (default is "Y"): y

Identity ActiveServerAtS ActiveServerAtE Status NumberOfLogsLost RecoveryPoint MountStatus MountStatus

tart nd Objective AtMoveStart AtMoveEnd

-------- --------------- --------------- ------ ---------------- ------------- ----------- -----------

DAG-DB0 dag-1 dag-2 Succeeded 922337203685472... 5/29/2012 ... Dismounted Dismounted

At this point, the database can be mounted on the remote server after moving the active copy.

Of course, the ultimate question is: why 9223372036854725486? The value for CQL must be a 64-bit integer that cannot be null; therefore, we chose a value close to maxInt64 that is so large that it prevents a potentially out-of-date copy from being activated.