Exchange 2007 - Standby clustering with pre-staged resources (part 2)

Recently I’ve worked with several Exchange 2007 customers that are using storage replication solutions with a Single Copy Cluster (SCC) as part of their site resiliency and disaster recovery strategy for Exchange data. As part of these implementations, customers are pre-staging clusters in their standby datacenters and creating Exchange clustered resources on those clusters.

In general, two configurations are typically seen:

1. The same clustered mailbox server (CMS) is recovered to a standby cluster.

2. An alternate CMS is installed and mailboxes are moved to the standby cluster.

In part 1 of this series, I addressed the first method: recovering the original CMS to a standby cluster.

In this part, I will address the second method.

First, let’s take a look at the topology.

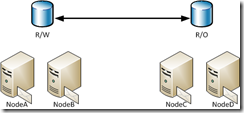

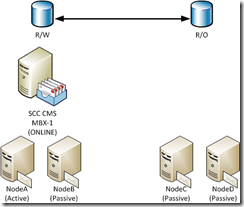

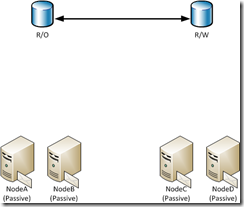

In my primary site, I establish a two-node shared-storage cluster with NodeA and NodeB. In my remote datacenter, I establish a second two-node shared-storage cluster with NodeC and NodeD. Third-party storage replication technology is used to replicate the storage from the primary site to the remote site.

Figure 1 - Implementation prior to introduction of CMS

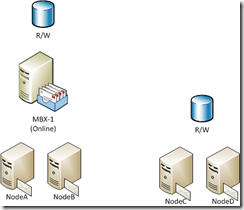

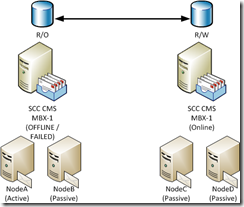

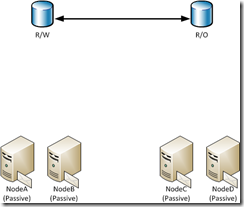

On the primary cluster, I install a CMS named MBX-1 in an SCC configuration and create my desired storage groups and databases. This in turn creates the associated cluster resources for the database instances (in Exchange 2007, each database has an associated clustered resource called a Microsoft Exchange Database Instance).

Figure 2 - Implementation after introduction of CMS in primary site

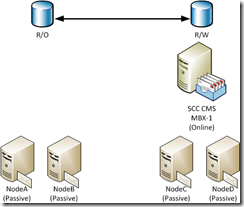

After establishing the resources in the primary site, the administrator prepares the secondary site. In the secondary site, a new CMS is created (for our example, named MBX-2). The storage groups and databases on MBX-2 are created to mirror the configuration on MBX-1.

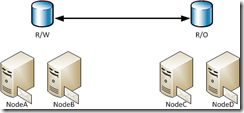

Figure 3 - Implementation after introduction of the CMS in the remote site

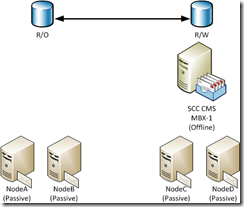

Once the CMS has been established in the remote site, it is taken offline. At this point, the read/write storage assigned to the remote cluster is placed under the control of the storage replication solution and synchronized. The disks in turn are now read-only to the cluster in the remote site.

Figure 4 - Implementation after storage replication solution

Because these solutions are often used for site resilience, when a failure of the primary cluster or site occurs, the administrator will perform the following steps to activate the standby cluster.

- Ensure that resources are offline on the primary site cluster.

- Change storage from R/O to R/W in the remote site.

- Bring resources online on the remote cluster and allow log recovery to bring the databases to a consistent state.

- Perform a Move-Mailbox -ConfigurationOnly on each of the affected mailboxes.

Often these steps work without any issues. Recently, however, I’ve worked on several cases where this process does not work, for the following reasons.

1. Resource configuration on the remote cluster is static.

Each database on a CMS has an associated clustered resource. When pre-staging the standby cluster, you are copying the configuration that existed at that time. Often, the configuration of the CMS on the primary cluster will change over time. I have worked with customers who added storage groups and databases to the CMS on the primary cluster after the standby cluster was configured. This results in clustered resources missing from the standby cluster.

To resolve this problem, some administrators have attempted to manually create clustered resources for the missing database instances. Unfortunately, this is not supported, and it results in the administrator having to follow a process similar to the one I recommend below.
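Detecting this drift is straightforward, even though fixing it by hand is not supported. As a rough illustration, the Python sketch below assumes you have exported the database list from each CMS (for example with Get-MailboxDatabase) into simple "StorageGroup/Database" strings; the function name and data shape are hypothetical. It only reports the gap between the two configurations.

```python
def compare_instances(primary_dbs: set[str], standby_instances: set[str]) -> tuple[set[str], set[str]]:
    """Compare databases on the primary CMS with pre-staged standby resources.

    Returns (missing, stale):
      missing: databases with no Database Instance resource on the standby
      stale:   standby resources whose database no longer exists on the primary
    """
    missing = primary_dbs - standby_instances
    stale = standby_instances - primary_dbs
    return missing, stale


# Hypothetical inventory: SG3/DB3 was added to MBX-1 after MBX-2 was pre-staged.
primary = {"SG1/DB1", "SG2/DB2", "SG3/DB3"}
standby = {"SG1/DB1", "SG2/DB2"}
missing, stale = compare_instances(primary, standby)
print(sorted(missing), sorted(stale))  # ['SG3/DB3'] []
```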

2. Issues when applying Exchange Service Packs

When applying Exchange service packs to a CMS, the final step is to run /upgradeCMS. In order for /upgradeCMS to be considered successful (defined as the upgrade process reporting success and the CMS watermark being cleared from the registry), all of the resources on the cluster must be brought online.

For the primary cluster this does not present any issues. However, it is an issue for the standby cluster. On the standby cluster the following resources will not be able to come online:

· Physical Disk Resources – these resources in the remote site cluster are R/O and cannot be brought online for the cluster upgrade

· Network Name Resource – this would result in a duplicate name on the network

Therefore, /upgradeCMS will fail. To resolve this condition, an administrator must either take the primary cluster offline or isolate the standby cluster from the primary cluster in order to complete the upgrade.
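Why a couple of blocked resources are enough to fail the whole upgrade becomes clearer when you model dependencies. The Python sketch below uses a deliberately simplified, hypothetical dependency graph (the real resource set and dependencies of a CMS differ) and propagates failures: anything that depends, directly or indirectly, on a resource that cannot come online stays offline as well.

```python
def cannot_come_online(deps: dict[str, list[str]], blocked: set[str]) -> set[str]:
    """Return every resource that cannot come online.

    deps maps each resource to the resources it depends on. A resource fails
    if it is directly blocked or if any resource it depends on fails.
    """
    failed = set(blocked)
    changed = True
    while changed:  # propagate failures until nothing new is added
        changed = False
        for resource, needs in deps.items():
            if resource not in failed and any(n in failed for n in needs):
                failed.add(resource)
                changed = True
    return failed


# Simplified, hypothetical dependency graph for the standby CMS.
deps = {
    "IP Address": [],
    "Network Name": ["IP Address"],
    "Physical Disk S:": [],
    "Information Store": ["Network Name", "Physical Disk S:"],
    "Database Instance SG1/DB1": ["Information Store", "Physical Disk S:"],
}
# On the standby, per the text above: disks are R/O and the network name cannot come online.
failed = cannot_come_online(deps, {"Physical Disk S:", "Network Name"})
upgrade_ok = not failed  # /upgradeCMS needs every resource online
print(upgrade_ok)  # False
```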

3. Logging recovery fails due to mismatched log generations.

When a storage group is created, Exchange assigns it the next available log file prefix (referred to here as the log generation), for example E00, E01, and so on. When storage groups and databases are created on the two clusters, the configuration must be an exact mirror. The following factors must be considered:

- Each database is created in the corresponding storage group on both CMSs.

- Log generations between the primary and secondary cluster must match. For example, if DB1 is in SG1 with log generation E00 on the primary cluster, DB1 must be associated with the storage group that uses log generation E00 on the secondary cluster.

- Storage group paths must match between primary and secondary cluster.

- Database paths must match between primary and secondary cluster.

- Physical database file names must match between primary and secondary cluster.

If any of these conditions is not met, the databases may not mount automatically. In some cases this is recoverable if the administrator runs recovery manually using eseutil /r. This implementation has no built-in checks to ensure that any of the above are correct, so the administrator must manage this configuration entirely.
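Since there are no built-in checks, a simple comparison script can catch mismatches before they matter. The sketch below assumes the values for each database have been exported from both CMSs (for example from Get-StorageGroup and Get-MailboxDatabase output) into a hypothetical DbConfig record; the field names are my own, and values are compared case-insensitively because Windows paths are case-insensitive.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class DbConfig:
    """Settings that must match between the primary and standby CMS."""
    storage_group: str
    log_prefix: str   # e.g. "E00"
    log_folder: str
    db_folder: str
    edb_file: str


def mirror_problems(primary: dict[str, DbConfig], standby: dict[str, DbConfig]) -> list[str]:
    """List every mismatch between the two CMS configurations, keyed by database."""
    problems = []
    for db in sorted(primary):
        p, s = primary[db], standby.get(db)
        if s is None:
            problems.append(f"{db}: not present on standby")
            continue
        for f in fields(DbConfig):
            pv, sv = getattr(p, f.name), getattr(s, f.name)
            # Case-insensitive comparison, since Windows paths are case-insensitive.
            if pv.casefold() != sv.casefold():
                problems.append(f"{db}: {f.name} {pv!r} != {sv!r}")
    return problems


db1 = DbConfig("SG1", "E00", r"L:\SG1", r"S:\SG1", "DB1.edb")
print(mirror_problems({"DB1": db1}, {"DB1": db1}))  # []
swapped = DbConfig("SG1", "E01", r"L:\SG1", r"S:\SG1", "DB1.edb")
print(mirror_problems({"DB1": db1}, {"DB1": swapped}))
```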

4. Activation of the secondary cluster results in blank mailboxes.

Remember that in order for clients to use the secondary cluster, an administrator must run the Move-Mailbox command with -ConfigurationOnly. This command essentially updates the properties of an Active Directory account/mailbox to point it at a new mailbox store. There are no automated checks to ensure the user is moved to the correct target database on the remote cluster. If the administrator specifies the wrong database, the next time the user’s Outlook client logs on, it will essentially be logging on to a blank mailbox (this applies to OWA and to Outlook in online mode). Offline mode clients may fail to open with a recovery mode error. Transport will also begin delivering mail to this new mailbox as the configuration is replicated.
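Because nothing checks the target database for you, it is worth validating the plan before running any commands. A minimal sketch in Python, assuming you maintain three hypothetical mappings: each user’s source database on MBX-1, the target you plan to pass to Move-Mailbox, and the primary-to-standby database mirror. User names and database paths are illustrative only.

```python
from typing import Optional


def wrong_targets(source_db: dict[str, str], planned_target: dict[str, str],
                  mirror: dict[str, str]) -> dict[str, tuple[str, Optional[str]]]:
    """Return users whose planned target is not the mirror of their source database.

    source_db:      user -> database on the primary CMS
    planned_target: user -> database the administrator intends to use
    mirror:         primary database -> corresponding standby database
    Each result maps user -> (expected target, planned target or None if unplanned).
    """
    bad = {}
    for user, src in source_db.items():
        # KeyError here means the source database has no mirror at all,
        # which is itself the configuration drift described earlier.
        expected = mirror[src]
        planned = planned_target.get(user)
        if planned != expected:
            bad[user] = (expected, planned)
    return bad


mirror = {r"MBX-1\SG1\DB1": r"MBX-2\SG1\DB1", r"MBX-1\SG2\DB2": r"MBX-2\SG2\DB2"}
source = {"alice": r"MBX-1\SG1\DB1", "bob": r"MBX-1\SG2\DB2", "carol": r"MBX-1\SG2\DB2"}
plan = {"alice": r"MBX-2\SG1\DB1", "bob": r"MBX-2\SG1\DB1"}  # bob is wrong, carol is missing
print(wrong_targets(source, plan, mirror))
```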

5. Activation requires changing multiple attributes of individual user accounts.

Although it is fully supported, I personally do not prefer the Move-Mailbox -ConfigurationOnly switch. With this process, every user being moved must be touched, and each edit must replicate fully throughout the directory for the process to be successful. (Compare the scope of changes here to that required by my recommended method using /recoverCMS.)
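To put the scope in concrete terms, the Python sketch below generates one Move-Mailbox -ConfigurationOnly command per mailbox from a hypothetical user-to-database mapping. It only produces PowerShell text and runs nothing; the point is that the number of directory objects changed grows with the number of mailboxes.

```python
def configuration_only_commands(source_db: dict[str, str], mirror: dict[str, str]) -> list[str]:
    """Return one PowerShell command line per mailbox (text only, nothing is run)."""
    return [
        f'Move-Mailbox -Identity "{user}" -TargetDatabase "{mirror[db]}" -ConfigurationOnly'
        for user, db in sorted(source_db.items())
    ]


# Hypothetical mapping of a primary database to its mirror on MBX-2.
mirror = {r"MBX-1\SG1\DB1": r"MBX-2\SG1\DB1"}
# 5,000 hypothetical users on one database still means 5,000 separate object changes.
users = {f"user{i:04d}": r"MBX-1\SG1\DB1" for i in range(5000)}
commands = configuration_only_commands(users, mirror)
print(len(commands))  # 5000
print(commands[0])    # Move-Mailbox -Identity "user0000" -TargetDatabase "MBX-2\SG1\DB1" -ConfigurationOnly
```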

6. Legacy clients will not automatically redirect.

In order for this process to be successful, Outlook 2007 or newer must be deployed. Legacy clients, such as Outlook 2003, have no knowledge of Autodiscover. Without being able to contact the source Information Store service and receive a notification that a “move” has occurred, these clients will not update their profiles automatically. Outlook 2007 and newer will consult Autodiscover and learn of the move.
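A quick way to size this problem is to check client versions against the Autodiscover cut-off. The sketch below assumes a hypothetical inventory of Outlook major version numbers per machine (11 is Outlook 2003, 12 is Outlook 2007, 14 is Outlook 2010); machine names are illustrative.

```python
# Outlook major versions: 11 = Outlook 2003, 12 = Outlook 2007, 14 = Outlook 2010.
AUTODISCOVER_MIN_MAJOR = 12


def needs_manual_profile_update(outlook_major_version: int) -> bool:
    """True if the client cannot learn about the move through Autodiscover."""
    return outlook_major_version < AUTODISCOVER_MIN_MAJOR


inventory = {"desk-01": 11, "desk-02": 12, "laptop-07": 14}  # hypothetical machines
to_fix = sorted(h for h, v in inventory.items() if needs_manual_profile_update(v))
print(to_fix)  # ['desk-01']
```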

Obviously, this process could cause some longer-term issues in the environment after it is initially established. So I want to outline a process that I’ve recommended in these environments. The first few parts of the process are the same as above:

1. In my primary site, I establish a two-node shared-storage cluster with NodeA and NodeB. In my remote datacenter, I establish a second two-node shared-storage cluster with NodeC and NodeD. Third-party storage replication technology is used to replicate the storage from the primary site to the remote site.

Figure 5 - Implementation prior to introduction of CMS

2. On the primary cluster, I install a CMS named MBX-1 in an SCC configuration and create my desired storage groups and databases. This in turn creates the associated cluster resources for the database instances.

3. From a storage standpoint, the disks connected to the primary cluster are in read-write mode and the disks connected to the standby cluster are in read-only mode.

Figure 6 - Implementation after introduction of CMS in primary site

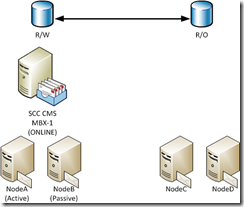

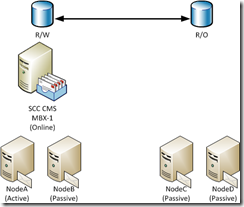

4. On the standby cluster, I prepare each node by installing and configuring the SCC, but instead of performing a /recoverCMS operation, I install only the passive mailbox server role on each node. This is done by running setup.com /mode:install /roles:mailbox. This process puts the Exchange program files on the system, performs cluster registrations, and prepares the nodes to accept a CMS at a later time.

Figure 7 - Implementation after introduction of CMS in primary site and passive role installation on clustered nodes in remote site

At this point, all preparation for the two sites is completed. When a failure occurs and a decision is made to activate the standby cluster I recommend that customers use the following procedure:

1. Ensure that all CMS resources on the primary cluster are offline.

2. Change the replication direction to allow the disks in the remote site to be R/W and the disks in the primary site to be R/O.

Figure 8 – Storage direction changed

3. Use the Exchange installation media to run the /recoverCMS process and establish the CMS on the standby cluster.

setup.com /recoverCMS /cmsName:<NAME> /cmsIPV4Addresses:<IPAddress,IPAddress>

Figure 9 – Cluster configuration recovered to standby cluster.

4. Move disks into appropriate groups and update resource dependencies as necessary.

At this point, the resources have been established on the standby cluster and clients should be able to resume connectivity.
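Because a typo in the CMS name or an address directly affects whether clients can reconnect, it can help to prepare the command line ahead of time. The following Python sketch is one hypothetical way to build the /recoverCMS command used in step 3 from a name and a list of IPv4 addresses, validating the addresses with the standard ipaddress module. It only produces text and does not run setup. The 15-character check reflects the NetBIOS limit on cluster network names and is an extra sanity check I’ve added, not a description of what setup itself validates; the addresses are hypothetical.

```python
import ipaddress


def recover_cms_command(cms_name: str, ipv4_addresses: list[str]) -> str:
    """Build the setup.com /recoverCMS command line (text only, not executed)."""
    # A cluster network name is also a NetBIOS name, limited to 15 characters.
    if not cms_name or len(cms_name) > 15:
        raise ValueError(f"invalid CMS name: {cms_name!r}")
    if not ipv4_addresses:
        raise ValueError("at least one IPv4 address is required")
    # IPv4Address raises AddressValueError (a ValueError subclass) on bad input.
    addrs = [str(ipaddress.IPv4Address(a)) for a in ipv4_addresses]
    return f"setup.com /recoverCMS /cmsName:{cms_name} /cmsIPV4Addresses:{','.join(addrs)}"


# Hypothetical addresses on the standby site's subnets.
print(recover_cms_command("MBX-1", ["192.168.20.50", "192.168.21.50"]))
```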

Assuming that the primary site will come back up and the original nodes are available, the following process can be used to prepare the nodes in the primary site.

1. Ensure that the disks and network name do not come online. This can be accomplished by ensuring that the nodes have no network connectivity.

2. On the node that shows as the owner of the offline Exchange CMS group, run the command setup.com /clearLocalCMS. This clears the local cluster configuration from those nodes and removes the CMS resources. The physical disk resources are retained in a renamed cluster group.

Figure 10 – Clustered mailbox server resources cleared from primary site cluster.

3. Ensure that storage replication is in place, healthy, and that a full synchronization of changes has occurred.

4. Schedule downtime to accomplish the failback to the source nodes.
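These preparation steps amount to a go/no-go checklist before scheduling downtime. A minimal sketch, assuming the status values are collected by hand or from your storage vendor’s tools; the class and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FailbackReadiness:
    """Hypothetical status gathered by the administrator before failback."""
    primary_isolated: bool       # disks and network name kept offline in the primary site
    primary_cms_cleared: bool    # setup.com /clearLocalCMS completed on the primary cluster
    replication_healthy: bool    # as reported by the storage vendor's tools
    unsynchronized_bytes: int    # changes not yet copied back to the primary site


def failback_blockers(r: FailbackReadiness) -> list[str]:
    """Return the reasons failback downtime should not be scheduled yet."""
    blockers = []
    if not r.primary_isolated:
        blockers.append("primary disks or network name could come online")
    if not r.primary_cms_cleared:
        blockers.append("run setup.com /clearLocalCMS on the primary cluster")
    if not r.replication_healthy:
        blockers.append("storage replication is not healthy")
    if r.unsynchronized_bytes > 0:
        blockers.append("full synchronization has not completed")
    return blockers


print(failback_blockers(FailbackReadiness(True, True, True, 0)))  # []
```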

During this downtime, use the following steps to re-establish services in the primary site.

1. Take the CMS offline in the remote site.

Figure 11 – Clustered mailbox server resources in remote site taken offline.

2. On the node owning the Exchange resource group in the remote site cluster, run the setup.com /clearLocalCMS command. This removes the clustered instance from the remote cluster.

Figure 12 – Clustered mailbox server resources cleared from the remote site cluster.

3. Change the replication direction to allow the disks in the primary site to be R/W and the disks in the remote site to be R/O.

Figure 13 – Storage replication direction changed.

4. Using the setup media, run the /recoverCMS command to establish the clustered resources on the primary cluster.

setup.com /recoverCMS /cmsName:<NAME> /cmsIPV4Addresses:<IPAddress,IPAddress>

Figure 14 – Clustered mailbox server configuration recovered to primary site cluster.

5. Move disks into appropriate groups and update dependencies as necessary.

6. Clients should be able to resume connectivity when this process is completed.
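The order of the failback steps matters: the CMS should be defined on only one cluster at a time, and /recoverCMS needs writable disks. The toy Python model below (a hypothetical class, not an Exchange or storage API) encodes those two rules and raises an error if a step is skipped. It starts from the state after the preparation steps, where the primary cluster has already been cleared.

```python
class Sites:
    """Toy model: which cluster defines the CMS and which site's disks are R/W."""

    def __init__(self) -> None:
        # After failover and preparation: CMS runs in the remote site,
        # and /clearLocalCMS has already been run on the primary cluster.
        self.cms_defined = {"primary": False, "remote": True}
        self.writable = {"primary": False, "remote": True}
        self.cms_online = True

    def take_offline(self) -> None:
        self.cms_online = False

    def clear_local_cms(self, site: str) -> None:
        if self.cms_online:
            raise RuntimeError("take the CMS offline before /clearLocalCMS")
        self.cms_defined[site] = False

    def reverse_replication(self, to_site: str) -> None:
        if self.cms_online:
            raise RuntimeError("cannot change replication direction while the CMS is online")
        for site in self.writable:
            self.writable[site] = site == to_site

    def recover_cms(self, site: str) -> None:
        if not self.writable[site]:
            raise RuntimeError(f"{site} disks are read-only")
        if any(defined for s, defined in self.cms_defined.items() if s != site):
            raise RuntimeError("CMS is still defined on the other cluster")
        self.cms_defined[site] = True
        self.cms_online = True


sites = Sites()
sites.take_offline()                  # step 1
sites.clear_local_cms("remote")       # step 2
sites.reverse_replication("primary")  # step 3
sites.recover_cms("primary")          # step 4
print(sites.cms_defined, sites.writable)
```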

How does this address the issues that I’ve outlined above?

1. The /recoverCMS process is a fully supported method to recover a CMS between nodes.

2. The /recoverCMS process will always recreate resources based on the configuration information in the directory. If databases are added to the primary cluster, the appropriate resources will be populated on the standby cluster when /recoverCMS is run. Similarly, if the CMS runs on the standby cluster for an extended period of time, and additional resources are created there, they will be added to the primary cluster when it is restored to service.

3. Service pack upgrades can be performed without having any special configuration. On the primary cluster you follow the standard practice of upgrading the program files with setup.com /mode:upgrade and then upgrading the CMS using setup.com /upgradeCMS. The nodes in the standby cluster are independent passive role installations and can be upgraded by using setup.com /mode:upgrade.

4. Legacy clients can connect without any changes, because the CMS name is unchanged and no profile information needs to be updated (once DNS changes reflecting the new IP address of the clustered mailbox server have replicated).
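The service pack difference described in item 3 can be summarized as a per-node plan. This is a simplified sketch with the node names used above; the function is hypothetical, and it lists which commands each node needs without modeling the rolling order used within the primary cluster.

```python
def service_pack_plan(primary_nodes: list[str], standby_nodes: list[str],
                      cms_node: str) -> list[tuple[str, str]]:
    """Return (node, command) pairs for applying a service pack in this design."""
    if cms_node not in primary_nodes:
        raise ValueError("/upgradeCMS runs on a node of the cluster hosting the CMS")
    # Program files are upgraded on every primary node. The order in which
    # these nodes are upgraded is not modeled here.
    plan = [(node, "setup.com /mode:upgrade") for node in primary_nodes]
    # The CMS itself is upgraded once, from the cluster that hosts it.
    plan.append((cms_node, "setup.com /upgradeCMS"))
    # Standby nodes hold only the passive role, so there is no CMS to upgrade there.
    plan += [(node, "setup.com /mode:upgrade") for node in standby_nodes]
    return plan


for node, command in service_pack_plan(["NodeA", "NodeB"], ["NodeC", "NodeD"], "NodeA"):
    print(node, command)
```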