Considerations when using mountpoints with Exchange 2007 clusters.

Many installations today, especially clustered installations, use mountpoints.

When using mountpoints with clustering, the physical disk resources created for the mountpoints must be dependent on the lettered physical volume that hosts them.

Mountpoints also require that the lettered physical volume hosting them be available at all times, because Exchange uses the full path, for example X:\MountPoint\data…
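The dependency rule can be checked mechanically. Below is a minimal Python sketch, assuming you have already exported each mountpoint's physical disk resource, its mount path, and its current dependency list into a dictionary. The resource names and paths are hypothetical, and the script does not query a real cluster; it derives the hosting lettered volume from the full mount path and flags any mountpoint resource that is missing a dependency on that volume's disk resource.

```python
from pathlib import PureWindowsPath

# Hypothetical layout: resource names and paths are illustrative only.
# Maps each lettered volume to the name of its physical disk resource.
ROOT_DISKS = {"X:": "Disk X:", "Y:": "Disk Y:"}

# Each mountpoint disk resource: its mount path and the resources it depends on.
MOUNTPOINTS = {
    "Disk SG1 DB": {"path": r"X:\MountPoint\SG1DB", "depends_on": ["Disk X:"]},
    "Disk SG1 Log": {"path": r"Y:\MountPoint\SG1Log", "depends_on": []},  # dependency missing
}


def host_disk(mount_path):
    """Return the disk resource of the lettered volume that hosts mount_path."""
    drive = PureWindowsPath(mount_path).drive  # e.g. "X:"
    return ROOT_DISKS[drive]


def missing_dependencies(mountpoints):
    """List (mountpoint resource, required host disk) pairs whose dependency is absent."""
    problems = []
    for name, info in mountpoints.items():
        required = host_disk(info["path"])
        if required not in info["depends_on"]:
            problems.append((name, required))
    return problems


if __name__ == "__main__":
    for name, required in missing_dependencies(MOUNTPOINTS):
        print(f"{name} should depend on {required}")
```

With the sample data, only the log mountpoint is reported, because it lacks a dependency on the Y: disk resource.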

I want to highlight a design consideration to keep in mind when using mountpoints.

I commonly see two designs that use mountpoints.

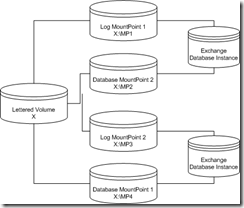

- A single lettered volume and all mountpoints created off that volume.

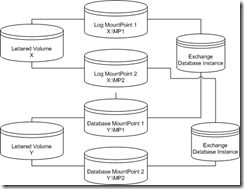

- Two lettered volumes: database mountpoints created off one lettered volume, and log mountpoints created off the second lettered volume.

These two designs give logical (and, depending on the storage design, possibly physical) separation between the storage for databases and the storage for log files. They also limit the number of lettered physical volumes required to support the solution.

The issue they introduce, however, is a single point of failure.

In the first example, the X drive must always be available for the mountpoints to function. If the X drive is lost, all mountpoints associated with it are lost, and so are the database instances dependent on those mountpoints.

The second example has the same single point of failure. Losing either the X or the Y disk makes the mountpoints hosted on that disk unavailable. Since an Exchange database instance depends on both the mountpoint hosting the database and the mountpoint hosting the logs, every database instance becomes unavailable.

You can increase the availability of the overall solution by spreading the mountpoints across a series of lettered volumes. For example, take a solution with 25 storage groups. You could create 5 lettered volumes and, off each of them, create 10 mountpoints. Each mountpoint pair serves as the database and log drives for a single database instance, so each lettered volume hosts 5 database instances. The loss of a single lettered volume would then affect only the users hosted in 5 of the databases in the solution, versus all 25 databases being affected with either of the previous designs.
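The arithmetic can be checked with a small model. This is a minimal Python sketch, assuming one database per storage group and treating each database instance as a pair of hosting volumes (database mountpoint root, log mountpoint root); the drive letters M: through Q: for the spread design are hypothetical. A database is counted as lost when the volume hosting either of its mountpoints fails, which is the dependency described above.

```python
NUM_DATABASES = 25  # one database per storage group, as in the example
SPREAD_VOLUMES = ["M:", "N:", "O:", "P:", "Q:"]  # hypothetical drive letters


def single_volume(n):
    """Design 1: every database and log mountpoint is created off X:."""
    return {f"SG{i + 1}": ("X:", "X:") for i in range(n)}


def split_db_log(n):
    """Design 2: database mountpoints off X:, log mountpoints off Y:."""
    return {f"SG{i + 1}": ("X:", "Y:") for i in range(n)}


def spread(n, letters):
    """Spread design: each database/log mountpoint pair shares one lettered
    volume, and the pairs are divided evenly across the given volumes."""
    per_volume = -(-n // len(letters))  # ceiling division: databases per volume
    return {f"SG{i + 1}": (letters[i // per_volume],) * 2 for i in range(n)}


def databases_lost(layout, failed_volume):
    """A database instance needs BOTH its database and its log mountpoint,
    so it goes offline if the volume hosting either one is lost."""
    return [sg for sg, roots in layout.items() if failed_volume in roots]


def worst_case(layout):
    """Largest number of databases lost when any single lettered volume fails."""
    volumes = {v for roots in layout.values() for v in roots}
    return max(len(databases_lost(layout, v)) for v in volumes)


if __name__ == "__main__":
    designs = {
        "single lettered volume": single_volume(NUM_DATABASES),
        "separate database and log volumes": split_db_log(NUM_DATABASES),
        "spread across 5 volumes": spread(NUM_DATABASES, SPREAD_VOLUMES),
    }
    for name, layout in designs.items():
        print(f"{name}: up to {worst_case(layout)} of {NUM_DATABASES} databases lost")
```

Run as a script, it reports a worst case of 25, 25 and 5 lost databases for the three designs, matching the figures in the example. In the spread layout each volume hosts 5 database/log pairs, that is, 10 mountpoints.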

Here is a small pictorial example of this concept.

Another consideration: when a root volume hosting mountpoints is lost and subsequently replaced, the administrator must manually recreate the necessary folder structure and map the mountpoints back. Spreading the mountpoints out reduces the amount of work needed to restore the solution, because only the mountpoints hosted on the failed volume have to be rebuilt.
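To gauge the rebuild effort, here is a minimal Python sketch that lists the mount folders you would have to recreate after replacing a given root volume. The folder naming (`\MountPoint\SG<n>DB`, `\MountPoint\SG<n>Log`) and the drive letters are hypothetical; the script only builds a checklist and does not create folders or remap any mountpoints.

```python
from pathlib import PureWindowsPath

NUM_SG = 25  # storage groups, each with a database and a log mountpoint

# Hypothetical folder layouts for two of the designs discussed above.
single_volume_paths = [
    rf"X:\MountPoint\SG{i}{kind}" for i in range(1, NUM_SG + 1) for kind in ("DB", "Log")
]
spread_letters = ["M:", "N:", "O:", "P:", "Q:"]
spread_paths = [
    rf"{spread_letters[(i - 1) // 5]}\MountPoint\SG{i}{kind}"  # 5 storage groups per volume
    for i in range(1, NUM_SG + 1)
    for kind in ("DB", "Log")
]


def rebuild_checklist(mount_paths, replaced_drive):
    """Return the mount folders that must be recreated on replaced_drive (e.g. "X:")."""
    return [p for p in mount_paths if PureWindowsPath(p).drive == replaced_drive]


if __name__ == "__main__":
    for label, paths, drive in [
        ("single lettered volume", single_volume_paths, "X:"),
        ("spread across 5 volumes", spread_paths, "M:"),
    ]:
        todo = rebuild_checklist(paths, drive)
        print(f"{label}: replacing {drive} means recreating {len(todo)} mount folders")
```

Replacing X: in the single-volume design means recreating all 50 mount folders, while replacing one volume in the spread design means recreating only 10.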

By reducing single points of failure, you can increase the overall availability of the solution.