Rethinking Enterprise Storage: Leapfrogging Backup with Cloud

Recently, Microsoft published a book titled Rethinking Enterprise Storage – A Hybrid Cloud Model. The book takes a close look at an innovative infrastructure storage architecture called hybrid cloud storage.

Last week we published excerpts from Chapter 1. This week we provide excerpts from Chapter 2, Leapfrogging Backup with Cloud Snapshots. Over the next several weeks on this blog, we will continue to publish excerpts from each chapter of this book via a series of posts. We think this is valuable information for all IT professionals, from executives responsible for determining IT strategies to administrators who manage systems and storage. We would love to hear from you and we encourage you to provide questions, comments and suggestions.

As you read this material, we also want to remind you that the Microsoft StorSimple 8000 series provides customers with an innovative, game-changing hybrid cloud storage architecture, and it is quickly becoming a standard for many global corporations that are deploying hybrid cloud storage.

Here are the chapters we will excerpt in this blog series:

Chapter 1 Rethinking enterprise storage

Chapter 2 Leapfrogging backup with cloud snapshots

Chapter 3 Accelerating and broadening disaster recovery protection

Chapter 4 Taming the capacity monster

Chapter 5 Archiving data with the hybrid cloud

Chapter 6 Putting all the pieces together

Chapter 7 Imagining the possibilities with hybrid cloud storage

That’s a Wrap! Summary and glossary of terms for hybrid cloud storage

So, without further ado, here are some excerpts from Chapter 2 of Rethinking Enterprise Storage – A Hybrid Cloud Model:

Chapter 2 Leapfrogging Backup with Cloud Snapshots

When catastrophes strike IT systems, the IT team relies on backup technology to put data and systems back in place. Systems administrators spend many hours managing backup processes and media. Despite all the work that they do to prepare for the worst, most IT team members worry about how things would work out in an actual disaster.

The inefficiencies and risks of backup processes

If cloud storage had existed decades ago, it’s unlikely that the industry would have developed the backup processes that are commonly used today. However, the cloud didn’t exist, and IT teams had to come up with ways to protect data from a wide range of threats, including large storms, power outages, computer viruses, and operator errors. That’s why vendors and IT professionals developed backup technologies and best practices to make copies of data and store them off site in remote facilities where they could be retrieved after a disaster. A single “backup system” is constructed from many different components that must be implemented and managed correctly for backup to achieve its ultimate goal: the ability to restore the organization’s data after a disaster has destroyed it.

Many companies have multiple, sometimes incompatible, backup systems and technologies protecting different types of computing equipment. Many standards were developed over the years, prescribing various technologies, such as tape formats and communication interfaces, to achieve basic interoperability. Despite these efforts, IT teams have often had a difficult time recognizing the commonality between their backup systems. To many, it is a byzantine mess of arcane processes.

The many complications and risks of tape

Magnetic tape technology was adopted for backup many years ago because it met most of the physical storage requirements, primarily by being portable so that it could be transported to an off-site facility. This gave rise to a sizeable ecosystem of related backup technologies and services, including tape media, tape drives, autoloaders, large scale libraries, device and subsystem firmware, peripheral interfaces, protocols, cables, backup software with numerous agents and options, off-site storage service providers, courier services, and a wide variety of consulting practices to help companies of all sizes understand how to implement and use it all effectively.

Tape media

Tape complexity starts with its physical construction. In one respect, it is almost miraculous that tape engineers have been able to design and manufacture media that meets so many challenging and conflicting requirements. Magnetic tape is a long ribbon of multiple laminated layers, including a microscopically jagged layer of extremely small metallic particles that record the data and a super-smooth base layer of polyester-like material that gives the media its strength and flexibility. It must be able to tolerate being wound and unwound and pulled and positioned through a high-tension alignment mechanism without losing the integrity of its dimensions. Manufacturing data grade magnetic tapes involves sophisticated chemistry, magnetics, materials, and processes.

Unfortunately, there are many environmental threats to tape, mostly because metals tend to oxidize and break apart. Tape manufacturers are moving to increase the environmental range that their products can withstand, but historically, they have recommended storing them in a fairly narrow humidity and temperature range. There is no question that the IT teams with the most success using tape take care to restrict its exposure to increased temperatures and humidity. Also, as the density of tape increases, vibration during transport has become a factor, resulting in new packaging and handling requirements. Given that tapes are stored in warehouses prior to being purchased and that they are regularly transported by courier services and stored off-site, there are environmental variables beyond the IT team’s control—and that makes people suspicious of its reliability.

Media management and rotation

Transporting tapes also exposes them to the risk of being lost, misplaced, or stolen. The exposure to the organization from lost tapes can be extremely negative, especially if they contain customer account information, financial data, or logon credentials. Businesses that have lost tapes in transit have not only had to pay for extensive customer notification and education programs, but they have also suffered damage to their reputations.

Synthetic full backups

An alternative to making full backup copies is to make what are called synthetic full copies, which aggregate data from multiple tapes or disk-based backups onto a tape (or tapes) that contains all the data that would be captured if a full backup were to be run. They reduce the time needed to complete backup processing, but they still consume administrative resources and suffer from the same gremlins that haunt all tape processes.
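The merge that produces a synthetic full can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how any particular backup product works: each backup is modeled as a hypothetical dictionary mapping file paths to contents, and a `None` value is an assumed convention for a file deleted since the previous backup.

```python
def synthesize_full(last_full, incrementals):
    """Merge a full backup with later incrementals, applied oldest first."""
    synthetic = dict(last_full)  # start from a copy of the last real full backup
    for incremental in incrementals:
        for path, content in incremental.items():
            if content is None:
                # Assumed convention: None marks a file deleted since the prior backup.
                synthetic.pop(path, None)
            else:
                synthetic[path] = content  # the newer version replaces the older one
    return synthetic


sunday_full = {"a.txt": "v1", "b.txt": "v1"}
monday = {"a.txt": "v2"}
tuesday = {"b.txt": None, "c.txt": "v1"}
synthetic_full = synthesize_full(sunday_full, [monday, tuesday])
```

The result holds what a full backup taken on Tuesday would have captured, without rereading the primary data. Every earlier backup still has to be readable for the merge to succeed, which is why the approach inherits the reliability problems of the underlying media.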

The real issue is why it should be necessary to make so many copies of data that have already been made so many times before. Considering the incredible advances in computing technology over the years, it seems absurd that more intelligence could not be applied to data protection, and it highlights the fundamental weakness of tape as a portable medium for off-site storage.

Restoring from tape

It would almost be comical if it weren’t so vexing, but exceptions are normal where recovering from tape is concerned. Things often go wrong with backup that keep it from completing as expected. It’s never a problem until it’s time to recover data, and then it can suddenly become extremely important in an unpleasant sort of way. Data that was skipped during backup cannot be recovered. Even worse, tape failures during recovery prevent data from being restored.

Backing up to disk

With all the challenges of tape, there has been widespread interest in using disk instead of tape as a backup target. At first glance, it would seem that simply copying files to a file server could do the job, but that doesn’t provide the ability to restore older versions of files. There are workarounds for this, but workarounds add complexity to something that is already complex enough.

Virtual tape: A step in the right direction

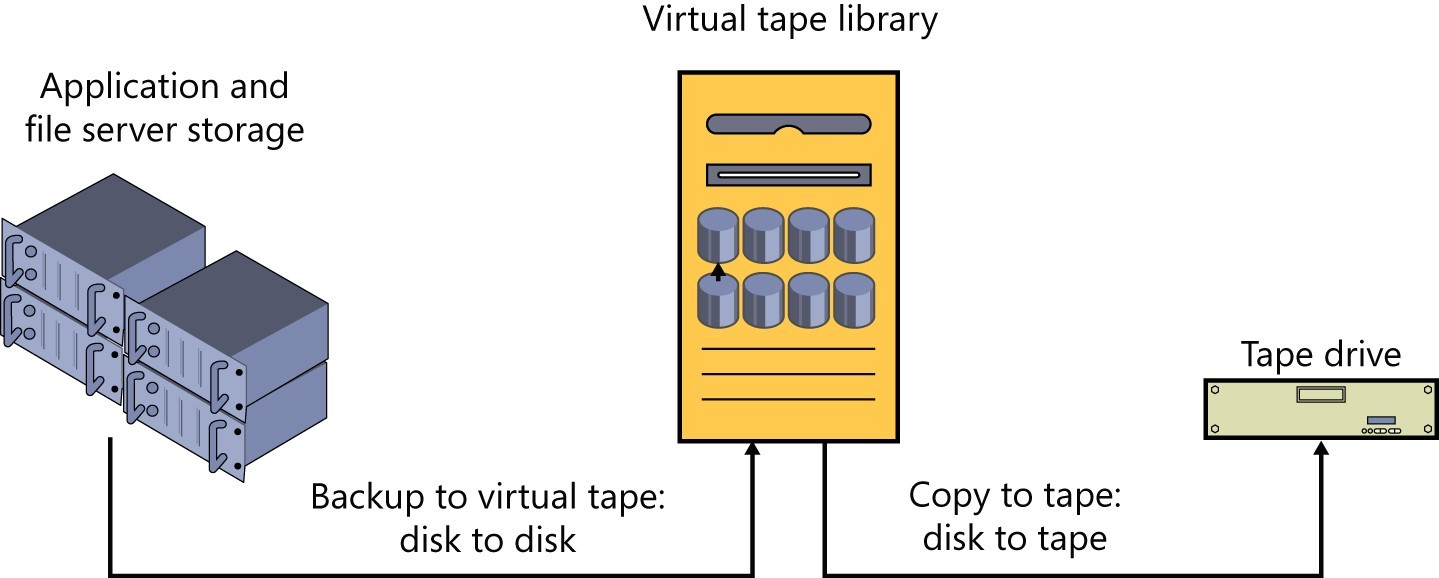

The desire to reduce the dependency on tape for recovery gave rise to the development of virtual tape libraries (VTLs) that use disk drives for storing backup data by emulating tapes and tape hardware. Off-site storage of backup data is accomplished by copying virtual tapes onto physical tapes and transporting them to an off-site facility. This backup design is called disk-to-disk-to-tape, or D2D2T, where the first disk (D) is in file server disk storage, the second disk (D) is in a virtual tape system, and tape refers to tape drives and media. Figure 2-1 shows a D2D2T backup design that uses a virtual tape system for storing backup data locally and generating tape copies for off-site storage.

FIGURE 2-1 Disk-to-disk-to-tape backup design.

Dedupe makes a big difference

A breakthrough in virtual tape technology came when dedupe technology was integrated with VTLs. Like previous-generation VTLs, dedupe VTLs require backup software products to generate backup data, but the dedupe function eliminates redundant data from backup streams. This translates directly into backup storage capacity savings and makes them much more cost-competitive with tape systems. Not only that, but dedupe VTLs improve backup performance by simultaneously backing up a larger number of servers and by keeping more backup copies readily available online. Many organizations happily replaced their tape backup systems with dedupe VTLs.
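To make the capacity savings concrete, here is a minimal Python sketch of content-based deduplication. It rests on assumptions that are not from the book: the backup stream arrives already split into chunks, SHA-256 identifies a chunk, and a plain dictionary stands in for the dedupe store. Real dedupe VTLs use their own chunking and indexing schemes.

```python
import hashlib


def dedupe_store(chunks, store):
    """Store each unique chunk once; return the list of keys needed to rebuild the stream."""
    recipe = []
    for chunk in chunks:
        key = hashlib.sha256(chunk).hexdigest()  # identical content yields an identical key
        if key not in store:
            store[key] = chunk  # only previously unseen data consumes capacity
        recipe.append(key)
    return recipe


store = {}
monday = dedupe_store([b"alpha", b"beta", b"alpha"], store)
tuesday = dedupe_store([b"alpha", b"beta", b"gamma"], store)
restored = b"".join(store[key] for key in tuesday)
```

Six chunks were backed up across the two runs, but only three are stored, and either backup can still be rebuilt in full from its list of keys.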

For the love of snapshots

Snapshot technology is an alternative to backup that was first made popular by NetApp in their storage systems. Snapshots are a system of pointers to internal storage locations that maintain access to older versions of data. Snapshots are commonly described as making point-in-time copies of data. With snapshots, storage administrators are able to recreate data as it existed at various times in the past.
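The pointer idea can be illustrated with a toy Python class. This is a sketch under simplifying assumptions, not NetApp's or any other vendor's implementation: the hypothetical `Volume` never overwrites a block in place, so a snapshot only has to copy the pointer map, not the data.

```python
class Volume:
    """Toy volume: writes go to new locations, snapshots freeze the pointer map."""

    def __init__(self):
        self.blocks = []     # append-only internal storage locations
        self.live = {}       # logical address -> index into self.blocks
        self.snapshots = {}  # snapshot name -> frozen copy of the pointer map

    def write(self, address, data):
        self.blocks.append(data)  # older data stays where it is
        self.live[address] = len(self.blocks) - 1

    def snapshot(self, name):
        self.snapshots[name] = dict(self.live)  # copies pointers, not data

    def read(self, address, snapshot=None):
        pointers = self.live if snapshot is None else self.snapshots[snapshot]
        return self.blocks[pointers[address]]


vol = Volume()
vol.write(0, "draft")
vol.snapshot("9am")
vol.write(0, "final")
current = vol.read(0)
as_of_9am = vol.read(0, snapshot="9am")
```

Reading through the live pointers returns the newest data, while reading through the "9am" pointers returns the data as it existed when that snapshot was taken. The older block is retained only because a snapshot still points to it, which is the source of the capacity overhead that snapshots carry.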

One problem with snapshots is that they consume additional storage capacity on primary storage that has to be planned for. The amount of snapshot data depends on the breadth of changed data and the frequency of snapshots. As data growth consumes more and more capacity, the amount of snapshot data also tends to increase, and IT teams may be surprised to discover they are running out of primary storage capacity. A remedy for this is deleting snapshot data, but that means fewer versions of data are available to restore than expected.

A big breakthrough: Cloud Snapshots

The Microsoft hybrid cloud storage (HCS) solution incorporates elements from backup, dedupe, and snapshot technologies to create a highly automated data protection system based on cloud snapshots. A cloud snapshot is like a storage snapshot, except that the snapshot data is stored in Microsoft Azure Storage instead of in a storage array. Cloud snapshots give system administrators a tool they already know and love, the snapshot, and extend it across the hybrid cloud boundary.

Fingerprints in the cloud

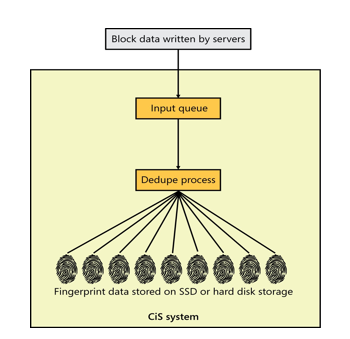

The data objects that are stored as snapshots in the cloud are called fingerprints. Fingerprints are logical data containers that are created early in the data lifecycle when data is moved out of the input queue in the cloud-integrated storage (CiS) system. While CiS systems store and serve block data to servers, they manage the data internally as fingerprints. Figure 2-2 illustrates how data written to the CiS system is converted to fingerprints.

FIGURE 2-2 Block data is converted to fingerprints in the CiS system.

Just as backup processes work by copying newly written data to tapes or disk, cloud snapshots work by copying newly made fingerprints to Microsoft Azure Storage. One of the biggest differences between backup and cloud snapshots is that backup transforms the data by copying it into a different data format, whereas cloud snapshots copy fingerprints as-is without changing the data format. This means that fingerprints in Microsoft Azure Storage can be directly accessed by the CiS system and used for any storage management purpose.

Cloud snapshots work like incremental-only backups insofar as fingerprints only need to be uploaded once to Microsoft Azure Storage. Replication services in Microsoft Azure Storage make multiple copies of the data as protection against failures. With most backup systems, there are many different backup data sets that need to be tracked and managed, but with cloud snapshots, there is only a single repository of fingerprints. In addition, there is no need to create synthetic full tapes because all the fingerprints needed for recovery are located in the same Microsoft Azure Storage bucket.
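The upload-once behavior can be sketched in Python. Everything here is a stand-in chosen for illustration: dictionaries play the roles of the CiS system and Microsoft Azure Storage, the fingerprint IDs are made up, and the hypothetical `cloud_snapshot` function does not call any real Azure or StorSimple API.

```python
def cloud_snapshot(local_fingerprints, cloud_store, snapshot_name, catalog):
    """Upload fingerprints not yet in the cloud, then record what this snapshot references."""
    uploaded = []
    for fp_id, data in local_fingerprints.items():
        if fp_id not in cloud_store:  # each fingerprint is uploaded only once
            cloud_store[fp_id] = data
            uploaded.append(fp_id)
    # The snapshot itself is just the list of fingerprints it points to.
    catalog[snapshot_name] = sorted(local_fingerprints)
    return uploaded


cloud, catalog = {}, {}
first = cloud_snapshot({"fp1": b"a", "fp2": b"b"}, cloud, "mon", catalog)
second = cloud_snapshot({"fp1": b"a", "fp2": b"b", "fp3": b"c"}, cloud, "tue", catalog)
```

The second snapshot transfers only `fp3`, yet its catalog entry references all three fingerprints. Every snapshot is therefore complete on its own, and there is one repository to manage instead of a chain of backup sets.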

Efficiency improvements with cloud snapshots

Cloud snapshots eliminate tape problems and operator errors because there are no tapes to manage, lose, or go bad. No tapes need to be loaded for the next backup operation, no tapes are transferred off site, there are no tape names and labels to worry about, and no courier services need to be engaged. The arcane best practices that were developed for tape backup no longer apply to cloud snapshots. This is an enormous time saver for the IT team and removes them from the drudgery of managing tapes, tape equipment, and backup processes.

Comparing cloud snapshots

The biggest difference between cloud snapshots with the Microsoft HCS solution and other backup products is the integration with Microsoft Azure Storage. Cloud snapshots improve data protection in three important ways:

1. Off-site automation. Cloud snapshots automatically copy data off site to Microsoft Azure Storage.

2. Access to off-site data. Cloud snapshot data stored off site is quickly accessed on premises.

3. Unlimited data storage and retention. The amount of backup data that can be retained on Microsoft Azure Storage is virtually unlimited.

Remote replication can be used to enhance disk-based backup and snapshot solutions by automating off-site data protection. The biggest difference between cloud snapshots and replication-empowered solutions is that replication has the added expense of remote systems and facilities overhead, including the cost of managing disk capacities and replication links.

Table 2-1 lists the differences in off-site automation, access to off-site data from primary storage, and data retention limits of various data protection options.

TABLE 2-1 A Comparison of Popular Data Protection Technologies

| | Automates Off-Site Storage | Access Off-Site Data From Primary Storage | Data Retention Limits |
| --- | --- | --- | --- |
| Tape Backup | No | No | None |
| Incremental-Only Backup | Uses replication | No | Disk capacity |
| Dedupe VTL | Requires replication | No | Disk capacity |
| Snapshot | Requires replication | No | Disk capacity |
| Cloud Snapshot | Yes | Yes | None |

Remote office data protection

Cloud snapshots are also effective for automating data protection in remote and branch offices (ROBOs). These locations often do not have skilled IT team members on site to manage backup, and as a result, it is common for companies with many ROBOs to have significant gaps in their data protection.

The role of local snapshots

CiS systems also provide local snapshots that are stored on the CiS system. Although local and cloud snapshots are managed independently, the first step in performing a cloud snapshot is running a local snapshot. In other words, all the data that is snapped to the cloud is also snapped locally first. The IT team can schedule local snapshots to run regularly, as often as many times a day, and can also run them on demand.

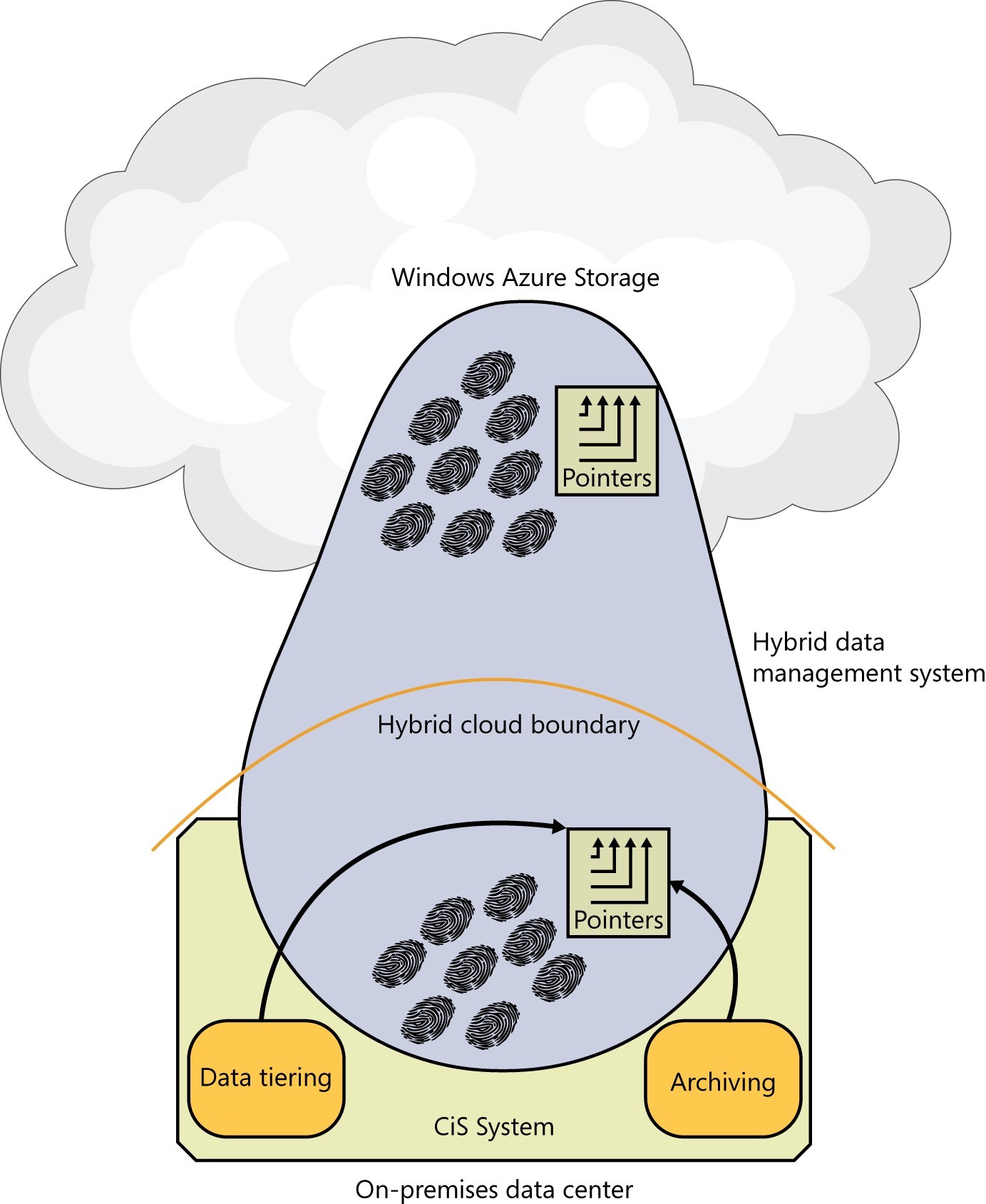

Looking beyond disaster protection

Snapshot technology is based on a system of pointers that provide access to all the versions of data stored by the system. The Microsoft HCS solution has pointers that provide access to all the fingerprints stored on the CiS system and in Microsoft Azure Storage.

The fingerprints and pointers in a Microsoft HCS solution are useful for much more than disaster protection and accessing point-in-time copies of data. Together they form a hybrid data management system that spans the hybrid cloud boundary. A set of pointers accompanies every cloud snapshot that is uploaded to Microsoft Azure Storage, referencing the fingerprints that are stored there. The system of pointers and fingerprints in the cloud is a portable data volume that uses Microsoft Azure Storage for both protection and portability.

FIGURE 2-3 The Microsoft HCS solution unifies data management across the hybrid cloud boundary.
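The portable data volume described above can be pictured with a short Python sketch. The names and layout are assumptions made for illustration: a pointer set is modeled as a mapping from volume offset to fingerprint ID, a dictionary stands in for Microsoft Azure Storage, and the hypothetical `mount_from_cloud` function is not part of any Microsoft product.

```python
def mount_from_cloud(pointer_set, cloud_store):
    """Rebuild a volume's contents from a snapshot's pointers and the fingerprints they reference."""
    missing = [fp_id for fp_id in pointer_set.values() if fp_id not in cloud_store]
    if missing:
        raise KeyError(f"fingerprints not found in cloud store: {missing}")
    return {offset: cloud_store[fp_id] for offset, fp_id in pointer_set.items()}


cloud_store = {"fp1": b"AAAA", "fp2": b"BBBB"}
pointers = {0: "fp1", 4: "fp2", 8: "fp1"}  # volume offset -> fingerprint ID
volume = mount_from_cloud(pointers, cloud_store)
```

Because the pointers travel with the snapshot, any system that can read both the pointer set and the fingerprint repository can reconstruct the volume. That is what makes the data portable as well as protected.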

Summary

Backing up data in preparation for recovering from a disaster has challenged IT teams for many years because of problems with tape technology and the time-consuming manual processes that it requires. New technologies for disk-based backup, including virtual tape libraries and data deduplication, have been instrumental in helping organizations reduce or eliminate the use of tape. Meanwhile, snapshot technology has become very popular with IT teams by making it easier to restore point-in-time copies of data. The growing use of remote replication with dedupe backup systems and snapshot solutions indicates the importance IT teams place on automated off-site backup storage. Nonetheless, data protection has continued to consume more financial and human resources than IT leaders want to devote to it.

The Microsoft HCS solution replaces traditional backup processes with a new technology, cloud snapshots, that automates off-site data protection. The integration of data protection for primary storage with Microsoft Azure Storage transforms the error-prone tedium of managing backups into short daily routines that ensure nothing unexpected occurred. More than just a backup replacement, cloud snapshots are also used to quickly access and restore historical versions of data that were uploaded to the cloud.