Traffic Manager and Azure SLB - Better Together!

This post was contributed by Pedro Perez

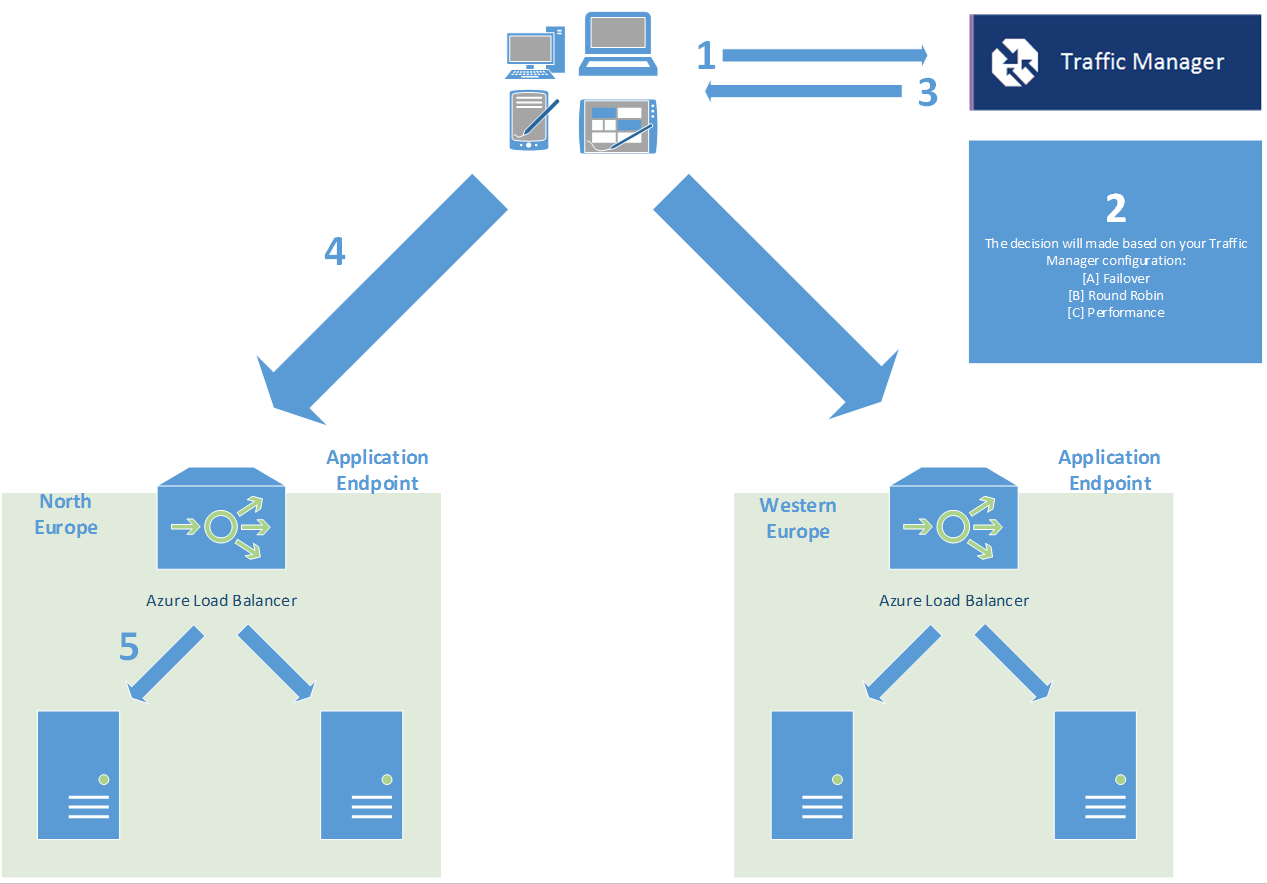

Can Traffic Manager coexist with Azure Load Balancer? And how do we keep session affinity with them? Yes, they can coexist, and in fact it is a good idea to use both together. Traffic Manager (TM) is a global load balancer that works at the DNS level, whereas Azure Load Balancer (Azure LB) is a local load balancer.

Traffic Manager will fail over or balance (whichever you choose) between different endpoints that would ideally be located in different datacenters or regions. Azure LB, on the other hand, will balance between instances in the same Cloud Service, handling the traffic itself. Think of them as two different tiers of infrastructure/application logic. Traffic Manager can be used to reduce latency (e.g. by resolving to the nearest datacenter) or for disaster recovery (DR)/failover, and Azure Load Balancer can be used for redundancy and scalability. Traffic Manager monitors the Cloud Service endpoints and Azure Load Balancer monitors the instances inside the Cloud Services. This way we make sure we’re sending traffic to a healthy server at any time.

As an example, TM resolves queries for www.contoso.com to the Cloud Service IP in the appropriate datacenter. When the client then contacts the Cloud Service on the endpoint’s port (e.g. 80), it is effectively hitting Azure Load Balancer, which decides which of the VMs/instances receives the request and keeps a “source IP affinity” entry in a table.

In the above scenario we have the same application deployed in two different datacenters, with one Cloud Service per datacenter (DC), each containing two VMs (instances). The application is deployed to all four servers. The application needs to keep track of the client’s sessions, so we need to use affinity. We decide to use the North Europe DC as our primary datacenter, so we direct all of our users to the endpoint in that DC and keep the West Europe DC deployment as a DR solution. There’s no need for affinity between DCs because we will only be using one at a time.
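To make the failover idea concrete, here is a minimal Python sketch of how a failover-style resolver might pick an endpoint: it returns the first healthy endpoint in priority order. This is an illustration only, not Traffic Manager's implementation; the `Endpoint` class, the `resolve_failover` function, the endpoint names and the documentation-range IP addresses are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Endpoint:
    name: str       # hypothetical label for a Cloud Service endpoint
    address: str    # documentation-range IP, not a real Azure address
    healthy: bool   # result of the most recent health probe


def resolve_failover(endpoints):
    """Return the address of the first healthy endpoint in priority order."""
    for endpoint in endpoints:
        if endpoint.healthy:
            return endpoint.address
    # Assumption of this sketch: with no healthy endpoint we return nothing.
    return None


# The list order is the failover priority: primary first, DR second.
endpoints = [
    Endpoint("north-europe", "203.0.113.10", healthy=True),
    Endpoint("west-europe", "198.51.100.20", healthy=True),
]

primary_answer = resolve_failover(endpoints)

# Simulate the primary datacenter failing its health probe.
endpoints[0].healthy = False
failover_answer = resolve_failover(endpoints)

print(primary_answer, failover_answer)
```

While the primary is healthy every query resolves to it; the DR endpoint is only handed out once the primary's probe fails, which is why no affinity is needed across datacenters.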

You can choose the Traffic Manager’s load balancing method while creating the profile:

PS C:\> New-AzureTrafficManagerProfile -Name "ContosoTMProfile" -DomainName "wwwcontoso.trafficmanager.net" -LoadBalancingMethod "Failover"

Or change it at any time:

PS C:\> Get-AzureTrafficManagerProfile -Name "ContosoTMProfile" | Set-AzureTrafficManagerProfile -LoadBalancingMethod "Failover"

You can also use PowerShell to enable session affinity on an endpoint, specifying sourceIP for a 2-tuple, sourceIPProtocol for a 3-tuple, or none to disable session affinity:

PS C:\> Set-AzureLoadBalancedEndpoint -ServiceName "ContosoWebSvc" -LBSetName "LBContosoWeb" -Protocol tcp -LocalPort 80 -ProbeProtocolTCP -ProbePort 8080 -LoadBalancerDistribution "sourceIPProtocol"

The traffic flow of a request to our application will go like this:

1. A client resolves www.contoso.com through their DNS server. The name is a CNAME pointing to wwwcontoso.trafficmanager.net, so the DNS server ultimately has to ask Microsoft’s DNS servers (the authoritative name servers for trafficmanager.net) for the underlying IP.

2. Meanwhile, Traffic Manager constantly probes your configured endpoints to determine their health and updates Microsoft’s DNS servers accordingly. More information about this procedure can be found at https://blogs.msdn.com/b/kwill/archive/2013/09/17/windows-azure-traffic-manager-performance-impact.aspx

3. The client gets the resolved IP address from Microsoft’s DNS servers.

4. The client sends a request to the resolved IP address, which happens to be in the North Europe datacenter.

5. The request hits the Azure Load Balancer. As it is a new connection, the load balancer checks the affinity table, but there’s no entry for this 2-tuple or 3-tuple. It then decides to send the traffic to one of the servers and populates the affinity table with the client’s IP address, the endpoint’s IP and, for a 3-tuple, the protocol.

For the lifetime of a TCP connection, incoming packets are not checked against the affinity table because they belong to an established connection. Any new incoming TCP connection, however, is checked against the table so that the load balancer can provide a session layer for the application. If the client caches the DNS resolution, subsequent connections skip steps 1-3 and go straight to step 4. Azure Load Balancer then matches the connection in the table and chooses the destination server based on that match.
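The affinity lookup described above can be sketched as a small Python model. It is a simplification under stated assumptions, not Azure Load Balancer's actual implementation: the `AffinityTable` class is hypothetical, new clients are assigned round-robin (an assumption), and the third element of the 3-tuple is taken to be the protocol, as the `sourceIPProtocol` name suggests.

```python
import itertools


class AffinityTable:
    """Toy model of a load balancer that pins new connections via a lookup table."""

    def __init__(self, servers, mode="sourceIPProtocol"):
        self.mode = mode      # "sourceIP", "sourceIPProtocol" or "none"
        self.table = {}       # affinity key -> chosen server
        # Assumption: servers are handed out round-robin to new keys.
        self._next_server = itertools.cycle(servers)

    def _key(self, src_ip, dst_ip, protocol):
        if self.mode == "sourceIP":
            return (src_ip, dst_ip)            # 2-tuple
        if self.mode == "sourceIPProtocol":
            return (src_ip, dst_ip, protocol)  # 3-tuple
        return None                            # affinity disabled

    def route(self, src_ip, dst_ip, protocol):
        """Pick a server for a NEW connection; established ones are not re-checked."""
        key = self._key(src_ip, dst_ip, protocol)
        if key is None:
            return next(self._next_server)
        if key not in self.table:
            # First connection for this key: choose a server and remember it.
            self.table[key] = next(self._next_server)
        return self.table[key]


lb = AffinityTable(["vm1", "vm2"], mode="sourceIP")
first = lb.route("192.0.2.7", "203.0.113.10", "tcp")
second = lb.route("192.0.2.7", "203.0.113.10", "tcp")  # same client again
other = lb.route("192.0.2.8", "203.0.113.10", "tcp")   # a different client
print(first, second, other)
```

The same client lands on the same server for every new connection, while a different source IP gets its own table entry and may be sent elsewhere.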

Azure Load Balancer’s affinity feature has a couple of characteristics to take into account when architecting your application:

a) Affinity is configurable using a 2-tuple (Source IP, Destination IP) or a 3-tuple (Source IP, Destination IP, Protocol), but as you can see it always depends on the Source IP to identify the client. If the users of the application are behind a proxy (not an uncommon scenario for an enterprise app), they all share one source IP, so we’re basically sending all our traffic to only one server.

b) As of today, when a server is added to or removed from the Cloud Service, the affinity table gets wiped out and any affinity is lost. That restricts scaling changes to out-of-hours or low-traffic periods.
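Both characteristics can be illustrated with a short Python simulation. It is a sketch under assumptions, not Azure's implementation: the `SourceIpAffinity` class is hypothetical, new source IPs are assigned to servers in turn, and the table wipe on a scaling change is modelled exactly as described in (b).

```python
from collections import Counter


class SourceIpAffinity:
    """Toy source-IP affinity table used to illustrate points (a) and (b)."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.pinned = {}  # source IP -> server

    def route(self, source_ip):
        if source_ip not in self.pinned:
            # Assumption: new source IPs are spread over the servers in turn.
            index = len(self.pinned) % len(self.servers)
            self.pinned[source_ip] = self.servers[index]
        return self.pinned[source_ip]

    def add_server(self, server):
        self.servers.append(server)
        self.pinned.clear()  # models the table wipe described in (b)


# (a) 100 users behind one proxy all present the same source IP.
lb = SourceIpAffinity(["vm1", "vm2"])
proxied_load = Counter(lb.route("198.51.100.1") for _ in range(100))
print(dict(proxied_load))

# (b) A scaling change wipes the table, so a client can move to another server.
lb2 = SourceIpAffinity(["vm1", "vm2"])
lb2.route("192.0.2.1")
before = lb2.route("192.0.2.2")
lb2.add_server("vm3")
after = lb2.route("192.0.2.2")
print(before, after)
```

In the proxy case every connection ends up on a single server, and after the scaling change the second client is no longer guaranteed to reach the server that holds its session.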

It may be a good idea to consider building a common session cache layer (or using Session State Providers) accessible by all the web servers. This way your application doesn’t depend on affinity, the LB actually balances equally among servers (because there is no affinity), and we can scale whenever we need to. Another option is to use one of the load-balancing Network Virtual Appliances available in Azure and configure it to use cookie affinity. There are various vendors in the Gallery in the Azure Portal. Keep in mind that there could be caveats with this approach (e.g. source NAT is probably needed to make it work inside Azure), so make sure you understand them and how they affect your application.
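As a rough illustration of the shared session cache idea, the Python sketch below lets two web servers read and write the same session state, so it does not matter which one the load balancer picks. The `SharedSessionStore` and `WebServer` classes are hypothetical, and the in-memory dictionary merely stands in for an out-of-process cache or session state provider.

```python
import itertools


class SharedSessionStore:
    """In-memory stand-in for a session cache shared by all web servers."""

    def __init__(self):
        self._sessions = {}

    def load(self, session_id):
        # Create the session on first use, otherwise return the existing one.
        return self._sessions.setdefault(session_id, {"hits": 0})


class WebServer:
    def __init__(self, name, store):
        self.name = name
        self.store = store  # every server points at the same store

    def handle(self, session_id):
        session = self.store.load(session_id)
        session["hits"] += 1
        return self.name, session["hits"]


store = SharedSessionStore()
# Without affinity, requests simply alternate between the servers.
servers = itertools.cycle([WebServer("vm1", store), WebServer("vm2", store)])
responses = [next(servers).handle("session-abc") for _ in range(4)]
print(responses)
```

The hit counter keeps increasing even though consecutive requests are served by different VMs, which is what removes the dependency on affinity.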