Disingenuous Cost Comparisons

I was inspired by Eric Gray’s recent post “Disingenuous cost comparisons”, which purports to show all the ways that VMware costs less than Microsoft. I thought I’d take Eric’s advice and try the VMware cost comparison calculator for myself, to see exactly how VMware are trying to reduce the high cost of their hypervisor.

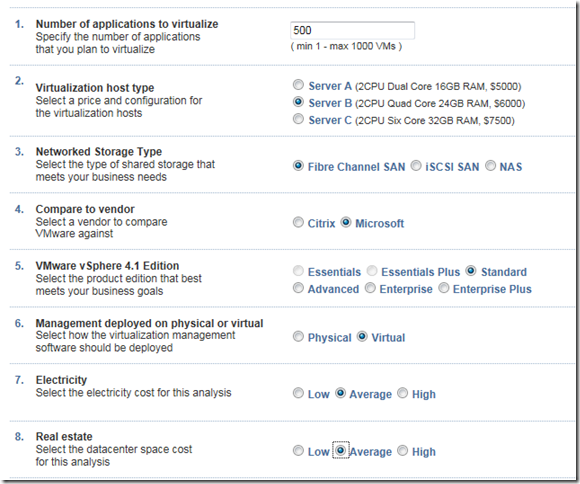

I entered the data below as my starting point:

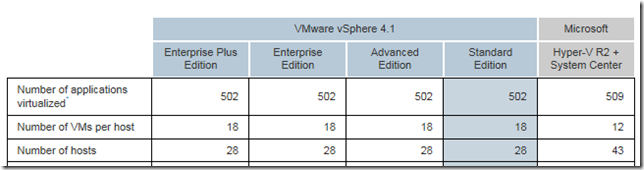

This gives the rather interesting claim that vSphere supports 50% more VMs than Hyper-V:

In the spirit of transparency, VMware are good enough to give the reasons behind this assumption. It’s worth looking at what those reasons are, and how they stack up against reality.

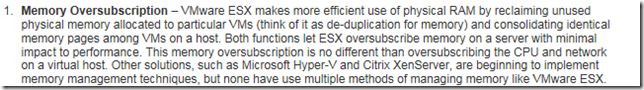

From what I know of VMware’s memory overcommitment methods, there are four techniques they use:

- Transparent Page Sharing (TPS)

- Memory Ballooning

- Memory Compression

- Host Paging/Swapping

The bottom two methods are only used once the host is under memory pressure, which isn’t a great place to be. If the host is paging memory out to disk (even SSD), that is still orders of magnitude slower than real memory, and there will be a performance impact. VMware point this out in their documentation:

“While ESX uses page sharing and ballooning to allow significant memory overcommitment, usually with little or no impact on performance, you should avoid overcommitting memory to the point that it requires host-level swapping.” – Page 23

TPS finds pages of memory that are identical across multiple VMs and collapses them down to a single copy stored in physical memory. Ballooning allows the host to ask a guest to release whatever memory it can, which reduces the overall memory footprint of that guest. So of the “multiple methods” of oversubscribing memory, only two are really used in day-to-day production.

What VMware don’t talk about much is that most of the benefit of TPS comes from sharing blank memory pages (i.e. memory that is allocated to a VM, but isn’t being used). There is an incremental benefit from shared OS memory pages as well, but the majority of the benefit is from those blank pages. TPS is also affected by large memory pages and ASLR in modern versions of the Windows OS, and it isn’t immediate: TPS takes time to identify the shared pages, and runs on a periodic basis.
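To make the page sharing idea concrete, here is a minimal sketch in Python of collapsing identical pages by content hash. It is only an illustration of the concept described above, not how ESX implements TPS; the function name `share_pages`, the 4 KB page size and the toy VM contents are all my own assumptions.

```python
import hashlib

PAGE_SIZE = 4096  # assumed small-page size in bytes (illustrative only)


def share_pages(vms):
    """Collapse identical pages across VMs into a single stored copy.

    vms maps a VM name to a list of page contents (bytes).
    Returns (store, total), where store maps a content hash to the one
    retained copy and total is the number of pages the VMs asked for.
    """
    store = {}
    total = 0
    for pages in vms.values():
        for page in pages:
            total += 1
            digest = hashlib.sha256(page).digest()
            # Keep only the first copy seen for each distinct content.
            store.setdefault(digest, page)
    return store, total


# Toy data (hypothetical): mostly blank pages, one shared "OS" page,
# and one unique page per VM.
blank = bytes(PAGE_SIZE)
os_page = b"\x01" * PAGE_SIZE
vms = {
    "vm1": [blank, blank, blank, os_page, b"\x02" * PAGE_SIZE],
    "vm2": [blank, blank, os_page, b"\x03" * PAGE_SIZE],
}

store, total = share_pages(vms)
saved = total - len(store)
print(f"{total} pages requested, {len(store)} stored, {saved} saved")
```

In this toy data most of the saving comes from the blank pages rather than the shared OS page, which is the pattern described above. A real implementation also has to scan memory periodically and handle writes to a shared page, neither of which is modelled here.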

Hyper-V has Dynamic Memory functionality that allocates machines the memory they need, and uses ballooning to reclaim memory that isn’t in use. In practice, this has similar benefits to TPS: blank pages are simply not allocated to VMs until they need them, so that memory can be used elsewhere as a scheduled resource. It’s also faster than TPS, as it responds immediately to VM demand. So on a direct comparison, TPS may save slightly more memory due to shared OS pages, but ultimately TPS and Dynamic Memory solve the blank memory pages issue in different ways.

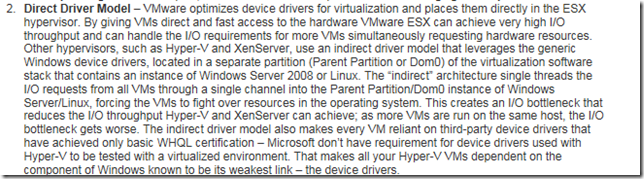

I think in this case it’s best to let the facts speak for themselves. It’s pretty clear from the sorts of IO numbers in those articles that the indirect driver model (parent partition architecture) doesn’t impose a bottleneck on Hyper-V IO performance. And it certainly appears that Hyper-V support is a requirement of WHQL. And it’s not like VMware can claim to have no driver problems. It also always makes me laugh when VMware claim that their drivers are optimised for virtualisation. What does that really mean? From what I can see, optimised for virtualisation means that you’ve got drivers that can deliver massive amounts of IO to your hardware – which is exactly what Windows Server 2008 R2 has.

Wouldn’t it be embarrassing if a company had invested so much time and money in building a highly optimised gang scheduler, and then someone came along with a general purpose OS scheduler and the independent test results showed that it performed as well as, or in some cases better than, that gang scheduler (or even that the vSphere results were so bad that they released a hotfix specifically for that issue)? The simple fact is that, regardless of what VMware claim, Hyper-V does not use a general purpose OS scheduler. The claim just shows that they fundamentally don’t understand the Hyper-V architecture. The only place a general purpose OS scheduler is involved is in the parent partition and the running guests. The Hyper-V scheduler is not the parent partition scheduler; it is its own scheduler, optimised for virtualisation. So in terms of performance, Hyper-V more than holds its own against vSphere, and it’s clear that VMware are just throwing this out there and hoping their customers don’t look into it.

DRS is certainly a useful technology for adding flexibility to virtual environments, and Microsoft ships a comparable technology with System Center (called PRO) that performs a similar function. So if VMware’s cost calculator adds in extra virtual machines to allow for running System Center, surely it should also allow for the extra functionality that System Center provides? And without wanting to talk about the future, Virtual Machine Manager 2012 offers Dynamic Optimisation as an alternative to PRO if you don’t have System Center Operations Manager – give it a try.

So looking through the claims for a 50% increase in VMs per host, we get:

- Memory overcommit – very slight advantage to VMware because of shared OS pages

- Direct Driver Model – no advantage

- High performance gang scheduler – no advantage

- DRS – similar functionality in System Center, and really contributes to flexibility, not a higher consolidation ratio.
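To show why that 50% figure matters so much, here is a rough sketch of how a VMs-per-host multiplier feeds through to the number of hosts you would need to buy. The VM count and baseline density are made-up numbers, and I’m assuming the host count is simply VMs divided by density, rounded up; I don’t know the formula the calculator actually uses.

```python
import math


def hosts_needed(vm_count, vms_per_host):
    """Number of hosts required, rounding up to whole hosts."""
    return math.ceil(vm_count / vms_per_host)


vm_count = 100     # hypothetical number of VMs to run
base_density = 10  # hypothetical VMs per host with no uplift applied
uplift = 1.5       # the calculator's claimed 50% density advantage

hyperv_hosts = hosts_needed(vm_count, base_density)
vsphere_hosts = hosts_needed(vm_count, base_density * uplift)
no_uplift_hosts = hosts_needed(vm_count, base_density * 1.0)

print(f"Hyper-V hosts: {hyperv_hosts}")
print(f"vSphere hosts with the 50% uplift: {vsphere_hosts}")
print(f"vSphere hosts without the uplift: {no_uplift_hosts}")
```

Under these assumptions, the density multiplier alone accounts for the whole difference in host count. Set it to 1.0 and the two platforms need the same number of hosts.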

Based on that list, I’m struggling to figure out how VMware can claim a 50% advantage with a straight face. And overall, my rating of VMware’s licensing calculator – nice try, but you’re smart guys and I’m sure you can do better.