Shared storage for Windows Failover Cluster with MPIO

Microsoft released the iSCSI Software Target 3.3 on the Download Center in April. I have seen many people download it, try it out, and give great feedback. We received questions about the support policy for using the iSCSI Target with initiator clusters in MPIO configurations, which was previously restricted. The support statement and related articles have now been updated to remove that restriction. This post walks through a lab exercise that sets up a two-node failover cluster with MPIO, using the iSCSI Software Target as the shared storage.

Test topology

I set up the following topology for testing:

The initiators connect to the iSCSI Software Target using MPIO. To set up the entire topology, these references are helpful:

- Configure iSCSI Target and provide shared storage to the iSCSI initiators

- Configure a failover cluster

- Configure MPIO

- Understand the MPIO feature

- This whitepaper covers end-to-end cluster creation using the iSCSI Target

How to set up MPIO

I followed the steps described in the guide to set up MPIO on both cluster nodes. The only difference: each node already had two NICs, so I skipped the steps in the section “Setting a second IP on my hosts”.

How to verify MPIO setup on the iSCSI Initiator

To verify that every disk has two paths, I opened the iSCSI Initiator Control Panel applet and checked the device paths:

As you can see, each disk listed in the Devices pane had two paths associated with it, along with its MPIO policy. You can change the policy by selecting a different one from the drop-down list on the Device details page.

You can also generate a report:

- Open Microsoft iSCSI Initiator, and then click the Configuration tab.

- Click Report.

- Enter the file name, and then click Save.

My report file looks like this:

    iSCSI Initiator Report
    =======================
    List of Discovered Targets, Sessions and devices
    ==================================================
    Target #0
    ========
    Target name = iqn.1991-05.com.microsoft:svr1-target
    Session Details
    ===============
    Session #1 <= first session to the target
    ===========
    Number of Connections = 1
    Connection #1
    ==============
    Target Address = 10.10.0.51
    Target Port = 3260
    #0. Disk 2
    ========
    Address:Port 3: Bus 0: Target 0: LUN 0
    #1. Disk 4
    ========
    Address:Port 3: Bus 0: Target 0: LUN 1
    #2. Disk 5
    ========
    Address:Port 3: Bus 0: Target 0: LUN 2
    Session #2 <= second session to the target
    ===========
    Number of Connections = 1
    Connection #1
    ==============
    Target Address = 10.10.0.51
    Target Port = 3260
    #0. Disk 2
    ========
    Address:Port 3: Bus 0: Target 1: LUN 0
    #1. Disk 4
    ========
    Address:Port 3: Bus 0: Target 1: LUN 1
    #2. Disk 5
    ========
    Address:Port 3: Bus 0: Target 1: LUN 2
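With several LUNs, checking the report by eye gets tedious. The sketch below is a hypothetical helper of my own, not part of the iSCSI Initiator: it parses a saved report and counts how many sessions expose each disk, so with two-path MPIO every disk should show two. It assumes the report layout shown above (`Target #`, `Session #`, and `#n. Disk m` lines); other initiator versions may format the report differently.

```python
import re
from collections import defaultdict

# Assumption: line patterns copied from the sample report in this post.
# The "<=" comments in the sample are ignored because only line prefixes are matched.
TARGET_RE = re.compile(r"^Target #(\d+)")
SESSION_RE = re.compile(r"^Session #(\d+)")
DISK_RE = re.compile(r"^#\d+\.\s+Disk\s+(\d+)")


def paths_per_disk(report_text):
    """Return {disk number: number of sessions (paths) that expose that disk}."""
    sessions_for_disk = defaultdict(set)
    target = session = None
    for raw_line in report_text.splitlines():
        line = raw_line.strip()
        if m := TARGET_RE.match(line):
            # A new target starts; session numbering is tracked per target.
            target, session = int(m.group(1)), None
        elif m := SESSION_RE.match(line):
            session = (target, int(m.group(1)))
        elif (m := DISK_RE.match(line)) and session is not None:
            sessions_for_disk[int(m.group(1))].add(session)
    return {disk: len(s) for disk, s in sorted(sessions_for_disk.items())}


# Trimmed from the sample report above (only the lines the parser reads).
SAMPLE_REPORT = """\
Target #0
========
Target name = iqn.1991-05.com.microsoft:svr1-target
Session #1
===========
Target Address = 10.10.0.51
#0. Disk 2
#1. Disk 4
#2. Disk 5
Session #2
===========
Target Address = 10.10.0.51
#0. Disk 2
#1. Disk 4
#2. Disk 5
"""

if __name__ == "__main__":
    for disk, count in paths_per_disk(SAMPLE_REPORT).items():
        status = "OK" if count >= 2 else "only one path"
        print(f"Disk {disk}: {count} path(s) [{status}]")
```

Keying sessions by (target, session) keeps the count correct if a report lists several targets that each number their sessions from 1.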

How to verify MPIO setup on the iSCSI Target

To view session and connection information on the Target server, you need to use WMI. The easiest way to run WMI queries is with WMIC.exe from a command prompt window.

    C:\>wmic /namespace:\\root\wmi Path WT_HOST where (hostname = "T2") get /format:list

Here, T2 is the name of my target object.
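If you check sessions repeatedly (for example, after each failover test), you may want to script the query. The sketch below is a hypothetical wrapper that builds exactly the WMIC command line shown above for a given target object name and, only when running on Windows, executes it and returns the text output. The function names are my own; the WMIC syntax is taken unchanged from this post.

```python
import subprocess
import sys


def build_wt_host_query(host_name):
    """Build the WMIC command line from this post for one iSCSI Target object."""
    # The name is embedded in a WQL string literal, so reject embedded quotes.
    if '"' in host_name:
        raise ValueError("host name must not contain double quotes")
    return (r'wmic /namespace:\\root\wmi Path WT_HOST '
            f'where (hostname = "{host_name}") get /format:list')


def query_wt_host(host_name):
    """Run the query on the Target server and return WMIC's text output."""
    if sys.platform != "win32":
        raise OSError("WMIC is only available on Windows")
    # On Windows a string command line is passed to CreateProcess unchanged,
    # so the quoting matches what you would type at the command prompt.
    result = subprocess.run(build_wt_host_query(host_name),
                            capture_output=True, text=True, check=True)
    return result.stdout


if __name__ == "__main__":
    print(build_wt_host_query("T2"))
    if sys.platform == "win32":
        print(query_wt_host("T2"))
```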

Sample output is shown below, with minor formatting changes. I have added comments, prefixed with “<=”, to help explain the output:

    instance of WT_Host
    {
        CHAPSecret = "";
        CHAPUserName = "";
        Description = "";
        Enable = TRUE;
        EnableCHAP = FALSE;
        EnableReverseCHAP = FALSE;
        EnforceIdleTimeoutDetection = TRUE;
        HostName = "T2";
        LastLogIn = "20110502094448.082000-420";
        NumRecvBuffers = 10;
        ResourceGroup = "";
        ResourceName = "";
        ResourceState = -1;
        ReverseCHAPSecret = "";
        ReverseCHAPUserName = "";
        Sessions = {
            instance of WT_Session        <= First session from initiator 10.10.2.77
            {
                Connections = {
                    instance of WT_Connection        <= First connection from initiator 10.10.2.77. The iSCSI Target supports only one connection per session, so each session contains exactly one connection.
                    {
                        CID = 1;
                        DataDigestEnabled = FALSE;
                        HeaderDigestEnabled = FALSE;
                        InitiatorIPAddress = "10.10.2.77";
                        InitiatorPort = 63042;
                        TargetIPAddress = "10.10.2.73";
                        TargetPort = 3260;
                        TSIH = 5;
                    }};
                HostName = "T2";
                InitiatorIQN = "iqn.1991-05.com.microsoft:svr.contoso.com";
                ISID = "1100434440256";
                SessionType = 1;
                TSIH = 5;
            },
            instance of WT_Session        <= Second session from initiator 10.10.2.77 (multiple sessions from the same initiator as above)
            {
                Connections = {
                    instance of WT_Connection
                    {
                        CID = 1;
                        DataDigestEnabled = FALSE;
                        HeaderDigestEnabled = FALSE;
                        InitiatorIPAddress = "10.10.2.77";
                        InitiatorPort = 63043;
                        TargetIPAddress = "10.10.2.73";
                        TargetPort = 3260;
                        TSIH = 6;
                    }};
                HostName = "T2";
                InitiatorIQN = "iqn.1991-05.com.microsoft:svr.contoso.com";
                ISID = "3299457695808";
                SessionType = 1;
                TSIH = 6;
            },
            instance of WT_Session        <= First session from initiator 10.10.2.69
            {
                Connections = {
                    instance of WT_Connection
                    {
                        CID = 1;
                        DataDigestEnabled = FALSE;
                        HeaderDigestEnabled = FALSE;
                        InitiatorIPAddress = "10.10.2.69";
                        InitiatorPort = 60063;
                        TargetIPAddress = "10.10.2.73";
                        TargetPort = 3260;
                        TSIH = 10;
                    }};
                HostName = "T2";
                InitiatorIQN = "iqn.1991-05.com.microsoft:svr2.contoso.com";
                ISID = "2199946068032";
                SessionType = 1;
                TSIH = 10;
            },
            instance of WT_Session        <= Second session from initiator 10.10.2.69
            {
                Connections = {
                    instance of WT_Connection
                    {
                        CID = 1;
                        DataDigestEnabled = FALSE;
                        HeaderDigestEnabled = FALSE;
                        InitiatorIPAddress = "10.10.2.69";
                        InitiatorPort = 60062;
                        TargetIPAddress = "10.10.2.73";
                        TargetPort = 3260;
                        TSIH = 11;
                    }};
                HostName = "T2";
                InitiatorIQN = "iqn.1991-05.com.microsoft:svr2.contoso.com";
                ISID = "922812480";
                SessionType = 1;
                TSIH = 11;
            }};
        Status = 1;
        TargetFirstBurstLength = 65536;
        TargetIQN = "iqn.1991-05.com.microsoft:cluster-yan03-t2-target";
        TargetMaxBurstLength = 262144;
        TargetMaxRecvDataSegmentLength = 65536;
    };

As the session information above shows, each node (acting as an iSCSI initiator) has connected to the target with two sessions. You may also have noticed that both sessions from a node use the same network path (for example, 10.10.2.77 to 10.10.2.73). This is because, by default, the iSCSI initiator picks the connection path for you. If one path fails, another path is used when the session reconnects. This configuration is easy to set up, and you don’t need to worry about IP address assignment. It works well with the Fail Over Only MPIO policy.
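Both observations (two sessions per node, one shared network path) can also be checked mechanically. The sketch below is a hypothetical parser of my own that reads WT_Host output like the sample above, groups sessions by InitiatorIQN, and reports whether a node's sessions share a single (initiator IP, target IP) pair. It assumes the property order shown in the sample (the connection's IP addresses before the session's InitiatorIQN) and one connection per session, as noted in the output comments; real WMIC output may need adjusting.

```python
import re
from collections import defaultdict

# Assumption: one match per WT_Session, i.e. the connection's initiator and target
# IPs followed by that session's InitiatorIQN, in the order of the sample output.
# A session with several connections would only have its first one counted.
SESSION_RE = re.compile(
    r'InitiatorIPAddress\s*=\s*"([^"]+)"'
    r'.*?TargetIPAddress\s*=\s*"([^"]+)"'
    r'.*?InitiatorIQN\s*=\s*"([^"]+)"',
    re.DOTALL,
)


def paths_by_initiator(wmi_text):
    """Map initiator IQN -> list of (initiator IP, target IP), one entry per session."""
    paths = defaultdict(list)
    for initiator_ip, target_ip, iqn in SESSION_RE.findall(wmi_text):
        paths[iqn].append((initiator_ip, target_ip))
    return dict(paths)


def describe(wmi_text):
    """One summary line per initiator: session count and distinct network paths."""
    lines = []
    for iqn, paths in paths_by_initiator(wmi_text).items():
        distinct = sorted(set(paths))
        kind = ("same network path" if len(distinct) == 1
                else f"{len(distinct)} network paths")
        lines.append(f"{iqn}: {len(paths)} sessions, {kind} {distinct}")
    return lines


# Trimmed from the sample WT_Host output above (only the fields the parser reads).
SAMPLE = """
instance of WT_Session { Connections = { instance of WT_Connection {
  InitiatorIPAddress = "10.10.2.77"; TargetIPAddress = "10.10.2.73"; }};
  InitiatorIQN = "iqn.1991-05.com.microsoft:svr.contoso.com"; },
instance of WT_Session { Connections = { instance of WT_Connection {
  InitiatorIPAddress = "10.10.2.77"; TargetIPAddress = "10.10.2.73"; }};
  InitiatorIQN = "iqn.1991-05.com.microsoft:svr.contoso.com"; },
instance of WT_Session { Connections = { instance of WT_Connection {
  InitiatorIPAddress = "10.10.2.69"; TargetIPAddress = "10.10.2.73"; }};
  InitiatorIQN = "iqn.1991-05.com.microsoft:svr2.contoso.com"; },
instance of WT_Session { Connections = { instance of WT_Connection {
  InitiatorIPAddress = "10.10.2.69"; TargetIPAddress = "10.10.2.73"; }};
  InitiatorIQN = "iqn.1991-05.com.microsoft:svr2.contoso.com"; }
"""

if __name__ == "__main__":
    for line in describe(SAMPLE):
        print(line)
```

Grouping by IQN rather than by IP address matters once you bind specific IPs: a node using two NICs shows two initiator IPs, but its sessions still belong to one IQN.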

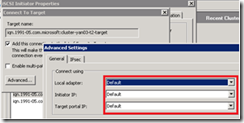

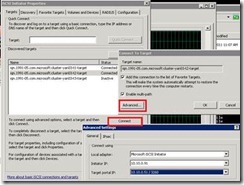

If you want to use specific network paths, or to use both network paths, you need to specify them when you connect the initiators. You can do this on the “Advanced” settings page, where you choose the initiator IP and the target portal IP for each connection.

This configuration lets you use specific IP addresses and, with a load-balancing MPIO policy such as Round Robin, use multiple paths at the same time.
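The difference between the two kinds of policy can be illustrated with a toy model. The sketch below is a conceptual simulation of my own, not the Microsoft DSM implementation: Fail Over Only sends every I/O down the first healthy path and keeps the others on standby, while Round Robin rotates I/O across all healthy paths. The path names are hypothetical placeholders.

```python
from collections import Counter
from itertools import cycle


def fail_over_only(paths, healthy, n_ios):
    """All I/O uses the first healthy path; the remaining paths are standby only."""
    for path in paths:
        if healthy[path]:
            return [path] * n_ios
    raise RuntimeError("no healthy path")


def round_robin(paths, healthy, n_ios):
    """I/O rotates across every healthy path in turn."""
    usable = [p for p in paths if healthy[p]]
    if not usable:
        raise RuntimeError("no healthy path")
    rotation = cycle(usable)
    return [next(rotation) for _ in range(n_ios)]


if __name__ == "__main__":
    # Hypothetical paths: one per initiator NIC / target IP binding.
    paths = ["path-A", "path-B"]
    healthy = {"path-A": True, "path-B": True}
    print("fail over only:", Counter(fail_over_only(paths, healthy, 8)))
    print("round robin:   ", Counter(round_robin(paths, healthy, 8)))

    healthy["path-A"] = False  # simulate losing one network path
    print("after failure: ", Counter(fail_over_only(paths, healthy, 8)))
```

The model also shows why the default configuration does not gain bandwidth: if both sessions share one network path, round robin still alternates between sessions, but every I/O crosses the same NICs. Only binding the sessions to different IP addresses on the “Advanced” page spreads the load. The Microsoft DSM offers further policies (for example, Least Queue Depth) that this sketch does not model.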

A word of caution about binding specific IP addresses for the initiator and target: if your environment uses DHCP and an IP address changes after a reboot, the initiator may not be able to reconnect. In the initiator UI, you will see the initiator repeatedly trying to “Reconnect” to the target after the reboot. To get it out of this state, reconfigure the connection:

- Remove the iSCSI Target Portal

- Add the iSCSI Target Portal back

- Connect to the discovered iSCSI Targets
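The same three steps can be approximated from the command line with iscsicli, which ships with the Microsoft iSCSI Initiator. The sketch below is a dry run that only prints commands; mapping the UI steps to the RemoveTargetPortal, QAddTargetPortal, and QLoginTarget subcommands is my assumption, so check them against `iscsicli /?` on your system before running anything. Note that QLoginTarget creates a simple login without multipath or persistence, so for the MPIO setup in this post you would still reconnect through the Connect dialog with multi-path enabled. The portal address and target IQN below come from the sample initiator report earlier in this post.

```python
def reconnect_commands(portal_address, target_iqns, portal_port=3260):
    """Return iscsicli commands mirroring the three UI steps (printed only, never run).

    Assumption: these subcommand names are the closest iscsicli equivalents;
    verify them with `iscsicli /?` before use.
    """
    commands = [
        # Step 1: remove the stale target portal.
        f"iscsicli RemoveTargetPortal {portal_address} {portal_port}",
        # Step 2: add the target portal back (triggers discovery).
        f"iscsicli QAddTargetPortal {portal_address}",
    ]
    # Step 3: log in to each discovered target (simple, non-MPIO login).
    commands += [f"iscsicli QLoginTarget {iqn}" for iqn in target_iqns]
    return commands


if __name__ == "__main__":
    for cmd in reconnect_commands("10.10.0.51",
                                  ["iqn.1991-05.com.microsoft:svr1-target"]):
        print(cmd)
```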

Closing

This post demonstrated setting up an initiator cluster with MPIO using a standalone iSCSI Target server. It is also possible to set up the iSCSI Target itself as a failover cluster; I am working on another post that describes that configuration. Meanwhile, you can try it out yourself.