Tuning Enovia Smarteam at Langen Packaging Group – Summary

[Prior Post in Series] [Next Post in Series]

Recently I had an opportunity to do an example tuning on an Enovia SmarTeam installation. Marc Young at xML Solutions arranged for me to work with the folks at Langen Packaging Group in Mississauga, Ontario. Mirek Tokarz facilitated the access: dealing with NDAs, IT Security Policy and the many other issues that needed to be well addressed when an external consultant accesses a system containing intellectual property and proprietary information.

I will not subject you to yet another retelling on how to do a Tuning Trace and running the Database Engine Tuning Advisor (DTA). If you are not familiar with these, see my earlier post or:

- Creating a Workload by Using a Trace Log

- Running Database Engine Tuning Advisor (DTA) and Selecting Indexes

I was connected to an old developer test system populated with a 6 month old image of the production system. For purposes of tuning, this is more than adequate. For the purpose of intellectual property and protection from competition, it is an excellent choice. Regardless of NDA, you do not want to needlessly expose current inquiries and order to any 3rd party person, such as a consultant.

Mirek had taken me through a quick walk thru two weeks earlier, so I fired up Smarteam and proceeded to go into a quasi-black box testing. I got a few error messages that were handled well and I stopped when I had accumulated a small (1 MB) trace log consisting of 960 statements for tuning. My preference is to have a 200MB to 1 TB trace log.

The results of various runs using DTA are shown below. As cited in my introductory post, I tend to add indexes in steps because there is often a strong correlation between indexes. I will look at each run in detail in subsequent posts and provide a ready-to-try script as the last post of this series.

Time taken for tuning |

Expected percentage improvement |

Number of indexes recommended to be created |

Number of indexes on views recommended to be created |

Number of statistics recommended to be created |

User Actions |

8 Hours 37 Minutes |

59.34 |

67 |

3 |

87 |

Original |

1 Hour 40 Minutes |

43.35 |

15 |

3 |

91 |

Key on Object_ID,Class_ID |

1 Hour 45 Minutes |

43.30 |

14 |

3 |

91 |

Key on Creation_Date,User_Object_ID, User_ID_Mod |

1 Hour 3 Minutes |

42.36 |

7 |

3 |

88 |

Key on Object_ID (No Class_ID) |

55 minutes |

37.06 |

32 |

5 |

77 |

Applying the DTA 7 Indices ONLY |

58 Minutes |

37.05 |

32 |

5 |

0 |

Statistics Added |

9 Minutes |

0.00 |

0 |

0 |

0 |

Everything |

The purpose of the tuning was not to do a comprehensive tuning – rather an illustrative tuning in detail to show the logic that I am using. The purpose of the tuning is also to illustrate some of the side-effects that you may see and explain them. For example, adding one set of indices increased the number of recommended indexes from 7 to 32, which seems counter to expectations of seeing decreasing recommended indexes.

You can see the details in the following posts (coming soon):

- Tuning Indexes – Part 1: High Yield

- Tuning Indexes – Part 2: Three Successes and One Failure

- Tuning Indexes – Part 3: Closure on Clustered Indexes

- Tuning Statistics: Statistics are performance!

- Ready to run TSQL to apply to your installation on SmarTeam

User Experience after Tuning

Mirek reported to me that queries were taking literally half of the time that they were prior to my tuning. His staff will be more productive and less frustrated as a result. He is convinced of the benefit of tuning indexes on an ISV system.

Common Progress Exceptions

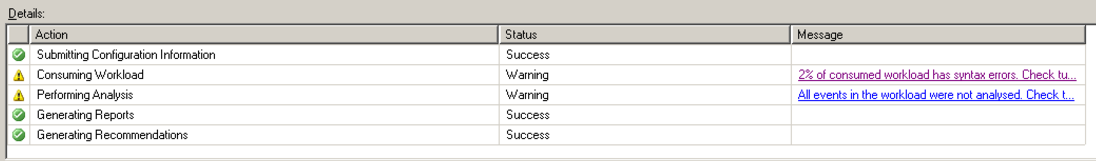

On my first run above, I received two warning messages on the [Progress] tab shown below. I should explain these a bit.

- So what is this 2% syntax error (approximately 18 statements)?

- During my testing, I had included some of LG’s custom features and had gotten errors on some of them.

- I speculate that there was a failure to check parameters sufficiently before submitting to SQL Server. An example is “Where LineNo= @Ln” with @Ln being set to spaces because there was no value, so what SQL Server sees is “Where LineNo = “ -- a syntax error.

- This occurs on every run.

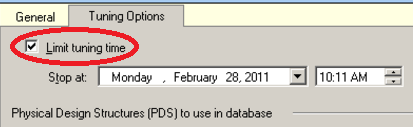

- The second item indicated that three hours were not sufficient to do analysis on the captured trace. I started a second run turning off the time limit to see how long it takes to do the entire trace.

- As cited above, this second run took 8 Hours and 37 Minutes.