Talking Database II

Hello All, this is ConfigNinja here to Talk about Databases. Welcome to the second blog post on Talking Database, since the first blog post was good and now that we have delivered the session at MMS https://www.mmsmoa.com. Thanks to all of the people that attended this event and the sessions I delivered with my peer, mentor, and friend Brett Bennett. He will be joining us for this blog post as well as a special guest, so keep reading to find out more about who is this special guest that is joining us for this great topic.

Let’s start by outlining what this topic will be about, in my role at Microsoft we deliver a service call Risk Assessment Program. Currently I’m one of the WW Leads for this delivery along with other great engineers around the world, during my deliveries that currently are more than 100+ deliveries of this service I found a common problem with many of my deliveries, so this blog post is intended to assist all the readers in solve those common issues that I have found on many of my assessments. If you want to learn more about this service, please go to https://aka.ms/RAP and talk to your Technical Account Manager or Microsoft Representative to schedule one on your environment.

Common Issues and Solutions based on our experience:

Issue #1

Configuration Manager Database Indexes are fragmented

The database CM_MMS has a fragmentation of 99.4405254196059%.

Detail Information

Database name: CM_MMS

Fragmentation level: 99.4405254196059%

Page Count: 32673

What does this mean for your database, this potentially mean that you have a series of indexes that are higher than the recommended percent. On my opinion the indexes should not be higher than 30%, so in this example the overall percentage of your database is on the 99%.

How do we fix this problem?

The solution to this issue is to create a maintenance plan in your system that will cover your indexes and statistics.

An example T-SQL Command that you can run is the following:

EXEC sp_MSforeachtable @command1="print '?' DBCC DBREINDEX ('?', ' ', 80)"

GO

Do you want to read more on this topic?

Check out the following blog post:

https://blogs.technet.com/b/smartinez/archive/2014/03/28/talking-database-in-configmgr.aspx

Issue #2

Fragmented indexes were found

So to follow up on our Issue #1, we have to look at this too. Issue #1 and #2 goes together. For the following reason:

The database [CM_MMS] has at least 10 fragmented index(es):

Index: [dbo].[Update_ComplianceStatus].[Update_ComplianceStatus_PK]. Page Count: 108584. AvgFragmentationInPercent: 98.43

Index: [dbo].[Add_Remove_Programs_HIST].[Add_Remove_Programs_HIST_PK]. Page Count: 65235. AvgFragmentationInPercent: 93.01

Now let's talk first about Page Count, in our check we look for indexes higher than 15000. The Average fragmentation on this index is very high as you can see here, what is the problem with this index. As you can see on this example, we have an index from Update Compliance Status, depending on how often you check for compliance will be the potential impact on the index. So if you do check for compliance in every 4 hours, there is a chance this index will get fragmented within a day. In the other hand we have an Add Remove Programs Index, this is part of your hardware inventory. By default, this is set to run every seven days, but we all know this setting is the one we always lower. So for example if you do Hardware Inventory every three days then this index is getting fragmented every three days as simple as that, so what do you need to do? Perform better maintenance around the processes you have for the system.

What is the solution here?

You can run the following query to identify the fragmented indexes:

1: SELECT s.name AS 'SchemaName', object_name(frag.object_id) AS 'TableName', si.name AS 'IndexName', frag.alloc_unit_type_desc AS 'AllocUnitType', frag.index_type_desc AS 'IndexType', frag.page_count AS 'PageCount', frag.index_depth AS 'IndexDepth', frag.avg_fragmentation_in_percent AS 'AvgFragmentationPercent', frag.fragment_count AS 'FragmentCount',

2: frag.avg_fragment_size_in_pages AS 'AvgFragmentPageCount', frag.object_id, frag.index_id, frag.partition_number

3: FROM sys.dm_db_index_physical_stats(DB_ID(),null,null,null,'LIMITED') frag

4: LEFT OUTER JOIN sys.indexes si (NOLOCK) ON si.object_id = frag.object_id AND si.index_id = frag.index_id

5: JOIN sys.objects o (NOLOCK) ON frag.object_id = o.object_id

6: JOIN sys.schemas AS s (NOLOCK) ON s.schema_id = o.schema_id

7: WHERE o.is_ms_shipped = 0

8: AND o.object_id not in (SELECT major_id FROM sys.extended_properties (NOLOCK) WHERE name = N'microsoft_database_tools_support')

9: AND frag.index_id <> 0

10: AND page_count > 5000

11: AND avg_fragmentation_in_percent > 10

12: ORDER BY frag.page_count DESC

How to prevent this 2 issues, the following will be outlined here as Solution #1.

Solution #1

This solution can assist you with Issue 1 and 2, so it will be outlined here as potential options.

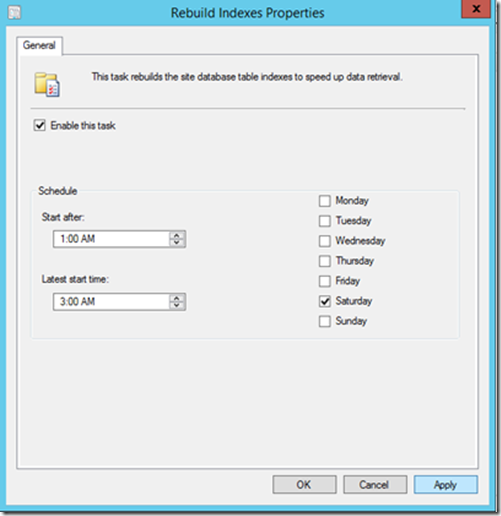

Option #1: First you can enable the ConfigMgr Rebuild Indexes at least once a week, the recommendation here is to avoid any overlap in maintenance from the other ones.

So in this example, I will be setting up this task to run on Saturdays around 1:00 AM Start Time and can't go over 3:00 AM.

Example of Rebuild Indexes Maintenance

Rebuild Indexes Example from a Site Server.

Option #2, in this example I will outline what I recommend doing in SQL Server, this is a maintenance task. If you want to learn more about this steps, make sure to take a look at our old blog post as was recommended in the Issue #1.

From that blog post, our recommended SQL maintenance plan should include the following.

- Reorganize Index

- Rebuild Index

- Update Statistics

- Clean Up History

So why Reorganize and Rebuild Index?

“The SQL Server Database Engine automatically maintains indexes whenever to insert, update, or delete operations are made to the underlying data. Over time, these modifications can cause the information in the index to become scattered in the database (fragmented). Fragmentation exists when indexes have pages in which the logical ordering, based on the key value, does not match the physical ordering inside the data file. Heavily fragmented indexes can degrade query performance and cause your application to respond slowly.”

Reorganize and Rebuild Indexes - MSDN - Microsoft. (n.d.). Retrieved from https://msdn.microsoft.com/en-us/library/ms189858.aspx

Why had Update Statistics?

“Statistics for query optimization are objects that contain statistical information about the distribution of values in one or more columns of a table or indexed view. The query optimizer uses these statistics to estimate the cardinality, or a number of rows, in the query result. These cardinality estimates enable the query optimizer to create a high-quality query plan. For example, the query optimizer could use cardinality estimates to choose the index to seek operator instead of the more resource-intensive index scan operator, and in doing so improve query performance.”

Statistics. (n.d.). Retrieved from https://msdn.microsoft.com/en-us/library/ms190397.aspx

There are other solutions out there to monitor and help with your indexing problem, this solution I outlined here is a basic solution, but it will do the work.

Avoid Overlap:

In the first Option we enable the Rebuild Index Task once a week on Saturdays from 1 AM to 3 AM, the SQL Maintenance Plan should not be run on the same day. This mean you can run the maintenance plan ever another day except Saturdays.

Issue #3

Database Auto Growth

The database Auto Growth is the setting everyone forgets about is critical to change the initial configuration of the databases once they are created. Let’s see the following example:

Database Name: CM_MMS

Logical File Name: CM_MMS_log

Next Growth Type: %

Next Growth Size in MB: 10073.45

In the below example, we can see that the subsequent growth of the database log file type is set to % and the Next Growth will be around 11 GB. We have two recommendations here; the first one is to ensure the Growth Type is set to MB instead of %, this will ensure you can specify the growth amount instead of the system making the calculation. The other recommendation here is around the size of this log file, we recommend a simple recovery model for ConfigMgr database so the log file does not need to growth that much since we only recover from last transaction and not to a point in time as you can do in the Full Recovery Model.

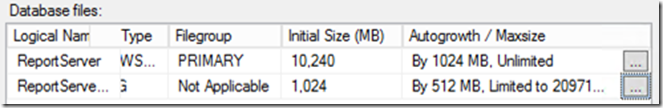

Example of Auto Growth:

Auto growth configuration for Data File and Log File.

Issue #4

SQL Server instance has only one tempdb data file while there is more than one scheduler in use.

This is a very important configuration; we will explain more details about this issue now so we can understand how important is it.

Why should we care?

“When the tempdb database is heavily used, SQL Server may experience contention when it tries to allocate pages. Depending on the degree of contention, this may cause queries and requests that involve tempdb to be unresponsive for short periods of time.”

Contention Cause. (n.d.). Retrieved from https://support.microsoft.com/en-us/kb/2154845

Here is an example of an SQL Server Configuration and How many Scheduler they have.

Scheduler Count: 8

Tempdb Data files count: 1

What do this mean is that if you have 8 logical processors you will add up to 8 tempdbs, you will start adding tempdb files until the contention if addressed. So my recommendation is to add 7 more tempdb files with the same initial size and the same auto growth to increase the performance of your SQL Server. In this case the tempdb of ConfigMgr is heavily used, so we are recommending implementing this changes to your system based on the logical processors you have. So if you have 4 logical processors just add 3 tempdb files, not 8, and if you have more than 8 logical processors don’t add more as there will be no real value after that.

In the same line of logical processors, there is a configuration that we can also configure and is the max degree of parallelism, here is a little bit more info about what this is and what to do.

“When SQL Server runs on a computer with more than one microprocessor or CPU, it detects the best degree of parallelism, that is, the number of processors employed to run a single statement, for each parallel plan execution. You can use the max degree of parallelism option to limit the number of processors to use in parallel plan execution. To enable the server to determine the maximum degree of parallelism, set this option to 0, the default value. Setting maximum degree of parallelism to 0 allows SQL Server to use all the available processors up to 64 processors. To suppress parallel plan generation, set max degree of parallelism to 1. Set the value to a number greater than 1 to restrict the maximum number of processors used by a single query execution. The maximum value for thedegree of parallelism setting is controlled by the edition of SQL Server, CPU type, and operating system. If a value greater than the number of available processors is specified, the actual number of available processors is used. If the computer has only one processor, the max degree of parallelism value is ignored.”

max degree of parallelism Option. (n.d.). Retrieved from https://technet.microsoft.com/en-us/library/ms181007(v=sql.105).aspx

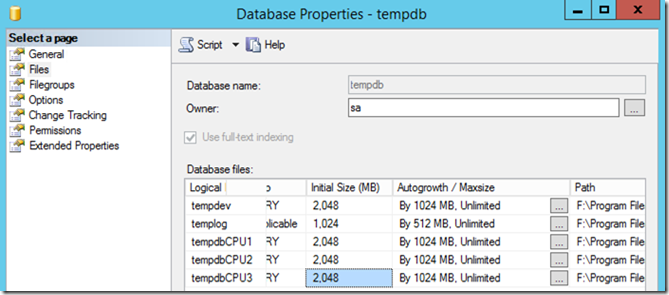

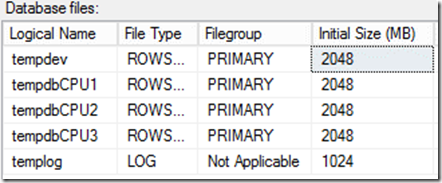

Example of TempDB Files for each scheduler:

In this example the Virtual Machine have 4 Virtual Processors, so I added 3 additional tempdb files. Each of the files have the same Initial Size of 2048 and the same auto growth of 1024. (recommend sizing templog the same size as the temp data files, use the same autogrowth)

NOTE: For SQL Server instances running Configuration Manager databases, start with 4 tempdb data files and increase to a maximum number of 8 (if needed).

Issue #5

SQL Server service is running under the Local System account

This is maybe one of the oldest best practice in SQL Server, especially on security of the database.

Let's take a look at the following example:

Server Name(s): NAC-CM01.ninja.ms

Service Account(s): LocalSystem

Recommendation:

“When choosing service accounts, consider the principle of least privilege. The service account should have exactly the privileges that it needs to do its job and no more privileges. You also need to consider account isolation; the service accounts should not only be different from one another, they should not be used by any other service on the same server. Do not grant additional permissions to the SQL Server service account or the service groups. Permissions will be granted through group membership or granted directly to a service SID, where a service SID is supported.”

Configure Windows Service Accounts and Permissions (n.d.). Retrieved from https://msdn.microsoft.com/en-us/library/ms143504.aspx#Use_startup_accounts

Also, Review the following documentation. Technical Reference for the Prerequisite Checker in ... (n.d.). Retrieved from https://technet.microsoft.com/en-us/library/hh368980.aspx

Example of a Service Account for SQL Service:

In this example, I only defined the service account for the SQL Server Engine.

Issue #6

The Windows 2008/R2 and later OS power saving setting may affect the CPU performance.

This configuration is one that can be missed many times, so hope with this explanation we can get the issue corrected in most of the SQL Servers that host ConfigMgr Database.

Why should we care?

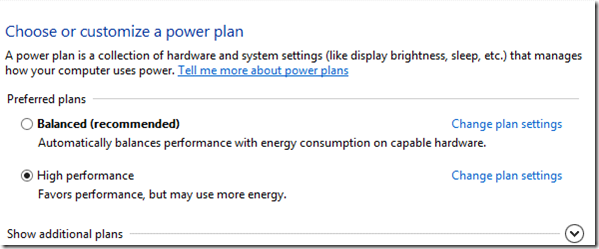

In Windows Server 2008 and later OS, the default power saving setting is set to Balanced, which means that components such as CPU and storage will be scaled back if the system is not busy. “In Slow Performance on Windows Server 2008 R2 when using the “Balanced” Power Plan some cases this may result in performance degradation for SQL Server.”

How to fix this?

You can manually change this value on the server to High Performance. However, if you have many servers to check, I suggest a look at the following script Setting the Power Plan with PowerShell – Wintellect DevCenter. (n.d.). Retrieved from https://www.wintellect.com/devcenter/jrobbins/setting-the-power-plan-with-powersh

Example of Power Options:

In this example the power options for the server is set to High Performance, and is how your power options should look like.

Issue #7

TempDB initial database Size may be too small

In the same line as Auto Growth and Schedulers, the initial size of the tempdb should not be a small size. By default the size of the tempdb will be around 1024 MB, and our recommendation will be that the size of your tempdb is more than that, many times I try to configure the size of all my tempdb very close to the maximum size of my drive to avoid any additional growth.

Why should we care?

“The size and physical placement of the tempdb database can affect the performance of a system. For example, if the size that is defined for tempdb is too small, part of the system-processing load may be taken up with autogrowing tempdb to the size required to support the workload every time you restart the instance of SQL Server. You can avoid this overhead by increasing the sizes of the tempdb data and log file.”

Optimizing tempdb Performance. (n.d.). Retrieved from https://technet.microsoft.com/en-us/library/ms175527(v=sql.105).aspx

Managing TempDB in SQL Server:TempDB Basics (cont..) - SQL ... (n.d.). Retrieved from https://blogs.msdn.com/b/sqlserverstorageengine/archive/2008/12/22/tempdb-basics-cont.aspx

Example of TempDB Size

Issue #8

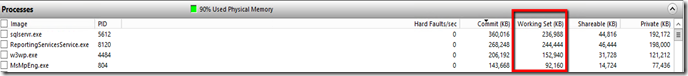

A Process Working Set Size Is Large

The working set of a program is a collection of those pages in its virtual address space that have been recently referenced. It includes both shared and private data. The shared data includes pages that contain all instructions your application executes, including those in your DLLs and the system DLLs. As the working set size increases, memory demand increases.

If there are other processes running on a Server with large working set sizes, this can reduce the availability of RAM for the rest of the machine, increase paging on the system and effectively decrease overall performance.

Example:

Process: sqlservr.exe Handle Count: 1942 Virtual Size (bytes): 42283147264

Process: w3wp.exe Handle Count: 1517 Virtual Size (bytes): 6367088640

On the following examples, we can see that SQL Server is using around 4GB of Memory and IIS is using around 6GB of Memory. If this server only has 16GB of RAM, this means that 10Gbs are being used by just this two process and only 6gbs are left for the other process tha the system may need. This creates a huge performance problem in the server since now it won't be able to handle the additional process in the site server.

Example of a Process Working Set

In this example, my top memory consumptions are SQL Server, Reporting Services and w3wp (IIS). However, you can see that they are not using all the memory of the server at this moment, since this is just for reference review your current working set and see how much memory your server has.

Issue #9

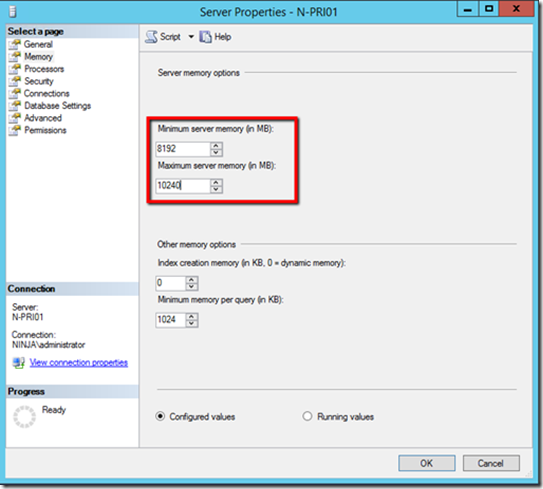

The SQL Server configuration setting, min server memory.

Since the beginning of System Center 2012 Configuration Manager one of the main recommendations that was introduced as part of the Pre Requirements Checker, is to validate if you have the correct minimum memory configured on SQL and the Maximum Memory.

Recommendation:

SQL Server memory |

When you use a database server that is co-located with the site server, limit the memory for SQL Server to 50 to 80 percent of the available addressable system memory. When you use a dedicated SQL Server, limit the memory for SQL Server to 80 to 90 percent of the available addressable system memory. Configuration Manager requires SQL Server to reserve a minimum of 8 gigabytes (GB) of memory in the buffer pool used by an instance of SQL Server for the central administration site and primary site and a minimum of 4 gigabytes (GB) for the secondary site. This memory is reserved by using the Minimum server memory setting under Server Memory Options and is configured by using SQL Server Management Studio. For more information about how to set a fixed amount of memory, see How to: Set a Fixed Amount of Memory (SQL Server Management Studio) |

Example of SQL Memory Configuration:

In this example, the Minimum Configuration for this server is 8GB, and the Maximum is 10GB, this should be a good configuration for a primary site with 16GB Minimum.

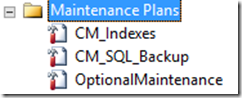

Issue #10

Do you have a Maintenance Plan in your SQL?

Based on my overall experience I have there is one common issue with many of the SQL Servers for ConfigMgr, and that problem is the lack of maintenance.

Why should we care?

Maintenance is one of the standard practice, we need a good maintenance over our SQL Server to ensure is performing at optimal conditions.

Solution:

Review the solution #1 on this blog post, review each of the 9 issues outlined in here and validate you do not have any of them. If you still not sure if this is a problem on your environment get with you Technical Account Manager or Microsoft Partner to ask the questions on how to get a healthy environment.

Example of Maintenance Plans on Primary Site:

There are 3 Maintenance Plans on this Site, one is for the indexes, one if for the backups and one is an on-demand job to perform additional maintenance task around the databases.

Note: as an alternative to these tasks, Steve Thompson Recommend the Ola Hallengren’s task as well. For more information, https://stevethompsonmvp.wordpress.com/2013/05/07/optimizing-configmgr-databases/

Special Thanks to Steve Thompson MVP –Configuration Manager for his contribution to this post.

Santos Martinez – SR PREMIER FIELD ENGINEER – Author

Brett Bennett – SR PREMIER FIELD ENGINEER – Contributor

Disclaimer: The information on this site is provided "AS IS" with no warranties, confers no rights, and is not supported by the authors or Microsoft Corporation. Use of any included script samples are subject to the terms specified in the Terms of Use