Software Defined Storage in Windows Server 2016 - The S2D story.

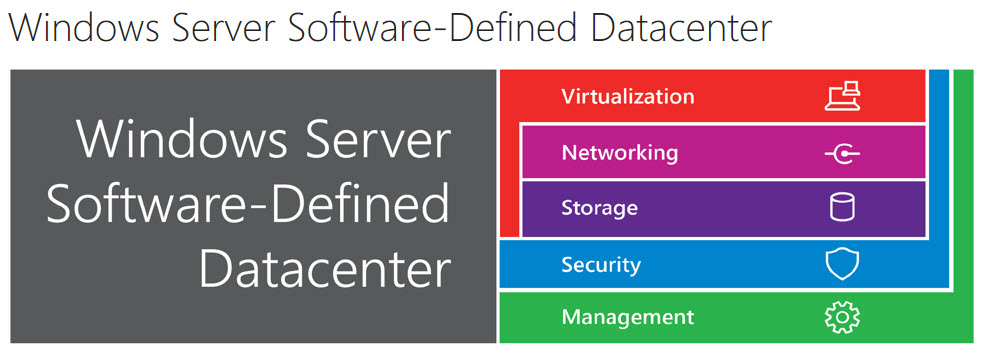

Software Defined Data Centre (SDDC)

In the world of computing there seem to be many buzzwords and phrases that pass in and out of favour. One of the latest to hit the world of on-premises servers is the Software Defined Data Centre and, underpinning this concept, Software Defined Storage and Software Defined Networking. It makes a lot of sense to break this down. Firstly, one definition of the Software Defined Data Centre is:

"Software-defined data center (SDDC) is the phrase used to refer to a data center where all infrastructure is virtualized and delivered as a service. Control of the data center is fully automated by software, meaning hardware configuration is maintained through intelligent software systems. This is in contrast to traditional data centers where the infrastructure is typically defined by hardware and devices.

Software-defined data centers are considered by many to be the next step in the evolution of virtualization and cloud computing as it provides a solution to support both legacy enterprise applications and new cloud computing services."

Courtesy of https://www.webopedia.com/TERM/S/software_defined_data_center_SDDC.html

Now I find that a complete mouthful and hard to digest in real terms. So this post, and my next on Software Defined Networking, are aimed at those who are baffled by the definition, concepts and deployment of these services and, more to the point, at those who would benefit from deploying them in their own environments. Even the diagrams are easier to understand.

The SDDC consists of five main elements as shown above. This series of posts will cover two of them: Software Defined Storage and Software Defined Networking.

First we will cover off Software Defined Storage.

So what is Software Defined Storage (SDS) then?

One definition of SDS is

"Storage infrastructure that is managed and automated by intelligent software as opposed to by the storage hardware itself. In this way, the pooled storage infrastructure resources in a software-defined storage (SDS) environment can be automatically and efficiently allocated to match the application needs of an enterprise."

Courtesy of https://www.webopedia.com/TERM/S/software-defined_storage_sds.html

Essentially, for us this means allowing the operating system software, in this case Windows Server 2016, to manage all the directly attached physical storage and share it across your infrastructure. This separates the physical storage media from the hardware and provides pools of shareable storage to clusters, workloads and servers. The tool best suited to this in a cluster environment is Storage Spaces Direct, or S2D. S2D is a new feature in Windows Server 2016 that builds on the Storage Spaces technology introduced in Windows Server 2012.

S2D is only available in the Datacenter edition of Windows Server 2016. The primary use for this technology is in failover clusters where multiple Virtual Machines (VMs) are deployed, which is the natural home of the Datacenter edition. Remember that a Datacenter licence in Windows Server 2016 covers 16 physical processor cores and an unlimited number of VMs.
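To make the core-based licensing arithmetic concrete, here is a rough sketch of how the number of core licences for one cluster node might be worked out. The minimums used (8 cores per physical processor, 16 per server) and the two-core pack size are my understanding of the published licensing rules and are assumptions as far as this post goes, so check the current Microsoft licensing documents before relying on them. The function names are hypothetical.

```python
from typing import Sequence

# Assumptions (not stated in this post): verify against Microsoft's licensing guide.
MIN_CORES_PER_PROCESSOR = 8   # assumed minimum licensed cores per physical processor
MIN_CORES_PER_SERVER = 16     # assumed minimum licensed cores per physical server
CORES_PER_PACK = 2            # assumed size of the smallest core licence pack


def core_licences_required(cores_per_processor: Sequence[int]) -> int:
    """Return the number of core licences needed for one physical server.

    cores_per_processor holds the physical core count of each processor.
    """
    if not cores_per_processor:
        raise ValueError("a server needs at least one processor")
    # Each processor is counted at its real core count or the assumed minimum.
    per_processor_total = sum(
        max(cores, MIN_CORES_PER_PROCESSOR) for cores in cores_per_processor
    )
    # The server as a whole is never licensed for fewer than the assumed minimum.
    return max(per_processor_total, MIN_CORES_PER_SERVER)


def two_core_packs_required(cores_per_processor: Sequence[int]) -> int:
    """Return how many two-core packs cover the required core licences."""
    licences = core_licences_required(cores_per_processor)
    return -(-licences // CORES_PER_PACK)  # ceiling division


if __name__ == "__main__":
    # A node with two 12-core processors.
    print(core_licences_required([12, 12]))
    print(two_core_packs_required([12, 12]))
```

Under these assumptions a node with two 8-core processors sits exactly on the 16-core base, and anything larger needs additional core licences.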

For an excellent overview of S2D, tune in to this Channel 9 video by Elden Christensen, a Principal PM Manager in the High Availability & Storage team. It is about 20 minutes long and really does help explain how to plan and design your storage hardware for S2D.

But what exactly is S2D?

Well, with Storage Spaces in Windows Server it was possible to create volumes on top of pools of storage made up of a number of different types and sizes of disk. The server would manage the resilience and redundancy of the data. This solved a lot of previously expensive problems in providing highly available storage and reduced the need for third-party storage solutions. With Windows Server 2016 Datacenter edition, this pool of storage can now be created across a number of physical servers (or nodes) within a failover cluster. The use of the Cluster Shared Volumes (CSV) file system and the Resilient File System (ReFS) ensures that each node sees the storage and believes it to belong to itself. This way the storage is shared across the whole cluster.

The number of disks and the type of disks are important and it makes sense to have each node as close in disk inventory as possible.
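As an illustration of what "as close in disk inventory as possible" means in practice, the sketch below compares each node's disks against a chosen reference node and reports any differences. It is a planning aid only, not an S2D tool: the node names, the `(media type, size in GB)` representation and the function name are all made up for this example.

```python
from collections import Counter
from typing import Dict, List, Tuple

Disk = Tuple[str, int]  # hypothetical representation: (media type, size in GB)


def inventory_differences(
    nodes: Dict[str, List[Disk]], reference: str
) -> Dict[str, Dict[str, Dict[Disk, int]]]:
    """Report, per node, which disks are missing or extra versus the reference node.

    Nodes whose inventory matches the reference exactly are left out of the result.
    """
    reference_inventory = Counter(nodes[reference])
    differences = {}
    for name, disks in nodes.items():
        node_inventory = Counter(disks)
        if node_inventory != reference_inventory:
            differences[name] = {
                # Counter subtraction keeps only positive counts.
                "missing": dict(reference_inventory - node_inventory),
                "extra": dict(node_inventory - reference_inventory),
            }
    return differences


if __name__ == "__main__":
    cluster = {
        "node1": [("SSD", 800)] * 2 + [("HDD", 4000)] * 4,
        "node2": [("SSD", 800)] * 2 + [("HDD", 4000)] * 4,
        "node3": [("SSD", 800)] * 2 + [("HDD", 4000)] * 3,
    }
    # node3 is one 4000 GB HDD short of node1.
    print(inventory_differences(cluster, reference="node1"))
```

An empty result means every node matches the reference, which is the position you want to be in before building the pool.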

S2D carves up your newly created storage pool into two distinct tiers of storage.

These are the performance tier and the capacity tier. Where the disks are a mix of spinning and solid state, the fastest disks are used for the performance tier. S2D can manage NVMe and SSD drives as well as HDDs.
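The "fastest disks go to the performance tier" rule can be sketched in a few lines. This is a deliberately simplified model of the idea, not how S2D itself makes the decision. The speed ordering (NVMe, then SSD, then HDD), the function name and the treatment of a single media type are assumptions made for illustration.

```python
from typing import Dict, Iterable, List

# Assumed ordering, fastest first. Real S2D behaviour is more involved than this.
SPEED_ORDER = ["NVMe", "SSD", "HDD"]


def assign_tiers(media_types: Iterable[str]) -> Dict[str, List[str]]:
    """Split the media types present into a performance tier and a capacity tier.

    The fastest type present becomes the performance tier and everything
    slower becomes the capacity tier. With only one type present there is
    nothing faster to promote, so it is all treated as capacity.
    """
    present_set = set(media_types)
    unknown = present_set - set(SPEED_ORDER)
    if unknown:
        raise ValueError(f"unknown media types: {sorted(unknown)}")
    if not present_set:
        raise ValueError("no disks supplied")
    # Keep the assumed speed ordering, fastest first.
    present = [media for media in SPEED_ORDER if media in present_set]
    if len(present) == 1:
        return {"performance": [], "capacity": present}
    return {"performance": present[:1], "capacity": present[1:]}


if __name__ == "__main__":
    print(assign_tiers(["HDD", "SSD", "HDD", "SSD"]))
    print(assign_tiers(["NVMe", "SSD", "HDD"]))
```

So a node with SSDs and HDDs would, in this model, use the SSDs for performance and the HDDs for capacity.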

What does all this mean?

The bottom line is that there is no longer any need to invest in expensive Storage Area Network (SAN) technology, or to pay for the implementation, support and maintenance of these traditional solutions. It is now possible to have resilient shared storage built into the cluster nodes and consisting of standard commodity disks.

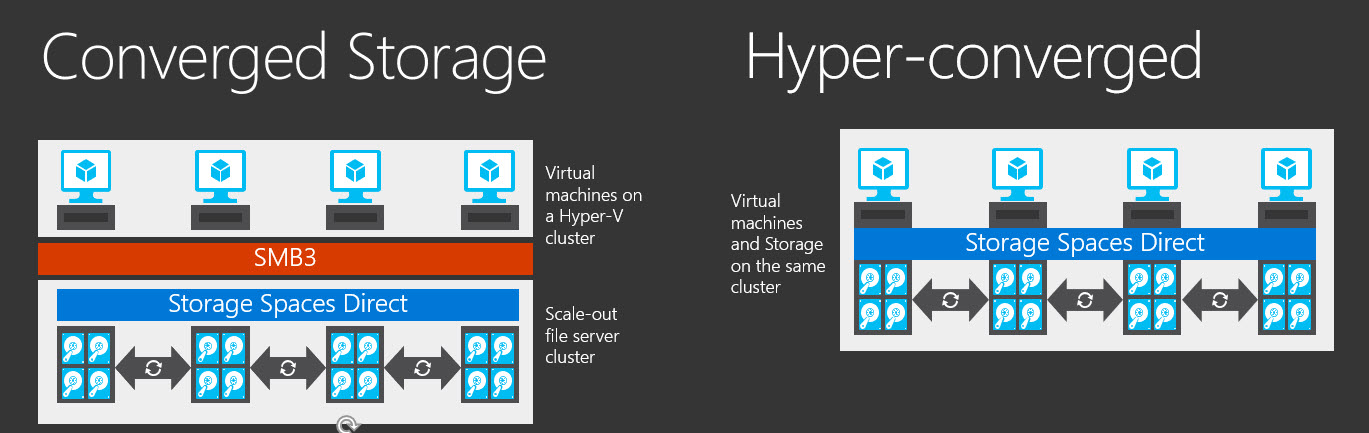

There are two types of S2D deployment, converged and hyper-converged, as shown in the graphic above. There are use cases and benefits to each.

With hyper-converged systems, storage and compute resources scale together, which reduces the need for additional hardware purchases and software licensing. With converged systems, the compute cluster and the storage cluster can be scaled independently.

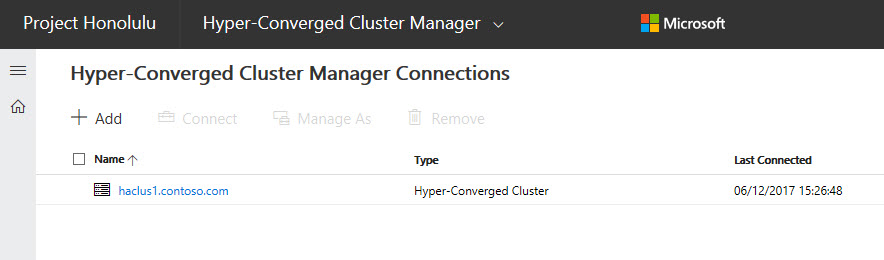

Storage Spaces Direct can be implemented and controlled with PowerShell and System Center Virtual Machine Manager (SCVMM). The new Project Honolulu server management solution also has a hyper-converged cluster tab to allow for management of S2D implementations.

The deployment of S2D through PowerShell can be evaluated by using the new Microsoft Hands on Labs offering here. (BONUS: this lab also shows PowerShell JEA.) This is a free resource with over 250 real time labs to experiment with.

There are a number of Microsoft Partners all working to provide S2D in pre-built appliances. These include Dell, HPE, Fujitsu and Lenovo (and others). Check out the Server Guy WSSD blog post here.

The technology behind S2D is incredibly complex and beyond the scope of this introductory post. It does require some fairly high-end networking technology, such as RDMA network cards and very fast Ethernet connectivity. Once deployed, we are left with a software-defined, shared-nothing storage facility. There are no special cables or equipment controlling the resulting storage.

The benefits include high-speed, self-repairing, self-tuning storage capable of millions of IOPS and throughput of many gigabytes per second. The more nodes in the cluster, the higher the performance and resilience.

For a quick five-minute video showing the deployment and use of S2D, see the one that Cosmos Darwin, a Program Manager in the S2D team, has posted on YouTube.

The result is that the storage provided can be made available across your environment as a highly available, resilient pool of shared storage without spending large amounts of money on proprietary storage solutions. This allows all your workloads to take advantage of this storage simply by using internal hardware, all controlled by the server operating system.

Microsoft recommend taking advantage of the pre-built, configured and tested partner devices to maximise the benefits, but that's no reason not to build a test rig or try the technology out in Microsoft Azure virtual machines first.

Next time we will cover Software Defined Networking.