Storage, High Availability and Site Resilience in Exchange Server 2013, Part 2

Microsoft Exchange Server 2013 continues to innovate in the areas of storage, high availability, and site resilience. In this three-part blog series, I’ll describe the significant improvements in Exchange 2013 related to these three areas. Part 1 focuses on the storage improvements that we’ve made. Part 2 focuses on high availability. And Part 3 will focus on site resilience.

Exchange 2013 continues to use DAGs and mailbox database copies, along with other features such as Single Item Recovery, Retention Policies, lagged database copies, etc., to provide Exchange Native Data Protection. However, the high availability platform, the Exchange Information Store, the best copy selection process, the Extensible Storage Engine (ESE), and internal monitoring have been significantly modified and enhanced to provide even greater availability and easier management, and to reduce costs.

With respect to high availability, some of these enhancements include:

- Managed Availability

- A New Best Copy Selection process

- Maintenance Mode

- DAG auto-network configuration

Managed Availability

Managed availability is the integration of built-in monitoring and recovery actions with Exchange’s built-in high availability platform. It is designed to detect and recover from problems as soon as they occur and are discovered by the system. Unlike previous external monitoring solutions for Exchange, managed availability does not try to identify or communicate the root cause of an issue. It is instead focused on recovery aspects that address three key areas of the user experience:

- Availability – can users access the service?

- Latency – how is the experience for users?

- Errors – are users able to accomplish what they want to?

The new architecture in Exchange 2013 makes each Exchange server an “island” where services on that island only service the active databases located on that server. The architectural changes in Exchange 2013 require a new approach to the availability model used by Exchange. The Mailbox and Client Access server architecture implies that any Mailbox server with an active database is in production for all services, including all protocol services. This fundamentally changes the model used to manage the protocol services.

Managed availability was conceived to address this change and to provide a native health monitoring and recovery solution. The integration of the building block architecture into a unified framework provides a powerful capability to detect failures and recover from them. Managed availability moves away from monitoring individual, separate slices of the system to monitoring the end-to-end user experience, and protecting the end user’s experience through recovery-oriented computing.

Managed availability is an internal process that runs on every Exchange 2013 server. It is implemented in the form of two processes:

- Exchange Health Manager Service (MSExchangeHMHost.exe) - A controller process that is used to manage worker processes. It is used to build, execute, and start and stop the worker process, as needed. It is also used to recover the worker process in case that process crashes, to prevent the worker process from being a single point of failure.

- Exchange Health Manager Worker process (MSExchangeHMWorker.exe) - A worker process that is responsible for performing the runtime tasks.

Managed availability uses persistent storage to perform its functions:

- The Front-End Transport service uses XML configuration files to initialize the work item definitions during startup of its worker processes.

- Other components have their configuration information stored in code.

- The Windows registry is used to store runtime data, such as bookmarks.

- The Windows Crimson Channel event log infrastructure is used to store the work item results.

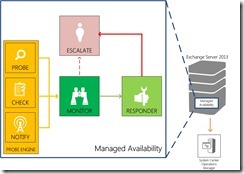

As illustrated in the following drawing, managed availability includes three main asynchronous components that are constantly doing work: the probe engine, the monitor, and the responder engine.

Figure 1 - Managed Availability in Exchange Server 2013

The probe engine measures and collects data from the servers and passes it to the monitor. The monitor contains the business logic used by the system to determine if the server is healthy, based on the pre-defined definitions of health and the collected data. Basically, the monitor is looking for patterns in the collected measurements, and then it makes a decision on whether or not something is healthy. If a server is considered unhealthy, the responder engine handles recovery actions. Generally, when a particular component becomes unhealthy, the first action is to try to recover just that component. If the recovery actions are unsuccessful, the system escalates the issue by notifying an administrator via event log entries.
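To make the flow from probe to monitor to responder a bit more concrete, here is a minimal Python sketch. It is a conceptual model only, not how Exchange implements it: the `Monitor` class, the `run_cycle` function, and the "N consecutive failed probes" rule are all hypothetical names and assumptions chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Monitor:
    """Hypothetical monitor: unhealthy after N consecutive failed probe results."""
    failure_threshold: int
    results: List[bool] = field(default_factory=list)

    def record(self, probe_succeeded: bool) -> None:
        self.results.append(probe_succeeded)

    def is_healthy(self) -> bool:
        recent = self.results[-self.failure_threshold:]
        # Unhealthy only when we have N recent results and every one failed.
        return not (len(recent) == self.failure_threshold and not any(recent))


def run_cycle(
    probe: Callable[[], bool],
    monitor: Monitor,
    responders: List[Tuple[str, Callable[[], bool]]],
) -> str:
    """Run one probe, update the monitor, and try responders in order if unhealthy."""
    monitor.record(probe())
    if monitor.is_healthy():
        return "healthy"
    for name, recover in responders:
        # Try the least disruptive recovery action first.
        if recover():
            return f"recovered by {name}"
    # No recovery action worked, so hand the issue to an administrator.
    return "escalated"
```

The ordered responder list mirrors the idea that the system first tries to recover just the failing component and escalates only when the recovery actions do not succeed.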

The probe engine contains probes, checks and notification logic. Probes are synthetic transactions performed by the system to test the end-to-end user experience. Checks are the infrastructure that performs the collection of performance data, including user traffic, and measures the collected data against thresholds that are set to determine spikes in user failures. This enables the checks infrastructure to become aware of when users are experiencing issues. Finally, the notification logic enables the system to take action immediately based on a critical event, without having to wait for the results of the data collected by a probe. These are typically exceptions or conditions that can be detected and recognized without a large sample set.

Monitors query the data collected by probes to determine if action needs to be taken based on a pre-defined rule set. Depending on the rule, or the nature of the issue, a monitor can either initiate a responder or escalate the issue to a human via an event log entry. In addition, monitors define how long after a failure a responder is executed, as well as the workflow of the recovery action. Monitors have various states. From a system state perspective, monitors have two states:

- Healthy - The monitor is operating properly and all collected metrics are within normal operating parameters.

- Unhealthy - The monitor is not healthy and has either initiated recovery through a responder or notified an administrator through escalation.

From an administrative perspective, monitors have additional states that will appear in PowerShell:

- Degraded - When a monitor is in an unhealthy state for X seconds, it is considered Degraded. If a monitor is unhealthy for more than Y seconds, it is considered Unhealthy. Each component defines its time intervals.

- Disabled - The monitor has been explicitly disabled by an administrator.

- Unavailable - The Microsoft Exchange Health service periodically queries each monitor for its state. If it does not get a response to the query, the monitor state becomes Unavailable.

- Repairing - Set by an administrator to indicate to the system that corrective action is in process by a human, which allows the system and humans to differentiate between other failures that may occur at the same time corrective action is being taken (such as a database copy reseed operation).
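The states above can be sketched as a small function. This is an illustrative model under several assumptions: it uses a single hypothetical `unhealthy_after` threshold to separate Degraded from Unhealthy (each component defines its own intervals), and the precedence given to Disabled, Repairing and Unavailable is my own guess for the sake of the example, not documented behavior.

```python
from enum import Enum
from typing import Optional


class MonitorState(Enum):
    HEALTHY = "Healthy"
    DEGRADED = "Degraded"
    UNHEALTHY = "Unhealthy"
    DISABLED = "Disabled"
    UNAVAILABLE = "Unavailable"
    REPAIRING = "Repairing"


def reported_state(
    failing_for: Optional[float],   # seconds in a failed state, None if not failing
    unhealthy_after: float,         # hypothetical per-component threshold in seconds
    disabled: bool = False,         # explicitly disabled by an administrator
    repairing: bool = False,        # administrator flagged that repair is in progress
    responded: bool = True,         # did the monitor answer the health service query?
) -> MonitorState:
    # Administrative states take precedence in this sketch (an assumption).
    if disabled:
        return MonitorState.DISABLED
    if repairing:
        return MonitorState.REPAIRING
    if not responded:
        return MonitorState.UNAVAILABLE
    if failing_for is None:
        return MonitorState.HEALTHY
    # Failing briefly is Degraded; failing past the threshold is Unhealthy.
    if failing_for > unhealthy_after:
        return MonitorState.UNHEALTHY
    return MonitorState.DEGRADED
```

For example, with a hypothetical 60-second threshold, a monitor that has been failing for 10 seconds would report Degraded, and one failing for 90 seconds would report Unhealthy.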

Responders are responsible for executing a response to an alert generated by a monitor because a monitor has become Unhealthy. There are many different types of responders that are designed to address the particular nature of the alert by performing recovery actions in a specific sequence. For example, there are responders that terminate and restart services or cycle IIS application pools (the two that will likely be the most commonly triggered in most environments), responders that perform a server failover or forcibly reboot the server, responders that take servers in and out of service, and responders that escalate issues to administrators by generating additional alerts.

Probes, responders and monitors have default thresholds and parameters that can be overridden and configured by an administrator. This allows you to fine-tune Managed Availability for your environment, and it can be used to manage system behavior during unplanned outages or emergency situations. These overrides can be applied to the entire environment, or scoped to specific servers or specific versions of the server. In addition, they can be configured to apply for a specific duration (e.g., while replacing failed equipment). Overrides that are applied to specific servers are stored in the registry of the servers to which they are applied. Overrides that apply to the entire environment are stored in Active Directory.

The probes, monitors and responders used by Managed Availability are grouped together into health sets. Health sets are used to determine whether or not a given system component is healthy. The overall status of a health set is an aggregate of the health of the monitors in the health set, with the least healthy status listed in the evaluation. Health sets are then grouped into health groups that are exposed in System Center Operations Manager (SCOM) for reporting purposes. Instead of SCOM or an external engine performing recovery actions, Managed Availability performs the recovery actions. SCOM is simply used as a portal to see health information related to the environment, and to receive alerts when an escalation responder is invoked.
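As a rough illustration of how a health set's status is an aggregate with the least healthy monitor winning, consider this small Python sketch. The `SEVERITY` ordering and the `health_set_status` function are hypothetical, and the sketch only covers the Healthy, Degraded and Unhealthy states; how the administrative states rank is not modeled here.

```python
from typing import Iterable

# Ordered from most healthy to least healthy (illustrative ordering).
SEVERITY = ["Healthy", "Degraded", "Unhealthy"]


def health_set_status(monitor_states: Iterable[str]) -> str:
    """Return the least healthy state among the monitors in a health set."""
    # An empty health set is treated as Healthy in this sketch (an assumption).
    return max(monitor_states, key=SEVERITY.index, default="Healthy")
```

So a health set with ten Healthy monitors and one Unhealthy monitor would be reported as Unhealthy.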

For additional information on Managed Availability, see Ross Smith IV’s blog post.

Managed Availability Failovers

Failovers are the primary recovery mechanism for the Mailbox server and mailbox databases. This has been the case for the past few releases, and it continues to be the case with Exchange 2013. However, the list of failures that can trigger failovers has been expanded as a result of the introduction of Managed Availability. Managed Availability-driven failovers are a new form of recovery from some detected failures. These are failures detected by Managed Availability probes and monitors via a synthetic operation or as a result of live data that is being monitored.

While many failures can be resolved through other means (for example, restarting a service or an IIS application pool), some require some form of failover to provide corrective action. Managed Availability-driven failovers come in two forms: server and database. Protocol failures (for example, a failure affecting OWA) can trigger a server-wide response and initiate a server failover. Store-detected per-database failures can trigger a database failover.

When failovers do occur, internal throttling mechanisms across time and across the DAG are used to prevent database activation storms during certain types of failures (for example, when a protocol is repeatedly crashing).
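The actual throttling logic is internal to Exchange and is not described in this post, but the general idea of limiting failovers "across time and across the DAG" can be sketched with a sliding window. Everything in this Python example is an assumption made for illustration: the `FailoverThrottle` class, the per-server and per-DAG limits, and the window length are hypothetical.

```python
from collections import deque
from typing import Deque, Tuple


class FailoverThrottle:
    """Hypothetical sliding-window throttle for responder-driven failovers."""

    def __init__(self, window_seconds: float, max_per_server: int, max_per_dag: int):
        self.window = window_seconds
        self.max_per_server = max_per_server
        self.max_per_dag = max_per_dag
        self._events: Deque[Tuple[float, str]] = deque()  # (timestamp, server)

    def allow(self, server: str, now: float) -> bool:
        # Forget failovers that have aged out of the window.
        while self._events and now - self._events[0][0] >= self.window:
            self._events.popleft()
        per_server = sum(1 for _, s in self._events if s == server)
        # Refuse if this server, or the DAG as a whole, has hit its limit.
        if per_server >= self.max_per_server or len(self._events) >= self.max_per_dag:
            return False
        self._events.append((now, server))
        return True
```

With a limit like this, a protocol that crashes repeatedly on one server cannot keep bouncing databases around the DAG; once the limit is reached, further failover requests are refused until earlier ones age out of the window.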

In addition to these triggers, Active Manager also uses the information gathered by Managed Availability to determine where active copies should be moved to, based on a variety of health criteria regarding the passive copies and the servers hosting them. This has resulted in significant changes to the best copy selection algorithm in Exchange 2013, as described below.

Best Copy and Server Selection

Best copy selection (BCS) is an internal Active Manager algorithm used to select the “best” copy of a specific database to activate based on a list of inputs that include potential copies and their status. In Exchange 2010, four criteria are used to evaluate potential copies and determine which one to try to activate first:

- Copy queue length

- Replay queue length

- Database copy status

- Content index status

One of the architectural tenets of Exchange 2013 is that every server is an “island.” Among other things, this means that access to a database is provided through the protocols running on the server hosting the active copy. As a result of this architectural change, using only the above four criteria is not good enough for Exchange 2013 because protocol health is not considered. The new architecture in Exchange 2013 requires a much smarter selection of the copy being activated, and it begins with the premise that no failover operation should ever make the health worse.

Significant changes have been made to the BCS process to include Managed Availability health set information and to further tune the process. Exchange 2013 uses the database copy and content index health status information used by Exchange 2010, but Exchange 2013 also evaluates the health of the entire protocol stack using Managed Availability health sets. It evaluates the health of the triggering functionality on the potential target systems (e.g., if OWA failed on the current active copy, how healthy is OWA on the servers hosting passive copies), the health of the servers hosting passive copies, and the priority of the protocol health set. All health sets have a priority of either low, medium, high or critical.

As a result of these significant changes and new behaviors, we’re calling the process something slightly different in Exchange 2013: Best Copy and Server Selection (BCSS).

There are four additional checks performed during BCSS (listed in the order in which they are performed):

- All Healthy - checks for a server hosting a copy of the affected database that has all monitoring components in a healthy state.

- Up to Normal Healthy - checks for a server hosting a copy of the affected database that has all monitoring components with Normal priority in a healthy state.

- All Better than Source - checks for a server hosting a copy of the affected database that has monitoring components in a state that is better than the current server hosting the affected copy.

- Same as Source - checks for a server hosting a copy of the affected database that has monitoring components in a state that is the same as the current server hosting the affected copy.

If BCSS is invoked as a result of a failover that is triggered by a monitoring component (e.g., via a failover responder), an additional mandatory constraint is enforced where the target server's component health must be better than the server on which the failover occurred. For example, if a failure of Outlook Web App (OWA) triggers a failover via a Failover responder, then BCSS must select a server hosting a copy of the affected database on which OWA is healthy.
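The order of the four checks, plus the extra constraint for responder-driven failovers, can be sketched as follows. This is a simplified model and not the Active Manager algorithm: it ignores the Exchange 2010 criteria (queue lengths, copy status, content index status), it compares servers by a hypothetical count of unhealthy components, and the `select_target` function, the `Health` type, and the "Normal" priority label (taken from the wording of the second check) are all illustrative.

```python
from typing import Dict, Optional, Tuple

# Per-server health: component name -> (priority label, is_healthy)
Health = Dict[str, Tuple[str, bool]]


def unhealthy_count(health: Health) -> int:
    """Hypothetical measure of a server's state: number of unhealthy components."""
    return sum(1 for _, ok in health.values() if not ok)


def select_target(
    source: Health,
    candidates: Dict[str, Health],
    trigger: Optional[str] = None,
) -> Optional[str]:
    """Pick a target server using the four checks, in order."""
    if trigger is not None:
        # Responder-driven failover: the triggering component must be
        # healthy on the target (it failed on the source).
        candidates = {
            name: h for name, h in candidates.items()
            if h.get(trigger, ("", False))[1]
        }
    checks = [
        # All Healthy
        lambda h: all(ok for _, ok in h.values()),
        # Up to Normal Healthy
        lambda h: all(ok for prio, ok in h.values() if prio == "Normal"),
        # All Better than Source
        lambda h: unhealthy_count(h) < unhealthy_count(source),
        # Same as Source
        lambda h: unhealthy_count(h) == unhealthy_count(source),
    ]
    for check in checks:
        for name, health in candidates.items():
            if check(health):
                return name
    return None  # no candidate passes any check
```

In this model, if OWA fails on the active server and the only other copy is on a server where OWA is also unhealthy, `select_target(..., trigger="OWA")` returns `None`, reflecting the premise that a failover should never make health worse.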

TIP: To see what Managed Availability is reporting for the health and health sets on your servers, you can use the Get-ServerHealth and Get-HealthReport cmdlets. The way these cmdlets are designed, the obvious way to get a health summary is to simply run get-serverhealth | get-healthreport. However, these cmdlets are capable of returning so much data that this simplest approach can often time out or error out. So when reviewing the status of health sets, we recommend that you filter Get-ServerHealth to return a single health set (e.g., get-serverhealth | ? {$_.HealthSet -eq "AD"} | get-healthreport).

Maintenance Mode

Maintenance mode is new functionality that enables you to designate a server as in-service or out-of-service by using the Set-ServerComponentState cmdlet. This is significantly different from StartDagServerMaintenance.ps1, the script we introduced in Exchange 2010, for preparing a server for maintenance.

Maintenance mode is used to determine whether or not the server should be available to service clients. In addition to marking a Mailbox server or a Client Access server as out-of-service, it also records information to prevent client workloads from occurring on the system. For example, if you want to perform maintenance on a Mailbox server, you would perform a server switchover and then use the Set-ServerComponentState cmdlet to put the Mailbox server into maintenance mode, which prevents database copies from being activated on the server while it is in maintenance mode.

When you put a Mailbox server into maintenance mode, it means that there are no active database copies on the server and that Transport services are offline. When you take a CAS out of service, CAS will stop acknowledging load balancer heartbeats and all proxy services will be offline.

DAG Network Autoconfig

As you may have gathered from previous Exchange releases, we have been pushing to make it easier and easier to have a highly available messaging system; to lower the bar for adoption, if you will. For example, in previous versions of Exchange, the system automatically installs and configures Windows Failover Clustering when needed. In Exchange 2013, we’ve taken that behavior even further by providing default behavior where DAG networks are managed for you automatically.

As with Exchange 2010, there are some tasks that the administrator needs to perform ahead of time; namely, the installation and configuration of network interface cards (NICs). It is still important that each NIC be configured based on its intended use as either a MAPI or Replication network (for example, the MAPI network is registered in DNS, and the Replication network is not; the MAPI network has a default gateway in a multi-subnet environment, and the Replication network does not). As long as the configuration settings are correct, DAG networks become much simpler to manage.

In addition, in a multi-subnet DAG, it is no longer necessary to collapse DAG networks in Exchange 2013. Exchange now does that for you automatically, provided that the above-mentioned configuration settings are correct.

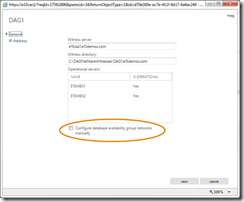

By default, all DAG networks are automatically managed by the system. To view DAG network settings in auto-config mode, you’ll need to use the Exchange Management Shell. You can, however, use the Exchange Administration Center (EAC) to configure manual control over DAG networks (see the following figure), which enables you to view, edit, create and remove DAG networks using EAC or the Shell.

You can also enable manual control by using Set-DatabaseAvailabilityGroup <DAGName> -ManualDagNetworkConfiguration:$True. Once you enable manual control, you can perform some common tasks, such as:

- Add or remove DAG networks, as needed

- Configure the DAG to ignore iSCSI or dedicated backup networks, if any

- Disable replication on the MAPI network (optionally)