Refreshing State Messages in System Center Configuration Manager 2012

Recently, I worked with a customer who reported that his clients were not reporting accurate data in their ConfigMgr compliance reports. Though the clients showed the correct settings when we looked at them directly, these settings were not reflected correctly in the compliance reports. This is an issue that has been dealt with in the past and there is a fair amount on the internet about how to handle it. In this post, I want to add to that information a bit and hopefully answer a few questions that others might have when attempting to resolve this issue.

Compliance Reporting

First of all, let’s talk briefly about Compliance reporting in ConfigMgr 2012. I include this only for those who are unsure what I’m talking about when I use this term. If you're familiar with Compliance reporting, feel free to skip to the next section.

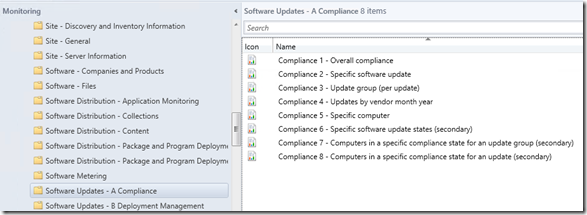

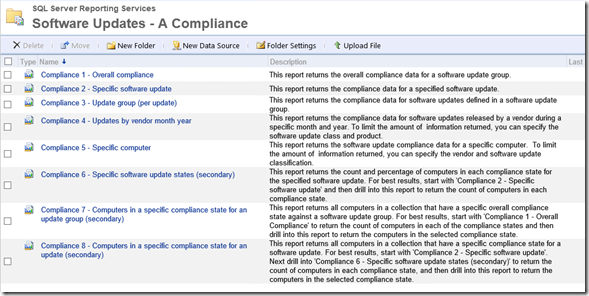

For those of you who are still reading this section, ConfigMgr comes with a fairly extensive set of pre-configured reports to provide information about the state of your infrastructure. There are different types of reports to become familiar with, but the ones we care about here can be found under Monitoring\Reports\Software Updates A - Compliance as shown below:

If you don’t see this in your environment, it’s likely you are viewing a Site Server that is not set up with the Reporting Services Point role. If this role is set up on another Site Server in your environment, target the Admin Console there instead. If not, the following article goes through the process of setting up Reporting Services in your ConfigMgr hierarchy:

Configuring Reporting in Configuration Manager

Once reporting is set up correctly, it’s trivially easy to select one of the Compliance reports, fill out the requested information, and get the desired results. Sometimes, however, the results in the report might not reflect what you know to be the case in your environment. As an example, suppose that you have devices that are candidates for Office 2013 Service Pack 1. The Compliance reports should show this to help you plan for rolling out this update as needed.

In cases like this, the very first thing to do is determine whether the issue is restricted to reporting when viewed through ConfigMgr. To verify this, the following test can be done:

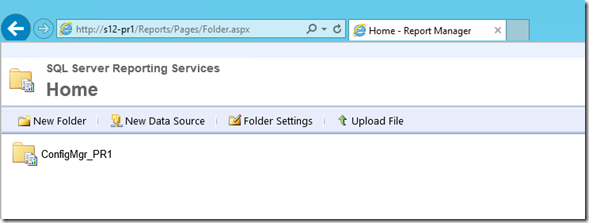

Open Internet Explorer on the Reporting Services Point and navigate to https://<servername>/reports as shown below:

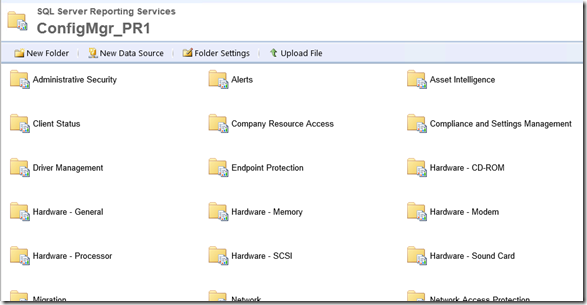

Select the parent folder (in my lab, ConfigMgr_PR1) to see a complete list of available reports:

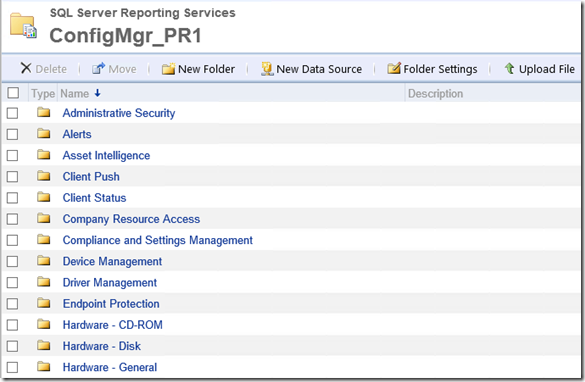

If so desired, you can choose the ‘Details View’ on the right-hand side. I find this to be helpful when navigating reports in Internet Explorer, though it’s only my preference:

Select the folder containing the report of interest, which in our case is Software Updates A – Compliance, and choose the report of interest:

By selecting the individual report, it is possible to see whether the discrepancy noted in ConfigMgr is also showing when viewed through the native reporting view. If the discrepancy is limited to ConfigMgr, then other methods of troubleshooting may be necessary. But in our case, the same information should be showing regardless of how we view the report.

The Reason for the Problem

Reports in ConfigMgr can only report on what they know about. The way ConfigMgr determines what should be in a report is through state messages sent by its managed devices. The state messaging system is fairly complicated, though an excellent blog that goes into this in some depth can be found here (full disclosure, this blog is about SCCM 2007, though the process is very similar).

The idea is that for some reason or other (network hiccup, etc.) the state messages relating to a particular compliance setting did not make it to the Site Server, which has resulted in the Compliance report showing inaccurate information. By default, state messages should be resent every 30 days from all managed devices so any issues detected should resolve themselves over time. But in practice, I have found that sometimes it’s necessary to give the state message system a jump start in order to resolve the reporting discrepancy.

The Traditional Solution

The idea behind resolving the reporting discrepancy is fairly straightforward. Somehow, we need to tell the managed devices which are showing incorrect information to resend their state messages and hopefully resolve the issue. Traditionally, this has been done through a script available via MSDN:

How to Refresh the Compliance State

In reading the article, we can see that it was written for SCCM 2007 but I can personally attest that the script it contains (at least the VBScript, since I have not tested the C# code) still works in ConfigMgr 2012 R2. As the article also explains, the purpose of the script is as follows:

“In Microsoft System Center Configuration Manager 2007, you refresh the client compliance state by creating an instance of the UpdatesStore COM class and by using the RefreshServerComplianceState method. This causes the client to resend a full compliance report to the Configuration Manager 2007 server.”

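The script which is typically used is as follows (taken from the MSDN article, with the original comments replaced by short ones). Note the final line: a Sub that is only defined never executes, so the call is needed when the file is run on its own with cscript:

' Ask the ConfigMgr client to resend its full update compliance state
Sub RefreshServerComplianceState()

dim newCCMUpdatesStore

' Create the client's UpdatesStore COM object
set newCCMUpdatesStore = CreateObject ("Microsoft.CCM.UpdatesStore")

newCCMUpdatesStore.RefreshServerComplianceState

wscript.echo "Ran RefreshServerComplianceState."

End Sub

' Call the Sub so the script does something when run directly
RefreshServerComplianceState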

To run it manually, all that’s needed is to give the script a name, save it, then open a command prompt as an Administrator and type:

CSCRIPT <PATH>

In my lab, this looks like the following:

CSCRIPT C:\SCRIPTS\VBSCRIPT\RESYNCSTATE.VBS

NOTE: Depending on the architecture of the managed device, you will want to make sure you are running the correct version of cscript. There is a version under both the System32 and SysWOW64 directories. In my testing, I needed the version of cscript in System32, but if this causes problems test also with the version in the SysWOW64 directory.

The VBScript is very short and easy to use (and, indeed, can be used in testing scenarios when we want to manually send state messages from a managed device). And in many cases, this is the final solution which leads to case closure. In some cases, however, there is still work to be done.

The Problem – Packaging the VBScript for ConfigMgr

Maybe some of you have figured out how to get this VBScript to run as a package in ConfigMgr. But while there is a good bit of information on how this script can solve the reporting discrepancy, there is virtually nothing published on how to package it in ConfigMgr so that it runs automatically on managed devices. And if you have hundreds or even thousands of devices that need to run this script, doing so manually on every one simply isn’t practical.

In the recent case I had, I worked for several hours to try and get the VBScript listed above to deploy and run successfully as a ConfigMgr package. I did many different variations including setting permissions, specifying the starting folder, and leveraging both versions of cscript. While I could get the script to install properly and while it would run successfully when I invoked it manually, I could never get it to run automatically regardless of the variations I tried.

PowerShell to the Rescue

After many frustrating hours, I decided there must be a better way. The VBScript itself was not hard. All it was doing was creating an instance of the UpdatesStore COM class on the managed client and then invoking the RefreshServerComplianceState method. Surely this could be done with PowerShell.

Rather than try to re-invent the wheel I did a quick search on the Internet and came up with a two-line PowerShell equivalent that claimed to do what the VBScript does (and it turns out, it did!). The article where I located the code can be found here. The author’s refresh script is two lines long, and there is also a 1-line version listed in the reply section. Both work, but I stuck with the 2-line version for ease of understanding.

Additionally, in order to provide a way for clients to see that the script ran successfully, I added a reporting piece to create an event in the Application log of each managed device. The full code is below:

$SCCMUpdatesStore = New-Object -ComObject Microsoft.CCM.UpdatesStore

$SCCMUpdatesStore.RefreshServerComplianceState()

New-EventLog -LogName Application -Source SyncStateScript -ErrorAction SilentlyContinue

Write-EventLog -LogName Application -Source SyncStateScript -EventId 555 -EntryType Information -Message "Sync State ran successfully"

Note that the first two lines are taken directly from the blog referenced immediately above. Line 3 creates the event source that is used when the events are written. The -ErrorAction SilentlyContinue is there because New-EventLog throws an error if the source has already been created. Adding -ErrorAction gives the following behavior:

- Attempt to add the source SyncStateScript to the managed device

- If the source does not exist yet, it is created

- If it has been added already, suppress the error and continue with the script

The lines starting with New-EventLog and Write-EventLog are not strictly necessary as ConfigMgr contains log files that will tell if the state messages have been re-sent. But for those who prefer to see Event Log entries showing if and when the script ran successfully, this is one way to achieve that goal.
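A slightly more defensive variant of the script can be sketched as follows. This is an illustration under stated assumptions, not the script that was deployed above: the function name Invoke-ComplianceRefresh and the failure event ID 556 are hypothetical choices. Instead of suppressing the error, it checks for the event source explicitly, and it writes an Error event if the COM call fails.

```powershell
# Sketch only: the function name and the failure event ID (556) are illustrative choices.
function Invoke-ComplianceRefresh {
    param(
        [string]$Source = 'SyncStateScript'
    )

    # Create the event source only if it does not exist yet.
    # Both the check and the creation need administrative rights.
    if (-not [System.Diagnostics.EventLog]::SourceExists($Source)) {
        New-EventLog -LogName Application -Source $Source
    }

    try {
        # Same two steps as the original script: create the COM object, then request a resend.
        $store = New-Object -ComObject Microsoft.CCM.UpdatesStore -ErrorAction Stop
        $store.RefreshServerComplianceState()

        Write-EventLog -LogName Application -Source $Source -EventId 555 `
            -EntryType Information -Message 'Sync State ran successfully'
    }
    catch {
        # Record the failure so it is visible in the Application log as well.
        Write-EventLog -LogName Application -Source $Source -EventId 556 `
            -EntryType Error -Message "Sync State failed: $($_.Exception.Message)"
    }
}
```

The block only defines the function; adding a line that calls Invoke-ComplianceRefresh at the end of the .ps1 file is what makes it run when the script is deployed.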

Verifying the Script

One thing I needed to confirm was whether the state messages were actually being resent when the script ran. Sure, the Event Logs were being updated, but how did I know for sure the state messages were also being sent? I had a pretty good idea that no errors were being thrown (nothing in the first two lines of the script suppresses errors, and I had also tested running it before adding the EventLog portion). But could I confirm that the state messages were actually sent? The answer is yes.

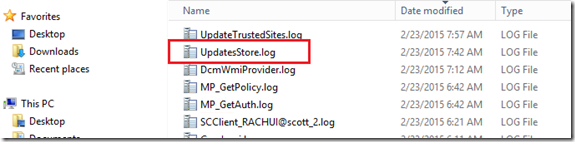

As an administrator in ConfigMgr, it is essential to become familiar with the way logs are created to report important information. For our present case, the log file we want is found under CCM\Logs (or SMS_CCM\Logs if you’re looking on a client machine that is also a Management Point). In my lab, the folder of interest is C:\Program Files\SMS_CCM\Logs. Within this folder, the log file to find is UpdatesStore.log as shown below:

Opening the log file after running the script, we should see the following:

Initiated refresh of update compliance to site server. UpdatesStore 2/23/2015 6:03:10 AM 7332 (0x1CA4)

Successfully called refresh of update compliance from automation object. UpdatesStore 2/23/2015 6:03:10 AM 13484 (0x34AC)

Message received to resend all update status, processing... UpdatesStore 2/23/2015 6:03:10 AM 14184 (0x3768)

Successfully raised Resync state message. UpdatesStore 2/23/2015 6:03:11 AM 14184 (0x3768)

Resend status completed successfully. UpdatesStore 2/23/2015 6:03:11 AM 14184 (0x3768)

If these entries appear with timestamps matching the time the script ran, we can say confidently that it has done what we asked.
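When many clients need checking, the same lookup can be scripted. The following is a minimal sketch: the function name Test-ResyncLogged is hypothetical, and it only looks for the final "Resend status completed successfully" text anywhere in the file, without comparing timestamps against the time the script ran.

```powershell
# Sketch only: Test-ResyncLogged is a hypothetical helper name.
function Test-ResyncLogged {
    param(
        [Parameter(Mandatory = $true)]
        [string]$Path
    )

    # A missing log file simply counts as "not logged".
    if (-not (Test-Path -Path $Path)) {
        return $false
    }

    # -SimpleMatch treats the pattern as literal text rather than a regular expression.
    $found = Select-String -Path $Path -SimpleMatch `
        -Pattern 'Resend status completed successfully' -Quiet

    return [bool]$found
}
```

Called with the log path from the lab above, for example `Test-ResyncLogged -Path 'C:\Program Files\SMS_CCM\Logs\UpdatesStore.log'`, it returns True when the line is present. Adjust the path to wherever the client logs live in your environment.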

From here, the only thing left to do is verify the Compliance report is now reporting correct information, which can be easily done by re-running the report.

Creating the Package

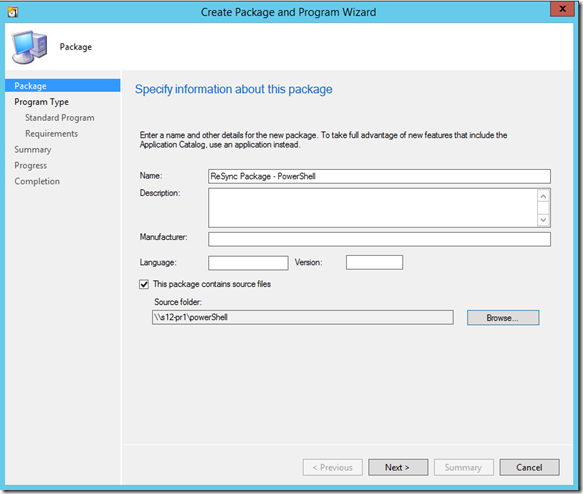

This part is something I have had difficulty locating online, so I thought I’d provide a step-by-step of how I configured the package in my own lab. Note that I am creating a package and not an application. To do this, navigate in the ConfigMgr admin console to Software Library\Application Management\Packages and right-click the Packages node. Select Create Package and enter the following information (note that I am not entering any optional information, though you will likely want to do this when working in your production environment).

On the initial page, I specified the following:

NOTE: In order to use the PowerShell script I had to do the following prior to creating the package:

- Create the script itself (copying the code above and saving as a .ps1 file is sufficient)

- Create a share (in my case \\s12-pr1\PowerShell)

- Set the share with appropriate permissions (in my case I granted the Everyone group ‘Read’ permission)

It is important to use the network location when choosing the source folder. This way, remote devices can access the folder successfully.
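On Windows Server 2012 or later, the share can also be created from PowerShell. This is a sketch under assumptions: C:\PowerShell is a hypothetical local folder on the server hosting the share, while the share name and the Read permission for Everyone mirror the steps listed above.

```powershell
# Assumption: C:\PowerShell is a hypothetical local folder that will hold the .ps1 file.
New-Item -Path 'C:\PowerShell' -ItemType Directory -Force | Out-Null

# Publish the folder as \\<server>\PowerShell with Read access for the Everyone group.
New-SmbShare -Name 'PowerShell' -Path 'C:\PowerShell' -ReadAccess 'Everyone'
```

Share permissions and NTFS permissions are evaluated together, so the folder's NTFS permissions also need to allow read access.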

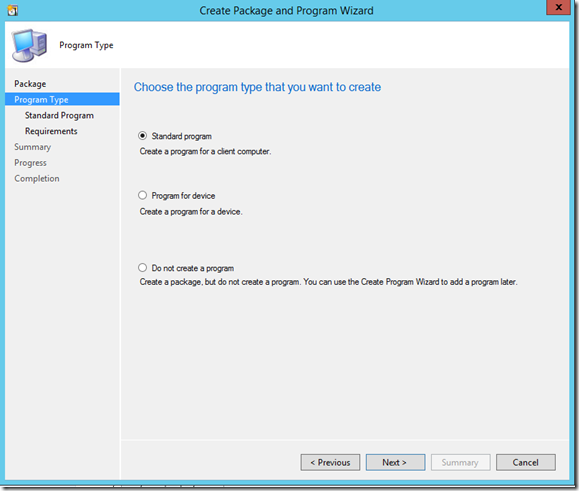

On the next page, select ‘Standard Program’ (assuming we are using a client computer and not a device such as a Windows phone):

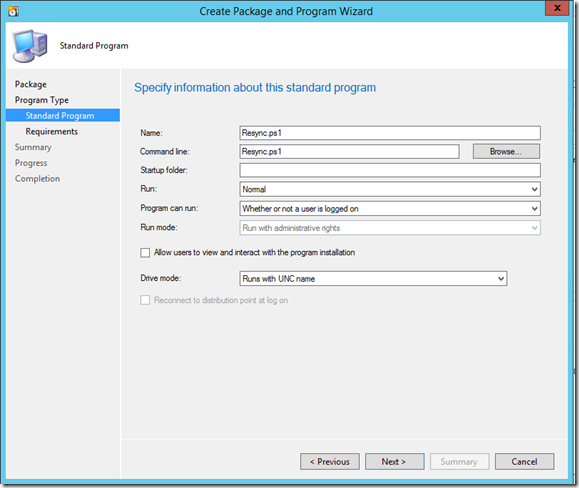

After selecting the Standard Program, we need to enter information on the script and how we want to run it. The following screen provides that detail:

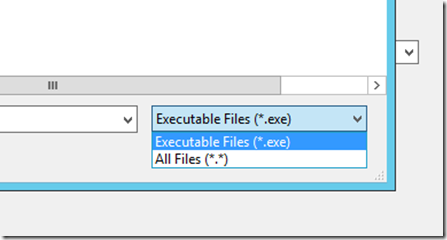

Note that I have set the program to run whether the user is logged on or not. This automatically sets the Run mode to “Run with administrative rights”. To set the command line, all I did was browse to the folder which opens automatically (because I specified it in the previous screen when I set the network share) and select the Resync.ps1 script. If you don’t see the script when you open the folder, look in the lower left-hand corner of the window and make sure “All Files (*.*)” is selected:

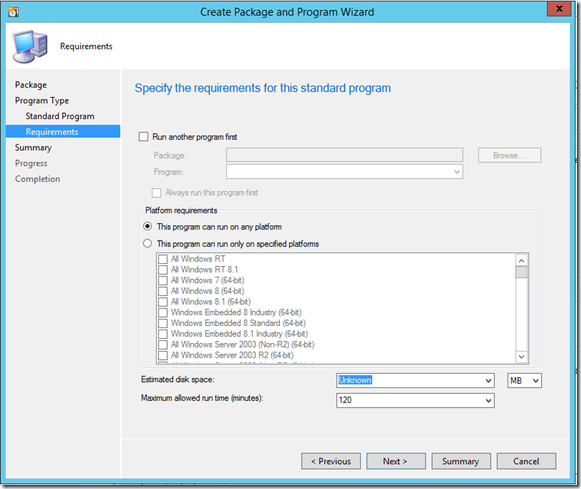

On the next page, choose whether to run another program first, and also which platforms can run the program. In my lab I left this blank as I did not want to run any other programs first and I was okay with the script running on any platform.

From here, confirm the settings on the next page and close the wizard.

The wizard will run and create both the package and the program. From here, you’ll want to distribute the package to your desired distribution points and then deploy the program.

Deploying the Program

This is the final step of deploying the PowerShell script, but it’s essential. First, you’ll right-click either the package or the program and select ‘Deploy’. Then you’ll do the following:

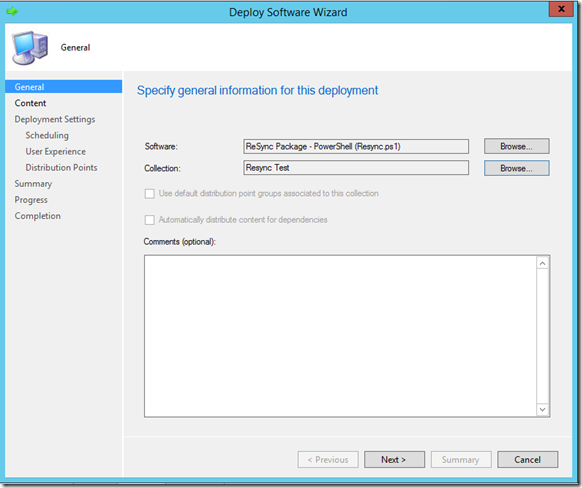

On the first page of the deployment wizard, select the Collection to which this program will be deployed. As can be seen below, I have created a collection in my lab called Resync Test and added my test devices to it.

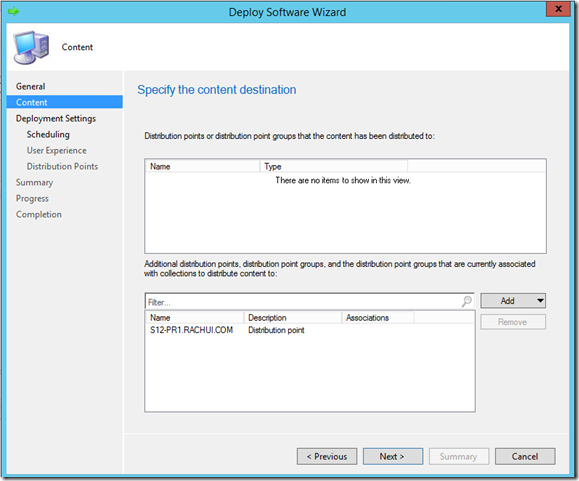

From here, ensure that the desired distribution points (or distribution point groups) are selected and select Next:

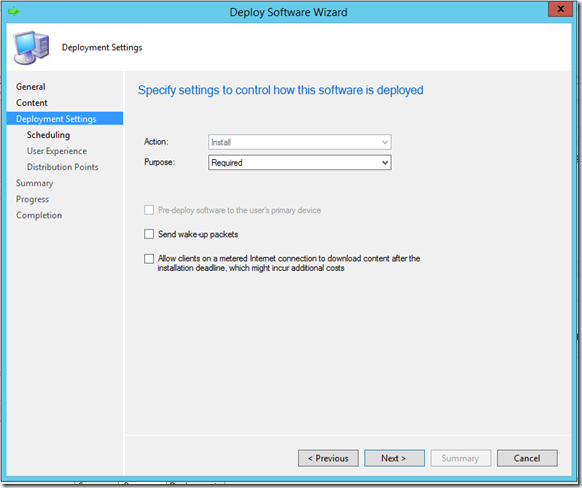

On the next screen, I selected ‘Required’ (I did not want my users to determine whether the script should run or not) and left the defaults as shown:

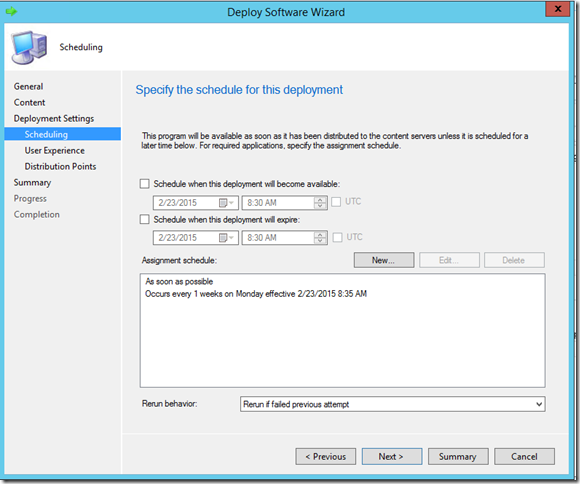

The next page is where I specified the script to run on a schedule. Because I don’t want to flood my environment with too many state messages, I set this to run immediately when installed and then after that on a weekly basis. I also specified that the script should re-run only if the previous attempt failed:

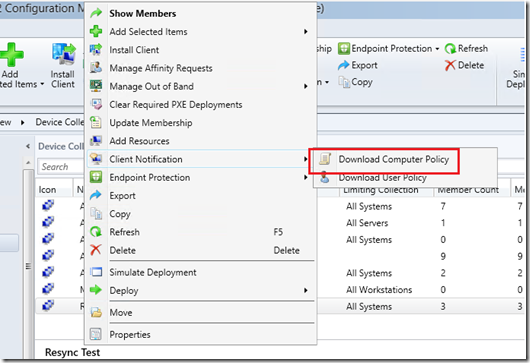

I left everything else at the defaults and then refreshed the Machine Policy for my test clients. Because this is ConfigMgr 2012 R2, I was able to go to my collection under Assets and Compliance in the admin console, right-click and select Client Notification\Download Computer Policy as shown below. This sends a notification via the “Fast Channel Notification” process to managed devices in the collection instructing them to refresh their machine policy as soon as possible.
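When testing on a single client, the policy request can also be triggered locally instead of from the console. A minimal sketch, assuming it is run in an elevated PowerShell session on a machine with the ConfigMgr client installed; the GUID is the client schedule ID for the machine policy retrieval request.

```powershell
# Ask the local ConfigMgr client to request its machine policy now.
Invoke-WmiMethod -Namespace 'root\ccm' -Class 'SMS_Client' -Name 'TriggerSchedule' `
    -ArgumentList '{00000000-0000-0000-0000-000000000021}'
```

This affects only the machine it runs on, so it suits a handful of test clients. For a whole collection, the Client Notification option in the console is the better fit.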

From there it was a matter of monitoring my test clients to see that the script ran. Because I had enhanced the script, I was able to watch the Event Logs as well as the UpdatesStore.log file to ensure the script ran successfully.
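The Event Log side of that check can be done from PowerShell as well. A short sketch, assuming the source name SyncStateScript and event ID 555 used in the script above:

```powershell
# Look at the five most recent entries from the script's event source
# and keep only the success events (ID 555).
Get-EventLog -LogName Application -Source 'SyncStateScript' -Newest 5 |
    Where-Object { $_.EventID -eq 555 } |
    Select-Object TimeGenerated, EntryType, Message
```

If the script has not yet run on the client, Get-EventLog reports that no matching entries were found. Get-EventLog also accepts a -ComputerName parameter for querying a remote machine.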

Summary

While the process of running a compliance script to clear up reporting discrepancies has been known for some time, there has been very little documentation on doing this with PowerShell or using ConfigMgr packages to deploy and run the script. My goal in this blog has been to enhance the existing information with this extra detail for those seeking further information.

Because there can be a discrepancy in reporting from time to time, it is not a bad idea to deploy a script such as the one in this blog to all of your clients and have them refresh their state messages on a regular basis. I chose once a week for my lab, but you’ll want to configure settings that make sense for your own environment. And as always, you should thoroughly test this solution before ever running it in production. What works in my lab may not work as easily in your own, and testing is the only way to avoid preventable issues when pushing scripts like this into production.