Easily multithread PowerShell commands and scripts

Update: I have recreated the same functionality in C# using Tasks. The PowerShell binary module has some added features compared with the PS version below.

Download the PowerShell binary module from the GitHub repo.

If you require the PS1 version of the script, download it here.

invokeallV2.5.renametoPS1

Rename the file to .ps1 to use it in a PowerShell window.

I want to share this script, which I wrote for a customer a few months back. This one is special because it is my first script without any Exchange commands in it :-).

Many everyday support and administration tasks take a long time to complete. Some common ones are:

- Collecting asset and configuration information from all workstations

- Collecting mailbox statistics or other details for thousands of objects, etc.

I have seen commands and scripts that were run against a large number of objects and took more than 24 hours to complete. Scripting is all about reducing the time spent on mundane tasks, but sometimes a script alone is not enough: the commands take so long simply because they process too many objects sequentially. That is where multithreading in PowerShell comes in. Background runspaces fit the job better than PowerShell jobs, because they are faster and more lightweight when many tasks have to run in parallel.
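To make the runspace idea concrete, here is a minimal sketch of running a script block over several inputs through a runspace pool. It is not the Invoke-All implementation; the input list, the pool size of 3 and the `$work` script block are placeholders chosen for illustration.

```powershell
# Minimal runspace-pool sketch; not the Invoke-All implementation.
$items = 1..5                      # placeholder input objects
$work  = { param($n) $n * 2 }      # placeholder per-item work

# A pool of 1 to 3 runspaces shared by all queued pipelines.
$pool = [runspacefactory]::CreateRunspacePool(1, 3)
$pool.Open()

# Queue one PowerShell pipeline per input object and start it asynchronously.
$jobs = foreach ($item in $items) {
    $ps = [powershell]::Create()
    $ps.RunspacePool = $pool
    [void]$ps.AddScript($work.ToString()).AddArgument($item)
    [pscustomobject]@{ Shell = $ps; Handle = $ps.BeginInvoke() }
}

# Collect the results in submission order and release each pipeline.
$results = foreach ($job in $jobs) {
    $job.Shell.EndInvoke($job.Handle)
    $job.Shell.Dispose()
}

$pool.Close()
$pool.Dispose()
$results
```

Unlike `Start-Job`, which starts a separate process per job, every pipeline here runs on a thread inside the current process, which is why runspaces are the lighter option.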

I started integrating runspaces into my scripts to achieve multithreading, but every script has different requirements, and coding this each time is time-consuming. To overcome this, I decided to create a generic function that can be used with any command or script with very little modification to the actual command. Not every administrator who uses PowerShell for daily administrative tasks and reporting is well versed in scripting. With all of that in mind, I created the Invoke-All function and made it as generic, easy to use and lightweight as possible.

The script can be downloaded from https://aka.ms/invokeall

Usage example:

#Actual command:

[powershell]

Get-Mailbox -database 'db1' | foreach{ Get-MailboxFolderStatistics -Identity $_.name -FolderScope "inbox" -IncludeOldestAndNewestItems } | Where-Object{$_.ItemsInFolder -gt 0} | Select Identity, Itemsinfolder, Foldersize, NewestItemReceivedDate

[/powershell]

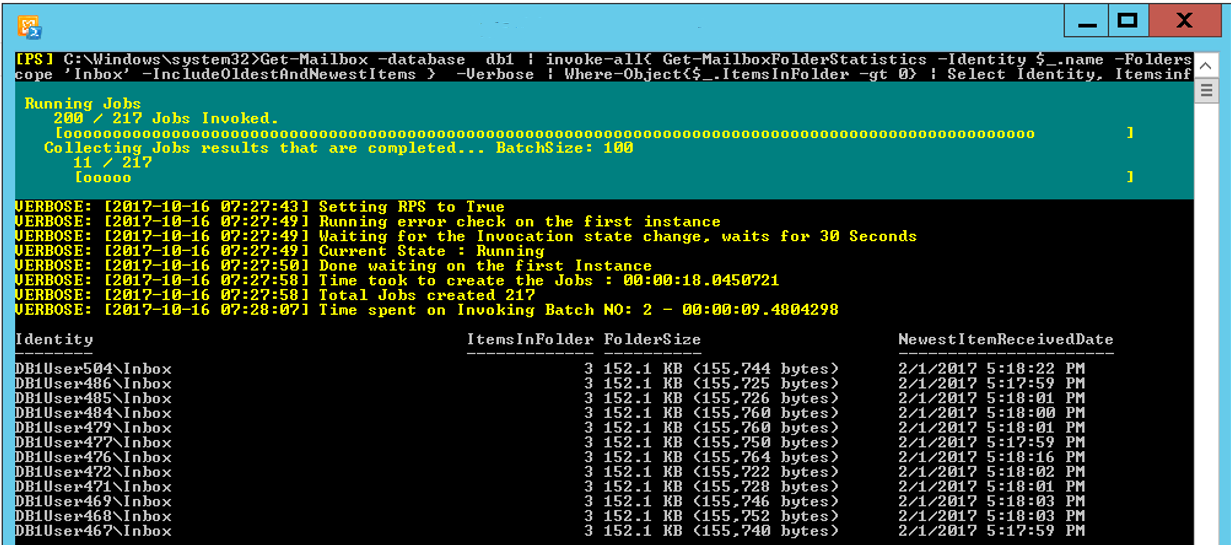

The same command with Invoke-All:

[powershell]

Get-Mailbox -database 'db1' | Invoke-All{ Get-MailboxFolderStatistics -Identity $_.name -FolderScope "inbox" -IncludeOldestAndNewestItems } | Where-Object{$_.ItemsInFolder -gt 0} | Select Identity, Itemsinfolder, Foldersize, NewestItemReceivedDate

[/powershell]

As you can see, it is that easy to use. Imagine running this against hundreds of databases: in that case Invoke-All can be about 10x faster than the conventional way.

Note: The script block is the first parameter of the Invoke-All function, and it should contain only the first command that needs to be executed, along with its parameters. Any filtering done with another command (such as Where-Object) must stay outside the script block, as in the example above.

Features:

- Easy to use.

- When used with cmdlets or remote PowerShell, it automatically detects the required modules and loads only those into the sessions.

- Checks if it can complete the first job without errors before scheduling all the jobs to run in parallel.

- By default, the script processes the jobs in batches. With batching, resource consumption stays low and is proportional to the batch size, which can be adjusted as required.

- Ability to pause job scheduling between batches. This is very useful if too many requests are overloading the destination.

- Creates a runtime log by default.
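The first-job check, batching and pause features listed above can be sketched roughly as follows. This is an illustration under stated assumptions, not the script's actual code: the function name `Invoke-InBatches` and its parameters `-BatchSize` and `-PauseMilliseconds` are hypothetical and are not Invoke-All's parameters.

```powershell
# Hypothetical helper; the name and parameters are illustrative only.
function Invoke-InBatches {
    param(
        [object[]]    $InputObject,
        [scriptblock] $ScriptBlock,
        [int]         $BatchSize = 3,
        [int]         $PauseMilliseconds = 0
    )

    # Run the first item alone; a terminating error here stops everything
    # before any parallel work is scheduled.
    & $ScriptBlock $InputObject[0]

    $pool = [runspacefactory]::CreateRunspacePool(1, $BatchSize)
    $pool.Open()
    try {
        for ($i = 1; $i -lt $InputObject.Count; $i += $BatchSize) {
            $last  = [Math]::Min($i + $BatchSize, $InputObject.Count) - 1
            $batch = $InputObject[$i..$last]

            # Start every item of the batch, then wait for all of them.
            $jobs = foreach ($item in $batch) {
                $ps = [powershell]::Create()
                $ps.RunspacePool = $pool
                [void]$ps.AddScript($ScriptBlock.ToString()).AddArgument($item)
                [pscustomobject]@{ Shell = $ps; Handle = $ps.BeginInvoke() }
            }
            foreach ($job in $jobs) {
                $job.Shell.EndInvoke($job.Handle)
                $job.Shell.Dispose()
            }

            # Optional pause so the destination is not overloaded.
            if ($PauseMilliseconds -gt 0) {
                Start-Sleep -Milliseconds $PauseMilliseconds
            }
        }
    }
    finally {
        $pool.Close()
        $pool.Dispose()
    }
}

$out = Invoke-InBatches -InputObject (1..7) -ScriptBlock { param($n) $n * 10 } -BatchSize 3
$out
```

Because only one batch is in flight at a time, the number of live pipelines never exceeds the batch size, which is what keeps memory and connection usage proportional to it.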

Invoke-All is an exported wrapper function that takes input from the pipeline or from an explicit parameter and processes the command concurrently using runspaces. I mainly focused on Exchange on-premises (and some Exchange Online remote PowerShell (RPS) commands) while developing the script. Although I have only tested it with Exchange on-premises remote sessions and snap-ins, it can be used with any PowerShell cmdlet or function.

I haven’t tested it with any Set-* commands, but it is possible to use this script to modify objects in parallel. Choose the command carefully so that multiple threads do not contend for the same objects.

Exchange remote PowerShell uses implicit remoting, and the proxy functions used by the remote session do not accept values from the pipeline. Therefore, when using such commands, the script requires you to declare all the parameters that you want to use in the command. See the examples on how to do it.
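The point about declaring parameters can be illustrated without an Exchange session. In the sketch below, `Get-DemoStatistics` is a made-up stand-in for a proxy function whose parameter does not bind from the pipeline, so the value is passed by naming the parameter and reading it from `$_`, the same shape as `-Identity $_.name` in the usage example earlier in this post.

```powershell
# Stand-in for an implicit-remoting proxy function (hypothetical name).
# Its parameter is NOT declared with ValueFromPipeline, so piped objects
# never bind to it automatically.
function Get-DemoStatistics {
    param([string]$Identity)
    "Statistics for '$Identity'"
}

# Placeholder input objects with a Name property.
$mailboxes = [pscustomobject]@{ Name = 'alice' }, [pscustomobject]@{ Name = 'bob' }

# Name the parameter explicitly and feed it from the current pipeline object.
$explicit = $mailboxes | ForEach-Object { Get-DemoStatistics -Identity $_.Name }
$explicit
```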

It can handle cmdlets and functions from any module, and I have updated the script to handle external scripts as well. I plan to add handling for applications in the next version, but you can already achieve this in the current version with a simple script that executes your EXE or application, as shown in the examples.
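A wrapper for an executable could look roughly like the sketch below. The function name `Invoke-DemoExe` and its parameters are hypothetical, and the `hostname` call is only a harmless stand-in for your own application; how such a wrapper is handed to Invoke-All is covered by the examples in the script itself and is not shown here.

```powershell
# Hypothetical wrapper; the body could equally be saved as a .ps1 script.
function Invoke-DemoExe {
    param(
        [string]   $Path,          # executable to run
        [string[]] $ArgumentList   # arguments passed to the executable
    )

    # Run the executable and capture stdout and stderr together.
    $output = & $Path @ArgumentList 2>&1

    # Return one object per run so results are easy to filter afterwards.
    [pscustomobject]@{
        Path     = $Path
        ExitCode = $LASTEXITCODE
        Output   = ($output | Out-String).Trim()
    }
}

# Example run; 'hostname' is just a placeholder executable.
$run = Invoke-DemoExe -Path 'hostname'
$run
```

Returning an object with the exit code alongside the text output means failed runs can be picked out with Where-Object after all the parallel work has finished.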

Please refer to the comments in the script to learn more about the technique it uses, or leave a comment here. I hope you find it useful for saving time on your long-running PowerShell commands.