Microsoft Infrastructure as a Service Foundations-Multi-Tenant Designs

As part of the Microsoft IaaS Foundations series, this document provides an overview of one of the Microsoft Infrastructure as a Service design patterns that you can use to drive your own design exercises. These patterns are field tested and represent the results of Microsoft Consulting Services experiences in the field. This article discusses multi-tenant design patterns.

Table of Contents (for this article):

1.2 Infrastructure Requirements

1.3 Multi-Tenant Storage Considerations

1.4 Multi-Tenant Network Considerations

1.5 Multi-Tenant Compute Considerations

This document is part of the Microsoft Infrastructure as a Service Foundations series. The series includes the following documents:

Chapter 1: Microsoft Infrastructure as a Service Foundations

Chapter 2: Microsoft Infrastructure as a Service Compute Foundations

Chapter 3: Microsoft Infrastructure as a Service Network Foundations

Chapter 4: Microsoft Infrastructure as a Service Storage Foundations

Chapter 5: Microsoft Infrastructure as a Service Virtualization Platform Foundations

Chapter 6: Microsoft Infrastructure as a Service Design Patterns–Overview

Chapter 7: Microsoft Infrastructure as a Service Foundations—Converged Architecture Pattern

Chapter 8: Microsoft Infrastructure as a Service Foundations-Software Defined Architecture Pattern

Chapter 9: Microsoft Infrastructure as a Service Foundations-Multi-Tenant Designs (this article)

For more information about the Microsoft Infrastructure as a Service Foundations series, please see Chapter 1: Microsoft Infrastructure as a Service Foundations.

Contributors:

Adam Fazio – Microsoft

David Ziembicki – Microsoft

Joel Yoker – Microsoft

Artem Pronichkin – Microsoft

Jeff Baker – Microsoft

Michael Lubanski – Microsoft

Robert Larson – Microsoft

Steve Chadly – Microsoft

Alex Lee – Microsoft

Yuri Diogenes – Microsoft

Carlos Mayol Berral – Microsoft

Ricardo Machado – Microsoft

Sacha Narinx – Microsoft

Tom Shinder – Microsoft

Jim Dial – Microsoft

Applies to:

Windows Server 2012 R2

System Center 2012 R2

Windows Azure Pack – October 2014 feature set

Microsoft Azure – October 2014 feature set

Document Version:

1.0

The goal of the Infrastructure-as-a-Service (IaaS) Foundations series is to help enterprise IT and cloud service providers understand, develop, and implement IaaS infrastructures. This series provides comprehensive conceptual background, a reference architecture and a reference implementation that combines Microsoft software, consolidated guidance, and validated configurations with partner technologies such as compute, network, and storage architectures, in addition to value-added software features.

The IaaS Foundations Series utilizes the core capabilities of the Windows Server operating system, Hyper-V, System Center, Windows Azure Pack and Microsoft Azure to deliver on-premises and hybrid cloud Infrastructure as a Service offerings.

As part of the Microsoft IaaS Foundations series, this document provides an overview of Microsoft Infrastructure as a Service design patterns that you can use to drive your own design exercises. These patterns are field tested and represent the results of Microsoft Consulting Services experiences in the field. The patterns discussed in this article apply to multi-tenant designs and include security and isolation considerations.

1 Multi-Tenant Designs

In many private cloud scenarios, and nearly all hosting scenarios, a multi-tenant infrastructure is required. This section illustrates how a multi-tenant fabric infrastructure can be created by using Windows Server 2012 R2 and the technologies described in this Fabric Architecture Guide.

In general, multi-tenancy implies multiple non-related consumers or customers of a set of services. Within a single organization, this could be multiple business units with resources and data that must remain separate for legal or compliance reasons. Most hosting companies require multi-tenancy as a core attribute of their business model. This might include a dedicated physical infrastructure for each hosted customer or logical segmentation of a shared infrastructure by using software-defined technologies.

1.1 Requirements Gathering

The design of a multi-tenant fabric begins with a careful analysis of the business requirements, which will drive the design. In many cases, legal or compliance requirements drive the design approach, which means that a team of several disciplines (for example, business, technical, and legal) should participate in the requirements gathering phase. If specific legal or compliance regimes are required, a plan to ensure compliance and ongoing auditing (internal or non-Microsoft) should be implemented.

To organize the requirements gathering process, an “outside in” approach can be helpful. For hosted services, the end customer or consumer is outside of the hosting organization. Requirements gathering can begin by taking on the persona of the consumer and determining how the consumer will become aware of and be able to request access to hosted services.

Then consider multiple consumers, and ask the following questions:

- Will consumers use accounts that the host creates or accounts that they use internally to access services?

- Is one consumer allowed to be aware of other consumers’ identities, or is a separation required?

Moving further into the “outside in” process, determine whether legal or compliance concerns require dedicated resources for each consumer:

- Can multiple consumers share a physical infrastructure?

- Can traffic from multiple consumers share a common network?

- Can software-defined isolation meet the requirements?

- How far into the infrastructure must authentication, authorization, and accounting be maintained for each consumer (for example, only at the database level, or including the disks and LUNs that are used by the consumer in the infrastructure)?

The following list provides a sample of the types of design and segmentation options that might be considered as part of a multi-tenant infrastructure:

- Physical separation by customer (dedicated hosts, network, and storage)

- Logical separation by customer (shared physical infrastructure with logical segmentation)

- Data separation (such as dedicated databases and LUNs)

- Network separation (VLANs or private VLANs)

- Performance separation by customer (shared infrastructure but guaranteed capacity or QoS)

The remainder of this section describes multi-tenancy options at the fabric level and how those technologies can be combined to enable a multi-tenant fabric.

1.2 Infrastructure Requirements

The above requirements gathering process should result in a clear direction and set of mandatory attributes that the fabric architecture must contain. The first key decision is whether a shared storage infrastructure or dedicated storage per tenant is required. For a host, driving toward as much shared infrastructure as possible is typically a business imperative, but there can be cases where it is prohibited.

As mentioned in the previous storage sections in this Fabric Architecture Guide, Windows Server 2012 R2 supports a range of traditional storage technologies such as JBOD, iSCSI and Fibre Channel SANs, and converged technologies such as FCoE. In addition, the new capabilities of Storage Spaces, Cluster Shared Volumes, Storage Spaces tiering, and Scale-Out File Server clusters present a potentially lower-cost solution for advanced storage infrastructures.

The shared versus dedicated storage infrastructure requirement drives a significant portion of the design process. If dedicated storage infrastructures per tenant are required, appropriate sizing and minimization of cost are paramount. It can be difficult to scale down traditional SAN approaches to a large number of small- or medium-sized tenants. In this case, the Scale-Out File Server cluster and Storage Spaces approach, which uses shared SAS JBOD, can scale down cost-effectively to a pair of file servers and a single SAS tray.

Figure 1 Scale out file server and storage spaces

On the other end of the spectrum, if shared but logically segmented storage is an option, nearly all storage options become potentially relevant. Traditional Fibre Channel and iSCSI SANs have evolved to provide a range of capabilities to support multi-tenant environments through technologies such as zoning, masking, and virtual SANs. With the scalability enhancements in the Windows Server 2012 R2 storage stack, large-scale shared storage infrastructures that use the Scale-Out File Server cluster and Storage Spaces can also be a cost-effective choice.

Although previous sections discussed architecture and scalability, this section highlights technologies for storage security and isolation in multi-tenant environments.

1.3 Multi-Tenant Storage Considerations

1.3.1 SMB 3.0

The Server Message Block (SMB) protocol is a network file sharing protocol that allows applications on a computer to read and write to files and to request services from server programs in a computer network. The SMB protocol can be used on top of TCP/IP or other network protocols.

By using the SMB protocol, an application (or the user of an application) can access files or other resources on a remote server. This allows users to read, create, and update files on the remote server. The application can also communicate with any server program that is set up to receive an SMB client request.

Windows Server 2012 R2 provides the following ways to use the SMB 3.0 protocol:

- File storage for virtualization (Hyper-V over SMB): Hyper-V can store virtual machine files (such as configuration files, virtual hard disk (VHD) files, and snapshots) in file shares over the SMB 3.0 protocol. This can be used for stand-alone file servers and for clustered file servers that use Hyper-V with shared file storage for the cluster.

- SQL Server over SMB: SQL Server can store user database files on SMB file shares. Currently, this is supported with SQL Server 2008 R2 for stand-alone servers.

- Traditional storage for end-user data: The SMB 3.0 protocol provides enhancements to the information worker (client) workloads. These enhancements include reducing the application latencies that are experienced by branch office users when they access data over wide area networks (WANs), and protecting data from eavesdropping attacks.

1.3.1.1 SMB Encryption

A security concern for data that traverses untrusted networks is that it is prone to eavesdropping attacks. Existing solutions for this issue typically use IPsec, WAN accelerators, or other dedicated hardware solutions. However, these solutions are expensive to set up and maintain.

Windows Server 2012 R2 includes encryption that is built in to the SMB protocol. This allows end-to-end data protection from snooping attacks with no additional deployment costs. You have the flexibility to decide whether the entire server or only specific file shares should be enabled for encryption. SMB Encryption is also relevant to server application workloads if the application data is on a file server and it traverses untrusted networks. With this feature, data security is maintained while the data is on the wire.

1.3.1.2 Cluster Shared Volumes

By using Cluster Shared Volumes (CSV), you can unify storage access into a single namespace for ease of management. A common namespace folder that contains all CSVs in the failover cluster is created at the path C:\ClusterStorage\. All cluster nodes can access a CSV at the same time, regardless of the number of servers, the number of JBOD enclosures, or the number of provisioned virtual disks.

This unified namespace enables high availability workloads to transparently fail over to another server if a server failure occurs. It also enables you to easily take a server offline for maintenance.

Clustered storage spaces can help protect against the following risks:

- Physical disk failures: When you deploy a clustered storage space, protection against physical disk failures is provided by creating storage spaces with the mirror resiliency type. Additionally, mirror spaces use “dirty region tracking” to track modifications to the disks in the pool. When the system resumes from a power fault or a hard reset event and the spaces are brought back online, dirty region tracking creates consistency among the disks in the pool.

- Data access failures: If you have redundancy at all levels, you can protect against failed components, such as a failed cable from the enclosure to the server, a failed SAS adapter, power faults, or failure of a JBOD enclosure. For example, in an enterprise deployment, you should have redundant SAS adapters, SAS I/O modules, and power supplies. To protect against a complete disk enclosure failure, you can use redundant JBOD enclosures.

- Data corruptions and volume unavailability: The NTFS file system and the Resilient File System (ReFS) help protect against corruption. For NTFS, improvements to the CHKDSK tool in Windows Server 2012 R2 can greatly improve availability. If you deploy highly available file servers, you can use ReFS to enable high levels of scalability and data integrity regardless of hardware or software failures.

- Server node failures: Through the Failover Clustering feature in Windows Server 2012 R2, you can provide high availability for the underlying storage and workloads. This helps protect against server failure and enables you to take a server offline for maintenance without service interruption.

The following are some of the technologies in Windows Server 2012 R2 that can enable multi-tenant architectures.

- File storage for virtualization (Hyper-V over SMB): Hyper-V can store virtual machine files (such as configuration files, virtual hard disk (VHD) files, and snapshots) in file shares over the SMB 3.0 protocol. This can be used for stand-alone file servers and for clustered file servers that use Hyper-V with shared file storage for the cluster.

- SQL Server over SMB: SQL Server can store user database files on SMB file shares. Currently, this is supported by SQL Server 2012 and SQL Server 2008 R2 for stand-alone servers.

- Storage visibility to only a subset of nodes: Windows Server 2012 R2 enables cluster deployments that contain application and data nodes, so storage can be limited to a subset of nodes.

- Integration with Storage Spaces: This technology allows virtualization of cluster storage on groups of inexpensive disks. The Storage Spaces feature in Windows Server 2012 R2 can integrate with CSV to permit scale-out access to data.

1.3.1.3 Security and Storage Access Control

A solution that uses file clusters, storage spaces, and SMB 3.0 in Windows Server 2012 R2 eases the management of large scale storage solutions because nearly all the setup and configuration is Windows based with associated Windows PowerShell support.

Note:

Storage can be made visible to only a subset of nodes in the file cluster. This can be used in some scenarios to leverage the cost and management advantage of larger shared clusters, and to segment those clusters for performance or access purposes.

Additionally, access control lists can be applied at various levels of the storage stack (for example, file shares, CSV, and storage spaces). In a multi-tenant scenario, this means that the full storage infrastructure can be shared and managed centrally and that dedicated and controlled access to segments of the storage infrastructure can be designed. A particular customer could have LUNs, storage pools, storage spaces, cluster shared volumes, and file shares dedicated to them, and access control lists can ensure that only that tenant has access to them.

Additionally, by using SMB Encryption, all access to the file-based storage can be encrypted to protect against tampering and eavesdropping attacks. The biggest benefit of using SMB Encryption over more general solutions (such as IPsec) is that there are no deployment requirements or costs beyond changing the SMB settings in the server. The encryption algorithm used is AES-CCM, which also provides data integrity validation.

1.4 Multi-Tenant Network Considerations

The network infrastructure is one of the most common and critical layers of the fabric where multi-tenant design is implemented. It is also an area of rapid innovation because the traditional methods of traffic segmentation, such as VLANs and port ACLs, are beginning to show their age, and they are unable to keep up with highly virtualized, large-scale hosting data centers and hybrid cloud scenarios.

The following sections describe the range of technologies that are provided in Windows Server 2012 R2 for building modern, secure, multi-tenant network infrastructures.

1.4.1 Hyper-V Network Virtualization

Hyper-V Network Virtualization provides the concept of a virtual network that is independent of the underlying physical network. With this concept of virtual networks, which are composed of one or more virtual subnets, the exact physical location of an IP subnet is decoupled from the virtual network topology.

As a result, customers can easily move their subnets to the cloud while preserving their existing IP addresses and topology in the cloud so that existing services continue to work unaware of the physical location of the subnets.

Hyper-V Network Virtualization provides policy-based, software-controlled network virtualization that reduces the management overhead that is faced by enterprises when they expand dedicated IaaS clouds, and it provides cloud hosts with better flexibility and scalability for managing virtual machines to achieve higher resource utilization.

An IaaS scenario that has multiple virtual machines from different organizational divisions (referred to as a dedicated cloud) or different customers (referred to as a hosted cloud) requires secure isolation. Virtual local area networks (VLANs) can present significant disadvantages in this scenario.

For more information:

Hyper-V Network Virtualization Overview

VLANs

Currently, VLANs are the mechanism that most organizations use to support address space reuse and tenant isolation. A VLAN uses explicit tagging (VLAN ID) in the Ethernet frame headers, and it relies on Ethernet switches to enforce isolation and restrict traffic to network nodes with the same VLAN ID. As described earlier, there are disadvantages with VLANs, which introduce challenges in large-scale, multi-tenant environments.

IP address assignment

In addition to the disadvantages that are presented by VLANs, virtual machine IP address assignment presents issues, which include:

- Physical locations in the data center network infrastructure determine virtual machine IP addresses. As a result, moving to the cloud typically requires rationalization and possibly sharing IP addresses across workloads and tenants.

- Policies are tied to IP addresses, such as firewall rules, resource discovery, and directory services. Changing IP addresses requires updating all the associated policies.

- Virtual machine deployment and traffic isolation are dependent on the network topology.

When data center network administrators plan the physical layout of the data center, they must make decisions about where subnets will be physically placed and routed. These decisions are based on IP and Ethernet technology that influence the potential IP addresses that are allowed for virtual machines running on a given server or a blade that is connected to a particular rack in the data center.

When a virtual machine is provisioned and placed in the data center, it must adhere to these choices and restrictions regarding the IP address. Therefore, the typical result is that the data center administrators assign new IP addresses to the virtual machines. The issue with this requirement is that an IP address is more than an address: it also carries semantic information.

Example:

One subnet might contain given services or be in a distinct physical location. Firewall rules, access control policies, and IPsec security associations are commonly associated with IP addresses. Changing IP addresses forces the virtual machine owners to adjust all their policies that were based on the original IP address. This renumbering overhead is so high that many enterprises choose to deploy only new services to the cloud, leaving legacy applications alone.

Hyper-V Network Virtualization decouples virtual networks for customer virtual machines from the physical network infrastructure. As a result, it enables customer virtual machines to maintain their original IP addresses, while allowing data center administrators to provision customer virtual machines anywhere in the data center without reconfiguring physical IP addresses or VLAN IDs.

Each virtual network adapter in Hyper-V Network Virtualization is associated with two IP addresses:

- Customer address: The IP address that is assigned by the customer, based on their intranet infrastructure. This address enables the customer to exchange network traffic with the virtual machine as if it had not been moved to a public or private cloud. The customer address is visible to the virtual machine and reachable by the customer.

- Provider address: The IP address that is assigned by the host or the data center administrators, based on their physical network infrastructure. The provider address appears in the packets on the network that are exchanged with the server running Hyper-V that is hosting the virtual machine. The provider address is visible on the physical network, but not to the virtual machine.

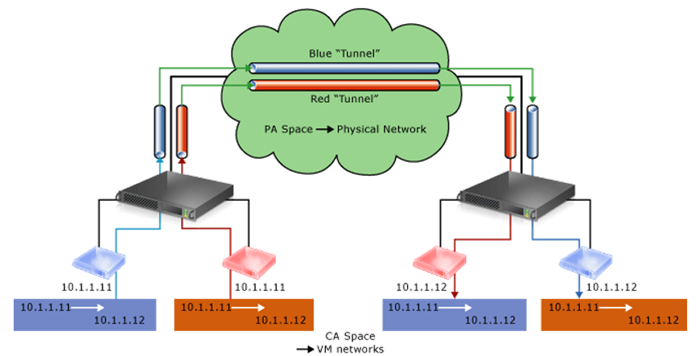

The customer addresses maintain the customer's network topology, which is virtualized and decoupled from the actual underlying physical network topology and addresses, as implemented by the provider addresses. Figure 2 shows the conceptual relationship between virtual machine customer addresses and network infrastructure provider addresses as a result of network virtualization.

Figure 2 HNV

Key aspects of network virtualization in this scenario include:

- Each customer address in the virtual machine is mapped to a physical host provider address.

- Virtual machines send data packets in the customer address spaces, which are put into an “envelope” with a provider address source and destination pair, based on the mapping.

- The customer address and provider address mappings must allow the hosts to differentiate packets for different customer virtual machines.

As a result, the network is virtualized by the network addresses that are used by the virtual machines.
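The mapping described above can be pictured as a lookup table. The following is a minimal sketch, not the Hyper-V implementation: the table layout, the `Envelope` type, the `to_envelope` function, and all addresses are hypothetical and exist only for illustration. It assumes the table is keyed on both the virtual subnet and the customer address, which is one way to let two tenants reuse the same customer address without ambiguity, and it only handles traffic within a single virtual subnet.

```python
from typing import Dict, NamedTuple, Optional, Tuple


class Envelope(NamedTuple):
    """Outer packet: provider addresses wrap the untouched customer packet."""
    src_pa: str
    dst_pa: str
    vsid: int
    inner: bytes


# Hypothetical policy table: (virtual subnet ID, customer address) -> provider address.
PolicyTable = Dict[Tuple[int, str], str]

POLICY: PolicyTable = {
    (5001, "10.0.0.5"): "192.168.1.10",  # tenant A virtual machine
    (5001, "10.0.0.7"): "192.168.1.11",  # tenant A virtual machine on another host
    (6001, "10.0.0.5"): "192.168.1.12",  # tenant B reuses the same customer address
}


def to_envelope(table: PolicyTable, vsid: int, src_ca: str, dst_ca: str,
                inner: bytes) -> Optional[Envelope]:
    """Wrap a customer packet in provider addresses, or return None if unmapped."""
    src_pa = table.get((vsid, src_ca))
    dst_pa = table.get((vsid, dst_ca))
    if src_pa is None or dst_pa is None:
        return None  # no policy entry: the packet cannot be placed on the wire
    return Envelope(src_pa, dst_pa, vsid, inner)
```

Because the key includes the virtual subnet, the same customer address 10.0.0.5 resolves to a different provider address for each tenant in this sketch.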

Hyper-V Network Virtualization supports the following modes to virtualize the IP address:

- Generic Routing Encapsulation: Network Virtualization using Generic Routing Encapsulation (NVGRE) carries the virtual subnet ID as part of the tunnel header. This mode is intended for the majority of data centers that deploy Hyper-V Network Virtualization. In NVGRE, the virtual machine’s packet is encapsulated inside another packet. The header of this new packet has the appropriate source and destination provider address (PA) IP addresses in addition to the virtual subnet ID, which is stored in the Key field of the GRE header.

- IP Rewrite: In this mode, the source and the destination customer address (CA) IP addresses are rewritten with the corresponding PA addresses as the packets leave the end host. Similarly, when virtual subnet packets enter the end host, the PA IP addresses are rewritten with the appropriate CA addresses before being delivered to the virtual machines. IP Rewrite is targeted at special scenarios where the virtual machine workloads require or consume very high bandwidth throughput (~10 Gbps) on existing hardware, and the customer cannot wait for NVGRE-aware hardware.
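To make the GRE Key field concrete, the following sketch packs and parses an 8-byte NVGRE-style GRE header. The article states only that the virtual subnet ID is stored in the Key field. The 24-bit VSID plus 8-bit flow ID split and the protocol type 0x6558 are taken from the published NVGRE specification (RFC 7637), not from this article, and the function names are hypothetical.

```python
import struct

GRE_KEY_PRESENT = 0x2000  # K bit set; checksum and sequence bits clear, version 0
ETHERTYPE_TEB = 0x6558    # Transparent Ethernet Bridging: payload is an Ethernet frame


def build_nvgre_header(vsid: int, flow_id: int = 0) -> bytes:
    """Pack a GRE header whose Key field carries the VSID (upper 24 bits)."""
    if not 0 <= vsid < 2**24:
        raise ValueError("VSID must fit in 24 bits")
    if not 0 <= flow_id < 2**8:
        raise ValueError("flow ID must fit in 8 bits")
    key = (vsid << 8) | flow_id
    # Network byte order: 16-bit flags/version, 16-bit protocol type, 32-bit key.
    return struct.pack("!HHI", GRE_KEY_PRESENT, ETHERTYPE_TEB, key)


def parse_vsid(header: bytes) -> int:
    """Recover the VSID from the Key field of a header built as above."""
    flags, proto, key = struct.unpack("!HHI", header[:8])
    if not flags & GRE_KEY_PRESENT or proto != ETHERTYPE_TEB:
        raise ValueError("not an NVGRE-style GRE header")
    return key >> 8
```

For example, `parse_vsid(build_nvgre_header(5001))` returns 5001. The outer IP header with the provider addresses is omitted here; it would precede these 8 bytes on the wire.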

1.4.2 Hyper-V Virtual Switch

The Hyper-V virtual switch is a software-based, layer-2 network switch that is available in Hyper-V Manager when you install the Hyper-V role. The switch includes programmatically managed and extensible capabilities to connect virtual machines to virtual networks and to the physical network. In addition, Hyper-V virtual switch provides policy enforcement for security, isolation, and service levels.

The Hyper-V virtual switch in Windows Server 2012 R2 introduces several features and enhanced capabilities for tenant isolation, traffic shaping, protection against malicious virtual machines, and simplified troubleshooting.

With built-in support for Network Device Interface Specification (NDIS) filter drivers and Windows Filtering Platform (WFP) callout drivers, the Hyper-V virtual switch enables independent software vendors (ISVs) to create extensible plug-ins (known as virtual switch extensions) that can provide enhanced networking and security capabilities. Virtual switch extensions that you add to the Hyper-V virtual switch are listed in the Virtual Switch Manager feature of Hyper-V Manager.

The capabilities provided in the Hyper-V virtual switch mean that organizations have more options to enforce tenant isolation, to shape and control network traffic, and to employ protective measures against malicious virtual machines.

Some of the principal features that are included in the Hyper-V virtual switch are:

- ARP and Neighbor Discovery spoofing protection: Protects against a malicious virtual machine that uses Address Resolution Protocol (ARP) spoofing to steal IP addresses from other virtual machines, and against Neighbor Discovery spoofing attacks that can be launched through IPv6. For more information, see Neighbor Discovery.

- DHCP guard protection: Protects against a malicious virtual machine representing itself as a Dynamic Host Configuration Protocol (DHCP) server for man-in-the-middle attacks.

- Port ACLs: Provides traffic filtering, based on Media Access Control (MAC) or Internet Protocol (IP) addresses and ranges, which enables you to set up virtual network isolation.

- Trunk mode to virtual machines: Enables administrators to set up a specific virtual machine as a virtual appliance, and then direct traffic from various VLANs to that virtual machine.

- Network traffic monitoring: Enables administrators to review traffic that is traversing the network switch.

- Isolated (private) VLAN: Enables administrators to segregate traffic on multiple VLANs, to more easily establish isolated tenant communities.

The features in this list can be combined to deliver a complete multi-tenant network design.

1.4.3 Example Network Design

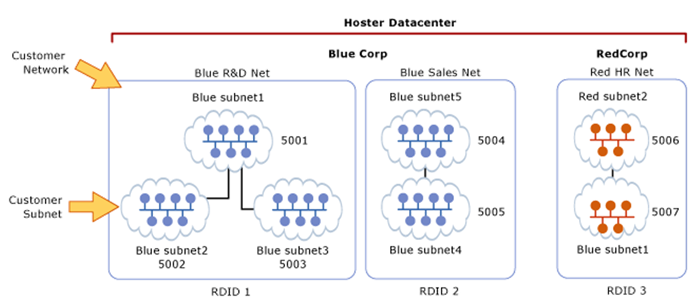

In Hyper-V Network Virtualization, a customer is defined as the owner of a group of virtual machines that are deployed in a data center. A customer can be a corporation or enterprise in a multi-tenant public data center, or a division or business unit within a private data center. Each customer can have one or more customer networks in the data center, and each customer network consists of one or more virtual subnets.

Customer network

- Each customer network consists of one or more virtual subnets. A customer network forms an isolation boundary where the virtual machines within a customer network can communicate with each other. As a result, virtual subnets in the same customer network must not use overlapping IP address prefixes.

- Each customer network has a routing domain, which identifies the customer network. The routing domain ID is assigned by data center administrators or data center management software, such as System Center Virtual Machine Manager (VMM). The routing domain ID has a GUID format, for example, “{11111111-2222-3333-4444-000000000000}”.

Virtual subnets

- A virtual subnet implements the Layer 3 IP subnet semantics for virtual machines in the same virtual subnet. The virtual subnet is a broadcast domain (similar to a VLAN). Virtual machines in the same virtual subnet must use the same IP prefix, although a single virtual subnet can accommodate an IPv4 and an IPv6 prefix simultaneously.

- Each virtual subnet belongs to a single customer network (with a routing domain ID), and it is assigned a unique virtual subnet ID (VSID). The VSID must be unique within the data center and may be in the range 4096 to 2^24-2.
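The two constraints above (the VSID range, and no overlapping prefixes within one customer network) are easy to check mechanically. The following is a small sketch using Python's standard `ipaddress` module. The function names and sample prefixes are hypothetical, and it assumes one prefix per virtual subnet for brevity, although a virtual subnet can carry an IPv4 and an IPv6 prefix at the same time.

```python
import ipaddress
from typing import Dict, List, Tuple

VSID_MIN = 4096
VSID_MAX = 2**24 - 2


def is_valid_vsid(vsid: int) -> bool:
    """Check that a VSID falls in the range stated in the text."""
    return VSID_MIN <= vsid <= VSID_MAX


def find_overlaps(subnets: Dict[int, str]) -> List[Tuple[int, int]]:
    """Return pairs of VSIDs in one customer network whose prefixes overlap.

    `subnets` maps VSID -> IP prefix (for example "10.1.1.0/24").
    """
    nets = sorted((vsid, ipaddress.ip_network(prefix)) for vsid, prefix in subnets.items())
    overlaps = []
    for i, (vsid_a, net_a) in enumerate(nets):
        for vsid_b, net_b in nets[i + 1:]:
            # Only compare prefixes of the same IP version.
            if net_a.version == net_b.version and net_a.overlaps(net_b):
                overlaps.append((vsid_a, vsid_b))
    return overlaps
```

A management tool could run a check like this before committing a new virtual subnet to a customer network; an empty result means the prefixes are disjoint.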

A key advantage of the customer network and routing domain is that it allows customers to bring their network topologies to the cloud. The following diagram shows an example where Blue Corp has two separate networks, the R&D Net and the Sales Net. Because these networks have different routing domain IDs, they cannot interact with each other. That is, Blue R&D Net is isolated from Blue Sales Net, even though both are owned by Blue Corp. Blue R&D Net contains three virtual subnets. Note that the routing domain ID and VSID are unique within a data center.

Figure 3 Virtual Subnets

In this example, the virtual machines with VSID 5001 can have their packets routed or forwarded by Hyper-V Network Virtualization to virtual machines with VSID 5002 or VSID 5003. Before delivering the packet to the virtual switch, Hyper-V Network Virtualization will update the VSID of the incoming packet to the VSID of the destination virtual machine. This will only happen if both VSIDs are in the same routing domain ID.

If the VSID that is associated with the packet does not match the VSID of the destination virtual machine, the packet will be dropped. Therefore, virtual network adapters with routing domain ID (RDID) 1 cannot send packets to virtual network adapters with RDID 2.

Each virtual subnet defines a Layer 3 IP subnet and a Layer 2 broadcast domain boundary similar to a VLAN. When a virtual machine broadcasts a packet, this broadcast is limited to the virtual machines that are attached to switch ports with the same VSID. Each VSID can be associated with a multicast address in the provider address. All broadcast traffic for a VSID is sent on this multicast address.

Note:

The VSID provides isolation. A virtual network adapter in Hyper-V Network Virtualization is connected to a Hyper-V switch port that has a VSID ACL. If a packet arrives on this Hyper-V virtual switch port with a different VSID, the packet is dropped. Packets will only be delivered on a Hyper-V virtual switch port if the VSID of the packet matches the VSID of the virtual switch port. This is the reason that packets flowing from VSID 5001 to 5003 must modify the VSID in the packet before delivery to the destination virtual machine.

If the Hyper-V virtual switch port does not have a VSID ACL, the virtual network adapter that is attached to that virtual switch port is not part of a Hyper-V Network Virtualization virtual subnet. Packets that are sent from a virtual network adapter that does not have a VSID ACL will pass unmodified through the Hyper-V Network Virtualization.
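The routing and VSID ACL rules described above can be summarized in a few lines. This is an illustrative sketch only: the table, function names, and VSID 6001 are hypothetical, and ports without a VSID ACL (which sit outside Hyper-V Network Virtualization) are not modeled.

```python
from typing import Dict, Optional

# Hypothetical policy: VSID -> routing domain ID (RDID).
ROUTING_DOMAIN: Dict[int, int] = {5001: 1, 5002: 1, 5003: 1, 6001: 2}


def route_between_subnets(src_vsid: int, dst_vsid: int,
                          routing_domain: Dict[int, int] = ROUTING_DOMAIN) -> Optional[int]:
    """Return the VSID to stamp on the packet before delivery, or None to drop it.

    Routing is allowed only when both virtual subnets share a routing domain.
    """
    src_rd = routing_domain.get(src_vsid)
    dst_rd = routing_domain.get(dst_vsid)
    if src_rd is None or dst_rd is None or src_rd != dst_rd:
        return None  # different (or unknown) routing domains: drop
    return dst_vsid  # VSID is updated to that of the destination virtual machine


def port_accepts(port_vsid: int, packet_vsid: int) -> bool:
    """VSID ACL on a switch port: deliver only when the VSIDs match."""
    return packet_vsid == port_vsid
```

In this sketch, a packet from VSID 5001 to 5003 is restamped with 5003 and then passes the ACL on the destination port, whereas the same packet arriving with its original VSID 5001 would be rejected by that port.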

When a virtual machine sends a packet, the VSID of the Hyper-V virtual switch port is associated with this packet in the out-of-band (OOB) data. If Generic Routing Encapsulation (GRE) is the IP virtualization mechanism, the GRE Key field of the encapsulated packet contains the VSID.

On the receiving side, Hyper-V Network Virtualization delivers the VSID in the OOB data and the decapsulated packet to the Hyper-V virtual switch. If IP Rewrite is the IP virtualization mechanism, and the packet is destined for a different physical host, the IP addresses are changed from CA addresses to PA addresses, and the VSID in the OOB data is dropped. Hyper-V Network Virtualization verifies a policy and adds the VSID to the OOB data before the packet is passed to the Hyper-V virtual switch.

1.5 Multi-Tenant Compute Considerations

Similar to the storage and network layers, the compute layer of the fabric can be dedicated per tenant or shared across multiple tenants. That decision greatly impacts the design of the compute layer. Two primary decisions are required to begin the design process:

- Will the compute layer be shared between multiple tenants?

- Will the compute infrastructure provide high availability by using failover clustering?

This leads to four high-level design options:

- Dedicated stand-alone server running Hyper-V

- Shared stand-alone server running Hyper-V

- Dedicated Hyper-V failover clusters

- Shared Hyper-V failover clusters
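The four options are simply the combinations of the two yes/no decisions listed earlier. The short Python sketch below enumerates them; the function name `design_option` and its parameters are hypothetical and serve only to make the mapping explicit.

```python
from itertools import product


def design_option(shared: bool, clustered: bool) -> str:
    """Map the two design decisions to one of the four high-level options."""
    tenancy = "Shared" if shared else "Dedicated"
    platform = (
        "Hyper-V failover clusters"
        if clustered
        else "stand-alone server running Hyper-V"
    )
    return f"{tenancy} {platform}"


# Enumerate every combination: clustering is the outer loop, tenancy the inner.
options = [
    design_option(shared, clustered)
    for clustered, shared in product([False, True], repeat=2)
]

for option in options:
    print(option)
```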

Live migration without shared storage (also known as “shared nothing” live migration) in Windows Server 2012 R2 makes stand-alone servers running Hyper-V a viable option when the running virtual machines do not require high availability. It enables virtual machines to be moved from one Hyper-V host running Windows Server 2012 R2 to another, with nothing required but a network connection.

For hosts that deliver stateless application and web hosting services, this may be an option. Live migration without shared storage makes it possible to move virtual machines off a host and evacuate it for patching without causing downtime to the running virtual machines. However, stand-alone hosts do not provide virtual machine high availability: if a host fails, its virtual machines are not automatically started on another host.

The decision to use dedicated or shared Hyper-V hosts is primarily driven by the compliance or business model requirements discussed previously.

1.5.1 Hyper-V Role

The Hyper-V role enables you to create and manage a virtualized computing environment by using the virtualization technology that is built in to Windows Server 2012 R2. Installing the Hyper-V role installs the required components and optionally installs management tools. The required components include the Windows hypervisor, Hyper-V Virtual Machine Management Service, the virtualization WMI provider, and other virtualization components such as the virtual machine bus (VMBus), virtualization service provider, and virtual infrastructure driver.

The management tools for the Hyper-V role consist of:

- GUI-based management tools: Hyper-V Manager (a Microsoft Management Console (MMC) snap-in) and Virtual Machine Connection (which provides access to the video output of a virtual machine so you can interact with the virtual machine).

- Hyper-V-specific cmdlets for Windows PowerShell. Windows Server 2012 R2 and Windows Server 2012 include the Hyper-V module for Windows PowerShell, which provides command-line access to all the functionality that is available in the GUI, in addition to functionality that is not available through the GUI.

The scalability and availability improvements in Hyper-V allow for significantly larger clusters and greater consolidation ratios, which are key to the cost of ownership for enterprises and hosting providers. Hyper-V in Windows Server 2012 R2 supports significantly larger configurations of virtual and physical components than previous releases of Hyper-V. This increased capacity enables you to run Hyper-V on large physical computers and to virtualize high-performance, scaled-up workloads.

Hyper-V provides a multitude of options for segmentation and isolation of virtual machines that are running on the same server. This is critical for shared servers running Hyper-V and cluster scenarios where multiple tenants will host their virtual machines on the same servers. By design, Hyper-V ensures isolation of memory, VMBus, and other system and hypervisor constructs between all virtual machines on a host.

1.5.2 Failover Clustering

Failover Clustering in Windows Server 2012 R2 supports increased scalability, continuously available file-based server application storage, easier management, faster failover, automatic rebalancing, and more flexible architectures for failover clusters.

For the purposes of a multi-tenant design, Hyper-V failover clusters can be used in conjunction with the Scale-Out File Server clusters described earlier to provide an end-to-end Microsoft solution for storage, network, and compute architectures.

1.5.3 Resource Metering

Service providers and enterprises that deploy private clouds need tools to charge business units that they support while providing the business units with the appropriate resources to match their needs. For hosting service providers, it is equally important to issue chargebacks based on the amount of usage by each customer.

To implement advanced billing strategies that measure the assigned capacity of a resource and its actual usage, earlier versions of Hyper-V required users to develop their own chargeback solutions that polled and aggregated performance counters. These solutions could be expensive to develop and sometimes led to loss of historical data.

To assist with more accurate, streamlined chargebacks while protecting historical information, Hyper-V in Windows Server 2012 R2 and Windows Server 2012 provides Resource Metering, which is a feature that allows customers to create cost-effective, usage-based billing solutions. With this feature, service providers can choose the best billing strategy for their business model, and independent software vendors can develop more reliable, end-to-end chargeback solutions by using Hyper-V.
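To show how metered values might feed a usage-based bill, the following Python sketch totals charges per tenant. It is a minimal sketch under stated assumptions, not part of Resource Metering itself. The `VmUsage` fields are loosely modeled on the kinds of values a metering feature reports (average CPU, average memory, disk allocation, and network traffic), and the `Rates` values, tenant names, and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass(frozen=True)
class VmUsage:
    """Hypothetical per-VM usage record for one billing period."""
    vm_name: str
    tenant: str
    avg_cpu_mhz: float
    avg_memory_mb: float
    total_disk_mb: float
    network_in_mb: float
    network_out_mb: float


@dataclass(frozen=True)
class Rates:
    """Hypothetical price list; real rates depend on the business model."""
    per_cpu_mhz: float
    per_memory_mb: float
    per_disk_mb: float
    per_network_mb: float


def vm_charge(usage: VmUsage, rates: Rates) -> float:
    """Charge for one VM: each metered value multiplied by its rate."""
    network_mb = usage.network_in_mb + usage.network_out_mb
    return (
        usage.avg_cpu_mhz * rates.per_cpu_mhz
        + usage.avg_memory_mb * rates.per_memory_mb
        + usage.total_disk_mb * rates.per_disk_mb
        + network_mb * rates.per_network_mb
    )


def tenant_invoices(usages: Iterable[VmUsage], rates: Rates) -> Dict[str, float]:
    """Sum the per-VM charges for each tenant."""
    totals: Dict[str, float] = {}
    for usage in usages:
        totals[usage.tenant] = totals.get(usage.tenant, 0.0) + vm_charge(usage, rates)
    return totals


rates = Rates(per_cpu_mhz=0.01, per_memory_mb=0.002,
              per_disk_mb=0.0001, per_network_mb=0.001)
usages = [
    VmUsage("web01", "contoso", 1000, 2048, 10000, 500, 500),
    VmUsage("web02", "contoso", 500, 1024, 0, 0, 0),
    VmUsage("app01", "fabrikam", 100, 0, 0, 0, 0),
]
invoices = tenant_invoices(usages, rates)
```

Keeping the rates separate from the usage records lets a provider change its billing strategy without touching the collected data.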

1.5.4 Management

This guide deals only with fabric architecture solutions, and not the more comprehensive topic of fabric management by using System Center 2012 R2. However, there are significant management and automation options for multi-server, multi-tenant environments that are enabled by Windows Server 2012 R2 technologies.

Windows Server 2012 R2 provides management efficiency with broader automation for common management tasks. For example, Server Manager in Windows Server 2012 R2 enables multiple servers on the network to be managed effectively from a single computer.

With the Windows PowerShell 4.0 command-line interface, Windows Server 2012 R2 provides a platform for robust, multi-computer automation for all elements of a data center, including servers, Windows operating systems, storage, and networking. It also provides centralized administration and management capabilities such as deploying roles and features remotely to physical and virtual servers, and deploying roles and features to virtual hard disks, even when they are offline.