Microsoft Infrastructure as a Service Foundations-Software Defined Architecture Pattern

As part of the Microsoft IaaS Foundations series, this document provides an overview of one of the Microsoft Infrastructure as a Service design patterns that you can use to drive your own design exercises. These patterns are field tested and represent the results of Microsoft Consulting Services experiences in the field.

Table of Contents (this article)

2 Software Defined Infrastructure Architecture Pattern

This document is part of the Microsoft Infrastructure as a Service Foundations series. The series includes the following documents:

Chapter 1: Microsoft Infrastructure as a Service Foundations

Chapter 2: Microsoft Infrastructure as a Service Compute Foundations

Chapter 3: Microsoft Infrastructure as a Service Network Foundations

Chapter 4: Microsoft Infrastructure as a Service Storage Foundations

Chapter 5: Microsoft Infrastructure as a Service Virtualization Platform Foundations

Chapter 6: Microsoft Infrastructure as a Service Design Patterns–Overview

Chapter 7: Microsoft Infrastructure as a Service Foundations—Converged Architecture Pattern

Chapter 8: Microsoft Infrastructure as a Service Foundations-Software Defined Architecture Pattern (this article)

Chapter 9: Microsoft Infrastructure as a Service Foundations-Multi-Tenant Designs

For more information about the Microsoft Infrastructure as a Service Foundations series, please see Chapter 1: Microsoft Infrastructure as a Service Foundations.

Contributors:

Adam Fazio – Microsoft

David Ziembicki – Microsoft

Joel Yoker – Microsoft

Artem Pronichkin – Microsoft

Jeff Baker – Microsoft

Michael Lubanski – Microsoft

Robert Larson – Microsoft

Steve Chadly – Microsoft

Alex Lee – Microsoft

Yuri Diogenes – Microsoft

Carlos Mayol Berral – Microsoft

Ricardo Machado – Microsoft

Sacha Narinx – Microsoft

Tom Shinder – Microsoft

Jim Dial – Microsoft

Applies to:

Windows Server 2012 R2

System Center 2012 R2

Windows Azure Pack – October 2014 feature set

Microsoft Azure – October 2014 feature set

Document Version:

1.0

1 Introduction

The goal of the Infrastructure-as-a-Service (IaaS) Foundations series is to help enterprise IT and cloud service providers understand, develop, and implement IaaS infrastructures. This series provides comprehensive conceptual background, a reference architecture and a reference implementation that combines Microsoft software, consolidated guidance, and validated configurations with partner technologies such as compute, network, and storage architectures, in addition to value-added software features.

The IaaS Foundations Series utilizes the core capabilities of the Windows Server operating system, Hyper-V, System Center, Windows Azure Pack and Microsoft Azure to deliver on-premises and hybrid cloud Infrastructure as a Service offerings.

As part of the Microsoft IaaS Foundations series, this document provides an overview of Microsoft Infrastructure as a Service design patterns that you can use to drive your own design exercises. These patterns are field tested and represent the results of Microsoft Consulting Services experiences in the field. The pattern discussed in this article is the Software Defined Infrastructure pattern, which represents Microsoft’s recommended pattern for building out Infrastructure as a Service deployments both on-premises and in hosted service provider environments.

2 Software Defined Infrastructure Architecture Pattern

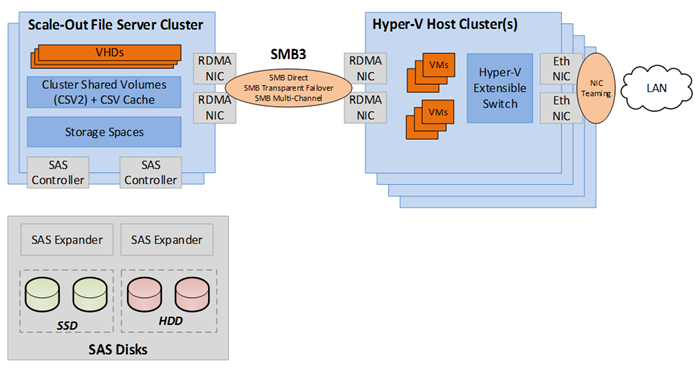

Key attributes of the Software Defined Infrastructure pattern (previously called the Continuous Availability over SMB Storage pattern) include the use of the SMB 3.02 protocol, and in the case of Variation A, the implementation of the new Scale-Out File Server cluster design pattern in Windows Server 2012 R2.

This section outlines a finished example of a Software Defined Infrastructure pattern that uses Variation A. The following diagram shows the high-level architecture.

Figure 1 Software Defined Infrastructure storage architecture pattern

The design consists of one or more Windows Server 2012 R2 Scale-Out File Server clusters (left) combined with one or more Hyper-V host clusters (right). In this sample design, a shared SAS storage architecture is utilized by the Scale-Out File Server clusters. The Hyper-V hosts store their virtual machines on SMB shares in the file cluster, which is built on top of Storage Spaces and Cluster Shared Volumes.

A key choice in the Software Defined Infrastructure pattern is whether to use InfiniBand or Ethernet as the network transport between the failover clusters that are managed by Hyper-V and the clusters that are managed by the Scale-Out File Server. Currently, InfiniBand provides higher speeds per port than Ethernet (56 Gbps for InfiniBand compared to 10 or 40 GbE). However, InfiniBand requires a separate switching infrastructure, whereas an Ethernet-based approach can utilize a single physical network infrastructure.
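The trade-off above can be sketched as a small comparison. The following Python fragment is only an illustration: the per-port speeds and the switching requirement come from the preceding paragraph, while the dictionary names, the function name, and the two-port host are assumptions made for the example. It reports raw line rate and ignores protocol overhead.

```python
# Per-port line rates in Gbps, as quoted in the text above.
PORT_SPEED_GBPS = {
    "10 GbE": 10,
    "40 GbE": 40,
    "56 Gbps InfiniBand": 56,
}

# Per the text, InfiniBand needs its own switching infrastructure,
# whereas Ethernet can share a single physical network.
NEEDS_SEPARATE_SWITCHING = {
    "10 GbE": False,
    "40 GbE": False,
    "56 Gbps InfiniBand": True,
}


def storage_bandwidth_gbps(transport: str, ports_per_host: int) -> int:
    """Raw aggregate line rate per host; protocol overhead is ignored."""
    return PORT_SPEED_GBPS[transport] * ports_per_host


# Hypothetical host with two storage ports.
for transport in PORT_SPEED_GBPS:
    total = storage_bandwidth_gbps(transport, ports_per_host=2)
    extra = "yes" if NEEDS_SEPARATE_SWITCHING[transport] else "no"
    print(f"{transport}: {total} Gbps per host, separate switching: {extra}")
```

A comparison like this only frames the decision; the cost of the additional switching infrastructure and the throughput that the storage back end can actually deliver still have to be weighed separately.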

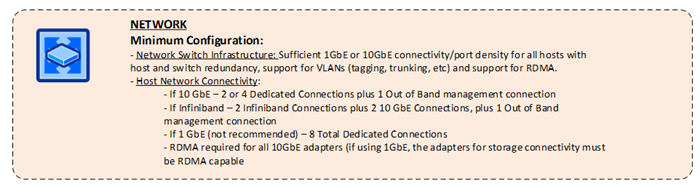

2.1 Compute

The compute infrastructure is one of the primary elements that provides fabric scale to support a large number of workloads. In the Software Defined Infrastructure pattern, an array of hosts that have the Hyper-V role enabled gives the fabric the capability to scale in the form of a large-scale failover cluster.

Figure 2 provides an overview of the compute layer of the private cloud fabric infrastructure.

Figure 2 Microsoft Azure poster

With the exception of storage connectivity, the compute infrastructure of this design pattern is similar to the infrastructure of the converged and non-converged patterns. However, the Hyper-V host clusters utilize the SMB protocol over Ethernet or InfiniBand to connect to storage.

2.1.1 Hyper-V Host Infrastructure

The server infrastructure consists of a minimum of four hosts and a maximum of 64 hosts in a single Hyper-V failover cluster instance. Although a minimum of two nodes is supported by failover clustering in Windows Server 2012 R2, a configuration at that scale does not provide sufficient reserve capacity to achieve cloud attributes such as elasticity and resource pooling.

Note

The same sizing and availability guidance that is provided in the Hyper-V Host Infrastructure subsection (in the Non-Converged Architecture Pattern section) applies to this pattern.
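The host-count limits for this pattern can be expressed as a simple planning check. The sketch below is illustrative only: the minimum of four and the maximum of 64 hosts come from the text, whereas the function name and the idea of adding a fixed number of reserve hosts on top of the hosts needed for workloads are assumptions made for the example.

```python
MIN_HOSTS = 4    # minimum for this pattern, per the text
MAX_HOSTS = 64   # maximum hosts in one Hyper-V failover cluster, per the text


def plan_cluster(hosts_for_workload: int, reserve_hosts: int = 1) -> int:
    """Return the number of hosts to deploy in a single cluster.

    reserve_hosts is a hypothetical planning input: spare hosts kept
    free so that the cluster can absorb a failure or a burst in demand.
    """
    if hosts_for_workload < 1 or reserve_hosts < 0:
        raise ValueError("host counts must be positive")
    total = max(hosts_for_workload + reserve_hosts, MIN_HOSTS)
    if total > MAX_HOSTS:
        raise ValueError(
            f"{total} hosts exceed the {MAX_HOSTS}-host cluster limit; "
            "plan an additional cluster"
        )
    return total


print(plan_cluster(2))       # small workload is raised to the pattern minimum
print(plan_cluster(10, 2))   # ten workload hosts plus two reserve hosts
```

When the total exceeds the single-cluster limit, the sketch raises an error instead of silently capping the value, because the design response is to add another host cluster.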

Figure 3 provides a conceptual view of this architecture for the Software Defined Infrastructure pattern.

Figure 3 Microsoft Azure poster

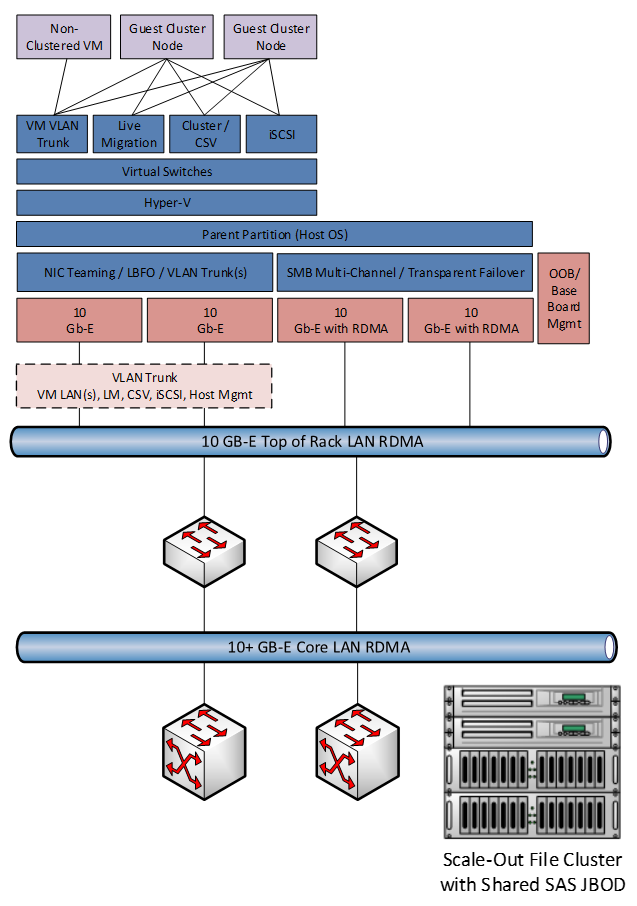

A key factor in this compute infrastructure is determining whether Ethernet or InfiniBand will be utilized as the transport between the Hyper-V host clusters and the Scale-Out File Server clusters. Another consideration is how RDMA (recommended) will be deployed to support the design.

As outlined in previous sections, RDMA cannot be used in conjunction with NIC Teaming. Therefore, in this design, which utilizes a 10 GbE network fabric, each Hyper-V host server in the compute layer contains four 10 GbE network adapters. One pair is for virtual machine and cluster traffic, and it utilizes NIC Teaming. The other pair is for storage connectivity to the Scale-Out File Server clusters, and it is RDMA-capable.
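The four-adapter layout described above can be captured as data and checked against the rule that RDMA and NIC Teaming are not combined. This is a minimal sketch; the `Adapter` type, the adapter names, and the `validate_layout` function are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Adapter:
    name: str            # hypothetical adapter label
    role: str            # "vm_cluster" or "storage"
    teamed: bool         # member of a NIC team
    rdma_enabled: bool   # RDMA in use on this adapter


# Layout from the text: one teamed pair for virtual machine and cluster
# traffic, and one RDMA pair for storage (SMB) traffic.
HOST_ADAPTERS = [
    Adapter("NIC1", "vm_cluster", teamed=True, rdma_enabled=False),
    Adapter("NIC2", "vm_cluster", teamed=True, rdma_enabled=False),
    Adapter("NIC3", "storage", teamed=False, rdma_enabled=True),
    Adapter("NIC4", "storage", teamed=False, rdma_enabled=True),
]


def validate_layout(adapters: list[Adapter]) -> list[str]:
    """Return a list of problems; an empty list means the layout is consistent."""
    problems = []
    for adapter in adapters:
        if adapter.teamed and adapter.rdma_enabled:
            problems.append(f"{adapter.name}: RDMA cannot be combined with NIC Teaming")
        if adapter.role == "storage" and not adapter.rdma_enabled:
            problems.append(f"{adapter.name}: storage adapter should use RDMA")
    return problems


print(validate_layout(HOST_ADAPTERS))  # no problems for the layout above
```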

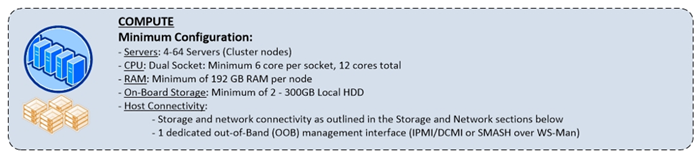

2.2 Network

When designing the fabric network for the failover cluster for Windows Server 2012 R2 Hyper-V, it is important to provide the necessary hardware and network throughput to provide resiliency and Quality of Service (QoS). Resiliency can be achieved through availability mechanisms, while QoS can be provided through dedicated network interfaces or through a combination of hardware and software QoS capabilities.

Figure 4 provides an overview of the network layer of the private cloud fabric infrastructure.

Figure 4 Network minimum configuration

2.2.1 Host Connectivity

When you are designing the network topology and associated network components of the private cloud infrastructure, certain key considerations apply. You should provide:

- Adequate network port density: Designs should contain top-of-rack switches that have sufficient density to support all host network interfaces.

- Adequate interfaces to support network resiliency: Designs should contain a sufficient number of network interfaces to establish redundancy through NIC Teaming.

- Network Quality of Service: Although the use of dedicated cluster networks is an acceptable way to achieve Quality of Service, utilizing high-speed network connections in combination with hardware- or software-defined network QoS policies provides a more flexible solution.

- RDMA support: For the adapters (InfiniBand or Ethernet) that will be used for storage (SMB) traffic, RDMA support is required.

The network architecture for this design pattern is critical because all storage traffic will traverse a network (Ethernet or InfiniBand) between the Hyper-V host clusters and the Scale-Out File Server clusters.

2.3 Storage

2.3.1 Storage Connectivity

When the operating system volume of the parent partition uses direct-attached storage, the host requires an internal SATA or SAS controller, unless the design utilizes SAN for all system storage requirements, including boot from SAN for the host operating system. (Fibre Channel and iSCSI boot are supported in Windows Server 2012 R2 and Windows Server 2012.)

Depending on the storage transport that is utilized for the Software Defined Infrastructure design pattern, the following adapters are required to allow shared storage access:

Hyper-V host clusters:

- 10 GbE adapters that support RDMA

- InfiniBand adapters that support RDMA

Scale-Out File Server clusters:

- 10 GbE adapters that support RDMA

- InfiniBand adapters that support RDMA

- SAS controllers (host bus adapters) for access to shared SAS storage

The number of adapters and ports that are required for storage connectivity between the Hyper-V host clusters and the Scale-Out File Server clusters depends on a variety of size and density planning factors. The larger the clusters and the higher the number of virtual machines that are to be hosted, the more bandwidth and IOPS capacity are required between the clusters.
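As a rough illustration of how virtual machine density drives the number of storage ports per host, consider the following sketch. Every input is a hypothetical planning figure rather than a recommendation: the average per-VM throughput, the 70 percent usable fraction of a port, and the floor of two ports (a redundant pair) are assumptions made for the example.

```python
import math


def storage_ports_needed(
    vms_per_host: int,
    avg_mbps_per_vm: float,
    port_gbps: float = 10.0,
    usable_fraction: float = 0.7,
    min_ports: int = 2,
) -> int:
    """Estimate storage-facing ports per Hyper-V host.

    avg_mbps_per_vm is the assumed average storage throughput of one
    virtual machine in megabits per second.
    """
    demand_gbps = vms_per_host * avg_mbps_per_vm / 1000
    usable_gbps_per_port = port_gbps * usable_fraction
    return max(min_ports, math.ceil(demand_gbps / usable_gbps_per_port))


print(storage_ports_needed(50, 100))    # light load stays at the redundant pair
print(storage_ports_needed(200, 100))   # denser hosts need more ports
```

A bandwidth estimate of this kind covers only one dimension; IOPS capacity on the Scale-Out File Server side has to be sized separately from measured workload profiles.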

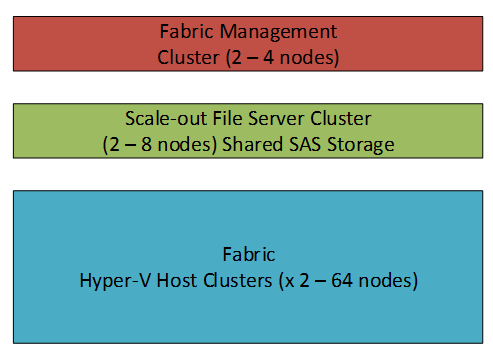

2.3.2 Scale-Out File Server Cluster Architecture

The key attribute of Variations A and B of the Software Defined Infrastructure design pattern is the use of Scale-Out File Server clusters in Windows Server 2012 R2 as the “front end” or access point to storage. The Hyper-V host clusters that run virtual machines have no direct storage connectivity. Instead, they have SMB Direct (RDMA)–enabled network adapters, and they store their virtual machines on file shares that are presented by the Scale-Out File Server clusters.

For the Microsoft Infrastructure as a Service patterns, there are two options for the Scale-Out File Server clusters that are required for Variations A and B. The first is the Fast Track “small” SKU, or “Cluster-in-a-Box,” which can serve as the storage cluster. Any validated small SKU can be used as the storage tier for the “medium” IaaS PLA Software Defined Infrastructure Storage pattern. The small SKU is combined with one or more dedicated Hyper-V host clusters for the fabric.

The second option is a larger, dedicated Scale-Out File Server cluster that meets all of the validation requirements that are outlined in the Software Defined Infrastructure Storage section. Figures 5, 6, and 7 illustrate these options.

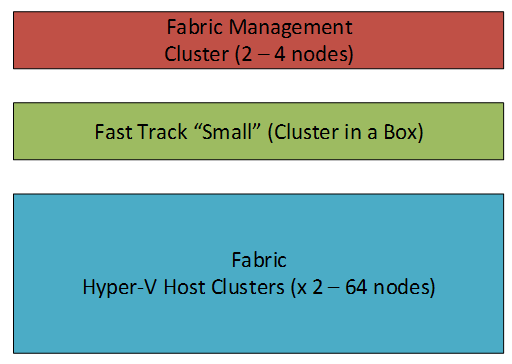

Figure 5 Software Defined Infrastructure storage options

In the preceding design, a dedicated fabric management cluster and one or more fabric clusters use a Scale-Out File Server cluster as the storage infrastructure.

Figure 6 Another option for fabric management design

In the preceding design, a dedicated fabric management cluster and one or more fabric clusters use a Fast Track “small” (or “Cluster-in-a-Box”) SKU as the storage infrastructure.

2.3.2.1 Cluster-in-a-Box

As part of the Fast Track program, Microsoft has been working with server industry customers to create a new generation of simpler, high-availability solutions that deliver small implementations as a “Cluster-in-a-Box” or as consolidation appliance solutions at a lower price.

In this scenario, the solution is designed as a storage “building block” for the data center, such as a dedicated storage appliance. Examples of this scenario are cloud solution builders and enterprise data centers. For example, suppose that the solution supported Server Message Block (SMB) 3.0 file shares for Hyper-V or SQL Server. In this case, the solution would enable the transfer of data from the drives to the network at bus and wire speeds with CPU utilization that is comparable to Fibre Channel.

In this scenario, the file server is enabled in an office environment in an enterprise equipment room that provides access to a switched network. As a high-performance file server, the solution can support variable workloads, hosted line-of-business (LOB) applications, and data.

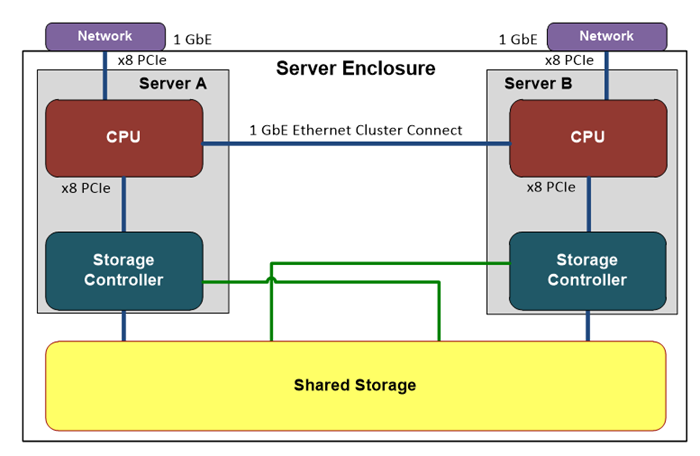

The “Cluster-in-a-Box” design pattern requires a minimum of two clustered server nodes and shared storage that can be housed within a single enclosure design or a multiple enclosure design, as shown in Figure 7.

Figure 7 Cluster-in-a-Box

2.3.2.2 Fast Track Medium Scale-Out File Server Cluster

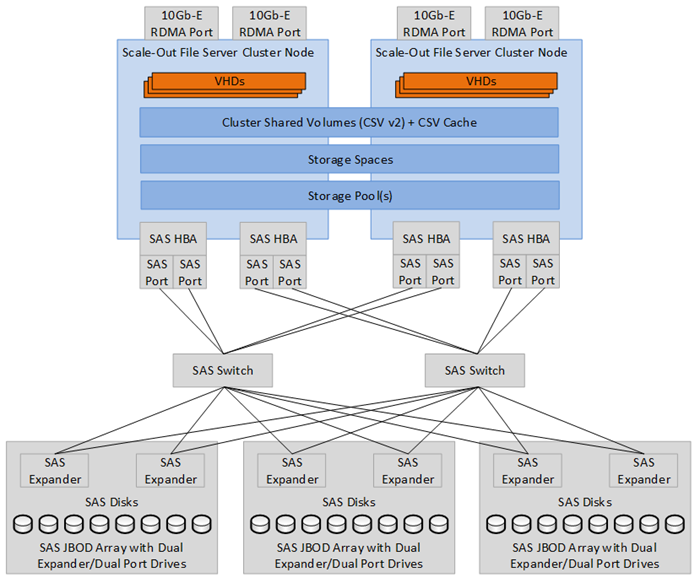

For higher-end scenarios in which greater capacity or I/O performance is required, larger multinode Scale-Out File Server clusters can be utilized. Higher performing networks (such as 10 GbE or 56 Gbps InfiniBand) can be used between the failover clusters that are managed by Hyper-V and the file cluster.

The Scale-Out File Server cluster design is scaled by adding file servers to the cluster. By using CSV 2.0, administrators can create file shares that provide simultaneous access to data files, with direct I/O, through all nodes in a file-server cluster. This provides better utilization of network bandwidth and load balancing of the file server clients (Hyper-V hosts).

Additional nodes also provide additional storage connectivity, which enables further load balancing between a larger number of servers and disks.

In many cases, the scaling of the file server cluster when you use SAS JBOD runs into limits in terms of how many adapters and individual disk trays can be attached to the same cluster. You can avoid these limitations and achieve additional scale by using a switched SAS infrastructure, as described in previous sections.

Figure 8 illustrates this approach. For simplicity, only file-cluster nodes are diagrammed; however, this could easily be four nodes or eight nodes for scale-out.

Figure 8 Medium scale-out file server

Highlights of this design include the SAS switches, which allow a significantly larger number of disk trays and paths between all hosts and the storage. This approach can enable hundreds of disks and many connections per server (for instance, two or more four-port SAS cards per server).

To have resiliency against the failure of one SAS enclosure, you can use two-way mirroring (use a minimum of three disks in the mirror for failover clustering and CSV) together with enclosure awareness, which requires three physical enclosures. Two-way mirror spaces must use three or more physical disks. Therefore, three enclosures are required, with one disk in each enclosure, so that the storage pool is resilient to one enclosure failure. For this design, the pool must be configured with the IsEnclosureAware flag, and the enclosures must be certified to use the Storage Spaces feature in Windows Server 2012 R2.

Note:

For enclosure awareness, Storage Spaces leverages the array’s failure and identify lights to indicate drive failure or a specific drive’s location within the disk tray. The array or enclosure must support SCSI Enclosure Services (SES) 3.0. Enclosure awareness is independent of a SAS switch or the number of compute nodes.
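The enclosure-awareness requirements for a two-way mirror can be summarized as a checklist. The sketch below only encodes the rules stated in this section (enclosure awareness enabled, at least three physical disks, and disks spread over at least three enclosures); it does not model how Storage Spaces places data, and the function name and enclosure labels are hypothetical.

```python
from collections import Counter


def meets_enclosure_resiliency_rules(
    disk_enclosures: list[str],
    is_enclosure_aware: bool,
    min_disks: int = 3,
    min_enclosures: int = 3,
) -> bool:
    """Check a two-way mirror pool against the rules stated in the text.

    disk_enclosures holds one entry per physical disk: the label of the
    enclosure that contains the disk.
    """
    disks_per_enclosure = Counter(disk_enclosures)
    return (
        is_enclosure_aware
        and len(disk_enclosures) >= min_disks
        and len(disks_per_enclosure) >= min_enclosures
    )


# One disk in each of three enclosures, with enclosure awareness enabled.
print(meets_enclosure_resiliency_rules(["E1", "E2", "E3"], True))
# Three disks, but only two enclosures.
print(meets_enclosure_resiliency_rules(["E1", "E1", "E2"], True))
```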

This design also illustrates the use of 10 GbE with RDMA for the file server cluster to provide high bandwidth and low latency for SMB traffic. InfiniBand could be used instead if requirements dictate. The key to a good design is balancing the I/O capacity that is available through the SAS infrastructure against the demands of the Hyper-V failover clusters that use the file cluster for their storage. For example, an extremely high-performance InfiniBand infrastructure does not make sense if the file servers have only two SAS connections to storage.
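The balance between the network front end and the SAS back end of a file server can be illustrated with a rough comparison of raw line rates. The sketch below assumes that each SAS connection is a four-lane wide port at 6 Gbps per lane; that figure, like the function name, is an assumption for the example and should be replaced with the values for the actual hardware.

```python
def likely_bottleneck(
    network_ports: int,
    network_gbps_per_port: float,
    sas_connections: int,
    sas_lanes: int = 4,             # assumed four-lane wide port
    sas_gbps_per_lane: float = 6,   # assumed 6 Gbps SAS
) -> str:
    """Compare raw front-end and back-end bandwidth of one file server."""
    front_end = network_ports * network_gbps_per_port
    back_end = sas_connections * sas_lanes * sas_gbps_per_lane
    if front_end > back_end:
        return "storage"
    if back_end > front_end:
        return "network"
    return "balanced"


# Two 56 Gbps InfiniBand ports in front of only two SAS connections.
print(likely_bottleneck(2, 56, sas_connections=2))
# Two 10 GbE ports in front of the same two SAS connections.
print(likely_bottleneck(2, 10, sas_connections=2))
```

Under these assumptions, the first case shows the situation that the text warns about: the network can carry far more than the SAS connections can supply, so the investment in the faster transport is not realized.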

2.3.3 Storage Infrastructure

For Hyper-V failover-cluster and workload operations in a continuous availability infrastructure, the fabric components utilize the following types of storage:

- Operating system: Non-shared physical boot disks (direct-attached storage or SAN) for the file servers and Hyper-V host servers.

- Cluster witness: File share to support the failover cluster quorum for the file server clusters and the Hyper-V host clusters (a shared witness disk is also supported).

- Cluster Shared Volumes (CSV): One or more shared CSV LUNs for virtual machines on Storage Spaces that are backed by SAS JBOD.

- Guest clustering [optional]: Requires iSCSI or shared VHDX. For this pattern, adding the iSCSI Target Server to the file server cluster nodes can enable iSCSI shared storage for guest clustering. However, shared VHDX is the recommended approach because it maintains separation between the consumer and the virtualization infrastructure that is supplied by the provider.

As outlined in the overview, fabric and fabric management host controllers require sufficient storage to account for the operating system and paging files. However, in Windows Server 2012 R2, we recommend that virtual memory be configured as “Automatically manage paging file size for all drives.”

The sizing of the physical storage architecture for this design pattern is highly dependent on the quantity and type of virtual machine workloads that are to be hosted.

Given the workload, virtual disks often exceed multiple gigabytes. Where it is supported by the workload, we recommend using dynamically expanding disks to provide higher density and more efficient use of storage.

Note:

CSV on the Scale-Out File Server clusters must be configured in Windows as a basic disk that is formatted as NTFS. Although ReFS is supported on CSV, it is not recommended, and it is specifically not recommended for IaaS. In addition, a CSV cannot be used as a witness disk, and it cannot have Windows Data Deduplication enabled.

There is no restriction on the number of virtual machines that an individual CSV can support, because metadata updates on a CSV are orchestrated on the server side, and they run in parallel to provide no interruption and increased scalability.

Performance considerations fall primarily on the IOPS that the file cluster provides, given that multiple servers from the Hyper-V failover cluster connect through SMB to a commonly shared CSV on the file cluster. Providing more than one CSV to the Hyper-V failover cluster within the fabric can increase performance, depending on the file cluster configuration.