Microsoft Infrastructure as a Service Compute Foundations

This document discusses the compute infrastructure components that are relevant for a Microsoft IaaS infrastructure and provides guidelines and requirements for building an IaaS compute infrastructure using Microsoft products and technologies.

This document is part of the Microsoft Infrastructure as a Service Foundations series. The series includes the following documents:

Chapter 1: Microsoft Infrastructure as a Service Foundations

Chapter 2: Microsoft Infrastructure as a Service Compute Foundations (this article)

Chapter 3: Microsoft Infrastructure as a Service Network Foundations

Chapter 4: Microsoft Infrastructure as a Service Storage Foundations

Chapter 5: Microsoft Infrastructure as a Service Virtualization Platform Foundations

Chapter 6: Microsoft Infrastructure as a Service Design Patterns–Overview

Chapter 7: Microsoft Infrastructure as a Service Foundations—Converged Architecture Pattern

Chapter 8: Microsoft Infrastructure as a Service Foundations-Software Defined Architecture Pattern

Chapter 9: Microsoft Infrastructure as a Service Foundations-Multi-Tenant Designs

For more information about the Microsoft Infrastructure as a Service Foundations series, see Chapter 1: Microsoft Infrastructure as a Service Foundations.

Contributors:

Adam Fazio – Microsoft

David Ziembicki – Microsoft

Joel Yoker – Microsoft

Artem Pronichkin – Microsoft

Jeff Baker – Microsoft

Michael Lubanski – Microsoft

Robert Larson – Microsoft

Steve Chadly – Microsoft

Alex Lee – Microsoft

Carlos Mayol Berral – Microsoft

Ricardo Machado – Microsoft

Sacha Narinx – Microsoft

Tom Shinder – Microsoft

Applies to:

Windows Server 2012 R2

System Center 2012 R2

Windows Azure Pack – October 2014 feature set

Microsoft Azure – October 2014 feature set

Document Version:

1.0

1 Introduction

The goal of the Infrastructure-as-a-Service (IaaS) Foundations series is to help enterprise IT and cloud service providers understand, develop, and implement IaaS infrastructures. This series provides comprehensive conceptual background, a reference architecture and a reference implementation that combines Microsoft software, consolidated guidance, and validated configurations with partner technologies such as compute, network, and storage architectures, in addition to value-added software features.

The IaaS Foundations Series utilizes the core capabilities of the Windows Server operating system, Hyper-V, System Center, Windows Azure Pack and Microsoft Azure to deliver on-premises and hybrid cloud Infrastructure as a Service offerings.

As part of Microsoft IaaS Foundations series, this document discusses the compute infrastructure components that are relevant to a Microsoft IaaS infrastructure and provides guidelines and requirements for building a compute infrastructure using Microsoft products and technologies. These components can be used to compose an IaaS solution based on private clouds, public clouds (for example, in a hosting service provider environment) or hybrid clouds. Each major section of this document will include sub-sections on private, public and hybrid infrastructure elements. Discussions of public cloud components are scoped to Microsoft Azure services and capabilities.

2 On-premises

2.1 Compute Architecture

2.1.1 Server Architecture

The host server architecture is a critical component of the virtualized infrastructure, and a key variable in calculating server consolidation ratios and cost analysis. The ability of the host server to handle the workload of a large number of consolidation candidates increases the consolidation ratio and helps provide the desired cost benefit.

The system architecture of the host server refers to the general category of the server hardware. Examples include:

- Rack mounted servers

- Blade servers

- Large symmetric multiprocessor (SMP) servers

The primary tenet to consider when selecting system architectures is that each server running Hyper-V will contain multiple guests with multiple workloads. Critical components for success include decisions around:

- Processor

- RAM

- Storage

- Network capacity

- High I/O capacity

- Low latency

The host server must be able to provide the required capacity in each of these categories.

Note:

The Windows Server Catalog is useful for assisting customers in selecting appropriate hardware. It contains information about all servers, storage, and other hardware devices that are certified for Windows Server 2012 R2 and Hyper-V. However, the logo program and support policy for failover-cluster solutions changed in Windows Server 2012, and cluster solutions are not listed in the Windows Server Catalog. All individual components that make up a cluster configuration must earn the appropriate "Certified for" or "Supported on" Windows Server designations. These designations are listed in their device-specific category in the Windows Server Catalog.

2.1.1.1 Server and Blade Network Connectivity

Multiple network adapters or multiport network adapters are recommended on each host server. For converged designs, network technologies that provide teaming or virtual network adapters can be utilized, provided that two or more physical adapters can be teamed for redundancy, and multiple virtual network adapters or VLANs can be presented to the hosts for traffic segmentation and bandwidth control.

2.1.1.2 Storage Multipath I/O

Multipath I/O (MPIO) architecture supports iSCSI, Fibre Channel, and SAS SAN connectivity by establishing multiple sessions or connections to a storage array.

Multipath solutions use redundant physical path components—adapters, cables, and switches—to create logical paths between the server and the storage device. If one or more of these components should fail (causing the path to fail), multipath logic uses an alternate path for I/O, so that applications can still access their data. Each network adapter (in the iSCSI case) or HBA should be connected by using redundant switch infrastructures, to provide continued access to storage in the event of a failure in a storage fabric component.

Failover times vary by storage vendor, and they can be configured by using timers in the Microsoft iSCSI Initiator driver or by modifying the parameter settings of the Fibre Channel host bus adapter driver.

In all cases, storage multipath solutions should be used. Generally, storage vendors will build a device-specific module on top of the MPIO software in Windows Server 2012 R2 or Windows Server 2012. Each device-specific module and HBA will have its own unique multipath options and recommended number of connections.
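The path failover behavior described in this section can be illustrated with a minimal Python sketch. It models a simple failover-only policy and is not the MPIO implementation; the `Path` class, the `select_path` function, and the path names are hypothetical, and vendor device-specific modules typically offer additional policies.

```python
from dataclasses import dataclass


@dataclass
class Path:
    """One logical path from server to storage (hypothetical model)."""
    name: str
    healthy: bool = True


def select_path(paths):
    """Return the first healthy path, or raise if every path has failed."""
    for path in paths:
        if path.healthy:
            return path
    raise RuntimeError("all paths to the storage device have failed")


# Two paths that use separate adapters and separate switches.
paths = [Path("hba0-switchA"), Path("hba1-switchB")]

first = select_path(paths).name          # I/O starts on the first path
paths[0].healthy = False                 # simulate a failed adapter, cable, or switch
after_failure = select_path(paths).name  # I/O continues on the redundant path
```

Because each path uses its own adapter and switch, a single component failure leaves at least one path available.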

2.1.1.3 Consistent Device Naming (CDN)

Windows Server 2012 R2 supports consistent device naming (CDN), which provides the ability for hardware manufacturers to identify descriptive names of onboard network adapters within the BIOS. Windows Server 2012 R2 assigns these descriptive names to each interface, providing users with the ability to match chassis printed interface names with the network interfaces that are created within Windows. The specification for this change is outlined in the Slot Naming PCI-SIG Engineering Change Request.

2.1.2 Failover Clustering

2.1.2.1 Cluster-Aware Updating (CAU)

Cluster-Aware Updating (CAU) reduces server downtime and user disruption by allowing IT administrators to update clustered servers with little or no loss in availability when updates are performed on cluster nodes. CAU transparently takes one node of the cluster offline, installs the updates, performs a restart (if necessary), brings the node back online, and moves on to the next node. This feature is integrated into the existing Windows Update management infrastructure, and it can be extended and automated with Windows PowerShell for integrating into larger IT automation initiatives.

CAU facilitates the cluster updating operation while running from a computer running Windows Server 2012 R2 or Windows 8.1. The computer running the CAU process is called an orchestrator. CAU supports two modes of operation:

- Remote-updating mode. In remote-updating mode, a computer that is remote from the cluster that is being updated acts as an orchestrator.

- Self-updating mode. In self-updating mode, one of the cluster nodes that is being updated acts as an orchestrator, and it is capable of self-updating the cluster on a user-defined schedule.

When you use CAU, the end-to-end cluster update process is cluster-aware and completely automated. It integrates with an existing Windows Update Agent (WUA) and Microsoft Windows Server Update Services (WSUS) infrastructure.

CAU also includes an extensible architecture that supports new plug-in development to orchestrate any node-updating tools, such as custom software installers, BIOS updating tools, and network adapter and HBA firmware updating tools. After they have been integrated with CAU, these tools can work across all cluster nodes in a cluster-aware manner.
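The node-by-node sequence that CAU follows can be sketched in Python as shown below. This is an illustration of the ordering only, not the CAU implementation; the `cluster_aware_update` function and the `apply_updates` callback are hypothetical names.

```python
def cluster_aware_update(nodes, apply_updates):
    """Update nodes one at a time so the remaining nodes keep serving roles.

    nodes: list of node names.
    apply_updates: hypothetical callback that installs updates on one node
    and returns True if that node requires a restart.
    """
    log = []
    for node in nodes:
        log.append(f"drain {node}")    # take the node offline for updating
        needs_restart = apply_updates(node)
        log.append(f"update {node}")
        if needs_restart:
            log.append(f"restart {node}")
        log.append(f"resume {node}")   # bring the node back online
    return log


# In this example, only node1 requires a restart after its updates.
log = cluster_aware_update(["node1", "node2"], lambda node: node == "node1")
```

Only one node is out of service at any time, which is why the cluster as a whole stays available during the update run.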

2.1.2.2 Cluster Shared Volumes (CSV)

The Cluster Shared Volumes (CSV) feature was introduced in Windows Server 2008 R2 as a more efficient way for administrators to deploy storage for cluster-enabled virtual machines on Hyper-V and other server roles, such as Scale-Out File Server and SQL Server.

Before CSV, administrators had to provision a LUN on shared storage for each virtual machine, so that each machine had exclusive access to its virtual hard disks, and conflicting write operations could be avoided. By using CSV, all cluster hosts have simultaneous access to a single or multiple shared volumes where storage for multiple virtual machines can be hosted. Thus, there is no need to provision a new LUN whenever you create a new virtual machine.

Windows Server 2012 R2 provides the following CSV capabilities:

- Application and file storage: CSV 2.0 provides capabilities to clusters through shared namespaces to share configurations across all cluster nodes, including the ability to build continuously available cluster-wide file systems. Application storage can be served from the same shared resource as data, eliminating the need to deploy two clusters (an application and a separate storage cluster) to support high availability application scenarios.

- Integration with other features in Windows Server 2012 R2: Allows for inexpensive scalability, reliability, and management simplicity through integration with Storage Spaces. You gain high performance and resiliency capabilities with SMB Direct, SMB Multichannel, and thin provisioning. In addition, Windows Server 2012 R2 supports ReFS, data deduplication, parity, tiered Storage Spaces, and Write-back cache in Storage Spaces.

- Single namespace: Provides a single consistent file namespace where files have the same name and path when viewed from any node in the cluster. CSV are exposed as directories and subdirectories under the C:\ClusterStorage directory.

- Backup and restore: Supports the full feature set of VSS and also supports hardware and software backup of CSV. CSV also offers a distributed backup infrastructure for software snapshots. The Software Snapshot Provider coordinates the creation of a CSV 2.0 snapshot, provides point-in-time semantics at the cluster level, and supports remote snapshots.

- Placement policies: CSV ownership is evenly distributed across the failover cluster nodes, based on the number of CSV that each node owns. Ownership is automatically rebalanced during conditions such as restart, failover, and the addition of cluster nodes.

- Resiliency: The SMB protocol is comprised of multiple instances per failover cluster node: a default instance that handles incoming traffic from SMB clients that access regular file shares, and a second CSV instance that handles only internode CSV metadata access and redirected I/O traffic between nodes. This improves the scalability of internode SMB traffic between CSV nodes.

- CSV Cache: Allows system memory (RAM) to be used as a write-through cache. The CSV Cache provides caching of read-only, unbuffered I/O, which can improve performance for applications that use unbuffered I/O when accessing files (for example, Hyper-V). CSV Cache delivers caching at the block level, which enables it to perform caching of pieces of data being accessed within the VHD file. CSV Cache reserves its cache from system memory and handles orchestration across the sets of nodes in the cluster. In Windows Server 2012 R2, CSV Cache is enabled by default and you can allocate up to 80% of the total physical RAM for the CSV write-through cache.
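The placement policy in the preceding list distributes CSV ownership by the number of volumes that each node owns. A minimal Python sketch of that idea follows. It is a simplified model rather than the failover clustering algorithm, and the `assign_csv_owners` function, node names, and volume names are hypothetical.

```python
def assign_csv_owners(volumes, nodes):
    """Assign each volume to the node that currently owns the fewest volumes."""
    owned = {node: [] for node in nodes}
    for volume in volumes:
        # min() returns the first node with the lowest count, so ties go
        # to the node listed earlier.
        target = min(nodes, key=lambda n: len(owned[n]))
        owned[target].append(volume)
    return owned


owners = assign_csv_owners(
    ["Volume1", "Volume2", "Volume3", "Volume4", "Volume5"],
    ["node1", "node2"],
)
```

With five volumes and two nodes, no node owns more than one volume above any other node.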

There are several CSV deployment models, which are outlined in the following sections.

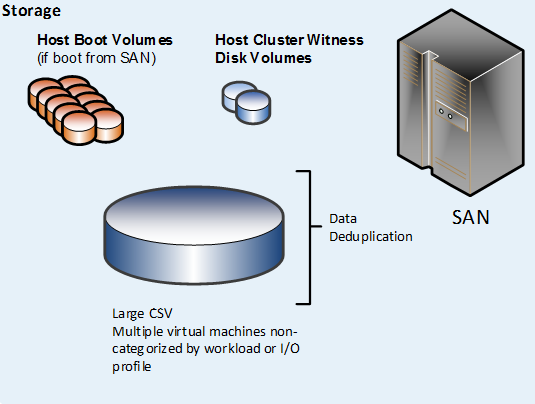

Single CSV per Cluster

In the “single CSV per cluster” design pattern, the SAN is configured to present a single large LUN to all the nodes in the host cluster. The LUN is configured as a CSV in failover clustering. All files that belong to the virtual machines that are hosted on the cluster are stored on the CSV. Optionally, data deduplication functionality that is provided by the SAN can be utilized (if it is supported by the SAN vendor).

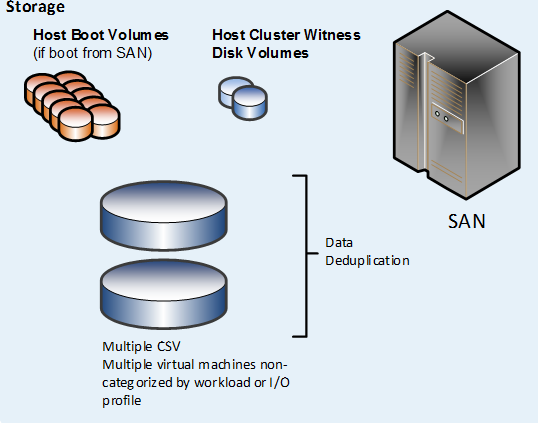

Multiple CSV per Cluster

In the “multiple CSV per cluster” design pattern, the SAN is configured to present two or more large LUNs to all the nodes in the host cluster. The LUNs are configured as CSV in failover clustering. All virtual machine-related files that are hosted on the cluster are stored on the CSV.

In addition, data deduplication functionality that the SAN provides can be utilized (if supported by the SAN vendor).

For the single and multiple CSV patterns, each CSV has the same I/O characteristics, so that each individual virtual machine has all of its associated virtual hard disks (VHDs) stored on one CSV.

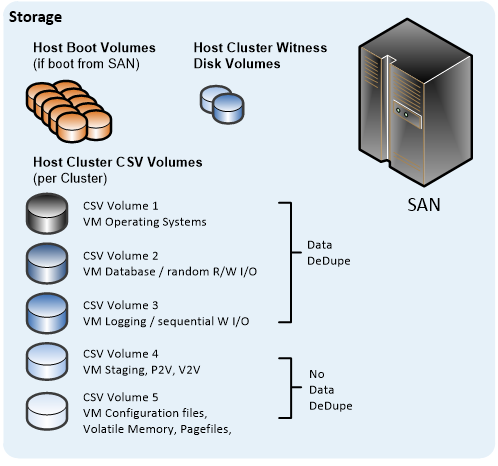

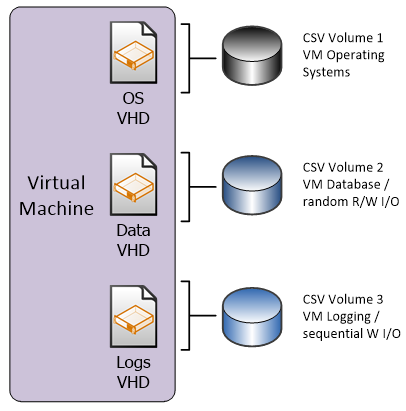

Multiple I/O Optimized CSV per Cluster

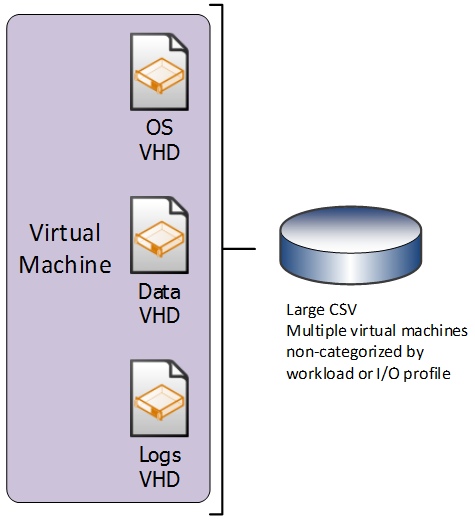

In the “multiple I/O optimized CSV per cluster” design pattern, the SAN is configured to present multiple LUNs to all the nodes in the host cluster; however, the LUNs are optimized for particular I/O patterns like fast sequential Read performance, or fast random Write performance. The LUNs are configured as CSV in failover clustering. All VHDs that belong to the virtual machines that are hosted on the cluster are stored on the CSV, but they are targeted to appropriate CSV for the given I/O needs.

In the “multiple I/O optimized CSV per cluster” design pattern, each individual virtual machine has each of its associated VHDs stored on the CSV that matches the I/O requirements of that VHD.

Note:

A single virtual machine can have multiple VHDs, and each VHD can be stored on a different CSV (provided that all CSV are available to the host cluster on which the virtual machine is created).
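The following Python sketch illustrates how the VHDs of one virtual machine could be targeted to different CSVs by I/O profile. The profile names, the volume folder names under C:\ClusterStorage, and the `place_vhds` function are assumptions made for illustration; they are not defined by this document or by failover clustering.

```python
# Hypothetical mapping of an I/O profile to the CSV that is optimized for it.
CSV_BY_PROFILE = {
    "sequential-read": r"C:\ClusterStorage\Volume1",
    "random-write": r"C:\ClusterStorage\Volume2",
}


def place_vhds(vm_name, vhds):
    """Return the full path for each VHD on the CSV that matches its profile.

    vhds: dict that maps a VHD file name to its I/O profile.
    """
    return {
        vhd: f"{CSV_BY_PROFILE[profile]}\\{vm_name}\\{vhd}"
        for vhd, profile in vhds.items()
    }


placement = place_vhds(
    "vm01",
    {"os.vhdx": "sequential-read", "logs.vhdx": "random-write"},
)
```

In this example, the two VHDs of the same virtual machine land on different CSVs, which is valid as long as both CSVs are available to the host cluster.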

BitLocker-Encrypted Cluster Volumes

Hyper-V, failover clustering, and BitLocker work together to create a secure platform for a private cloud infrastructure. Cluster disks that are encrypted with BitLocker Drive Encryption in Windows Server 2012 R2 enable better physical security for deployments outside secure data centers, which is a critical safeguard for private cloud infrastructure, and help protect against data leaks.

2.1.3 Hyper-V Failover Clustering

A Hyper-V host failover cluster is a group of independent servers that work together to increase the availability of applications and services. The clustered servers are connected by physical cables and software. If one of the cluster nodes fails, another node begins to provide service—a process that is known as failover. In the case of a planned live migration, users will experience no perceptible service interruption.

2.1.3.1 Host Failover-Cluster Topology

We recommend that the server topology consist of at least two Hyper-V host clusters. The first needs at least two nodes, and it is referred to as the fabric management cluster. The second, plus any additional clusters, is referred to as fabric host clusters.

In scenarios of smaller scale or specialized solutions, the management and fabric clusters can be consolidated in the fabric host cluster. Special care has to be taken to provide resource availability for the virtual machines that host the various parts of the management stack.

Each host cluster can contain up to 64 nodes. Host clusters require some form of shared storage such as Storage Spaces, a Scale-Out File Server cluster, a Fibre Channel SAN, or an iSCSI SAN.

2.1.3.2 Cluster Quorum and Witness Configurations

In cluster quorum configurations, every cluster node has one vote, and a witness (disk or file share) also has one vote. A witness is recommended when the number of voting nodes is even, but it is not required when the number of voting nodes is odd. We always recommend that you keep an odd total number of votes in a cluster. Therefore, a cluster witness should be configured to support cluster configurations in Hyper-V when the number of failover cluster nodes is even.
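The vote arithmetic behind this recommendation can be sketched in Python as follows. The function names are hypothetical, and the sketch assumes the usual rule that quorum requires a strict majority of all configured votes.

```python
def total_votes(voting_nodes, has_witness):
    """Each node has one vote; a disk or file share witness adds one more."""
    return voting_nodes + (1 if has_witness else 0)


def witness_recommended(voting_nodes):
    """A witness is recommended when the number of voting nodes is even."""
    return voting_nodes % 2 == 0


def has_quorum(votes_present, votes_total):
    """Quorum requires a strict majority of all votes."""
    return votes_present > votes_total // 2


# A four-node cluster that splits into two halves of two nodes each.
split_without_witness = has_quorum(2, total_votes(4, has_witness=False))
# The same split, where one half can also reach the witness (2 nodes + 1).
split_with_witness = has_quorum(3, total_votes(4, has_witness=True))
```

Without a witness, neither half of an even split holds a majority. With a witness, the half that reaches the witness holds three of five votes and keeps running.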

Choices for a cluster witness include:

- Shared disk witness

- File-share witness

Disk Witness Quorum Model

This model consists of a dedicated LUN that serves as the quorum disk. A disk witness stores a copy of the cluster database for all nodes to share. We recommend that this disk consist of a small partition that is at least 512 MB in size; however, it is commonly recommended to reserve a 1 GB disk for each cluster. This LUN can be NTFS- or ReFS-formatted, and it does not require the assignment of a drive letter.

File-Share Witness Quorum Model

This model uses a unique file share that is located on a file server to support one or more clusters. We highly recommend that this file share exist outside any of the cluster nodes, and therefore, this carries the requirement of additional physical or virtual servers outside the Hyper-V compute cluster within the fabric. Writing to this file share results in minimal network traffic, because all nodes contain separate copies of the cluster database, and only cluster membership changes are written to the file share.

Additional quorum and witness capabilities in Windows Server 2012 R2 include:

- Dynamic witness: By default, Windows Server 2012 R2 clusters are configured to use dynamic quorum, which allows the witness vote to be dynamically adjusted and reduces the risk that the cluster will be impacted by witness failure. By using this configuration, the cluster decides whether to use the witness vote based on the number of voting nodes that are available in the cluster, which simplifies the witness configuration. A Windows Server 2012 R2 cluster can also dynamically adjust a running node’s vote to keep the total number of votes at an odd number, which allows the cluster to continue to run in the event of a 50% node split where neither side would normally have quorum.

- Force quorum resiliency: This allows for the ability to force quorum in the event of a partitioned cluster. A partitioned cluster occurs when a cluster breaks into subsets that are not aware of each other, and the service is restarted by forcing quorum.
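The dynamic vote adjustment can be approximated with the short Python sketch below. It is a simplified model of the behavior described for dynamic witness, not the cluster service logic, and the `dynamic_total_votes` function is a hypothetical name.

```python
def dynamic_total_votes(nodes_online, witness_online=True):
    """Return the total vote count after dynamic adjustment (simplified)."""
    if nodes_online < 1:
        raise ValueError("at least one node must be online")
    if nodes_online % 2 == 1:
        return nodes_online       # already odd: the witness vote is not counted
    if witness_online:
        return nodes_online + 1   # even: the witness vote is counted
    return nodes_online - 1       # even, no witness: one node's vote is removed


# Vote totals as a four-node cluster loses nodes one at a time.
totals = [dynamic_total_votes(n) for n in (4, 3, 2)]
# Vote total for four nodes when the witness is unavailable.
no_witness_total = dynamic_total_votes(4, witness_online=False)
```

In every case the total stays odd, so an even split cannot leave both sides without a majority.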

2.1.3.3 Host Cluster Networks

A variety of host cluster networks are required for a Hyper-V failover cluster. The network requirements enable high availability and high performance. The specific requirements and recommendations for network configuration are published in the TechNet Library in the Hyper-V: Live Migration Network Configuration Guide.

Note:

The following list provides some examples, and it does not contain all network access types. For instance, some implementations would include a dedicated backup network.

| Network access type | Purpose of the network access type | Network traffic requirements | Recommended network access |
| --- | --- | --- | --- |
| Storage | Access storage through SMB, iSCSI, or Fibre Channel. (Fibre Channel does not need a network adapter.) | High bandwidth and low latency. | Usually dedicated and private access. Refer to your storage vendor for guidelines. |
| Virtual machine access | Workloads that run on virtual machines usually require external network connectivity to service client requests. | Varies. | Public access, which could be teamed for link aggregation or to fail over the cluster. |
| Management | Managing the Hyper-V management operating system. This network is used by Hyper-V Manager or System Center Virtual Machine Manager (VMM). | Low bandwidth. | Public access, which could be teamed to fail over the cluster. |
| Cluster and Cluster Shared Volumes (CSV) | Preferred network that is used by the cluster for communications to maintain cluster health. Also used by CSV to send data between owner and non-owner nodes. If storage access is interrupted, this network is used to access the CSV or to maintain and back up the CSV. The cluster should have access to more than one network for communication to help make sure that it is a high availability cluster. | Usually low bandwidth and low latency. Occasionally high bandwidth. | Private access. |
| Live migration | Transfer virtual machine memory and state. | High bandwidth and low latency during migrations. | Private access. |

Management Network

A dedicated management network is required so that hosts can be managed without competing with guest traffic. A dedicated network provides a degree of separation for the purposes of security and ease of management. A dedicated management network typically implies dedicating one network adapter per host and one port per network device to the management network.

Additionally, many server manufacturers provide a separate out-of-band (OOB) management capability that enables remote management of server hardware outside the host operating system.

iSCSI Network

If you are using iSCSI, a dedicated iSCSI network is required so that storage traffic is not in contention with any other traffic. This typically implies dedicating two network adapters for each host, and two ports per network device, to the iSCSI network.

CSV/Cluster Communication Network

Usually, when the cluster node that owns a VHD file in a CSV performs disk I/O, the node communicates directly with the storage. However, storage connectivity failures sometimes prevent a given node from communicating directly with the storage. To maintain functionality until the failure is corrected, the node redirects the disk I/O through a cluster network (the preferred network for CSV) to the node where the disk is currently mounted. This is called CSV redirected I/O mode.
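The routing decision behind CSV redirected I/O can be summarized in a few lines of Python. The `io_route` function and the node names are hypothetical and describe only the choice of path, not how the cluster detects the storage failure.

```python
def io_route(node, mounting_node, has_direct_storage_path):
    """Describe the path that disk I/O takes from a node to a CSV.

    mounting_node: the node where the disk is currently mounted.
    """
    if has_direct_storage_path:
        return f"{node} -> storage"
    # Redirected I/O: send the I/O over the cluster network to the node
    # where the disk is currently mounted.
    return f"{node} -> cluster network -> {mounting_node} -> storage"


normal = io_route("node2", "node1", has_direct_storage_path=True)
redirected = io_route("node2", "node1", has_direct_storage_path=False)
```

The redirected route adds a network hop, which is why the CSV network can occasionally need high bandwidth.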

Live-Migration Network

During live migration, the contents of the memory of the virtual machine that is running on the source node must be transferred to the destination node over a LAN connection. To enable high-speed transfer, a dedicated live-migration network is required.
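To show why link speed matters here, the following Python sketch estimates a lower bound for the memory transfer time. It assumes the full link rate is available and ignores protocol overhead, compression, and memory pages that change during the migration and must be sent again, so real migrations take longer. The `min_transfer_seconds` function and the example values are illustrative.

```python
def min_transfer_seconds(memory_gib, link_gbps):
    """Lower bound on the time to copy VM memory once over the link."""
    bits = memory_gib * 1024**3 * 8     # memory size in bits
    return bits / (link_gbps * 1e9)     # link rate in bits per second


seconds_1gbe = min_transfer_seconds(16, 1)     # about 137 seconds
seconds_10gbe = min_transfer_seconds(16, 10)   # about 14 seconds
```

For a virtual machine with 16 GiB of memory, the move from 1 GbE to 10 GbE reduces this lower bound from more than two minutes to under fifteen seconds.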

Virtual Machine Network(s)

The virtual machine networks are dedicated to virtual machine LAN traffic. A virtual machine network can be two or more 1 GbE networks, one or more networks that have been created through NIC Teaming, or virtual networks that have been created from shared 10 GbE network adapters.

2.1.3.4 Hyper-V Application Monitoring

With Windows Server 2012 R2, Hyper-V and failover clustering work together to bring higher availability to workloads that do not support clustering. They do so by providing a lightweight, simple solution to monitor applications that are running on virtual machines and by integrating with the host. By monitoring services and event logs inside the virtual machine, Hyper-V and failover clustering can detect if the key services that a virtual machine provides are healthy. If necessary, they provide automatic corrective action such as restarting the virtual machine or restarting a service within the virtual machine.

2.1.3.5 Virtual Machine Failover Prioritization

Virtual machine priorities can be configured to control the order in which specific virtual machines fail over or start. This helps make sure that high-priority virtual machines get the resources that they need and that lower-priority virtual machines are given resources as they become available.
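A minimal Python sketch of priority-ordered startup follows. It assumes memory is the only constrained resource and uses illustrative priority labels; the `start_order` function and the virtual machine names are hypothetical.

```python
# Lower number means the virtual machine is started earlier.
PRIORITY_ORDER = {"High": 0, "Medium": 1, "Low": 2}


def start_order(vms, free_memory_gb):
    """Start virtual machines by priority while memory remains.

    vms: list of (name, priority, memory_gb) tuples.
    Returns the names that were started and the names that must wait.
    """
    started, waiting = [], []
    for name, priority, memory_gb in sorted(vms, key=lambda vm: PRIORITY_ORDER[vm[1]]):
        if memory_gb <= free_memory_gb:
            free_memory_gb -= memory_gb
            started.append(name)
        else:
            waiting.append(name)
    return started, waiting


started, waiting = start_order(
    [("web", "Low", 8), ("sql", "High", 16), ("app", "Medium", 8)],
    free_memory_gb=24,
)
```

In this example the surviving node has 24 GB free, so the high-priority and medium-priority virtual machines start and the low-priority one waits until resources become available.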

2.1.3.6 Virtual Machine Anti-Affinity

Administrators can specify that two specific virtual machines cannot coexist on the same node in a failover scenario. With anti-affinity rules in place, workload resiliency guidelines can be respected even when the workloads are hosted on a single failover cluster.
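The placement check implied by anti-affinity can be sketched in Python as shown below. The `pick_node` function, the group names, and the data layout are assumptions for illustration and do not reflect how failover clustering stores or evaluates these settings.

```python
def pick_node(vm_group, nodes, groups_on_node):
    """Return the first node that hosts no virtual machine from vm_group.

    groups_on_node: dict that maps a node name to the set of anti-affinity
    group names already running on that node.
    Returns None if every node already hosts a member of the group.
    """
    for node in nodes:
        if vm_group not in groups_on_node[node]:
            return node
    return None


groups_on_node = {"node1": {"sql"}, "node2": set()}
target_for_sql = pick_node("sql", ["node1", "node2"], groups_on_node)
target_for_web = pick_node("web", ["node1", "node2"], groups_on_node)
```

The second SQL Server virtual machine is placed on node2 because node1 already hosts a member of the same group, while an unrelated virtual machine can use node1.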

2.1.3.7 Virtual Machine Drain on Shutdown

Windows Server 2012 R2 supports shutting down a failover cluster node in Hyper-V without first putting the node into maintenance mode to drain any running clustered roles. The cluster automatically migrates all running virtual machines to another host before the computer shuts down.

2.1.3.8 Shared Virtual Hard Disk

Hyper-V in Windows Server 2012 R2 includes support for virtual machines to leverage shared VHDX files for shared storage scenarios such as guest clustering. Shared and non-shared virtual hard disk files that are attached as virtual SCSI disks appear as virtual SAS disks when you add a SCSI hard disk to a virtual machine.

3 Public Cloud

3.1 Azure IaaS

There are a number of compute-related services in Microsoft Azure, including web and worker roles, cloud services, and HDInsight. The focus of this document is IaaS.

3.1.1 Azure Cloud Service

When you create a virtual machine or application and run it in Microsoft Azure, the virtual machine is placed into a Microsoft Azure cloud service. A cloud service is a container in which you can place virtual machines. All virtual machines within the same cloud service can communicate with one another and they can be accessed from a single public IP address.

By creating a cloud service, you can deploy multiple virtual machines or a multi-tier application in Microsoft Azure, defining multiple roles to distribute processing and allow scaling of applications. An IaaS cloud service can consist of one or more virtual machines.

Microsoft Azure virtual machines must be contained within cloud services. A single Microsoft Azure subscription by default is limited to 20 cloud services, and each cloud service can include up to 50 virtual machines.
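These default limits translate into simple capacity arithmetic, sketched here in Python. The constants come from the limits stated above; the function name and the example virtual machine count are illustrative.

```python
import math

DEFAULT_CLOUD_SERVICES_PER_SUBSCRIPTION = 20
MAX_VMS_PER_CLOUD_SERVICE = 50

# Upper bound on virtual machines in one subscription under the defaults.
max_vms_per_subscription = (
    DEFAULT_CLOUD_SERVICES_PER_SUBSCRIPTION * MAX_VMS_PER_CLOUD_SERVICE
)


def cloud_services_needed(vm_count):
    """Minimum number of cloud services required to hold vm_count VMs."""
    return math.ceil(vm_count / MAX_VMS_PER_CLOUD_SERVICE)


needed = cloud_services_needed(120)
fits_default_limit = needed <= DEFAULT_CLOUD_SERVICES_PER_SUBSCRIPTION
```

A deployment of 120 virtual machines needs at least three cloud services, which is well within the default limit of 20.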

Note:

For a comprehensive list of Subscription Limitations, please see Azure Subscription and Service Limits, Quotas, and Constraints.

3.1.2 Microsoft Azure Virtual Machine

An IaaS virtual machine in Microsoft Azure is a persistent virtual machine in the cloud. After you create a virtual machine in Microsoft Azure, you can delete and re-create it whenever you have to, and you can access the virtual machine just like any other physical or virtualized server. Virtual hard disks (.vhd files) are used to create a virtual machine. You can use the following types of virtual hard disks to create a virtual machine:

- Image—a template that you use to create a new virtual machine. An image does not have specific settings—such as the computer name and user account settings—that a running virtual machine has. If you use an image to create a virtual machine, an operating system disk is created automatically for the new virtual machine.

- Disk—a VHD that you can start and mount as a running version of an operating system. After an image has been provisioned, it becomes a disk. A disk is always created when you use an image to create a virtual machine. Any VHD that is attached to virtualized hardware and running as part of a service is a disk.

You can use the following options to create a virtual machine from an image:

- Create a virtual machine by using a platform image from the Azure Management Portal.

- Create and upload a .vhd file that contains an image to Microsoft Azure, and then use the uploaded image to create a virtual machine.

Microsoft Azure provides specific combinations of central processing unit (CPU) cores and memory for IaaS virtual machines. These combinations are known as virtual machine sizes. When you create a virtual machine, you select a specific size. This size can be changed after deployment. You can find a current list of virtual machine sizes at Virtual Machine and Cloud Service Sizes for Azure.

Note:

Virtual machines begin to incur cost as soon as they are provisioned, regardless of whether they are turned on.

When you create Microsoft Azure virtual machines, we recommend that you use complex passwords and non-default ports for traffic such as Remote Desktop Protocol (RDP). Generic ports and passwords will be guessed, and virtual machines will be compromised.

3.1.3 Virtual Machine Storage

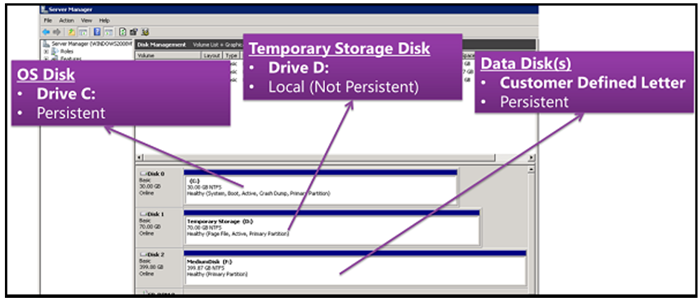

A Microsoft Azure virtual machine is created from an image or a disk. All virtual machines use one operating system disk, a temporary local disk, and possibly multiple data disks. All images and disks, except for the temporary local disk, are created from VHDs, which are .vhd files that are stored as page blobs in a storage account in Azure.

You can use platform images that are available in Azure to create virtual machines, or you can upload your own images to create customized virtual machines. The disks that are created from images are also stored in Azure storage. You can create new virtual machines easily by using existing disks.

3.1.3.1 VHD Files

A .vhd file is stored as a page blob in Azure storage and can be used for creating images, operating system disks, or data disks in Azure. You can upload a .vhd file to Microsoft Azure and manage it just as you would any other page blob. The .vhd files can be copied or moved, and they can be deleted as long as a lease does not exist on the VHD.

Only the fixed format of .vhd files is supported in Azure. Often, the fixed format wastes space because most disks contain large unused ranges. However, in Azure, fixed .vhd files are stored in a sparse format, so that you receive the benefits of both the fixed and dynamic disks at the same time.

3.1.3.2 Images

An image is a .vhd file that you can use as a template to create a new virtual machine. An image is a template because it does not have specific settings—such as the computer name and user account settings—that a configured virtual machine does. You can use images from the Image Gallery to create virtual machines, or you can create your own images.

The Azure Management Portal enables you to choose from several platform images to create a virtual machine. These images contain several versions of the Windows Server operating system and several distributions of the Linux operating system. A platform image can also contain applications, such as SQL Server.

Note:

To create a Windows Server image, you must run the Sysprep command on your development server to generalize and shut it down before you can upload the .vhd file that contains the operating system.

For more information on Azure Images, please see About Virtual Machine Images in Azure.

3.1.3.3 Disks

Disks are used in different ways with a virtual machine in Azure. An operating system disk is a VHD that you use to provide an operating system for a virtual machine. A data disk is a VHD that you attach to a virtual machine to store application data. You can create and delete disks whenever you have to.

There are a number of methods available to create disks, depending on the needs of your application.

For example, a typical way to create an operating system disk is to use an image from the Image Gallery when you create a virtual machine, and an operating system disk is created for you. You can create a data disk by attaching an empty disk to a virtual machine, and a new data disk is created for you. You can also create a disk by using a VHD file that has been uploaded or copied to a storage account in your subscription.

Note:

You cannot use the portal to upload VHD files, but you can use other tools that work with Azure storage, including the Azure PowerShell cmdlets, to upload or copy the file.

There are three types of disks associated with Azure virtual machines:

- Operating system disks

- Data disks

- Temporary disks

The following sections discuss each type of disk.

3.1.3.4 Operating System Disk

Every virtual machine has one operating system disk. You can upload a VHD that can be used as an operating system disk, or you can create a virtual machine from an image, and a disk is created for you. An operating system disk is a VHD that you can start and mount as a running version of an operating system. Any VHD that is attached to virtualized hardware and running as part of a service is an operating system disk.

The maximum size of an operating system disk is 127 GB. When an operating system disk is created in Windows Azure, three copies of the disk are created for high durability. Additionally, if you choose to use disaster recovery that is geo-replication–based, your VHD is also replicated to a location more than 400 miles away.

3.1.3.5 Data Disk

A data disk is a VHD that can be attached to a running virtual machine to store application data persistently. You can upload and attach to the virtual machine a data disk that already contains data, or you can use the Windows Azure Management Portal to attach an empty disk to the virtual machine. The maximum size of a data disk is 1 TB; you are limited in the number of disks that you can attach to a virtual machine, based on the size of the virtual machine.

If multiple data disks are attached to a virtual machine, striping inside the virtual machine operating system can be utilized to create a single volume on the multiple attached disks (a volume of up to 16 TB that consists of a stripe of 16 1-TB data disks).

Note:

Use of operating system striping is not possible with geo-redundant data disks.
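As a rough illustration of the striping arithmetic above, the following Python sketch computes the size of a striped volume from the limits quoted in this section. The function name and the `max_disks_for_vm_size` parameter are hypothetical; the real disk-count limit depends on the virtual machine size, and the sketch assumes a simple stripe whose capacity is bounded by its smallest member.

```python
# Size limits quoted in this section (October 2014 feature set).
MAX_OS_DISK_GB = 127
MAX_DATA_DISK_GB = 1024  # 1 TB


def striped_volume_gb(disk_sizes_gb, max_disks_for_vm_size):
    """Return the size in GB of a volume striped across the given data disks.

    max_disks_for_vm_size is a caller-supplied assumption; the actual limit
    depends on the size of the virtual machine.
    """
    if not disk_sizes_gb:
        return 0
    if len(disk_sizes_gb) > max_disks_for_vm_size:
        raise ValueError("too many data disks for this virtual machine size")
    if any(size > MAX_DATA_DISK_GB for size in disk_sizes_gb):
        raise ValueError("a data disk exceeds the 1 TB limit")
    # Assumption: a simple stripe uses equal space on every member disk,
    # so the usable size is bounded by the smallest disk.
    return min(disk_sizes_gb) * len(disk_sizes_gb)


# Sixteen 1-TB data disks give the 16 TB volume mentioned above.
print(striped_volume_gb([1024] * 16, max_disks_for_vm_size=16))
```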

3.1.3.6 Temporary Local Disk

Each virtual machine that you create has a temporary local disk, which is labeled as drive D. This disk exists only on the physical host server on which the virtual machine is running; it is not stored in blobs on Windows Azure storage. This disk is used by applications and processes that are running in the virtual machine for transient and temporary storage of data. It is also used to store page files for the operating system. Note that data on this disk will not survive a host-machine failure or any other operation that requires moving the virtual machine to another piece of hardware.

The letter D is used for this drive by default. You can change the letter used by the temporary drive if you need to do so.

If the virtual machine is resized or moved to new hardware for service healing, the drive naming will stay in place. The data disk will stay at drive D, and the resource disk will always be the first available drive letter (which would be E, in this example).

Note:

For more information on operating system disks, temporary storage disks and data disks, please see About Virtual Machine Disks in Azure.

3.1.3.7 Host Caching

The operating system disk and data disks have a host-caching setting (sometimes called host-cache mode) that enables improved performance under some circumstances. However, these settings can have a negative effect on performance in other circumstances, depending on the application. By default, host caching is OFF for both read operations and write operations for data disks. Host caching is ON by default for read and write operations for operating system disks.

3.1.3.8 RDP and Remote Windows PowerShell

New virtual machines that are created through the Windows Azure Management portal will have both RDP and Remote Windows PowerShell available.

3.1.4 Virtual Machine Placement and Affinity Groups

Affinity groups let you group the services in your Azure subscription that must work together to achieve optimal performance. When you create an affinity group, it lets Azure know to keep all of the services that belong to your affinity group running in the same data-center cluster.

For example, if you wanted to keep different virtual machines close together (within the same data center), you would specify the same affinity group for those virtual machines and associated storage. That way, when you deploy those virtual machines, Azure will locate them in a data center as close to each other as possible. This reduces latency and increases performance, while potentially lowering costs.

Affinity groups are defined at the subscription level, and the name of each affinity group has to be unique within the subscription. When you create a new resource, you can either use an affinity group that you previously created or create a new one.

Recommended:

For related virtual machines (such as two tiers within an application), utilize affinity groups to ensure that they are placed in the same data center or cluster in Windows Azure.

3.1.5 Endpoints and ACLs

Endpoints

When you create a virtual machine, it is fully accessible from any of your other virtual machines that are within the Windows Azure virtual network to which it is connected. All protocols—such as TCP, UDP, and Internet Control Message Protocol (ICMP)—are supported within the local virtual network. Virtual machines on your virtual network are automatically given an internal IP address from a private range (RFC 1918) that you defined when you created the network.

To provide access to your virtual machines from outside of your virtual network, you will have to use the external IP address and configure public endpoints. These endpoints are similar to firewall and port forwarding rules and can be configured in the Windows Azure portal.

By default, when virtual machines are created by using the Azure Management Portal, inbound access for both RDP and Remote Windows PowerShell is enabled. These endpoints use random public ports, which are redirected to the correct ports on the virtual machines. You can remove these preconfigured endpoints. However, if you do so, you will need VPN access to connect to the virtual machines.

Security Alert:

Use the principle of least-privilege for virtual machine access. Verify that the default endpoints such as RDP and remote PowerShell are required, and enable new endpoints only for required application or workload functionality.

Network ACLs

A Network Access Control List (ACL) is a security enhancement available for your Azure deployment. An ACL provides the ability to selectively permit or deny traffic for a virtual machine endpoint. This packet filtering capability provides an additional layer of security. You can specify network ACLs for virtual machine endpoints only. You cannot specify an ACL for a virtual network or a specific subnet contained in a virtual network.

Using Network ACLs, you can do the following:

- Permit or deny incoming traffic based on remote subnet IPv4 address range to a virtual machine input endpoint.

- Blacklist IP addresses

- Create multiple rules per virtual machine endpoint

- Specify up to 50 ACL rules per virtual machine endpoint

- Use rule ordering to ensure that the correct set of rules is applied on a given virtual machine endpoint (lowest to highest)

- Specify an ACL for a specific remote subnet IPv4 address.

When you create an ACL and apply it to a virtual machine endpoint, packet filtering takes place on the host node of your VM. This means the traffic from remote IP addresses is filtered by the host node for matching ACL rules instead of on your VM. This prevents your VM from spending CPU cycles on packet filtering.

When a virtual machine is created, a default ACL is put in place to block all incoming traffic except for RDP and Remote PowerShell management traffic. All other ports are blocked for inbound traffic unless endpoints are created for those ports. Outbound traffic is allowed by default.

Points to consider:

- No ACL – By default, when an endpoint is created, all traffic to the endpoint is permitted.

- Permit - When you add one or more “permit” ranges, you deny all other ranges by default. Only packets from the permitted IP range will be able to communicate with the virtual machine endpoint.

- Deny - When you add one or more “deny” ranges, you are permitting all other ranges of traffic by default.

- Combination of Permit and Deny - You can use a combination of “permit” and “deny” when you want to carve out a specific IP range to be permitted or denied.

Network ACLs can be set up on specific virtual machine endpoints. For example, you can specify a network ACL for an RDP endpoint created on a virtual machine, which locks down access to certain IP addresses. The table below shows a way to grant RDP access to public virtual IPs (VIPs) in a certain range. All other remote IPs are denied. Rules are evaluated in order, and the rule with the lowest order number takes precedence.

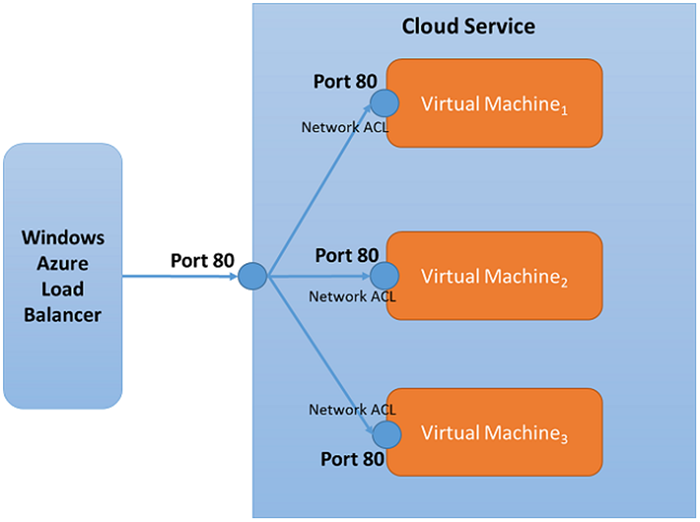

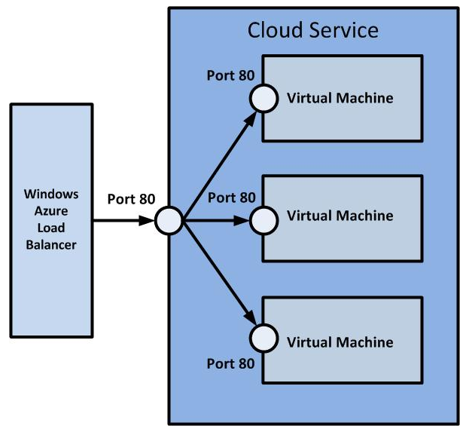
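The permit/deny defaults and the lowest-takes-precedence ordering described above can be sketched in Python as follows. This is a minimal model for illustration, not the Azure implementation: the `AclRule` type and `is_allowed` function are hypothetical names, the addresses come from documentation-reserved ranges, and the default for a mixed permit-and-deny list when no rule matches is an assumption (the sketch denies).

```python
import ipaddress
from dataclasses import dataclass

MAX_RULES_PER_ENDPOINT = 50  # limit quoted in this section


@dataclass(frozen=True)
class AclRule:
    order: int          # lower numbers are evaluated first
    remote_subnet: str  # remote IPv4 range in CIDR notation
    action: str         # "permit" or "deny"


def is_allowed(rules, source_ip):
    """Decide whether traffic from source_ip may reach the endpoint."""
    if len(rules) > MAX_RULES_PER_ENDPOINT:
        raise ValueError("too many ACL rules for one endpoint")
    if not rules:
        return True  # no ACL: all traffic to the endpoint is permitted
    address = ipaddress.ip_address(source_ip)
    # The first matching rule, in ascending order number, wins.
    for rule in sorted(rules, key=lambda r: r.order):
        if address in ipaddress.ip_network(rule.remote_subnet):
            return rule.action == "permit"
    # No rule matched. Permit rules imply "deny everything else";
    # a deny-only list implies "permit everything else".
    # Assumption: a mixed list falls back to deny.
    return not any(rule.action == "permit" for rule in rules)


rdp_acl = [AclRule(order=100, remote_subnet="203.0.113.0/24", action="permit")]
print(is_allowed(rdp_acl, "203.0.113.7"))   # inside the permitted range
print(is_allowed(rdp_acl, "198.51.100.1"))  # outside the permitted range
```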

Network ACLs can be specified on a Load balanced set (LB Set) endpoint. If an ACL is specified for a LB Set, the Network ACL is applied to all Virtual Machines in that LB Set. For example, if a LB Set is created with “Port 80” and the LB Set contains 3 VMs, the Network ACL created on endpoint “Port 80” of one VM will automatically apply to the other VMs.

3.1.6 Virtual Machine High Availability (HA)

Availability sets are used to increase the availability of applications running on Azure virtual machines. Availability sets are directly related to fault domains and update domains.

A fault domain in Windows Azure is defined to avoid single points of failure, such as the network switch or power unit of a rack of servers. A fault domain is roughly equivalent to a rack of physical servers. When multiple virtual machines are connected together in a cloud service, an availability set can be used to ensure that the virtual machines are located in different fault domains.

The following diagram shows two availability sets, each of which contains two virtual machines.

Windows Azure periodically updates the operating system that hosts the instances of an application. A virtual machine is shut down when an update is applied. An update domain is used to ensure that not all of the virtual machine instances are updated at the same time. When you assign multiple virtual machines to an availability set, Windows Azure ensures that the virtual machines are assigned to different update domains.
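To make the idea concrete, the following Python sketch spreads the virtual machines of one availability set across fault domains and update domains in round-robin fashion. It is only an illustration: the function names are hypothetical, the domain counts are caller-supplied assumptions, and the actual placement algorithm used by Azure is not described in this document.

```python
def assign_domains(vm_names, fault_domain_count, update_domain_count):
    """Spread virtual machines across fault and update domains (round-robin)."""
    placement = {}
    for index, name in enumerate(vm_names):
        placement[name] = {
            "fault_domain": index % fault_domain_count,
            "update_domain": index % update_domain_count,
        }
    return placement


def survivors_during_update(placement, update_domain):
    """Return the virtual machines that keep running while one update domain is serviced."""
    return [
        name
        for name, domains in placement.items()
        if domains["update_domain"] != update_domain
    ]


# Hypothetical domain counts, chosen only for the example.
web_tier = assign_domains(["web1", "web2"], fault_domain_count=2, update_domain_count=5)
print(web_tier)
print(survivors_during_update(web_tier, update_domain=0))
```

With two or more virtual machines in the set, at least one instance remains outside any single update domain or fault domain, which is the property the availability set is meant to provide.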

Note:

Azure does not support live migration or movement of running virtual machines as part of an HA strategy. We recommend that you create multiple virtual machines per application or role, and Azure components such as availability sets and load balancing will provide HA for the application. If an application cannot use multiple roles or instances to support HA (meaning that a single virtual machine that is running the application must be online at all times, typical of stateful applications), Azure will not be able to support that requirement.

You should use a combination of availability sets and load-balanced endpoints (discussed in subsequent sections) to help ensure that your application is always available and running efficiently.

HA Alert:

To qualify for the Azure virtual machine SLA guarantee of 99.95%, two or more virtual machines in the same availability set are required.
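As a quick worked example of what a 99.95% figure means in practice, the Python sketch below converts an availability percentage into a downtime budget. The 30-day measurement period is an assumption made for the arithmetic only; consult the SLA itself for how availability is actually measured.

```python
def allowed_downtime_minutes(sla_percent, days):
    """Return the downtime budget, in minutes, for a given availability percentage."""
    total_minutes = days * 24 * 60
    return total_minutes * (100 - sla_percent) / 100


# Assumption: a 30-day period. 0.05% of 43,200 minutes is about 21.6 minutes.
print(round(allowed_downtime_minutes(99.95, days=30), 1))
```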

For more information on Windows Azure host updates and how they affect virtual machines and services, please see Microsoft Azure Host Updates.

3.1.7 External Load Balancing

External communication with virtual machines can occur through Azure endpoints. These endpoints are used for different purposes, such as load-balanced traffic or direct virtual machine connectivity like RDP or SSH. You define endpoints that are associated with specific ports and are assigned a specific communication protocol.

An endpoint can be assigned a protocol of TCP or UDP (the TCP protocol includes HTTP and HTTPS traffic). Each endpoint that is defined for a virtual machine is assigned a:

- Public port – the public port is used by external clients to connect to the virtual machine contained within an Azure cloud service.

- Private port – the private port is one on the virtual machine that is listening for the inbound connection.

The incoming connections from external clients are made to the public port and then the connection is redirected and forwarded to the private port on the virtual machine.
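The public-to-private port redirection can be modeled with a small lookup, as in the Python sketch below. The `Endpoint` type, the `forward` function, and the public port number 50123 are hypothetical and used only for illustration; 3389 is the standard RDP port.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    name: str
    protocol: str      # "tcp" or "udp"
    public_port: int   # port that external clients connect to
    private_port: int  # port the virtual machine is listening on


def forward(endpoints, protocol, public_port):
    """Return the private port for an incoming connection, or None if no endpoint matches."""
    for endpoint in endpoints:
        if endpoint.protocol == protocol and endpoint.public_port == public_port:
            return endpoint.private_port
    return None  # no endpoint defined: the connection is not forwarded


endpoints = [
    Endpoint("RDP", "tcp", public_port=50123, private_port=3389),
    Endpoint("HTTP", "tcp", public_port=80, private_port=80),
]
print(forward(endpoints, "tcp", 50123))
print(forward(endpoints, "udp", 80))
```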

Azure provides round-robin load balancing of network traffic to publicly defined ports of a cloud service. You enable this load balancing by setting the number of instances that are running in the service to greater than or equal to two. For virtual machines, you can set up load balancing by creating new virtual machines, placing them inside the same cloud service, and adding load-balanced endpoints to the virtual machines.

A load-balanced endpoint is a specific TCP or UDP endpoint that is used by all virtual machines that are contained in a cloud service. The following image shows a load-balanced endpoint that is shared among three virtual machines and uses a public and private port of 80:

A virtual machine must be in a healthy state to receive network traffic. You can define your own method for determining the health of the virtual machine by adding a load-balancing probe to the load-balanced endpoint. Windows Azure probes for a response from the virtual machine every 15 seconds and takes a virtual machine out of the rotation if no response has been received after two probes.
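The interaction between round-robin distribution and health probes can be sketched in Python as follows. This is a simplified model rather than the Azure load balancer: the class and method names are hypothetical, probes are recorded by the caller instead of being sent on a timer, and the sketch assumes that a single successful probe returns a virtual machine to the rotation.

```python
PROBE_INTERVAL_SECONDS = 15       # probe frequency quoted above
FAILED_PROBES_BEFORE_REMOVAL = 2  # missed probes before removal from rotation


class LoadBalancedEndpoint:
    """Toy model of a load-balanced endpoint shared by several virtual machines."""

    def __init__(self, vm_names):
        self._vms = list(vm_names)
        self._missed = {name: 0 for name in self._vms}
        self._next = 0

    def record_probe(self, vm_name, responded):
        """Record one probe result for a virtual machine."""
        # Assumption: any successful probe resets the missed-probe counter.
        self._missed[vm_name] = 0 if responded else self._missed[vm_name] + 1

    def healthy_vms(self):
        return [
            name
            for name in self._vms
            if self._missed[name] < FAILED_PROBES_BEFORE_REMOVAL
        ]

    def pick(self):
        """Return the next healthy virtual machine in round-robin order."""
        healthy = self.healthy_vms()
        if not healthy:
            raise RuntimeError("no healthy virtual machines in rotation")
        vm = healthy[self._next % len(healthy)]
        self._next += 1
        return vm


lb = LoadBalancedEndpoint(["vm1", "vm2", "vm3"])
print([lb.pick() for _ in range(3)])
lb.record_probe("vm2", responded=False)
lb.record_probe("vm2", responded=False)  # second missed probe removes vm2
print(lb.healthy_vms())
```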

Note:

Load balancing is required for any workload or service that must remain online through host or virtual machine maintenance.

3.1.8 Internal Load Balancing

Azure Internal Load Balancing (ILB) provides load balancing between virtual machines that reside inside of a cloud service or a virtual network with a regional scope. For information about the use and configuration of virtual networks with a regional scope, see Regional Virtual Networks in the Azure blog. Existing virtual networks that have been configured for an affinity group cannot use ILB.

ILB enables the following new types of load balancing:

- Within a cloud service, from virtual machines to a set of virtual machines that reside within the same cloud service.

- Within a virtual network, from virtual machines in the virtual network to a set of virtual machines that reside within the same cloud service of the virtual network.

- For a cross-premises virtual network, from on-premises computers to a set of virtual machines that reside within the same cloud service of the virtual network.

In all cases, the set of virtual machines receiving the incoming, load-balanced traffic—known as the internal load-balanced set—must reside within the same cloud service. You cannot create an internal load-balanced set that contains virtual machines from more than one cloud service.

The existing Azure load balancing only provides load balancing between Internet-based computers and virtual machines in a cloud service. ILB enables new capabilities for hosting virtual machines in Azure.

For additional information, see Internal Load Balancing.

3.1.9 Limitations of Azure IaaS Virtual Machines

While Windows Azure IaaS virtual machines are full running instances of Windows or Linux from an operating system perspective, in some cases—because they are virtual machines or are running on a cloud infrastructure—some operating system features and capabilities might not be supported.

The following table provides examples of Windows operating system features that are not supported in Windows Azure virtual machines:

| OS Roles/Features Not Supported in Azure IaaS VMs | Explanation |
| --- | --- |
| Hyper-V | It is not supported to run Hyper-V within a virtual machine that is already running on Hyper-V. |
| Dynamic Host Configuration Protocol (DHCP) | Windows Azure virtual machines do not support broadcast traffic to other virtual machines. |
| Failover clustering | Azure does not handle clustering’s “virtual” or floating IP addressing for network resources. |
| BitLocker on operating system disk | Azure does not support Trusted Platform Module (TPM). |
| Client operating systems | Azure licensing does not support client operating systems. |
| Virtual Desktop Infrastructure (VDI) using RDS | Azure licensing does not support the running of VDI virtual machines through RDS. |

Additionally, over time, more Microsoft applications are being tested and supported for deployment in Windows Azure virtual machines. For more information on Microsoft software that is supported in Windows Azure virtual machines, please see Microsoft Software Supported in Windows Azure Virtual Machines.

Application Alert:

Verify that any Microsoft or 3rd-party software that you intend to deploy on Azure is supported in Azure Infrastructure Services.

4 Hybrid Cloud

4.1 Hybrid Cloud Design

Hybrid cloud compute relates to how on-premises and cloud-based compute instances interrelate. This is a very broad subject and exceeds the scope of this document. For comprehensive coverage of hybrid compute foundations and the attendant design considerations, please see Hybrid Cloud Design Considerations.