Microsoft Infrastructure as a Service Storage Foundations

This document discusses the storage infrastructure components that are relevant for a Microsoft IaaS infrastructure and provides guidelines and requirements for building an IaaS storage infrastructure using Microsoft products and technologies.

Table of Contents (for this article)

2.2 Storage Controller Architectures

2.6 Storage Management and Automation

3.1 Public Cloud Storage Types and Accounts

3.3 Storage Scalability and Performance

This document is part of the Microsoft Infrastructure as a Service Foundations series. The series includes the following documents:

Chapter 1: Microsoft Infrastructure as a Service Foundations

Chapter 2: Microsoft Infrastructure as a Service Compute Foundations

Chapter 3: Microsoft Infrastructure as a Service Network Foundations

Chapter 4: Microsoft Infrastructure as a Service Storage Foundations (this article)

Chapter 5: Microsoft Infrastructure as a Service Virtualization Platform Foundations

Chapter 6: Microsoft Infrastructure as a Service Design Patterns–Overview

Chapter 7: Microsoft Infrastructure as a Service Foundations–Converged Architecture Pattern

Chapter 8: Microsoft Infrastructure as a Service Foundations-Software Defined Architecture Pattern

Chapter 9: Microsoft Infrastructure as a Service Foundations-Multi-Tenant Designs

For more information about the Microsoft Infrastructure as a Service Foundations series, please see Chapter 1: Microsoft Infrastructure as a Service Foundations.

Contributors:

Adam Fazio – Microsoft

David Ziembicki – Microsoft

Joel Yoker – Microsoft

Artem Pronichkin – Microsoft

Jeff Baker – Microsoft

Michael Lubanski – Microsoft

Robert Larson – Microsoft

Steve Chadly – Microsoft

Alex Lee – Microsoft

Carlos Mayol Berral – Microsoft

Ricardo Machado – Microsoft

Sacha Narinx – Microsoft

Tom Shinder – Microsoft

Applies to:

Windows Server 2012 R2

System Center 2012 R2

Windows Azure Pack – October 2014 feature set

Microsoft Azure – October 2014 feature set

Document Version:

1.0

1 Introduction

The goal of the Infrastructure-as-a-Service (IaaS) Foundations series is to help enterprise IT and cloud service providers understand, develop, and implement IaaS infrastructures. This series provides comprehensive conceptual background, a reference architecture and a reference implementation that combines Microsoft software, consolidated guidance, and validated configurations with partner technologies such as compute, network, and storage architectures, in addition to value-added software features.

The IaaS Foundations series utilizes the core capabilities of the Windows Server operating system, Hyper-V, System Center, Windows Azure Pack, and Microsoft Azure to deliver on-premises and hybrid cloud infrastructure-as-a-service offerings.

As part of the Microsoft IaaS Foundations series, this document discusses the storage infrastructure components that are relevant for a Microsoft IaaS infrastructure and provides guidelines and requirements for building a storage infrastructure using Microsoft products and technologies. These components can be used to compose an IaaS solution based on private clouds, public clouds (for example, in a hosting service provider environment), or hybrid clouds. Each major section of this document will include sub-sections on private, public, and hybrid infrastructure elements. Discussions of public cloud components are scoped to Microsoft Azure services and capabilities.

2.0 On-Premises

The following sections discuss storage options and capabilities that can be included in an on-premises IaaS design. Note that these on-premises options are also pertinent to cloud service providers interested in delivering a commercial IaaS offering.

2.1 Drive Architectures

The type of hard drives in the host server, or in a storage array that is used by the file servers, has a significant impact on the overall performance of the storage architecture. The critical performance factors for hard drives are:

- The interface architecture (for example, SAS or SATA)

- The rotational speed of the drive (for example, 10K or 15K RPM), or the use of a solid-state drive (SSD), which has no moving parts

- The Read and Write speed

- The average latency in milliseconds (ms)

Additional factors that might be important to specific cloud storage instantiations that focus on performance include:

- Drive cache

- Native Command Queuing (NCQ)

- TRIM (SATA only)

- Tagged Command Queuing

- UNMAP (SAS and Fibre Channel)

As with storage connectivity, high I/O operations per second (IOPS) and low latency are more critical than maximum sustained throughput when it comes to sizing and guest performance on the server running Hyper-V. This translates into selecting drives that have the highest rotational speed and lowest latency possible, and deciding when to use SSDs for extreme performance.
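The link between rotational speed and latency can be made concrete. On average, a spinning drive must wait half a revolution before the target sector passes under the head, so average rotational latency is 30,000 / RPM milliseconds: about 4.2 ms at 7,200 RPM, 3 ms at 10K RPM, and 2 ms at 15K RPM, which lines up with the latency figures quoted for SATA and SAS drives in the following sections. The minimal Python sketch below adds an average seek time to estimate small random IOPS per drive. The seek times are hypothetical placeholders rather than vendor data, and transfer time is ignored, so treat the IOPS numbers as rough illustrations only.

```python
# Rough rotational-latency and random-IOPS estimates for spinning drives.
# ASSUMPTION: the seek times in DRIVES are illustrative placeholders, not
# vendor specifications; substitute datasheet values for real sizing.


def avg_rotational_latency_ms(rpm: int) -> float:
    """On average the platter must turn half a revolution to reach the sector."""
    ms_per_revolution = 60_000 / rpm
    return ms_per_revolution / 2


def estimated_random_iops(rpm: int, avg_seek_ms: float) -> float:
    """Approximate small random I/Os per second: one seek plus half a rotation per I/O.

    Transfer time is ignored, which is only reasonable for small blocks.
    """
    return 1000 / (avg_seek_ms + avg_rotational_latency_ms(rpm))


# Hypothetical (rpm, average seek in ms) pairs chosen for illustration only.
DRIVES = {
    "7.2K SATA/NL-SAS": (7_200, 8.5),
    "10K SAS": (10_000, 4.5),
    "15K SAS": (15_000, 3.5),
}

if __name__ == "__main__":
    for name, (rpm, seek_ms) in DRIVES.items():
        print(f"{name}: rotational latency {avg_rotational_latency_ms(rpm):.2f} ms, "
              f"~{estimated_random_iops(rpm, seek_ms):.0f} random IOPS")
```

Because seek and rotational delays dominate each small random I/O, a faster spindle raises IOPS mainly by shrinking latency, which is why the text above favors rotational speed and low latency over raw throughput.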

2.1.1 Serial ATA (SATA)

Serial ATA (SATA) drives are a low-cost and relatively high-performance option for storage. SATA drives are available primarily in the 3 Gbps (SATA II) and 6 Gbps (SATA III) standards with a rotational speed of 7,200 RPM and an average latency of around four milliseconds.
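Both SATA III and 6 Gbps SAS use 8b/10b line encoding, which means only 8 of every 10 transmitted bits carry data. This puts the interface ratings in perspective. The short Python calculation below converts a link's line rate into usable payload bandwidth, with MB meaning 10^6 bytes. The function name is illustrative only.

```python
def usable_mb_per_s(line_rate_gbps: float) -> float:
    """Usable payload bandwidth in MB/s for a link that uses 8b/10b encoding."""
    megabits_per_s = line_rate_gbps * 1000       # raw line rate in Mbit/s
    data_megabits_per_s = megabits_per_s * 8 / 10  # 8b/10b: 8 data bits per 10 line bits
    return data_megabits_per_s / 8               # 8 bits per byte


if __name__ == "__main__":
    for rate in (3, 6):
        print(f"{rate} Gbps link -> {usable_mb_per_s(rate):.0f} MB/s usable")
```

A 6 Gbps link therefore carries about 600 MB/s, which is well above what a single spinning drive sustains. This helps explain why latency and IOPS, rather than interface bandwidth, usually dominate drive selection.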

Typically, SATA drives are not designed to enterprise-level standards of reliability, although new technologies in Windows Server 2012 R2 such as the Resilient File System (ReFS) can help make SATA drives a viable option for single server scenarios. However, SAS disks are required for all cluster and high availability scenarios that use Windows Server Storage Spaces.

2.1.2 SAS

Serial attached SCSI (SAS) drives are typically more expensive than SATA drives, but they can provide higher throughput and, more importantly, lower latency. SAS drives typically have a rotational speed of 10K or 15K RPM, an average latency of 2 ms to 3 ms, and 6 Gbps interfaces (SAS-2).

There are also SAS Solid State Drives (SSDs). Unlike SATA drives, SAS drives have dual interface ports that are required for using clustered storage spaces. (Details are provided in subsequent sections.) The SCSI Trade Association has a range of information about SAS drives. In addition, you can find several white papers and solutions on the LSI website.

The majority of SAN arrays today use SAS drives, but some higher-end arrays also use Fibre Channel and SATA drives alongside SAS drives. SAS drives are also used in the Software Defined Infrastructure pattern (discussed in the patterns document of this Microsoft IaaS series) in conjunction with a JBOD storage enclosure, which enables the Storage Spaces feature.

Aside from the enclosure requirements that will be outlined later, the following requirements exist for SAS drives when they are used in this configuration:

- Drives must provide port association. Windows depends on drive enclosures to provide SES-3 capabilities such as drive-slot identification and visual drive indications (commonly implemented as drive LEDs). Windows matches a drive in an enclosure with SES-3 identification capabilities through the port address of the drive. The computer hosts can be separate from drive enclosures or integrated into drive enclosures.

- Multiport drives must provide symmetric access. Drives must provide the same performance for data-access commands and the same behavior for persistent reservation commands that arrive on different ports as they provide when those commands arrive on the same port.

- Drives must provide persistent reservations. Windows can use physical disks to form a storage pool. From the storage pool, Windows can define virtual disks, called storage spaces. A failover cluster can create high availability for a pool of physical disks, the storage spaces that they define, and the data that they contain. In addition to the standard Windows Hardware Compatibility Test qualification, physical disks should pass the tests in the Microsoft Cluster Configuration Validation Wizard.

In addition to the drives, the following enclosure requirements exist:

- Drive enclosures must provide drive-identification services. Drive enclosures must provide numerical (for example, a drive bay number) and visual (for example, a failure LED or a drive-of-interest LED) drive-identification services. Enclosures must provide this service through SCSI Enclosure Service (SES-3) commands. Windows depends on the correct behavior of these enclosure services. Windows correlates enclosure services to drives through protocol-specific information and their vital product data page 83h inquiry association type 1.

- Drive enclosures must provide direct access to the drives that they house. Enclosures must not abstract the drives that they house (for example, form into a logical RAID disk). If they are present, integrated switches must provide discovery of and access to all of the drives in the enclosure, without requiring additional physical host connections. If possible, multiple host connections must provide discovery of and access to the same set of drives.

Hardware vendors should pay specific attention to these storage drive and enclosure requirements for SAS configurations when they are used in conjunction with the Storage Spaces feature in Windows Server 2012 R2.

2.1.3 Nearline SAS (NL-SAS)

Nearline SAS (NL-SAS) drives deliver the larger capacity benefits of enterprise SATA drives with a fully capable SAS interface. The physical properties of the drive are identical to those of traditional SATA drives, with a rotational speed of 7,200 RPM and an average latency of around four milliseconds. However, exposing this SATA-class drive mechanism through a SAS interface provides all the enterprise features that come with a SAS drive, including:

- Multiple host support

- Concurrent data channels

- Redundant paths to the disk array (required for clustered storage spaces)

- Enterprise command queuing

This combination gives NL-SAS drives much larger capacities, plus the availability features of the SAS interface, at significantly lower cost than native SAS drives.

It is important to consider that although NL-SAS drives provide greater capacity through the SAS interface, they have the same latency, reliability, and performance limitations as traditional enterprise SATA drives, including higher drive failure rates than native SAS drives.

Because they provide the SAS interface features listed above, NL-SAS drives can be used in cluster and high availability scenarios that use Storage Spaces. However, using SSDs to implement storage tiers is highly recommended to improve storage performance.

2.1.4 Fibre Channel

Fibre Channel is traditionally used in SAN arrays and it provides:

- High speed (same as SAS)

- Low latency

- Enterprise-level reliability

Fibre Channel is usually more expensive than using SATA or SAS drives. Fibre Channel typically has performance characteristics that are similar to those of SAS drives, but it uses a different interface.

The choice of Fibre Channel or SAS drives is usually determined by the choice of storage array or drive tray. In many cases, SSDs can also be used in SAN arrays that use Fibre Channel interfaces. Many arrays also support SATA devices, sometimes with an adapter. Disk and array vendors have largely transitioned to SAS drives.

2.1.5 Solid-State Storage

SATA, SAS, NL-SAS, and Fibre Channel describe the interface type. In contrast, a solid-state drive (SSD) refers to the classification of media type. Solid-state storage has several advantages over traditional spinning media disks, but it comes at a premium cost. The most prevalent type of solid-state storage is a solid-state drive.

Some advantages include:

- Significantly lower latency

- No spin-up time

- Faster transfer rates

- Lower power and cooling requirements

- No fragmentation concerns

Recent years have shown greater adoption of SSDs in enterprise storage markets. These more expensive devices are usually reserved for workloads that have high-performance requirements.

Mixing SSDs with spinning disks in storage arrays is common to minimize cost. These storage arrays often have software algorithms that automatically place the frequently accessed storage blocks on the SSDs and the less frequently accessed blocks on the lower-cost disks (referred to as auto-tiering), although manual segregation of disk pools is also acceptable. NAND Flash Memory is most commonly used in SSDs for enterprise storage.
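The auto-tiering idea described above can be illustrated with a deliberately simple heat-map heuristic. The minimal Python sketch below counts accesses per block over an observation window and assigns the most frequently accessed blocks to the SSD tier until that tier is full. It assumes a hypothetical access-trace format (a sequence of block IDs). It is not the placement algorithm of any particular array or of Storage Spaces, because production tiering engines are more sophisticated.

```python
from collections import Counter
from typing import Iterable


def plan_tiers(accesses: Iterable[int], ssd_capacity_blocks: int) -> tuple[set[int], set[int]]:
    """Return (ssd_blocks, hdd_blocks) for one frequency-based tiering pass.

    accesses: block IDs in the order they were read or written during the
    observation window (hypothetical trace format). Blocks that never appear
    in the trace are not listed and simply stay on the lower-cost tier.
    """
    heat = Counter(accesses)
    # Hottest blocks first; ties broken by block ID so the plan is deterministic.
    ranked = sorted(heat, key=lambda block: (-heat[block], block))
    ssd = set(ranked[:ssd_capacity_blocks])
    hdd = set(ranked[ssd_capacity_blocks:])
    return ssd, hdd


if __name__ == "__main__":
    ssd, hdd = plan_tiers([1, 2, 1, 3, 1, 2, 4], ssd_capacity_blocks=2)
    print("SSD tier:", sorted(ssd), "HDD tier:", sorted(hdd))
```

For example, with the trace [1, 2, 1, 3, 1, 2, 4] and room for two blocks on SSD, blocks 1 and 2 are placed on the SSD tier, and blocks 3 and 4 are placed on the lower-cost disks.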

2.1.6 Hybrid Drives

Hybrid drives combine traditional spinning disks with nonvolatile memory or small SSDs that act as a large buffer. This method provides the potential benefits of solid-state storage with the cost effectiveness of traditional disks. Currently, these drives are not commonly found in enterprise storage arrays.

2.1.7 Advanced Format Drives

Windows Server 2012 introduced support for Advanced Format large sector disks that support 4096-byte sectors (referred to as 4K), rather than the traditional 512-byte sectors, which ship with most hard drives.

This change offers:

- Higher capacity drives

- Better error correction

- More efficient signal-to-noise ratios

To support compatibility, two types of 4K drives exist: 512-byte emulation (512e) and 4K native. 512e drives present a 512-byte logical sector to use as the unit of addressing, and they present a 4K physical sector to use as the unit of atomic write (the unit defined by the completion of Read and Write operations in a single operation).
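The practical consequence of 512e emulation is alignment. A write that only partially covers a 4K physical sector forces the drive to read that sector, merge in the new data, and write it back (read-modify-write). By contrast, a 4K native drive uses 4K for both its logical and physical sector size. The minimal Python sketch below counts how many physical sectors a given write only partially covers on a 512e drive. The function name and its defaults are illustrative.

```python
def rmw_sectors(offset_bytes: int, length_bytes: int,
                logical: int = 512, physical: int = 4096) -> int:
    """Count 4K physical sectors that a write on a 512e drive only partially covers.

    Each partially covered physical sector requires a read-modify-write cycle;
    fully covered sectors can be written directly. Only the first and last
    sectors of a contiguous write can be partial.
    """
    if offset_bytes % logical or length_bytes % logical:
        raise ValueError("512e drives are addressed in whole 512-byte logical sectors")
    if length_bytes <= 0:
        return 0
    end = offset_bytes + length_bytes
    partial = set()
    if offset_bytes % physical:              # write starts mid-sector
        partial.add(offset_bytes // physical)
    if end % physical:                       # write ends mid-sector
        partial.add((end - 1) // physical)
    return len(partial)


if __name__ == "__main__":
    print(rmw_sectors(4096, 8192))  # aligned 8 KB write
    print(rmw_sectors(512, 512))    # single 512-byte write inside one 4K sector
    print(rmw_sectors(2048, 4096))  # 4 KB write straddling two 4K sectors
```

This is the kind of penalty that alignment-aware formats such as VHDX, discussed later in this document, are designed to avoid on large-sector disks.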

2.2 Storage Controller Architectures

For servers that will be directly connected to storage devices or arrays (which could be servers running Hyper-V or file servers that will provide storage), the choice of storage controller architecture is critical to performance, scale, and overall cost.

2.2.1 SATA III

SATA III controllers can operate at speeds of up to 6 Gbps, and enterprise-oriented controllers can include varying amounts of cache on the controller to improve performance.

SAS controllers can also operate at speeds of up to 6 Gbps, and they are more common in server form factors than SATA. When running Windows Server 2012 R2, it is important to understand the difference between host bus adapters (HBAs) and RAID controllers.

SAS HBAs provide direct access to the disks, trays, or arrays that are attached to the controller. There is no controller-based RAID. Disk high availability is provided by the array or by the tray. In Storage Spaces, high availability is provided by higher-level software layers. SAS HBAs are common in one-, two-, and four-port models.

To support the Storage Spaces feature in Windows Server 2012 R2, the HBA must correctly report the bus type of the devices that are connected to it. For example, drives that are connected through a SAS HBA provide a valid configuration, whereas drives that are connected through a RAID HBA provide an invalid configuration. In addition, all commands must be passed directly to the underlying physical devices. The physical devices must not be abstracted (that is, formed into a logical RAID device), and the HBA must not respond to commands on behalf of the physical devices.
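To illustrate the bus-type requirement above, the Python sketch below filters a disk inventory in the way an administrator might before creating a pool. The field names mirror the FriendlyName, BusType, and CanPool properties that the Windows Get-PhysicalDisk cmdlet reports. However, the PhysicalDisk class, the pool_candidates function, and the sample disks are hypothetical. Requiring SAS for clustered pools follows the SAS requirement stated earlier in this document. The model is simplified and does not enumerate every supported bus type.

```python
from dataclasses import dataclass


@dataclass
class PhysicalDisk:
    # Hypothetical record; fields mirror Get-PhysicalDisk's FriendlyName,
    # BusType, and CanPool properties.
    friendly_name: str
    bus_type: str
    can_pool: bool


def pool_candidates(disks: list[PhysicalDisk], clustered: bool) -> list[str]:
    """Names of disks that look usable for a Storage Spaces pool (simplified)."""
    names = []
    for disk in disks:
        bus = disk.bus_type.upper()
        if not disk.can_pool or bus == "RAID":
            # Not poolable, or sitting behind a RAID HBA that abstracts the drive.
            continue
        if clustered and bus != "SAS":
            # Clustered Storage Spaces needs dual-ported SAS (including NL-SAS) drives.
            continue
        names.append(disk.friendly_name)
    return names


if __name__ == "__main__":
    inventory = [
        PhysicalDisk("PD1", "SAS", True),
        PhysicalDisk("PD2", "SATA", True),
        PhysicalDisk("PD3", "RAID", True),
        PhysicalDisk("PD4", "SAS", False),
    ]
    print(pool_candidates(inventory, clustered=False))
    print(pool_candidates(inventory, clustered=True))
```

With this sample inventory, a standalone pool could use PD1 and PD2, while a clustered pool could use only PD1.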

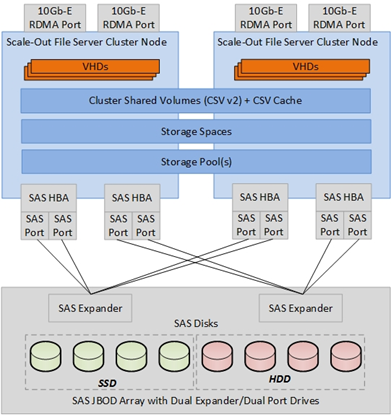

Figure 1 Example SAS JBOD Storage architecture

2.2.2 PCIe RAID/Clustered RAID

Peripheral Component Interconnect Express (PCIe) RAID controllers are the traditional cards that are found in servers. They provide access to storage systems, and they can include RAID technology. RAID controllers are not typically used in cluster scenarios, because clustering requires shared storage. If Storage Spaces is used, RAID should not be enabled because Storage Spaces handles data availability and redundancy.

Clustered RAID controllers are a type of storage interface card that can be used with shared storage and cluster scenarios. The clustered RAID controllers can provide shared storage across configured servers.

The clustered RAID controller solution must pass the Cluster in a Box Validation Kit. This step is required to make sure that the solution provides the storage capabilities that are necessary for IaaS-capable failover cluster environments.

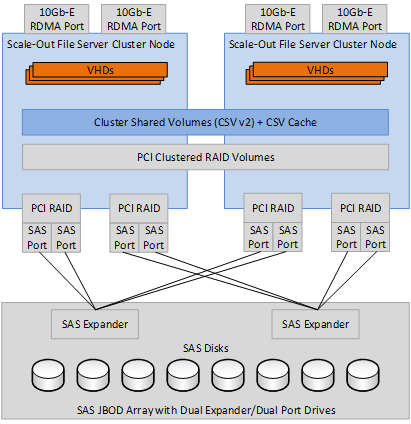

Figure 2 Clustered RAID controllers

2.2.3 Fibre Channel HBA

Fibre Channel HBAs provide one of the more common connectivity methods for storage, particularly in clustering and shared storage scenarios. Some HBAs include two or four ports, at speeds of 4, 8, or 16 (Gen 5) Gbps. Windows Server 2012 R2 supports a large number of logical unit numbers (LUNs) per HBA[1]. This capacity is expected to exceed customers' needs for addressable LUNs in a SAN.

Hyper-V in Windows Server 2012 R2 provides the ability to support virtual Fibre Channel adapters within a guest operating system. Virtual Fibre Channel is not required simply because Fibre Channel presents storage to the servers running Hyper-V. Instead, guest Fibre Channel is designed to reflect the Fibre Channel interface on the host machine to the virtual machines located on that host.

Although virtual Fibre Channel is discussed in later sections of this document, it is important to understand that the HBA ports that are used with virtual Fibre Channel should be set up in a Fibre Channel topology that supports N_Port ID Virtualization (NPIV), and they should be connected to an NPIV-enabled SAN. To utilize this feature, the Fibre Channel adapters must also support devices that present LUNs.

2.3 Storage Networking

A variety of storage networking protocols exist to support traditional SAN-based scenarios, network-attached storage scenarios, and the newer software-defined infrastructure scenarios that support file-based storage through the use of SMB.

2.3.1 Fibre Channel

Historically, Fibre Channel has been the storage protocol of choice for enterprise data centers for a variety of reasons, including performance and low latency. These considerations have offset the typically higher costs of Fibre Channel. The continually advancing performance of Ethernet from 1 Gbps to 10 Gbps and beyond has led to great interest in storage protocols that use Ethernet transports, such as iSCSI and Fibre Channel over Ethernet (FCoE).

Given the long history of Fibre Channel in the data center, many organizations have a significant investment in a Fibre Channel–based SAN infrastructure. Windows Server 2012 R2 continues to provide full support for Fibre Channel hardware that is logo-certified. There is also support for virtual Fibre Channel in guest virtual machines through a Hyper-V feature in Windows Server 2012 and Windows Server 2012 R2.

2.3.2 iSCSI

In Windows Server 2012 R2 and Windows Server 2012, the iSCSI Target Server is available as a built-in role service of the File and Storage Services server role. The iSCSI Target Server capabilities were enhanced to support diskless network boot.

In Windows Server 2012 R2 and Windows Server 2012, the iSCSI Target Server feature provides a network-boot capability from operating system images that are stored in a centralized location. This capability supports commodity hardware for up to 256 computers.

The Windows Server 2012 R2 iSCSI target supports, per target:

- Up to 276 LUNs

- Up to 544 sessions

In addition, the network-boot capability does not require special hardware, but it is recommended to be used in conjunction with 10 GbE adapters that support iSCSI boot capabilities. For Hyper-V, iSCSI-capable storage provides an advantage because Hyper-V virtual machines can use the iSCSI protocol to access shared storage for guest clustering.

The iSCSI Target Server uses the VHDX format to provide a storage format for LUNs. The VHDX format provides data corruption protection during power failures and optimizes structural alignments of dynamic and differencing disks to prevent performance degradation on large-sector physical disks. This also provides the ability to:

- Provision target LUNs up to 64 TB

- Provision fixed-size disks

- Provision dynamically growing disks

In Windows Server 2012 R2, all new disks that are created in the iSCSI Target Server use the VHDX format; however, standard disks with the VHD format can be imported.

Note:

At the time this document was written, Microsoft Azure IaaS supports only the .vhd file format. You will need to convert .vhdx disks before importing them into Microsoft Azure Infrastructure Services.

The iSCSI Target Server in Windows Server 2012 R2 enables Force Unit Access (FUA) for I/O on its back-end virtual disk only if the front-end I/O that the iSCSI Target Server received from the initiator requires it. This has the potential to improve performance, assuming FUA-capable back-end disks or JBODs are used with the iSCSI Target Server.

2.3.3 Fibre Channel over Ethernet (FCoE)

A key advantage of the storage protocols using an Ethernet transport is the ability to use a converged network architecture. Converged networks have an Ethernet infrastructure that serves as the transport for LAN and storage traffic. This can reduce costs by eliminating dedicated Fibre Channel switches and cables.

Several enhancements to standard Ethernet are required for FCoE. The enriched Ethernet is commonly referred to as enhanced Ethernet or Data Center Ethernet. These enhancements require Ethernet switches that are capable of supporting enhanced Ethernet.

2.3.4 InfiniBand

InfiniBand is an industry-standard specification that defines an input/output architecture that is used to interconnect servers, communications infrastructure equipment, storage, and embedded systems. InfiniBand is a true fabric architecture that utilizes switched, point-to-point channels with data transfers of up to 120 gigabits per second (Gbps) in chassis backplane applications and through external copper and optical fiber connections.

InfiniBand provides a low-latency, high-bandwidth interconnection that requires low processing overhead. It is ideal for carrying multiple traffic types (such as clustering, communications, storage, and management) over a single connection.

2.3.5 Switched SAS

Although switched SAS is not traditionally viewed as a storage networking technology, it is possible to design switched SAS storage infrastructures. This can be a low-cost and powerful approach when combined with Windows Server 2012 R2 features, such as Storage Spaces and SMB 3.0.

SAS switches enable multiple host servers to be connected to multiple storage trays (SAS JBODs) with multiple paths between each, as shown in Figure 3. Multiple-path SAS implementations use a single domain method to provide fault tolerance. Current mainstream SAS hardware supports 6 Gbps. SAS switches support domains that enable functionality similar to zoning in Fibre Channel.

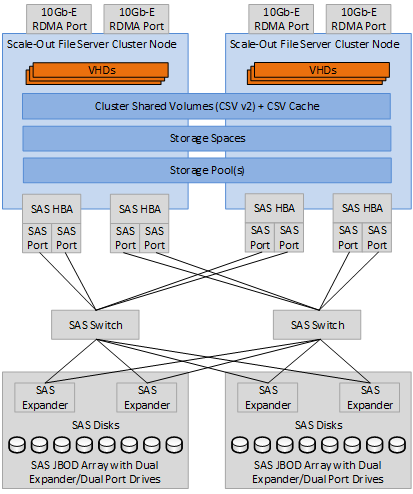

Figure 3 SAS switch connected to multiple SAS JBOD arrays

2.3.6 Network File System

File-based storage is a practical alternative to SAN storage, and it is popular because it is simple to provision and manage. One example of this trend is the popularity of deploying and running VMware vSphere virtual machines from file-based storage that is accessed over the Network File System (NFS) protocol.

To support this scenario, Windows Server 2012 R2 includes an updated Server for NFS that supports NFS 4.1 and can take advantage of many other performance, reliability, and availability enhancements that are available throughout the Windows storage stack.

Some of the key features that are available in Server for NFS include:

- Storage for VMware virtual machines over NFS. In Windows Server 2012 R2, you can deploy Server for NFS as a highly available storage back end for VMware virtual machines. Critical components of the NFS stack have been designed to provide transparent failover to NFS clients.

- NFS 4.1 protocol. Some of the features of NFS 4.1 include a single-server namespace for share management, Kerberos version 5 support for security (including authentication, integrity, and privacy), VSS snapshot integration for backup, and Unmapped UNIX User Access for user account integration. Windows Server 2012 R2 supports simultaneous SMB 3.0 and NFS access to the same share, identity mapping by using stores based on RFC-2307 for identity integration, and high availability cluster deployments.

- Windows PowerShell. Over 40 Windows PowerShell cmdlets provide task-based remote management for every aspect of Server for NFS.

- Identity mapping. Windows Server 2012 R2 includes a flat file–based identity-mapping store. Windows PowerShell cmdlets replace manual steps to provision Active Directory Lightweight Directory Services (AD LDS) as an identity-mapping store and to manage mapped identities.

2.3.7 SMB 3.0

Similar to the other file-based storage options that were discussed earlier, Hyper-V and SQL Server can take advantage of Server Message Block (SMB)-based storage for virtual machines and SQL Server database data and logs. This support is enabled by the features and capabilities provided in Windows Server 2012 R2 and Windows Server 2012 as outlined in the following sections.

Note:

It is strongly recommended that Scale-Out File Server be used in conjunction with Microsoft IaaS architectures that use SMB storage, as discussed in the following sections.

Figure 4 Example of SMB 3.0-enabled network-attached storage

2.3.7.1 SMB Direct (SMB over RDMA)

The SMB protocol in Windows Server 2012 R2 includes support for remote direct memory access (RDMA) on network adapters, which allows storage-performance capabilities that rival Fibre Channel. RDMA enables this performance capability because network adapters can operate at full speed with very low latency due to their ability to bypass the kernel and perform Read and Write operations directly to and from memory. This capability is possible because transport protocols are implemented on the network adapter hardware, which allows for zero-copy networking by bypassing the kernel.

Note:

SMB Direct is often referred to as SMB over RDMA.

Applications using SMB can transfer data directly from memory, through the network adapter, to the network, and then to the memory of the application that is requesting data from the file share. This capability is especially useful for Read and Write intensive workloads, such as in Hyper-V or Microsoft SQL Server, and it results in remote file server performance that is comparable to local storage.

SMB Direct requires:

- At least two computers running Windows Server 2012 R2. No additional features have to be installed, and the technology is available by default.

- Network adapters that are RDMA-capable with the latest vendor drivers installed. SMB Direct supports common RDMA-capable network adapter types, including Internet Wide Area RDMA Protocol (iWARP), InfiniBand, and RDMA over Converged Ethernet (RoCE).

SMB Direct works in conjunction with SMB Multichannel to transparently provide a combination of performance and failover resiliency when multiple RDMA links between clients and SMB file servers are detected. RDMA bypasses the kernel stack, and therefore RDMA does not work with NIC Teaming; however, it does work with SMB Multichannel, because SMB Multichannel is enabled at the application layer.

Note:

SMB multichannel provides the equivalent of NIC teaming for access to SMB file shares.

SMB Direct optimizes I/O with high-speed network adapters, including 40 Gbps Ethernet and 56 Gbps InfiniBand through the use of batching operations, RDMA remote invalidation, and non-uniform memory access (NUMA) optimizations.

2.3.7.2 SMB Multichannel

The SMB 3.0 protocol in Windows Server 2012 R2 supports SMB Multichannel, which provides scalable and resilient connections to SMB shares by dynamically creating multiple connections for a single session, or multiple sessions on a single connection, depending on connection capabilities and current demand. This capability to create session-to-connection associations gives SMB a number of key features, including:

- Connection resiliency: With the ability to dynamically associate multiple connections with a single session, SMB gains resiliency against connection failures that are usually caused by network interfaces or components. SMB Multichannel also allows clients to actively manage paths of similar network capability in a failover configuration that automatically switches sessions to the available paths if one path becomes unresponsive.

- Network usage: SMB can utilize receive-side scaling (RSS)–capable network interfaces with the multiple connection capability of SMB Multichannel to fully use high-bandwidth connections, such as those that are available on 10 GbE networks, during Read and Write operations with workloads that are evenly distributed across multiple CPUs.

- Load balancing: Clients can adapt to changing network conditions to dynamically rebalance loads to a connection or across a set of connections that are more responsive when congestion or other performance issues occur.

- Transport flexibility: Because SMB Multichannel also supports single-session-to-multiple-connection capabilities, SMB clients can adjust dynamically when new network interfaces become active. As a result, SMB Multichannel is enabled automatically whenever multiple network paths are detected, and it can grow dynamically to use additional paths as they are added, without administrator intervention.

SMB Multichannel has the following requirements, which are organized by how SMB Multichannel prioritizes connections when multiple connection types are available:

- RDMA-capable network connections: SMB Multichannel can be used with a single InfiniBand connection on the client and server or with a dual InfiniBand connection on each server, connected to different subnets. Although SMB Multichannel offers scaling performance enhancements in single-adapter scenarios through RDMA and RSS, if available, it cannot supply failover and load balancing capabilities without multiple paths. RDMA-capable network adapters include iWARP, InfiniBand, and RoCE.

- RSS-capable network connections: SMB Multichannel can utilize RSS-capable connections in one-to-one connection scenarios or multiple connection scenarios. Multichannel load balancing and failover capabilities are not available unless multiple paths exist, but RSS-capable NICs still improve scaling by spreading processing overhead across multiple processors.

- Load balancing and failover or aggregate interfaces: When RDMA or RSS connections are not available, SMB prioritizes connections that use a collection of two or more physical interfaces. This requires more than one network interface on the client and server, where both are configured as a network adapter team. In this scenario, load balancing and failover are the responsibility of the teaming protocol, not SMB Multichannel, when only one NIC Teaming connection is present and no other connection path is available.

- Standard interfaces and Hyper-V virtual networks: These connection types can use SMB Multichannel capabilities, but only when multiple paths exist. For all practical purposes, a 1 Gbps Ethernet connection is the lowest-priority connection type that is capable of using SMB Multichannel.

- Wireless network interfaces: Wireless interfaces are not capable of multichannel operations.
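The priority order in the list above can be modeled in a few lines. The Python sketch below is a simplified, hypothetical model, not the actual selection logic in Windows. It drops wireless interfaces and keeps only the interfaces of the best available type (RDMA, then RSS, then teamed, then standard). It then orders the remaining interfaces by link speed. The Interface class and the channels_to_use function are illustrative names. Multichannel failover and load balancing apply only when more than one interface remains after filtering.

```python
from dataclasses import dataclass

# Lower number = preferred, following the order of the list above (simplified).
PRIORITY = {"rdma": 0, "rss": 1, "team": 2, "standard": 3}


@dataclass
class Interface:
    name: str
    kind: str          # "rdma", "rss", "team", "standard", or "wireless"
    speed_gbps: float


def channels_to_use(interfaces: list[Interface]) -> list[Interface]:
    """Pick the interfaces that this simplified Multichannel model would use."""
    # Wireless (and any unknown kind) is not eligible for multichannel.
    usable = [i for i in interfaces if i.kind in PRIORITY]
    if not usable:
        return []
    best = min(PRIORITY[i.kind] for i in usable)
    chosen = [i for i in usable if PRIORITY[i.kind] == best]
    # Fastest first; sorted() is stable, so equal speeds keep their input order.
    return sorted(chosen, key=lambda i: -i.speed_gbps)


if __name__ == "__main__":
    nics = [
        Interface("Wi-Fi", "wireless", 0.3),
        Interface("NIC1", "standard", 1),
        Interface("NIC2", "standard", 1),
        Interface("RNIC1", "rdma", 40),
    ]
    print([i.name for i in channels_to_use(nics)])
    print([i.name for i in channels_to_use(nics[:3])])
```

With one 40 Gbps RDMA adapter and two 1 Gbps standard NICs, only the RDMA adapter is selected. If you remove that adapter, the two 1 Gbps NICs are used together.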

When connections are not similar between client and server, SMB Multichannel utilizes available connections when multiple connection paths exist. For example, if the SMB file server has a 10 GbE connection, but the client has only four 1 GbE connections, and each connection forms a path to the file server, then SMB Multichannel can create connections on each 1 GbE interface. This provides better performance and resiliency, even though the network capabilities of the server exceed the network capabilities of the client.

Note:

SMB Multichannel distributes whole Read and Write operations, not parts of a single operation: each Read or Write operation travels through only one channel. However, a single file copy, or access to a single file such as a VHD, can still use multiple channels simultaneously, because its many individual Read and Write operations are spread across the available channels.
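The distinction in this note can be shown with a toy scheduler. The Python sketch below assigns each I/O of a single file to exactly one channel in round-robin order. Round-robin is an assumption made for illustration, not how SMB actually schedules requests, and assign_ios is a hypothetical helper. Every channel carries part of the file, but no individual Read or Write is split.

```python
from itertools import cycle


def assign_ios(io_ids: list[int], channels: list[str]) -> dict[int, str]:
    """Map each individual I/O to exactly one channel, in round-robin order.

    A single large file copy consists of many I/Os, so together they use every
    channel, but no single I/O is ever split across channels.
    """
    if not channels:
        raise ValueError("at least one channel is required")
    rotation = cycle(channels)
    return {io: next(rotation) for io in io_ids}


if __name__ == "__main__":
    plan = assign_ios(list(range(6)), ["NIC-A", "NIC-B", "NIC-C"])
    for io, channel in plan.items():
        print(f"I/O {io} -> {channel}")
```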

2.3.7.3 SMB Transparent Failover

SMB Transparent Failover helps administrators configure file shares in Windows failover cluster configurations for continuous availability. Continuous availability enables administrators to perform hardware or software maintenance on any cluster node without interrupting the server applications that are storing their data files on these file shares.

If there is a hardware or software failure, the server application nodes transparently reconnect to another cluster node without interrupting the server application I/O operations. By using Scale-Out File Server, SMB Transparent Failover allows the administrator to redirect a server application node to a different file-server cluster node to facilitate better load balancing.

SMB Transparent Failover has the following requirements:

- A failover cluster that is running Windows Server 2012 R2 with at least two nodes. The configuration of servers, storage, and networking must pass all of the tests performed in the Cluster Configuration Validation Wizard.

- Scale-Out File Server role installed on all cluster nodes.

- Clustered file server configured with one or more file shares that have continuous availability.

- Client computers running Windows Server 2012 R2, Windows Server 2012, Windows 8.1, or Windows 8.

Computers running down-level SMB versions, such as SMB 2.1 or SMB 2.0, can connect to and access data on a file share that has continuous availability, but they do not benefit from SMB Transparent Failover.

Note:

Hyper-V over SMB requires SMB 3.0; therefore, down-level versions of the SMB protocol are not relevant for these designs.
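The following sketch shows why down-level clients can reach a continuously available share but do not get transparent failover. Client and server negotiate the highest SMB dialect that both support, and only SMB 3.0 or later carries the continuous-availability behavior. The OS-to-dialect table lists the well-known maximum dialect of each Windows version. The function names are hypothetical, and the sketch checks only the dialect, not the other requirements listed above.

```python
# Highest SMB dialect supported by each Windows version.
MAX_DIALECT = {
    "Windows Vista": "2.0",
    "Windows Server 2008": "2.0",
    "Windows 7": "2.1",
    "Windows Server 2008 R2": "2.1",
    "Windows 8": "3.0",
    "Windows Server 2012": "3.0",
    "Windows 8.1": "3.02",
    "Windows Server 2012 R2": "3.02",
}


def _as_tuple(dialect):
    """Turn "3.02" into (3, 2) so that dialects compare numerically."""
    return tuple(int(part) for part in dialect.split("."))


def negotiated_dialect(client_os, server_os="Windows Server 2012 R2"):
    """Client and server settle on the highest dialect that both of them support."""
    return min(MAX_DIALECT[client_os], MAX_DIALECT[server_os], key=_as_tuple)


def gets_transparent_failover(client_os, server_os="Windows Server 2012 R2"):
    """Only SMB 3.0 or later can use transparent failover (dialect check only)."""
    return _as_tuple(negotiated_dialect(client_os, server_os)) >= (3, 0)


print(negotiated_dialect("Windows 7"), gets_transparent_failover("Windows 7"))
print(negotiated_dialect("Windows 8.1"), gets_transparent_failover("Windows 8.1"))
```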

2.3.7.4 SMB Encryption

SMB Encryption protects data in transit from snooping threats, with no setup requirements beyond enabling the feature. SMB 3.0 in Windows Server 2012 R2 secures data transfers by encrypting data end to end, which protects against tampering and eavesdropping attacks.

The biggest potential benefit of using SMB Encryption instead of general solutions (such as IPsec) is that there are no deployment requirements or costs beyond changing the SMB settings in the server.

SMB 3.0 uses AES-CCM for encryption. For signing (validation), it uses a newer algorithm, AES-CMAC, instead of the HMAC-SHA-256 algorithm that SMB 2.x uses. AES-CCM and AES-CMAC can be dramatically accelerated on most modern CPUs that support AES instructions.

An administrator can enable SMB Encryption for the entire server or for specific file shares only. It is a cost-effective way to protect data from snooping and tampering attacks. Administrators can turn it on through the File and Storage Services pages in Server Manager or by using Windows PowerShell.

2.3.8 Volume Shadow Copy Service

Volume Shadow Copy Service (VSS) is a framework that enables volume backups to run while applications on a system continue to write to the volumes. A feature called VSS for SMB File Shares supports applications that store data files on remote SMB file shares. It was introduced in Windows Server 2012 and is available in Windows Server 2012 R2. This feature enables VSS-aware backup applications to perform consistent shadow copies of VSS-aware server applications that store data on SMB 3.0 file shares. Before this feature, VSS supported only shadow copies of data stored on local volumes.

2.3.9 Scale-Out File Server

One of the main advantages of file storage over block storage is ease of configuration, combined with the ability to configure folders that multiple clients can share. SMB takes this one step further with the SMB Scale-Out feature, which can share the same folders from multiple nodes of the same file server cluster. The Cluster Shared Volumes (CSV) feature makes this possible, because CSV supports file sharing in Windows Server 2012 R2.

For example, if you have a four-node file-server cluster that uses Scale-Out File Server, an SMB client will be able to access the share from any of the four nodes. This active-active configuration lets you balance the load across cluster nodes by allowing you to move clients without any service interruption. This means that the maximum file-serving capacity for a given share is no longer limited by the capacity of a single cluster node.

Scale-Out File Server also keeps configurations simple, because a share is configured only once and is then consistently available from all nodes of the cluster. SMB Scale-Out also simplifies administration because it does not require cluster virtual IP addresses or multiple cluster file-server resources to utilize all cluster nodes.

Scale-Out File Server requires:

- A failover cluster that is running Windows Server 2012 R2 with at least two nodes. The cluster must pass the tests in the Cluster Configuration Validation Wizard. The clustered role must also be created specifically as a Scale-Out File Server. A traditional file server clustered role does not qualify, and an existing traditional file server clustered role cannot be used in (or upgraded to) a Scale-Out File Server cluster.

- File shares that are created on a Cluster Shared Volume with continuous availability. This is the default setting.

- Client computers running Windows Server 2012 R2, Windows Server 2012, Windows 8.1, or Windows 8.

Windows Server 2012 R2 provides several capabilities with respect to Scale-Out File Server functionality including:

- Support for multiple instances

- Bandwidth management

- Automatic rebalancing

SMB in Windows Server 2012 R2 provides an additional instance on each Scale-Out File Server cluster node specifically for CSV traffic. The default instance handles incoming traffic from SMB clients that are accessing regular file shares, while the additional instance handles only inter-node CSV traffic.

SMB in Windows Server 2012 R2 also supports bandwidth limits based on predefined categories. SMB traffic is divided into three predefined categories named Default, VirtualMachine, and LiveMigration. You can configure a bandwidth limit for each category, in bytes per second, through Windows PowerShell or WMI. This is especially useful when live migration and SMB Direct (SMB over RDMA) are used.
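In Windows PowerShell, these limits are set per category with the `Set-SmbBandwidthLimit` cmdlet (through its `-Category` and `-BytesPerSecond` parameters), after the SMB Bandwidth Limit feature is installed. The Python sketch below is only a conceptual model of how a token bucket can enforce a bytes-per-second cap per category. It is not how SMB implements the limit, and the category limits shown are illustrative values.

```python
class TokenBucket:
    """Caps throughput at `rate` bytes per second, allowing bursts up to `burst` bytes."""

    def __init__(self, rate, burst):
        self.rate = rate
        self.burst = burst
        self.tokens = float(burst)
        self.last = 0.0

    def try_send(self, nbytes, now):
        """Return True and consume tokens if nbytes may be sent at time `now` (seconds)."""
        # Refill for the time elapsed since the previous call, never beyond the burst size.
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if nbytes <= self.tokens:
            self.tokens -= nbytes
            return True
        return False


# One bucket per predefined SMB category; these limits are illustrative only.
limits = {
    "Default": TokenBucket(rate=500_000_000, burst=500_000_000),
    "VirtualMachine": TokenBucket(rate=1_000_000_000, burst=1_000_000_000),
    "LiveMigration": TokenBucket(rate=250_000_000, burst=250_000_000),
}

print(limits["LiveMigration"].try_send(250_000_000, now=0.0))
print(limits["LiveMigration"].try_send(1, now=0.0))
```

The sketch takes the current time as an explicit argument so that the example is deterministic. A real limiter would read a monotonic clock instead.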

2.4 File Services

2.4.1 Storage Spaces

Storage Spaces adds storage virtualization capabilities to the Windows storage stack. It is built on two concepts:

- Storage pools: Collections of physical disks that enable storage aggregation, elastic capacity expansion, and delegated administration.

- Storage spaces: Virtual disks with associated attributes that include a desired level of resiliency, thin or fixed provisioning, automatic or controlled allocation on diverse storage media, and precise administrative control.

The Storage Spaces feature in Windows Server 2012 R2 can utilize failover clustering for high availability, and it can be integrated with CSV for scalable deployments.

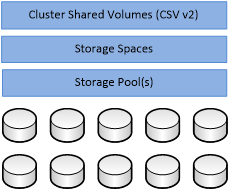

Figure 5 CSV v2 can be integrated with Storage Spaces

The features that Storage Spaces includes are:

- Storage pooling: Storage pools are created based on the needs of the deployment. For example, given a set of physical disks, you can create one pool that uses all of the available physical disks, or multiple pools that divide the physical disks as required. A storage pool can also combine hard disk drives and solid-state drives (SSDs). Pools can be expanded dynamically by adding more drives, so capacity scales with data growth as needed.

- Multitenancy: Administration of storage pools can be controlled through access control lists (ACLs) and delegated on a per-pool basis, thereby supporting multi-tenant hosting scenarios that require tenant isolation. Storage Spaces follows the Windows security model and therefore can be integrated fully with Active Directory Domain Services (AD DS).

- Resilient storage: Storage Spaces supports two optional resiliency modes: mirroring and parity. Capabilities such as per-pool hot spare support, background scrubbing, and intelligent error correction enable optimal service availability despite storage component failures.

- Continuous availability through integration with failover clustering: Storage Spaces is fully integrated with failover clustering to deliver continuous availability. One or more pools can be clustered across multiple nodes in a single cluster. Storage Spaces can then be instantiated on individual nodes, and it will migrate or fail over to a different node in response to failure conditions or because of load balancing. Integration with CSV 2.0 enables scalable access to data on storage infrastructures.

- Optimal storage use: Server consolidation frequently results in multiple datasets that share the same storage hardware. Storage Spaces supports thin provisioning to enable you to share storage capacity among multiple unrelated datasets. Trim support enables capacity reclamation when possible.

- Operational simplicity: Scriptable remote management is permitted through the Windows Storage Management API, Windows Management Instrumentation (WMI), and Windows PowerShell. Storage Spaces can be managed through the File Services GUI in Server Manager or by using task automation with Windows PowerShell cmdlets.

- Fast rebuild: If a physical disk fails, Storage Spaces will regenerate the data from the failed physical disk in parallel. During parallel regeneration, a single disk in the pool serves as the source of data or the target of data, and Storage Spaces maximizes peak sequential throughput. No user action is necessary, and the newly created Storage Spaces will use the new policy.
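The following sketch is a rough worked example of the resiliency trade-off. It estimates usable capacity for each space type. It assumes a single-parity space with a given column count and ignores metadata overhead, reserved rebuild capacity, and column-count rules. Treat the results as upper bounds, not as sizing guidance.

```python
def usable_capacity_tb(raw_tb, resiliency, columns=None):
    """Rough usable capacity; ignores metadata and rebuild reserve (assumption)."""
    if resiliency == "simple":
        return raw_tb                 # no redundancy
    if resiliency == "two-way mirror":
        return raw_tb / 2             # every slab is stored twice
    if resiliency == "three-way mirror":
        return raw_tb / 3             # every slab is stored three times
    if resiliency == "parity":
        if columns is None or columns < 3:
            raise ValueError("single parity needs at least 3 columns")
        # One column's worth of each stripe holds parity information.
        return raw_tb * (columns - 1) / columns
    raise ValueError(f"unknown resiliency: {resiliency}")


raw = 12 * 2  # twelve 2 TB disks
for kind in ("simple", "two-way mirror", "three-way mirror"):
    print(kind, usable_capacity_tb(raw, kind))
print("parity, 4 columns", usable_capacity_tb(raw, "parity", columns=4))
```

For 24 TB of raw capacity, the sketch gives about 12 TB for a two-way mirror, about 8 TB for a three-way mirror, and about 18 TB for a four-column parity space.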

For single-node environments, Windows Server 2012 R2 requires the following:

- Serial ATA (SATA) or SAS-connected disks (in an optional JBOD enclosure)

For multiserver and multisite environments, Windows Server 2012 R2 requires the following:

- Any requirements that are specified for failover clustering and CSV 2.0

- Three or more SAS-connected disks (JBODs) to encourage compliance with Windows Certification requirements

2.4.1.1 Write-Back Cache

Storage Spaces in Windows Server 2012 R2 supports an optional write-back cache that can be configured with simple, parity, and mirrored spaces. The write-back cache is designed to improve performance for workloads with small, random writes by using SSDs to provide a low-latency cache. It has the same resiliency requirements as the storage space it is configured for: a simple space requires a single journal drive, a two-way mirror requires two journal drives, and a three-way mirror requires three journal drives.

If you have dedicated journal drives, they are automatically selected for the write-back cache, and if there are no dedicated journal drives, drives that report the media type as SSDs are selected to host the write-back cache.

The write-back cache is associated with an individual space and is not shared. However, the physical SSDs that host write-back caches can be shared among multiple write-back caches. The write-back cache is enabled by default if SSDs are available in the pool.

Note:

The default size of the write-back cache is 1 GB, and changing it is not recommended. After the write-back cache is created, its size cannot be changed.
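The journal-drive rule above can be expressed as a simple check. This is a hypothetical planning helper, not a Storage Spaces API. It covers only the three resiliency types whose drive counts are listed above and rejects anything else.

```python
# Journal (SSD) drives required for a write-back cache, per the text above.
JOURNAL_DRIVES = {"simple": 1, "two-way mirror": 2, "three-way mirror": 3}


def can_enable_write_back_cache(resiliency, ssd_count):
    """True if the pool has enough SSDs to host a write-back cache for this space."""
    try:
        required = JOURNAL_DRIVES[resiliency]
    except KeyError:
        # Parity spaces support a write-back cache, but their drive count is not listed above.
        raise ValueError(f"not modeled: {resiliency}") from None
    return ssd_count >= required


print(can_enable_write_back_cache("two-way mirror", ssd_count=2))
print(can_enable_write_back_cache("three-way mirror", ssd_count=2))
```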

2.4.1.2 Storage Tiers

Storage Spaces in Windows Server 2012 R2 lets a single virtual disk combine the performance of SSDs with the capacity of hard disk drives to optimize the placement of workload data. This is referred to as Storage Tiering. The most frequently accessed data is prioritized for placement on high-performance SSDs, and less frequently accessed data is prioritized for placement on high-capacity, lower-performance hard disk drives.

Data activity is measured in the background, and data is periodically moved to the appropriate tier with minimal performance impact on running workloads. Administrators can also override automated placement by assigning (pinning) specific files to a tier. Note that storage tiers are compatible only with mirror spaces or simple spaces. Parity spaces are not compatible with storage tiers.
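The following sketch illustrates the idea behind tier optimization. It ranks chunks of data by how often they were accessed during the last interval, fills the SSD tier with the hottest ones, and lets administrator pins take precedence. It is a simplified model: the function name, the unit of placement (called a slab here), and the single-pass ranking are illustrative, not the Storage Spaces implementation. It also enforces the rule that tiers apply only to simple and mirror spaces.

```python
from collections import Counter


def plan_tier_placement(access_log, ssd_slots, resiliency, pinned=None):
    """Return {slab_id: "ssd" | "hdd"}, placing the most-accessed slabs on SSD.

    access_log: iterable of slab IDs, one entry per access in the last interval.
    pinned: optional {slab_id: "ssd" | "hdd"} overrides set by an administrator.
    """
    if resiliency not in ("simple", "mirror"):
        raise ValueError("storage tiers require a simple or mirror space")
    pinned = pinned or {}
    placement = dict(pinned)                       # pins always win
    free = ssd_slots - sum(1 for tier in pinned.values() if tier == "ssd")
    for slab, _hits in Counter(access_log).most_common():
        if slab in placement:
            continue
        if free > 0:
            placement[slab] = "ssd"
            free -= 1
        else:
            placement[slab] = "hdd"
    return placement


log = ["a", "b", "a", "c", "a", "b"]                 # slab "a" is the hottest
print(plan_tier_placement(log, ssd_slots=1, resiliency="mirror"))
print(plan_tier_placement(log, ssd_slots=1, resiliency="mirror", pinned={"c": "ssd"}))
```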

2.4.2 Resilient File System

Windows Server 2012 R2 supports the updated local file system called Resilient File System (ReFS). ReFS promotes data availability and online operation, despite errors that would historically cause data loss or downtime.

ReFS was designed with three key goals in mind:

- Maintain the highest possible levels of system availability and reliability, under the assumption that the underlying storage might be unreliable.

- Provide a full end-to-end resilient architecture when it is used in conjunction with Storage Spaces, so that these two features magnify the capabilities and reliability of one another when they are used together.

- Maintain compatibility with widely adopted and successful NTFS file system features.

In Windows Server 2012 R2, Cluster Shared Volumes support ReFS. ReFS in Windows Server 2012 R2 can also automatically correct corruption in a Storage Spaces parity space.

2.4.3 NTFS

In Windows Server 2012 R2 and Windows Server 2012, NTFS has been enhanced to maintain data integrity when using SATA drives. NTFS provides online corruption scanning and repair capabilities that reduce the need to take volumes offline.

Two key features of NTFS make it a good choice for Microsoft IaaS storage infrastructures. The first addresses the need to maintain data integrity on inexpensive commodity storage. NTFS was enhanced to rely on the flush command instead of “forced unit access” for all operations that require Write ordering. This improves resiliency against metadata inconsistencies caused by unexpected power loss, so you can use SATA drives more safely.

The second is high availability, which NTFS achieves through a combination of features that include:

- Online corruption scanning: Windows Server 2012 R2 performs online corruption scanning operations as a background operation on NTFS volumes. This scanning operation identifies and corrects areas of data corruption if they occur, and it includes logic that distinguishes between transient conditions and actual data corruption, which reduces the need for CHKDSK operations.

- Improved self-healing: Windows Server 2012 R2 provides online self-healing to resolve issues on NTFS volumes without the need to take the volume offline to run CHKDSK.

- Reduced repair times: In the rare case of data corruption that cannot be fixed with online self-healing, you are notified that data corruption has occurred, and you can choose when to take the volume offline for a CHKDSK operation. Furthermore, because of the online corruption-scanning capability, CHKDSK scans and repairs only tagged areas of data corruption. Because it does not have to scan the whole volume, the time that is necessary to perform an offline repair is greatly reduced. In most cases, repairs that would have taken hours on volumes that contain a large number of files, now take seconds.

2.4.4 Scale-Out File Server

In Windows Server 2012 R2, the following clustered file-server types are available:

- Scale-Out File Server cluster for application data: This clustered file server lets you store server application data (such as virtual machine files in Hyper-V) on file shares, and obtain a similar level of reliability, availability, manageability, and performance that you would expect from a storage area network. All file shares are online on all nodes simultaneously. File shares that are associated with this type of clustered file server are called scale-out file shares. This is sometimes referred to as an Active/Active cluster.

- File Server for general use: This is the continuation of the clustered file server that has been supported in Windows Server since the introduction of failover clustering. This type of clustered file server, and thus all of the file shares that are associated with the clustered file server, is online on one node at a time. This is sometimes referred to as an Active/Passive or a Dual/Active cluster. File shares that are associated with this type of clustered file server are called clustered file shares.

A Scale-Out File Server cluster is designed to provide file shares that are continuously available for file-based server application storage. Scale-out file shares provide the ability to share the same folder from multiple nodes of the same cluster.

For instance, if you have a four-node file-server cluster that is using the SMB Scale-Out feature, a computer that is running Windows Server 2012 R2 or Windows Server 2012 can access file shares from any of the four nodes. This is achieved by utilizing failover-clustering features in Windows Server 2012 R2 and Windows Server 2012 and in SMB 3.0.

File server administrators can provide scale-out file shares and continuously available file services to server applications and respond to increased demands quickly by bringing more servers online. All of this is transparent to the server application. When combined with Scale-Out File Server, this provides comparable capabilities to traditional SAN architectures.

Potential benefits that are provided by a Scale-Out File Server cluster in Windows Server 2012 R2 include:

- Active/Active file shares. All cluster nodes can accept and serve SMB client requests. By making the file-share content accessible through all cluster nodes simultaneously, SMB 3.0 clusters and clients cooperate to provide transparent failover (continuous availability) to alternative cluster nodes during planned maintenance and unplanned failures without service interruption.

- Increased bandwidth. The maximum file share bandwidth is the total bandwidth of all file-server cluster nodes. The total bandwidth is not constrained by the bandwidth of a single cluster node, only by the capability of the backing storage system. You can increase the total bandwidth by adding nodes.

- CHKDSK with zero downtime. A CSV file system can perform CHKDSK without affecting applications that have open handles in the file system.

- Cluster Shared Volumes cache. CSV in Windows Server 2012 R2 supports a Read cache, which can significantly improve performance in certain scenarios, such as a Virtual Desktop Infrastructure (VDI).

- Simplified management. You create the Scale-Out File Server cluster and then add the necessary CSV and file shares.
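The bandwidth rule above reduces to simple arithmetic: aggregate share bandwidth is the sum of node bandwidth, capped by what the backing storage can deliver. The hypothetical helper below ignores protocol overhead and redirected CSV I/O.

```python
def sofs_share_bandwidth_gbps(node_bandwidth_gbps, storage_gbps):
    """Upper bound on share throughput: total node bandwidth, capped by the storage."""
    return min(sum(node_bandwidth_gbps), storage_gbps)


# Four nodes, each with 2 x 10 GbE, in front of storage that sustains 48 Gbps.
print(sofs_share_bandwidth_gbps([20, 20, 20, 20], storage_gbps=48))
```

For example, four nodes with 2 × 10 GbE each offer 80 Gbps of network bandwidth. If the backing storage sustains only 48 Gbps, the share tops out near 48 Gbps, and adding nodes no longer helps.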

2.5 Storage Capabilities

2.5.1 Data Deduplication

Data deduplication can significantly improve the efficiency of storage capacity usage. In Windows Server 2012 R2, data deduplication provides the following features:

- Capacity optimization: Data deduplication lets you store more data in less physical space. Data deduplication uses variable size chunking and compression, which deliver optimization ratios of up to 2:1 for general file servers and up to 20:1 for VHD libraries.

- Scalability and performance: Data deduplication is scalable, resource-efficient, and non-intrusive. It can run on dozens of large volumes of primary data simultaneously, without affecting other workloads on the server.

- Reliability and data integrity: Windows Server 2012 R2 utilizes checksum, consistency, and identity validation. In addition, to recover data in the event of corruption, Windows Server 2012 R2 maintains redundancy for all metadata and the most frequently referenced data.

- Bandwidth efficiency with BranchCache: Through integration with BranchCache, the same optimization techniques that are applied for improving data storage efficiency on the disk are applied to transferring data over a wide area network (WAN) to a branch office. This integration results in faster file download times and reduced bandwidth consumption.
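Variable-size (content-defined) chunking places chunk boundaries where the data itself matches a pattern, not at fixed offsets, so identical content in different files produces identical chunks. The following Python sketch shows the technique with a deliberately simple rolling hash and SHA-256 chunk identifiers. The hash, mask, and size limits are assumptions for illustration, compression is omitted, and this is not the algorithm that Windows Server uses.

```python
import hashlib


def chunk(data, mask=0x3FF, min_size=256, max_size=8192):
    """Split bytes at content-defined boundaries (simplified rolling hash)."""
    chunks, start, h = [], 0, 0
    for i, byte in enumerate(data):
        # Shift-and-add hash: with the default mask, the tested low 10 bits depend
        # only on the last 10 bytes, so boundaries follow content, not offsets.
        h = ((h << 1) + byte) & 0xFFFFFFFF
        size = i - start + 1
        if (size >= min_size and (h & mask) == 0) or size >= max_size:
            chunks.append(data[start:i + 1])
            start, h = i + 1, 0
    if start < len(data):
        chunks.append(data[start:])
    return chunks


def deduplicate(files):
    """Store each unique chunk once; each file becomes a list of chunk digests."""
    store, recipes = {}, {}
    for name, data in files.items():
        recipe = []
        for piece in chunk(data):
            digest = hashlib.sha256(piece).hexdigest()
            store.setdefault(digest, piece)  # keep only the first copy
            recipe.append(digest)
        recipes[name] = recipe
    logical = sum(len(d) for d in files.values())
    physical = sum(len(p) for p in store.values())
    return store, recipes, logical, physical


def restore(store, recipe):
    """Rebuild a file from its chunk digests."""
    return b"".join(store[d] for d in recipe)


# Two identical "VHD" files made of pseudo-random bytes.
image = b"".join(hashlib.sha256(n.to_bytes(4, "big")).digest() for n in range(400))
store, recipes, logical, physical = deduplicate({"a.vhd": image, "b.vhd": image})
print(f"optimization ratio {logical / physical:.1f}:1")
```

Storing two identical files gives at least a 2:1 ratio of logical to physical bytes, because the second file adds only references to chunks that are already in the store. The same effect drives the high ratios reported for VHD libraries.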

Data deduplication is supported only on NTFS data volumes hosted on Windows Server 2012 R2 or Windows Server 2012, and on Cluster Shared Volumes in Windows Server 2012 R2.

Deduplication is not supported on:

- Boot volumes

- FAT or ReFS volumes

- Remote mapped or remote mounted drives

- CSV file system volumes in Windows Server 2012

- Live data (such as SQL Server databases)

- Exchange Server stores

- Virtual machines that use local storage on a server running Hyper-V

Unsupported files include:

- Files with extended attributes

- Encrypted files

- Files smaller than 32 KB

- Files with reparse points

From a design perspective, deduplication is not supported for files that have high I/O requirements, or that are open and constantly changing for extended periods, such as running virtual machines or live SQL Server databases. The exception is that in Windows Server 2012 R2, deduplication supports live VHDs for VDI workloads.
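The volume and file restrictions above can be summarized as an eligibility filter. This is a hypothetical planning sketch with invented `VolumeInfo` and `FileInfo` types. It does not query real file-system metadata, and it does not model the workload-level exclusions (SQL Server, Exchange Server, running virtual machines) or the Windows Server 2012 CSV restriction.

```python
from dataclasses import dataclass

MIN_FILE_BYTES = 32 * 1024  # files smaller than 32 KB are not deduplicated


@dataclass
class VolumeInfo:
    file_system: str            # for example "NTFS", "ReFS", or "FAT32"
    is_boot: bool = False
    is_remote: bool = False     # remote mapped or remote mounted drive


@dataclass
class FileInfo:
    path: str
    size: int
    encrypted: bool = False
    has_extended_attributes: bool = False
    is_reparse_point: bool = False


def volume_supported(vol):
    """Only local, non-boot NTFS data volumes qualify."""
    return vol.file_system == "NTFS" and not vol.is_boot and not vol.is_remote


def file_eligible(f):
    """Apply the per-file exclusions listed above."""
    return (f.size >= MIN_FILE_BYTES
            and not f.encrypted
            and not f.has_extended_attributes
            and not f.is_reparse_point)


print(volume_supported(VolumeInfo("ReFS")), volume_supported(VolumeInfo("NTFS")))
print(file_eligible(FileInfo(r"D:\library\base.vhdx", 40 * 1024**3)))
```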

2.5.2 Thin Provisioning and Trim

Instead of removing redundant data on the volume, thin provisioning gains efficiencies by making it possible to allocate just enough storage at the moment of storage allocation, and then increase capacity as your business needs grow over time.

Windows Server 2012 R2 provides full support for thinly provisioned storage arrays, which lets you get the most out of your storage infrastructure. These sophisticated storage solutions offer just-in-time allocations (known as thin provisioning), and the ability to reclaim storage that is no longer needed (known as trim).
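The following sketch models thin provisioning and trim against a shared pool. Volumes advertise more capacity than the pool physically holds, blocks are allocated only on first write, and trimming a block returns it to the pool. The `ThinVolume` class is hypothetical and counts blocks rather than bytes.

```python
class ThinVolume:
    """A volume that advertises a large size but backs only blocks that were written."""

    def __init__(self, advertised_blocks, pool):
        self.advertised_blocks = advertised_blocks
        self.pool = pool        # shared physical pool, for example {"free": 10}
        self.mapped = set()     # logical blocks that currently have physical backing

    def write(self, block):
        if not 0 <= block < self.advertised_blocks:
            raise IndexError("block outside the advertised size")
        if block not in self.mapped:
            if self.pool["free"] == 0:
                raise RuntimeError("pool exhausted")
            self.pool["free"] -= 1      # allocate just in time (thin provisioning)
            self.mapped.add(block)

    def trim(self, block):
        if block in self.mapped:        # reclaim capacity that is no longer needed
            self.mapped.remove(block)
            self.pool["free"] += 1


pool = {"free": 10}
a, b = ThinVolume(100, pool), ThinVolume(100, pool)   # 200 blocks advertised, 10 real
a.write(0)
a.write(1)
b.write(5)
a.trim(1)
print(pool["free"])
```

The `write` method shows the failure mode to plan for. When overcommitted volumes together consume the pool, writes fail, so thinly provisioned pools need capacity monitoring.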

2.5.3 Volume Cloning

Volume cloning is another common practice in virtualization environments. Volume cloning can be used for host and virtual machine volumes to dramatically improve provisioning times.

2.5.4 Volume Snapshot

SAN volume snapshots are a common method of providing a point-in-time, instantaneous backup of a SAN volume or LUN. These snapshots are typically block-level, and they only utilize storage capacity as blocks change on the originating volume. However, Windows Server does not control this behavior—it varies by storage array vendor.
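Snapshot behavior varies by vendor, so the following is only a sketch of one common approach, copy-on-write. A snapshot starts empty and saves an original block the first time that block is overwritten, which is why it consumes capacity only as blocks change. The `Volume` class is hypothetical. Some arrays use redirect-on-write instead.

```python
class Volume:
    """Block volume with copy-on-write snapshots."""

    def __init__(self, blocks):
        self.blocks = list(blocks)
        self.snapshots = []

    def snapshot(self):
        snap = {}                   # original blocks saved so far; starts empty
        self.snapshots.append(snap)
        return snap

    def write(self, index, data):
        for snap in self.snapshots:
            # Preserve the original block the first time it changes after the snapshot.
            snap.setdefault(index, self.blocks[index])
        self.blocks[index] = data

    def read_snapshot(self, snap, index):
        """Unchanged blocks are read from the live volume."""
        return snap.get(index, self.blocks[index])


vol = Volume(["A", "B", "C"])
snap = vol.snapshot()
vol.write(1, "X")
print(vol.blocks, [vol.read_snapshot(snap, i) for i in range(3)], len(snap))
```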

2.5.5 Storage Tiering

Storage tiering physically partitions data into multiple distinct classes such as price or performance. Data can be dynamically moved among classes in a tiered storage implementation, based on access, activity, or other considerations.

Storage tiering normally combines different types of disks that are used for different data types (for example, production, non-production, or backups).

2.6 Storage Management and Automation

Windows Server 2012 R2 provides a unified interface for managing storage. The storage interface provides capabilities for advanced management of storage, in addition to a core set of defined WMI and Windows PowerShell interfaces.

The interface uses WMI for management of physical and virtual storage, including non-Microsoft intelligent storage subsystems, and it provides a rich experience for IT professionals and developers by using Windows PowerShell scripting to help make a diverse set of solutions available.

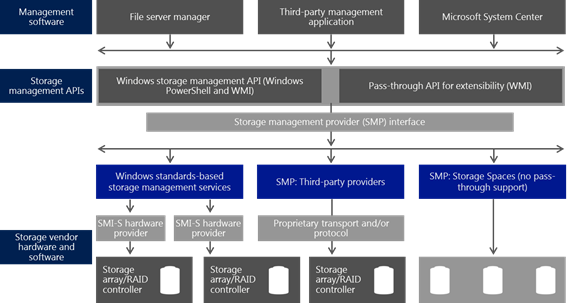

Management applications can use a single Windows API to manage storage types by using Storage Management Providers (SMPs) or standards-based protocols such as the Storage Management Initiative Specification (SMI-S). The WMI-based interface provides a single mechanism through which to manage all storage, including non-Microsoft intelligent storage subsystems and virtualized local storage (known as Storage Spaces).

Figure 6 shows the unified storage management architecture.

Figure 6 Unified storage management architecture

2.6.1 Windows Offloaded Data Transfers (ODX)

Offloaded data transfers (ODX) is a feature in the storage stack in Windows Server 2012 R2. When used with offload-capable SAN storage hardware, ODX lets a storage device perform a file copy operation without the main processor of the server running Hyper-V reading the content from one storage place and writing it to another.

ODX enables rapid provisioning and migration of virtual machines, and it provides significantly faster transfers of large files, such as database or video files. By offloading the file transfer to the storage array, ODX minimizes latencies, takes full advantage of array throughput, and reduces host resource usage such as central processing unit (CPU) and network consumption. File transfers are automatically and transparently offloaded when you move or copy files. No administrator setup or intervention is necessary.
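ODX is built on token-based copy offload. The host asks the source array for a token that represents the data (the SCSI POPULATE TOKEN command), then asks the destination to write using that token (WRITE USING TOKEN), and the array moves the data internally. The following Python sketch models that flow with a hypothetical in-memory `Array` class. It shows why host data-path traffic drops to zero for offloaded copies. It does not model token expiry or cross-array copies.

```python
import secrets


class Array:
    """Toy storage array that supports token-based offloaded copy."""

    def __init__(self):
        self.luns = {}        # LUN name -> bytearray
        self.tokens = {}      # token -> (lun, offset, length)
        self.host_bytes = 0   # bytes that crossed the host data path

    def host_read(self, lun, offset, length):
        self.host_bytes += length
        return bytes(self.luns[lun][offset:offset + length])

    def host_write(self, lun, offset, data):
        self.host_bytes += len(data)
        self.luns[lun][offset:offset + len(data)] = data

    def populate_token(self, lun, offset, length):
        token = secrets.token_hex(16)   # opaque handle that represents the data
        self.tokens[token] = (lun, offset, length)
        return token

    def write_using_token(self, token, lun, offset):
        src_lun, src_off, length = self.tokens.pop(token)
        # The data moves inside the array; nothing crosses the host data path.
        self.luns[lun][offset:offset + length] = self.luns[src_lun][src_off:src_off + length]


def copy(array, src, dst, length, offload):
    if offload:
        token = array.populate_token(src, 0, length)
        array.write_using_token(token, dst, 0)
    else:
        array.host_write(dst, 0, array.host_read(src, 0, length))


arr = Array()
arr.luns = {"src": bytearray(b"vhd-data" * 4), "dst": bytearray(32)}
copy(arr, "src", "dst", 32, offload=True)
print(arr.luns["dst"] == arr.luns["src"], arr.host_bytes)
```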

3 Public Cloud

This section discusses Microsoft Azure public cloud related options and their capabilities. The primary focus is on Microsoft Azure Infrastructure Services, which provides support for running virtual machines in a shared, public cloud environment.

3.1 Public Cloud Storage Types and Accounts

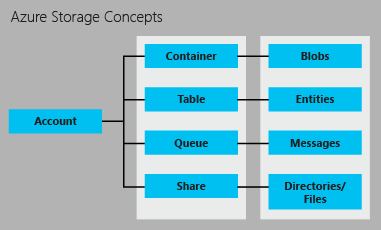

The Azure Storage services include the following types of storage:

- Blob storage stores file data. A blob can be any type of text or binary data, such as a document, media file, or application installer.

- Table storage stores structured datasets. Table storage is a NoSQL key-attribute data store, which allows for rapid development and fast access to large quantities of data.

- Queue storage provides reliable messaging for workflow processing and for communication between components of cloud services.

- File storage offers shared storage for applications using the SMB 2.1 protocol. Azure virtual machines and cloud services can share file data across application components via mounted shares, and on-premises applications can access file data in a share via the File service REST API.

The Blob and File storage options are of primary interest for IaaS scenarios.

Blob, Table, and Queue storage are included in every storage account, while File storage is available by request via the Azure Preview page.

The storage account is a unique namespace that gives you access to Azure Storage. Each storage account can contain up to 500 TB of combined blob, queue, table, and file data. See Azure Storage Scalability and Performance Targets for details about Azure storage account capacity.

Figure 7 shows the relationships between the Azure storage resources:

Figure 7: Azure storage relationships

Note:

Before you can create a storage account, you must have an Azure subscription, which is a plan that gives you access to a wide array of Azure cloud services. You can create up to 100 uniquely named storage accounts with a single subscription. See Storage Pricing Details for information on volume pricing.
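The capacity limits above translate into a simple sizing calculation. The following hypothetical sketch uses the per-account (500 TB) and per-subscription (100 accounts) figures stated in this section. It sizes by capacity alone. Check the Azure Storage Scalability and Performance Targets as well, because performance targets can also affect how many accounts a design needs.

```python
import math

ACCOUNT_CAPACITY_TB = 500        # per storage account, as stated above
ACCOUNTS_PER_SUBSCRIPTION = 100  # per subscription, as stated above


def accounts_needed(total_tb):
    """Minimum number of storage accounts for a given amount of data, by capacity only."""
    needed = math.ceil(total_tb / ACCOUNT_CAPACITY_TB)
    if needed > ACCOUNTS_PER_SUBSCRIPTION:
        raise ValueError("more storage accounts than one subscription allows")
    return needed


print(accounts_needed(1200))
```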

3.1.1 Storage Accounts

Storage costs are based on four factors:

- Storage capacity

- Replication scheme

- Storage transactions

- Data egress

Storage capacity refers to how much of your storage account allotment you are using to store data. The cost of simply storing your data is determined by how much data you are storing, and how it is replicated.

Replication scheme refers to the option to replicate data outside of the Azure region in which that data resides.

Transactions refer to all read and write operations to Azure Storage.

Data egress refers to data transferred out of an Azure region. When the data in your storage account is accessed by an application that is not running in the same region, whether that application is a cloud service or some other type of application, then you are charged for data egress.
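The four cost factors combine additively. The following sketch estimates a monthly bill. Every rate is a caller-supplied parameter, because actual prices change and are tiered by volume (see the Storage Pricing Details and Data Transfers Pricing Details pages). The values in the example call are placeholders, not Azure prices. The capacity rate is assumed to already reflect the chosen replication scheme, and transactions are assumed to be priced per 100,000.

```python
def monthly_storage_cost(gb_stored, transactions, egress_gb, same_region,
                         rate_per_gb, rate_per_100k_transactions, egress_rate_per_gb):
    """Estimate a monthly bill from capacity, replication, transactions, and egress."""
    capacity = gb_stored * rate_per_gb      # rate assumed to include the replication scheme
    tx = transactions / 100_000 * rate_per_100k_transactions
    # Egress is charged only when data leaves the region where it is stored.
    egress = 0.0 if same_region else egress_gb * egress_rate_per_gb
    return round(capacity + tx + egress, 2)


# Placeholder rates for illustration only.
print(monthly_storage_cost(1000, 2_000_000, 100, same_region=False,
                           rate_per_gb=0.05, rate_per_100k_transactions=0.01,
                           egress_rate_per_gb=0.10))
```

Setting `same_region=True` shows the effect of co-locating data and compute: the egress term drops out.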

Cost Considerations:

For Azure services, you can group your data and services in the same data centers to reduce or eliminate data egress charges. The Storage Pricing Details page provides detailed pricing information for storage capacity, replication, and transactions. The Data Transfers Pricing Details page provides detailed pricing information for data egress.

3.1.1.1 Storage Account Security

When you create a storage account, Azure generates two 512-bit storage access keys, which are used for authentication when the storage account is accessed. By providing two storage access keys, Azure enables you to regenerate the keys with no interruption to your storage service or access to that service.

We recommend that you avoid sharing your storage account access keys with anyone else. If there is a reason to believe that your account has been compromised, you can regenerate your access keys from within the portal.

To permit access to storage resources without giving out your access keys, you can use a shared access signature. A shared access signature provides access to a resource in your account for an interval that you define and with the permissions that you specify.
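A shared access signature works because the service can recompute it. The signature is an HMAC-SHA256 of the granted permissions, expiry, and resource, keyed with the storage access key, so the key itself is never handed out. The following Python sketch shows that principle with a simplified string-to-sign. The field layout, the function names, and the resource path are assumptions. Real SAS tokens use a more detailed, versioned format defined by the Azure Storage REST API.

```python
import base64
import hashlib
import hmac
import os
from datetime import datetime, timedelta, timezone


def sign(key_b64, resource, permissions, expiry):
    """Simplified SAS-style signature; NOT the exact Azure string-to-sign format."""
    string_to_sign = "\n".join([permissions, expiry.strftime("%Y-%m-%dT%H:%M:%SZ"), resource])
    mac = hmac.new(base64.b64decode(key_b64), string_to_sign.encode("utf-8"), hashlib.sha256)
    return base64.b64encode(mac.digest()).decode("ascii")


def check(key_b64, resource, permissions, expiry, signature, wanted, now):
    """Service side: recompute the signature, then enforce expiry and permissions."""
    expected = sign(key_b64, resource, permissions, expiry)
    return hmac.compare_digest(expected, signature) and now < expiry and wanted in permissions


key = base64.b64encode(os.urandom(64)).decode("ascii")   # a 512-bit key, Base64-encoded
expiry = datetime(2014, 10, 1, 12, 0, tzinfo=timezone.utc)
sig = sign(key, "/myaccount/vhds/disk1.vhd", "r", expiry)  # hypothetical resource
print(check(key, "/myaccount/vhds/disk1.vhd", "r", expiry, sig, "r", expiry - timedelta(minutes=5)))
```

One consequence of this design is that regenerating the key that signed a signature invalidates that signature, because the recomputed HMAC no longer matches.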

3.1.1.2 Storage account metrics and logging

You can configure minimal or verbose metrics in the monitoring settings for your storage account.

- Minimal metrics collects metrics on data such as ingress/egress, availability, latency, and success percentages, which are aggregated for the Blob, Table, and Queue services.

- Verbose metrics collects operations-level detail in addition to service-level aggregates for the same metrics. Verbose metrics enable closer analysis of issues that occur during application operations.

For the full list of available metrics, see Storage Analytics Metrics Table Schema. For more information about storage monitoring, see About Storage Analytics Metrics.

Logging is a configurable feature of storage accounts that enables logging of requests to read, write, and delete blobs, tables, and queues. You configure logging in the Azure Management Portal, but you can't view the logs in the Management Portal. The logs are stored and accessed in the storage account. For more information, see Storage Analytics Overview.

3.1.1.3 Affinity groups for co-locating Storage and other services

An affinity group is a geographic grouping of Azure services and VMs with an Azure storage account. An affinity group can improve service performance by locating compute workloads in the same data center or near the target user audience.

Cost Considerations:

No billing charges are incurred for egress traffic when data in a storage account is accessed from another service that is part of the same affinity group.

3.1.2 Blob Storage

If you have large amounts of unstructured data to store in the cloud, Blob storage lets you store content such as:

- Documents

- Social data such as photos, videos, music, and blogs

- Backups of files, computers, databases, and devices

- Images and text for web applications

- Configuration data for cloud applications

- Big data, such as logs and other large datasets

Every blob exists in a container. Containers provide a way to assign security policies to groups of objects. A storage account can contain any number of containers, and a container can contain any number of blobs, up to the 500 TB capacity limit of the storage account.

Note:

Containers represent a single level of folder-type storage. You cannot create “sub” containers.
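Although containers are single-level, blob names may contain `/` characters, and many tools present those names as a virtual folder hierarchy. The following sketch splits a blob URL into account, container, and blob name to show that everything after the container belongs to the blob name. The account name and path are hypothetical, and the sketch assumes the standard `<account>.blob.core.windows.net` endpoint.

```python
from urllib.parse import unquote, urlsplit


def parse_blob_url(url):
    """Split a blob URL into (account, container, blob_name)."""
    parts = urlsplit(url)
    account = parts.hostname.split(".")[0]
    # Only the first path segment is the container; the rest, slashes included,
    # is the blob name (a "virtual folder" path, not a sub-container).
    container, _, blob = parts.path.lstrip("/").partition("/")
    return account, container, unquote(blob)


print(parse_blob_url("https://myaccount.blob.core.windows.net/vhds/2014/web01/os.vhd"))
```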

Blob storage offers two types of blobs, block blobs and page blobs (disks). Block blobs are optimized for streaming and storing cloud objects, such as documents, media files, and backups. A block blob can be up to 200 GB in size.

Page blobs are optimized for representing virtual machine .vhd files. They support random writes and may be up to 1 TB in size. An Azure virtual machine attached disk is a VHD file stored as a page blob.
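A block blob is uploaded as individually sent blocks (the Put Block operation), which are then committed as an ordered list (Put Block List). The following sketch plans such an upload. It assumes the service limits that applied at the time of writing, 4 MB per block and 50,000 blocks per blob. Those limits explain the roughly 200 GB maximum: 50,000 × 4 MB is 209,715,200,000 bytes. The function is hypothetical and makes no network calls.

```python
import base64

MAX_BLOCK_BYTES = 4 * 1024 * 1024   # assumed per-block limit (4 MB)
MAX_BLOCKS = 50_000                 # assumed blocks per committed block blob


def plan_blocks(total_bytes, block_bytes=MAX_BLOCK_BYTES):
    """Return (block_id, offset, length) tuples for a block blob upload."""
    count = -(-total_bytes // block_bytes)      # ceiling division
    if count > MAX_BLOCKS:
        raise ValueError("too large for a single block blob")
    plan = []
    for n in range(count):
        # Block IDs are Base64 strings and must all have the same length within a blob.
        block_id = base64.b64encode(f"{n:06d}".encode("ascii")).decode("ascii")
        offset = n * block_bytes
        plan.append((block_id, offset, min(block_bytes, total_bytes - offset)))
    return plan


for block_id, offset, length in plan_blocks(10 * 1024 * 1024):
    print(block_id, offset, length)
```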

Tip:

For very large data sets where network constraints make uploading or downloading data to Blob storage over the wire unrealistic, you can ship a hard drive to Microsoft to import or export data directly from the data center using the Azure Import/Export Service. You can also copy blob data within your storage account or across storage accounts.

For more information about Blob storage, please see An Introduction to Windows Azure BLOB Storage.

3.1.3 Table Storage

Table storage offers highly available, massively scalable storage, so that your application can automatically scale to meet user demand. Table storage is Microsoft’s NoSQL key/attribute store – it has a schema-less design, making it different from traditional relational databases. Access to data is fast and cost-effective for all kinds of applications. Table storage is typically significantly lower in cost than traditional SQL for similar volumes of data.

Note:

Table storage is not typically used in Microsoft IaaS infrastructures, but might be used in mixed IaaS and PaaS scenarios.

For more information on Microsoft Azure Table Storage, please see Microsoft Azure Table Storage – Not Your Father’s Database.

3.1.4 Queue Storage

Queue storage provides a messaging solution for asynchronous communication between application components, whether they are running in the cloud, on the desktop, on an on-premises server, or on a mobile device. Queue storage also supports managing asynchronous tasks and building process workflows.

Note:

Queue storage is not typically used in Microsoft IaaS infrastructures, but might be used in mixed IaaS and PaaS scenarios.

For more information about Microsoft Azure Queue storage, please see Azure Queues and Service Bus Queues – Compared and Contrasted.

3.1.5 SMB File Storage

Azure File storage offers cloud-based file shares.

Applications running in Azure virtual machines or cloud services can mount a File storage share to access file data, just as a desktop application would mount a typical SMB share. Any number of application components can mount and access the File storage share simultaneously.

A File storage share is a standard SMB 2.1 file share. Applications running in Azure can access data in the share via the OS file system. Developers can reuse existing code when migrating applications, and IT Pros can create, mount, and manage File storage shares as part of administering Azure applications.

On-premises applications must call the File storage REST API to access data in a file share. As a result, an enterprise can migrate some legacy applications to Azure while continuing to run others within its own organization, and both can work with the same share data.

Note:

Mounting a file share is only possible for applications running in Azure; an on-premises application may only access the file share via the REST API.
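From inside an Azure virtual machine, a share is typically mounted with the Windows `net use` command against the share's UNC path. The helper below only builds that command string. The `<account>.file.core.windows.net` endpoint and the `/u:<account> <key>` credential form reflect the 2014 File service preview and should be checked against current documentation. The function names are hypothetical.

```python
def file_share_unc(account: str, share: str) -> str:
    """Build the UNC path of an Azure File share (endpoint format assumed from 2014 docs)."""
    return "\\\\{}.file.core.windows.net\\{}".format(account, share)


def net_use_command(drive: str, account: str, share: str,
                    key: str = "<storage-account-key>") -> str:
    """Hypothetical helper returning the Windows 'net use' command that mounts a share.

    Run the result inside an Azure VM; on-premises clients must use the REST API instead.
    The key defaults to a placeholder so no secret is embedded in code.
    """
    return "net use {}: {} /u:{} {}".format(drive, file_share_unc(account, share), account, key)


print(net_use_command("z", "contosostore", "tools"))
# net use z: \\contosostore.file.core.windows.net\tools /u:contosostore <storage-account-key>
```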

Distributed applications can also use Azure File storage to store and share application data as well as development and testing tools.

For example, an application may store configuration files and diagnostic data such as logs, metrics, and crash dumps in a File storage share so that they are available to multiple virtual machines or roles. Developers and administrators can store utilities that they need to build or manage an application in a File storage share that is available to all components, rather than installing them on every virtual machine or role instance.

For more information on Microsoft Azure File Storage, please see Introducing Microsoft Azure File Service.

3.2 Highly Available Storage

Data in your storage account is replicated to ensure that it meets the Azure Storage SLA even in the face of transient hardware failures. Azure Storage is deployed in 15 regions around the world and also includes support for replicating data between regions. You have several options for replicating the data in your storage account:

- Locally redundant storage (LRS) maintains three copies of your data, all within a single facility in a single region. LRS protects your data from normal hardware failures, but not from the failure of that facility. LRS is offered at a discount relative to the other options. For maximum durability, we recommend geo-redundant storage, described below.

- Zone-redundant storage (ZRS) maintains three copies of your data, replicated across two to three facilities either within a single region or across two regions. ZRS ensures that your data is durable within a single region and provides a higher level of durability than LRS; however, for maximum durability, we recommend that you use geo-redundant storage, described below.

Note:

ZRS is currently available only for block blobs. Once you have created a storage account with zone-redundant replication, you cannot convert it to any other type of replication, nor can you convert an account that uses another type of replication to ZRS.

- Geo-redundant storage (GRS) is enabled for your storage account by default when you create it. GRS maintains six copies of your data. With GRS, your data is replicated three times within the primary region, and is also replicated three times in a secondary region hundreds of miles away from the primary region, providing the highest level of durability. In the event of a failure at the primary region, Azure Storage will fail over to the secondary region. GRS is recommended over ZRS or LRS for maximum durability.

- Read-access geo-redundant storage (RA-GRS) provides all of the benefits of geo-redundant storage noted above, and also allows read access to data at the secondary region if the primary region becomes unavailable. Read-access geo-redundant storage is recommended for maximum availability in addition to durability.
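The trade-offs among the four options can be captured in a small lookup table. This sketch encodes only what the list above states: copy counts, facility and region spread, secondary read access, and the block-blob-only restriction on ZRS. The names `OPTIONS` and `least_protective_option` are hypothetical, and the ordering from least to most protection follows the text rather than any published price list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReplicationOption:
    copies: int               # total copies of the data
    multi_facility: bool      # copies spread over more than one facility
    geo_replicated: bool      # copies in a secondary region hundreds of miles away
    readable_secondary: bool  # read access to the secondary region
    block_blobs_only: bool = False


# Encodes the descriptions above (October 2014 feature set).
OPTIONS = {
    "LRS": ReplicationOption(3, False, False, False),
    "ZRS": ReplicationOption(3, True, False, False, block_blobs_only=True),
    "GRS": ReplicationOption(6, True, True, False),
    "RA-GRS": ReplicationOption(6, True, True, True),
}

# Least to most protection, following the order used in the text.
ORDER = ["LRS", "ZRS", "GRS", "RA-GRS"]


def least_protective_option(survive_facility_loss=False, survive_region_loss=False,
                            read_from_secondary=False, uses_page_blobs=False) -> str:
    """Hypothetical helper: first option in ORDER that meets every requirement."""
    for name in ORDER:
        opt = OPTIONS[name]
        if survive_facility_loss and not opt.multi_facility:
            continue
        if survive_region_loss and not opt.geo_replicated:
            continue
        if read_from_secondary and not opt.readable_secondary:
            continue
        if uses_page_blobs and opt.block_blobs_only:  # ZRS cannot hold VM disks
            continue
        return name
    raise ValueError("no replication option meets these requirements")


print(least_protective_option(survive_facility_loss=True))                        # ZRS
print(least_protective_option(survive_facility_loss=True, uses_page_blobs=True))  # GRS
```

For example, VM disks are page blobs, so a requirement to survive the loss of a facility skips ZRS and lands on GRS.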

3.3 Storage Scalability and Performance

The scalability targets for a storage account depend on the region where it was created. The following sections outline scalability targets for US, European, and Asian regions.

For more information on the evolving nature of these storage scalability and performance targets, and for information on other regions, please see Azure Storage Scalability and Performance Targets.

The following table describes the scalability targets for storage accounts in US regions, based on the level of redundancy chosen:

| Total Account Capacity | Total Request Rate (assuming 1 KB object size) | Total Bandwidth for a Geo-Redundant Storage Account | Total Bandwidth for a Locally Redundant Storage Account |
|---|---|---|---|
| 500 TB | Up to 20,000 entities or messages per second | Ingress: up to 10 gigabits per second; Egress: up to 20 gigabits per second | Ingress: up to 20 gigabits per second; Egress: up to 30 gigabits per second |

Ingress refers to all data (requests) being sent to a storage account.

Egress refers to all data (responses) being received from a storage account.
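These targets translate into a simple sizing rule: a workload needs at least as many storage accounts as its most constraining dimension requires. The sketch below assumes the load can be spread evenly across accounts and treats the US-region targets above as hard limits. `min_storage_accounts` is a hypothetical helper.

```python
import math

# US-region targets per storage account, from the table above.
ACCOUNT_CAPACITY_TB = 500
ACCOUNT_REQUESTS_PER_SEC = 20_000
INGRESS_GBPS = {"GRS": 10, "LRS": 20}
EGRESS_GBPS = {"GRS": 20, "LRS": 30}


def min_storage_accounts(capacity_tb: float, requests_per_sec: float,
                         ingress_gbps: float, egress_gbps: float,
                         redundancy: str = "GRS") -> int:
    """Hypothetical sizing helper: accounts needed if load spreads evenly."""
    return max(
        1,
        math.ceil(capacity_tb / ACCOUNT_CAPACITY_TB),
        math.ceil(requests_per_sec / ACCOUNT_REQUESTS_PER_SEC),
        math.ceil(ingress_gbps / INGRESS_GBPS[redundancy]),
        math.ceil(egress_gbps / EGRESS_GBPS[redundancy]),
    )


# Capacity is the binding constraint here: ceil(1200 / 500) = 3 accounts.
print(min_storage_accounts(1200, 30_000, 5, 5))  # 3
```

Because locally redundant accounts have higher bandwidth targets, the same ingress requirement can need fewer LRS accounts than GRS accounts.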

The following table describes the performance targets for a single partition for each service:

| Target Throughput for Single Blob | Target Throughput for Single Queue (1 KB messages) | Target Throughput for Single Table Partition (1 KB entities) |
|---|---|---|
| Up to 60 MB per second, or up to 500 requests per second | Up to 2,000 messages per second | Up to 2,000 entities per second |
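Each VM disk is a single page blob, so the single-blob and per-account targets together bound how many busy disks one account can host. This back-of-the-envelope sketch assumes every disk runs at its 500 requests per second target and treats 1 GB as 1024 MB. The helper names are hypothetical.

```python
# Targets from the tables above.
ACCOUNT_REQUESTS_PER_SEC = 20_000
BLOB_REQUESTS_PER_SEC = 500
BLOB_MB_PER_SEC = 60


def max_busy_disks_per_account() -> int:
    """Disks (page blobs) that can each run at the per-blob target before the account target is hit."""
    return ACCOUNT_REQUESTS_PER_SEC // BLOB_REQUESTS_PER_SEC


def hours_to_read_blob(size_gb: float) -> float:
    """Time to read one blob sequentially at the single-blob target (assumes 1 GB = 1024 MB)."""
    return size_gb * 1024 / BLOB_MB_PER_SEC / 3600


print(max_busy_disks_per_account())       # 40
print(round(hours_to_read_blob(1024), 2))  # 4.85
```

Dividing 20,000 requests per second by 500 gives roughly 40 fully busy disks per storage account. Reading a 1 TB blob sequentially at 60 MB per second takes close to five hours.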

High Performance Solid State Disks

In addition to the blob, queue, and table storage options, you can use solid-state disks (SSDs). The D-Series is a set of VM sizes for Microsoft Azure Virtual Machines and Web/Worker Roles that offers up to 112 GB of memory, processors approximately 60 percent faster than those in the A-Series sizes, and up to 800 GB of local SSD storage. These sizes are well suited to workloads that need more processing power and fast local disk I/O, and they are available for both Virtual Machines and Cloud Services.

The temporary drive (D:\ on Windows, /mnt or /mnt/resource on Linux) uses local SSDs for the D-Series virtual machines. The SSD local temporary disk is best used for workloads that replicate across multiple instances, like MongoDB, or that can leverage this high I/O disk for a local and temporary cache, like SQL Server 2014’s Buffer Pool Extensions.

Note:

These SSD temporary drives are not guaranteed to be persistent. Thus, while physical hardware failure is rare, the data on this disk may be lost when it occurs.

Buffer Pool Extensions (BPE), introduced in SQL Server 2014, let you extend the SQL Server Database Engine buffer pool onto local SSDs, which can significantly improve read latency for database workloads. The buffer pool is a global memory resource used to cache data pages for more efficient reads. Read workloads whose working set does not fit in memory benefit most from configuring BPE.

4 Hybrid Cloud

4.1 Storage Appliance

One of the biggest challenges facing enterprise storage customers is massive data growth and the amount of storage management work required to keep up with it. StorSimple hybrid storage arrays enable you to manage data growth and reduce the rate at which you acquire new storage.

Some of the key features of StorSimple include:

- Performance & Scalability – Massively scalable hybrid cloud storage with larger on-premises capacity for SSD and HDD

- Data Mobility – Access to enterprise data in Microsoft Azure through the StorSimple Virtual Appliance

- Consolidated Management – Azure portal to manage geographically dispersed physical arrays and virtual appliances, data protection policies, and data mobility

Manage data growth and lower storage costs:

- Cloud storage is used to offload inactive data from on-premises storage and to store backup and archive data

- Inline deduplication and compression reduce storage growth rates and increase storage utilization

- Solid-state drives (SSDs) deliver low-cost input/output operations per second (IOPS)

Streamline storage and management:

- Primary, backup, snapshot, archive and offsite storage are converged into a hybrid cloud storage solution

- Storage functions are controlled centrally from an Azure management portal

- Automated cloud snapshots replace costly remote replication and time-consuming tape management

Improve disaster recovery and compliance

Migrate and copy data through the cloud:

- StorSimple Virtual Appliance makes enterprise data available to VMs running in Azure for dev/test, disaster recovery and DR testing, and other cloud applications

- Data uploaded to the cloud can be accessed from other sites for recovery and data migrations

For more information on StorSimple and how it works, please see the StorSimple Resources page for a number of informative white papers.

[1] Using the following formula, Windows Server 2012 supports a total of 261,120 LUNs per HBA: (255 LUN IDs per target) × (128 targets per bus) × (8 buses per adapter).
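The footnote's arithmetic can be checked directly. The constant names are illustrative.

```python
# Values from the footnote above.
LUN_IDS_PER_TARGET = 255
TARGETS_PER_BUS = 128
BUSES_PER_ADAPTER = 8

luns_per_hba = LUN_IDS_PER_TARGET * TARGETS_PER_BUS * BUSES_PER_ADAPTER
print(f"{luns_per_hba:,}")  # 261,120
```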