Evaluating Windows Server and System Center on a Laptop (or two, or three) – Failover Clustering (part 1)

How to build a Failover Cluster on a single laptop

Continuing on from my Introduction and Fabric posts, we’re now going to start playing with (learning) Failover Clustering for our evaluation of Windows Server and System Center. Note: This is NOT best practice deployment advice. This is “notes from the field”. This is how to get by with what we have.

And if I only had a single laptop and needed to learn about Failover Clustering, this is what I’d do:

If you’ve been following along, and have completed all the steps in my Introduction post and Fabric post, you have a laptop (or two, or three) dual booting into Windows Server with the Hyper-V role enabled. They are domain joined and have internet access.

A brief history lesson in Microsoft’s Failover Clustering

If you know what “Wolfpack” was, then feel free to skip this part (you’re as old as me).

- Windows NT Server 4.0 Enterprise Edition was the first version of Windows Server to include the Microsoft Cluster Service (MSCS) feature. Its development/beta codename was “Wolfpack”. It gave us a two-node failover cluster that provided high availability with (almost) seamless failover of mission-critical workloads.

- Windows 2000 Advanced Server gave us an improved two-node cluster and Windows 2000 Datacenter Server gave us a four-node cluster. Datacenter edition was only available directly from OEMs, so you had to buy the entire four-node solution in one go (hardware and all). If you wanted support from Microsoft, you were forced to use hardware that was on the Hardware Compatibility List (HCL), specifically for MSCS.

- Windows Server 2003 Enterprise introduced eight-node clustering – as did Windows Server 2003 Datacenter.

- Windows Server 2003 R2 didn’t add anything to MSCS.

- Windows Server 2008 Enterprise and Datacenter editions introduced the Cluster Validation Wizard which let you (the system administrator) run all the hardware and software tests that Microsoft would have used to qualify the solution for support. If your tests passed, you had a fully supported configuration. The Microsoft Cluster Service (MSCS) was now known as Failover Clustering.

- Windows Server 2008 R2 didn’t add anything to Failover Clustering.

- Windows Server 2012 introduced 64-node Failover Clusters in both the Standard and Datacenter editions.

- Windows Server 2012 R2 doesn’t change this limit: Failover Clusters still support up to 64 nodes.

High Availability (unplanned downtime) of virtual machines was introduced with Windows Server 2003 R2 running Virtual Server 2005 SP1 and improved with Windows Server 2008 and Hyper-V.

Planned downtime of the hosts was initially handled by Quick Migration of the virtual machines (save, move, restore), which arrived with Virtual Server and the first release of Hyper-V. Windows Server 2008 R2 improved on this with Live Migration (copy with no downtime using shared storage). Windows Server 2012 gave us shared nothing live migration (copy a running VM onto another host with just a network cable in common). Windows Server 2012 R2 improves Live Migration further still.

Really high level overview of Failover Clustering

This (picture) is Failover Clustering at its simplest. Both nodes have access to some Shared Storage, are communicating with each other over a Heart Beat network and give access to the clustered resources to the clients over the LAN.

To get your head around this, think Active-Passive (one node doing the work, with the other one waiting). Node 1 is running the mission critical workload. The data is on the shared storage. The workload publishes its name and IP address onto the LAN. The clients resolve the name to the IP address and get access to the data (via Node 1).

In the event of an unplanned failure of Node 1 (pulling the power cord), the remaining node detects the failure (no heartbeat). Node 2 will take ownership of the Shared Storage, will spin up the workload and will publish the name and IP address onto the network. Clients continue to access the clustered resource via Node 2. This can take a short time as the workload needs to start on the surviving node.

Planned downtime is easier (scheduled maintenance of one of the nodes). We simply “move” the clustered resources to one node, perform the maintenance and move them back. This can have zero impact on the workload.
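On Windows Server 2012 and later, that “move” can be sketched in PowerShell with the FailoverClusters module. This is only a rough sketch under assumptions, not a procedure from this post: it assumes a cluster already exists and that the node being serviced is called Node1 (the name used in the build steps further down).

```powershell
# Assumes the Failover Clustering PowerShell tools are installed and
# that this is run on (or against) an existing cluster.
# 'Node1' is a placeholder for the node going down for maintenance.
Import-Module FailoverClusters

# Pause the node and drain its clustered roles onto the other node(s)
Suspend-ClusterNode -Name Node1 -Drain

# ...patch or reboot Node1 here...

# Put the node back into service and move its roles back straight away
Resume-ClusterNode -Name Node1 -Failback Immediate

# Check which node owns each clustered role
Get-ClusterGroup | Format-Table Name, OwnerNode, State
```

Draining is used here rather than moving each role by hand, because it leaves the cluster to decide where the roles should go.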

Each node can be actively doing more than one thing and be passively waiting for the others to fail (File & Print – SQL & Exchange, etc). In a two-node configuration, each node has to have enough resources to run all the workloads (to cope with a failure of the other node).

As you add more nodes into the solution, you “normally” end up with a hot standby node (a server doing nothing but waiting). And as we can now have up to 64 nodes in a cluster, we “normally” have multiple standby nodes.

Let’s build a Failover Cluster (on a single laptop)

Note: This is NOT best practice deployment advice. This is “notes from the field”. This is how to get by with what we have.

But also note that building a Failover Cluster using virtual machines is a best practice – they are known as Guest Clusters and address the issue of an operating system failure in one of the VMs and allow for scheduled maintenance (patching) of the guests.

So, for test & dev only (NOT for production):

The easiest way to do this is to use Windows Server 2012 R2, as it introduces a Shared VHDX feature. The other option is to use an iSCSI Target – I’ll mention this in part 2 of this post.

In production, each guest cluster node would be on a separate physical host and the shared VHDX file(s) on shared storage. We are going to “cheat” and get this working on a single laptop:

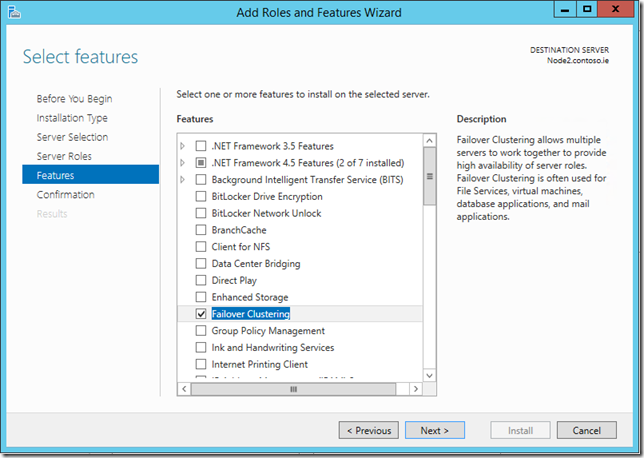

- Install the Failover Clustering feature on the host where you want to run the guest VMs

- This enables the Shared VHDX feature

- Create two (or more) domain-joined guest VMs and install the Failover Clustering feature

- If you took the advice in my Fabric post (and performed the Optional Step), you already have a VM template to get you started.
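If you prefer PowerShell to Server Manager, the feature installs above might look something like this. It is a sketch under assumptions: the guests are assumed to be named Node1 and Node2 (the names used later in this post), to be domain joined already, and to have PowerShell remoting enabled.

```powershell
# On the Hyper-V host: install the feature plus its management tools
Install-WindowsFeature -Name Failover-Clustering -IncludeManagementTools

# On each guest. Node1 and Node2 are assumed computer names, and
# PowerShell remoting is assumed to be reachable from where you run this.
Invoke-Command -ComputerName Node1, Node2 -ScriptBlock {
    Install-WindowsFeature -Name Failover-Clustering -IncludeManagementTools
}
```

You can just as easily log on to each guest and run the Install-WindowsFeature line locally.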

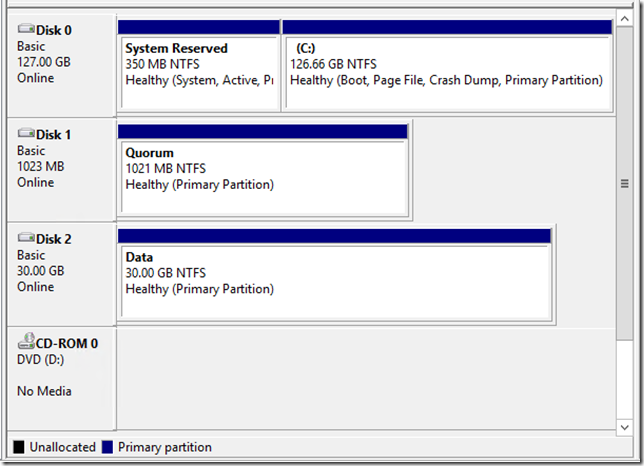

- Create two new VHDX files (these will be the shared cluster disks) – Quorum=1GB, Data=30GB

- Hyper-V Manager, New, Hard Disk

- Or PowerShell: New-VHD -Path D:\VMs\Quorum.vhdx -Dynamic -SizeBytes 1GB

- Manually attach the Shared Virtual Disk filter to the host

- FLTMC attach svhdxflt D: (where D: is the volume where the shared VHDX resides)

- This is ONLY needed because we are “cheating” – it will need to be done after every host reboot
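Putting those two steps together for both disks, a minimal sketch could look like this. The D:\VMs folder comes from the example above; the Data.vhdx file name is my assumption, so use whatever name you like.

```powershell
# Sizes follow the step above; Data.vhdx is an assumed file name
New-VHD -Path D:\VMs\Quorum.vhdx -Dynamic -SizeBytes 1GB
New-VHD -Path D:\VMs\Data.vhdx -Dynamic -SizeBytes 30GB

# Attach the shared VHDX filter to the volume that holds the files.
# Run from an elevated prompt, and again after every host reboot.
fltmc.exe attach svhdxflt D:
```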

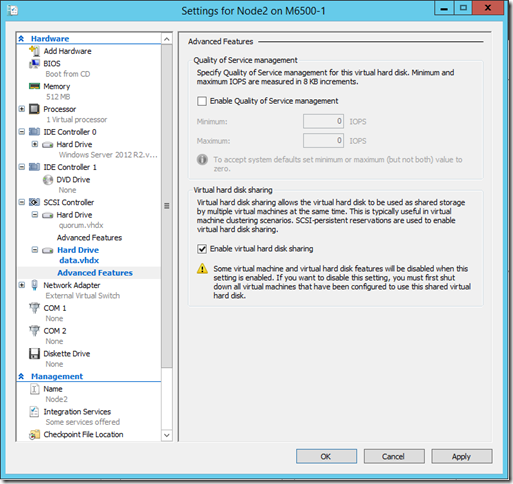

- In Hyper-V Manager add the Shared VHDX file(s) to the SCSI controller of the VMs

- NOTE: Before Applying changes, go into the Advanced Features of the VHDX file and check Enable virtual hard disk sharing

- Or with PowerShell:

- Add-VMHardDiskDrive -VMName Node2 -Path D:\VMs\Quorum.vhdx -ShareVirtualDisk
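Every shared disk has to be attached to every node, so the one-liner above can be looped. This is a sketch, assuming the VMs are called Node1 and Node2 and the second disk is D:\VMs\Data.vhdx (an assumed file name).

```powershell
# VM names and file paths are assumptions; adjust to your own setup
$nodes = 'Node1', 'Node2'
$disks = 'D:\VMs\Quorum.vhdx', 'D:\VMs\Data.vhdx'

foreach ($node in $nodes) {
    foreach ($disk in $disks) {
        # -ShareVirtualDisk is the Windows Server 2012 R2 switch that
        # enables virtual hard disk sharing on the attachment
        Add-VMHardDiskDrive -VMName $node -ControllerType SCSI -Path $disk -ShareVirtualDisk
    }
}

# Confirm what each VM now has attached
Get-VMHardDiskDrive -VMName $nodes | Format-Table VMName, ControllerType, Path
```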

On one of the nodes, go into Disk Management, bring the two disks online, initialize them, and then create NTFS volumes (don’t assign drive letters to them).
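The same disk preparation can be sketched with the Storage cmdlets instead of Disk Management. Treat this as a rough sketch: it assumes the only uninitialized (RAW) disks in the guest are the two shared ones, and the choice of GPT is mine, not something this post prescribes. Check the output of Get-Disk before running it.

```powershell
# Run inside ONE guest node only.
# Assumption: the only RAW (uninitialized) disks are the two shared disks.
$shared = Get-Disk | Where-Object { $_.PartitionStyle -eq 'RAW' }

foreach ($disk in $shared) {
    # Bring the disk online and make sure it is writable
    Set-Disk -Number $disk.Number -IsOffline $false
    Set-Disk -Number $disk.Number -IsReadOnly $false

    # GPT is an assumption here; MBR also works for disks this small
    Initialize-Disk -Number $disk.Number -PartitionStyle GPT

    # No -AssignDriveLetter or -DriveLetter, so no drive letter is requested
    New-Partition -DiskNumber $disk.Number -UseMaximumSize |
        Format-Volume -FileSystem NTFS -Confirm:$false
}
```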

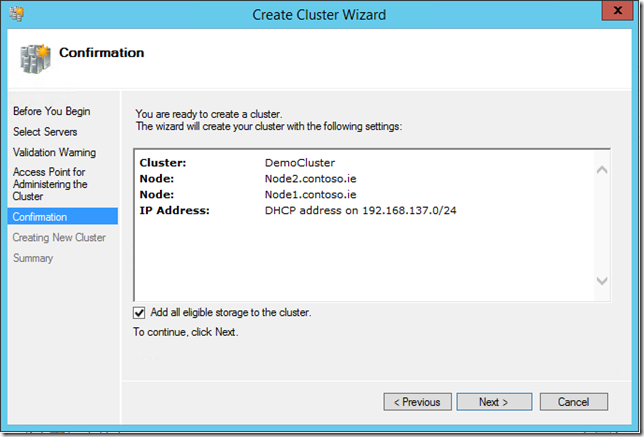

- In Failover Cluster Manager you can now Create a Cluster

- Select your nodes (Node1 & Node2)

- Say No to Validation (or Yes – just to see what it does)

- Give the Cluster a Name (DemoCluster)

- And optionally an IP address (if you’re not using DHCP)

- Leave the Add all eligible storage to the cluster checkbox ticked

- And press Next

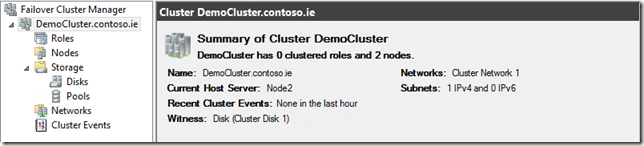

- In less than a minute your Failover Cluster is up and running
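The wizard steps above map roughly onto the following PowerShell. It is a sketch under assumptions: the node names Node1 and Node2 and the cluster name DemoCluster come from the steps above, while the IP address 192.168.1.50 is made up for illustration.

```powershell
# Optional: run the validation tests first (the "Yes" answer in the wizard)
Test-Cluster -Node Node1, Node2

# Create the cluster. 192.168.1.50 is a made-up address; leave out
# -StaticAddress if the cluster network hands out addresses via DHCP.
New-Cluster -Name DemoCluster -Node Node1, Node2 -StaticAddress 192.168.1.50

# Eligible storage is added by default (the -NoStorage switch skips it).
# Check the nodes and the resources the cluster picked up:
Get-ClusterNode -Cluster DemoCluster
Get-ClusterResource -Cluster DemoCluster
```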

I’ll continue this post in a Part 2 later on.

Meantime, here’s some related “bedtime reading”:

Deploy a Guest Cluster Using a Shared Virtual Hard Disk

Enjoy

@DaveNorthey

Part 1 in this series is here: Introduction

Part 2 in this series is here: Fabric

Part 3 in this series is here: Failover Clustering (part 1)

Part 4 in this series is here: Failover Clustering (part 2)