Automation: Executing a PowerShell v3 (Parallel Execution) Script in System Center Orchestrator 2012

Hello readers! I have a post that should interest those of you who have tried to execute PowerShell v3 through Orchestrator and run into issues. Orchestrator's built-in PowerShell execution (if you didn’t already know) is built around an x86 PowerShell base. That means that if you fire up the Orchestrator Designer and build a quick and dirty Runbook that executes some PowerShell in the “Run .Net Script” activity that comes with the foundation activities, that PowerShell runs as PowerShell v2 in an x86 runspace. Some of the newer activities in integration packs (such as the VMM Integration Pack) provide a PowerShell activity that lets you run x64 native code and, even better, run it on the server that has the PowerShell modules you need.

Well, with the introduction of PowerShell v3 and some of the coolness around parallel execution using workflow, we started thinking about how we might combine parallel execution with Orchestrator, leveraging PowerShell v3 and x64. This post starts with an overview of a PowerShell v3 script written to execute in parallel and finishes with how you would integrate this example into Orchestrator. The integration method, by the way, isn’t limited to PowerShell v3, but so far it is the only way we’ve found to get v3 working.

Let’s Start with a Dissection of the Example Script

The first step is to present the script we use in our internal demo environment (SCDEMO) and introduce it. This PowerShell script “warms up” the IIS sites in our SCDEMO clouds so that each site is immediately available when we navigate to it during a customer demonstration. The URL warm-up script is triggered as a step in our provisioning Runbook, which then continues with providing access to the provisioned cloud. We initially wrote it to run on a schedule against 1 – N clouds (ex: 1 – 200) in parallel. Running in parallel rather than sequentially lets us warm up 200 URLs at once in roughly 60 seconds, instead of ~60 seconds * 200 URLs (about 200 minutes) one after another. That buys us the speed we need when we are setting up a demo for a customer. We have since changed it to a single execution against a single URL, but the example still holds true for a scheduled process. The next few sections walk through the chunks of the script and what each one does.

Parameters to Get Us Started

So this section starts off by providing us the ability to either use defaults or take in parameters. Obviously I set my script up to use defaults that work for me. Knock yourself out customizing this for your own needs.

Param (
    [parameter(Mandatory=$false,Position=0,HelpMessage="Specify a base name to use ex: BlogEngine")] [String] $ServerBaseName=("BlogEngine"),
    [parameter(Mandatory=$false,Position=1,HelpMessage="Specify the number of digits ex: 0000")] [String] $ServerDigits=("0000"),
    [parameter(Mandatory=$false,Position=2,HelpMessage="Specify the domain name to use ex: .foo.com")] [String] $ServerDomain=(".contoso.com"),
    [parameter(Mandatory=$false,Position=4,HelpMessage="Specify the number of the server to start with.")] [Int] $ServerStart=(1),
    [parameter(Mandatory=$false,Position=3,HelpMessage="Specify the number of the server to end with.")] [Int] $ServerEnd=(50)
)

This script takes in as parameters

- $ServerBaseName: We have an array of machines (explained later) that all start with a base machine name such as “BlogEngine”. We build the array from this base name plus a series of numbers, explained next. Basically, we end up with server names such as BlogEngine0001, BlogEngine0002, BlogEngine0003 (you get the idea).

- $ServerDigits: This is the number format used while building the array. The default of “0000” pads each server number with leading zeros to four digits, such as 0001, 0002 … 0010, etc.

- $ServerDomain: This provides the domain suffix that turns each server name into the “FQDN” part of our server URL.

- $ServerStart: This is another array building variable. This value is the first server number in your series. With the 0000 format, 1 becomes 0001, 10 becomes 0010, etc.

- $ServerEnd: This value is the last server number in your list of servers. So if you supplied 50 in this example, BlogEngine0050 would be the last server in the series.

Build the Array of Servers

Next, we need to build our array of servers from the parameters we have. We start by initializing $Servers with “@()”, which gives us an empty array ready to receive data. Then we feed the range of server numbers through a ForEach-Object loop to build our list of servers.

$Servers=@()
$ServerStart..$ServerEnd | ForEach-Object {$Servers += "$ServerBaseName" + $_.ToString("$ServerDigits") + "$ServerDomain"}

If we used 1 as our $ServerStart and 4 as our $ServerEnd the above would basically build a list that would look a lot like the below

BlogEngine0001.contoso.com

BlogEngine0002.contoso.com

BlogEngine0003.contoso.com

BlogEngine0004.contoso.com
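The += pattern above works, but it copies the array on every pass through the loop. As a minimal sketch of an alternative, the same list can be produced by letting the pipeline output become the array. The function name New-ServerList is hypothetical and not part of the original script; the parameter names and defaults simply mirror the script's own.

```powershell
# Hypothetical helper, not part of the original script: builds the same
# list of names without growing an array with += inside the loop.
function New-ServerList {
    param(
        [string] $ServerBaseName = 'BlogEngine',
        [string] $ServerDigits   = '0000',
        [string] $ServerDomain   = '.contoso.com',
        [int]    $ServerStart    = 1,
        [int]    $ServerEnd      = 50
    )
    # ToString() with a custom numeric format such as "0000" pads with leading zeros.
    $ServerStart..$ServerEnd | ForEach-Object {
        $ServerBaseName + $_.ToString($ServerDigits) + $ServerDomain
    }
}

# @() keeps the result an array even when only one server is requested.
$Servers = @(New-ServerList -ServerStart 1 -ServerEnd 4)
$Servers
```

For a few hundred servers the difference is negligible, so treat this as a style option rather than a required change.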

Next We Kick off the Fun and Execute the Workflow

I’ve put a “Try” and “Catch” here just for completeness; technically this could be executed without them. Notice the SilentlyContinue as well, which lets us continue without throwing errors. We’ll capture failures anyway a couple of steps from now. The workflow is executed by calling Invoke-URLRequest with its required parameter: we pass it the list of servers (Serverlist) using the array we built ($Servers). We also kick this off twice, because if a URL was “asleep” on the first pass, the second pass confirms that it “woke up”.

try {
    $WakeUp = Invoke-URLRequest -Serverlist $Servers -ErrorAction SilentlyContinue
    $FinalResults = Invoke-URLRequest -Serverlist $Servers -ErrorAction SilentlyContinue
} catch {}
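To make the interplay between -ErrorAction and Try/Catch a little more concrete, here is a small sketch that uses Get-Item against a path assumed not to exist, rather than the workflow itself. It shows that SilentlyContinue suppresses a non-terminating error (while -ErrorVariable can still record it), whereas -ErrorAction Stop is what hands the error to the Catch block. The variable names are illustrative only.

```powershell
# Assumption: a freshly generated GUID under the temp folder does not exist.
$missing = Join-Path ([System.IO.Path]::GetTempPath()) ([guid]::NewGuid().ToString())

# SilentlyContinue: nothing is displayed and execution continues,
# but the error is still collected in the -ErrorVariable.
$item = Get-Item -Path $missing -ErrorAction SilentlyContinue -ErrorVariable silentErrors

# Stop: the non-terminating error becomes terminating, so the catch block runs.
$caught = $false
try {
    Get-Item -Path $missing -ErrorAction Stop | Out-Null
} catch {
    $caught = $true
}

"Suppressed errors recorded: $($silentErrors.Count); catch block ran: $caught"
```

This is why the Try/Catch around the two workflow calls is mostly a safety net: with SilentlyContinue in place, it is the later comparison against the results that actually surfaces failures.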

Main Workflow Function

Our main workflow function takes the server list we built (mentioned above) as a parameter and outputs the status of each URL. Notice the ForEach -Parallel statement, which means the iterations of the loop (one per server) run in parallel instead of sequentially. Also note that $Workflow:myoutput is used so the $myoutput variable defined at the workflow level can be updated from inside the ForEach loop and then returned to the main calling script.

workflow Invoke-URLRequest {
    param([parameter(Mandatory=$True)][String[]] $Serverlist)

    $myoutput=@()

    ForEach -Parallel ($server in $Serverlist) {
        $Result = Invoke-WebRequest -Uri "$server" -UseDefaultCredentials -TimeoutSec 30 -UseBasicParsing
        if (($Result -ne $Null) -and ($Result.links[1].href -match "syndication")) { $Alive = $True } Else { $Alive = $False }
        $Workflow:myoutput += $server + ":" + $Alive
    }
    Return $myoutput
}

Key points from the above piece of code

- Parallel execution, so the servers in our list are processed concurrently rather than one after another, and control returns to the calling main part of the script when they are all done

- For our use – we’re searching for the word “syndication” since that is a word that should show up on the main site. For you it would be different.

- We set “$Alive” to “$True” when the check passes so we can leverage that data later on. Anything that shows “$False” will be reported as an issue.

- $MyOutput stores ServerURL:True or ServerURL:False for each URL you pass it (the Boolean is converted to text when it is appended to the string)

Example

BlogEngine0001.contoso.com:True

BlogEngine0002.contoso.com:True

BlogEngine0003.contoso.com:True

BlogEngine0004.contoso.com:False
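The check inside the loop depends on the second link on the page (links[1]) containing the keyword, which is specific to our site. As a sketch under that caveat, the same decision can be pulled into a plain function that looks at every link instead. Get-AliveStatus and its parameter names are hypothetical, and the response objects below are simple stand-ins for what Invoke-WebRequest returns, so the logic can be tried without a web server.

```powershell
# Hypothetical helper, not part of the original script: turns a response
# object into the "server:True" / "server:False" string used by the script.
function Get-AliveStatus {
    param(
        [Parameter(Mandatory = $true)][string] $Server,
        [AllowNull()] $Response,
        [string] $Pattern = 'syndication'
    )
    $alive = $false
    if ($null -ne $Response -and $Response.Links) {
        # True when at least one link's href matches the keyword.
        $alive = [bool]($Response.Links | Where-Object { $_.href -match $Pattern })
    }
    # A Boolean placed in a string is rendered as True or False.
    return "${Server}:$alive"
}

# Stand-in response with two links, one of which matches the keyword.
$fakeResponse = [pscustomobject]@{
    Links = @(
        [pscustomobject]@{ href = '/default.aspx' },
        [pscustomobject]@{ href = '/syndication.axd' }
    )
}

Get-AliveStatus -Server 'BlogEngine0001.contoso.com' -Response $fakeResponse
Get-AliveStatus -Server 'BlogEngine0004.contoso.com' -Response $null
```

Searching all links is a design choice, not what the original script does; keep the fixed index if the position of the link matters for your site.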

Build Array for Failure Data

Since this script reports only failures when it executes, we need to build a final array of the machines that failed so we can leverage this data for alerting in Orchestrator. The code below takes any server name that didn’t come back with a True value for being online and adds it to a failure array. The final output is either the list of failed servers or “None”.

$Failed=@()
ForEach ($Server in $Servers) {
    if ($FinalResults -notcontains ($Server + ":True")) { $Failed += $Server }
}

# Assign the failed list so $OutputResults always holds the final result
$OutputResults = $null
if (!$Failed) { $OutputResults = "None" } else { $OutputResults = $Failed }
$OutputResults
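Here is a small worked example of that filtering step with made-up results, so you can see what Orchestrator would receive. Get-FailedServer is a hypothetical wrapper around the same -notcontains comparison, and the server names and result strings are sample data only.

```powershell
# Hypothetical wrapper, not part of the original script: returns the servers
# that have no "server:True" entry in the results, or "None" if all passed.
function Get-FailedServer {
    param(
        [string[]] $Servers,
        [string[]] $Results
    )
    $failed = @($Servers | Where-Object { $Results -notcontains ($_ + ':True') })
    if ($failed.Count -eq 0) { 'None' } else { $failed }
}

# Sample data (assumed): the second server did not respond as expected.
$sampleServers = 'BlogEngine0001.contoso.com', 'BlogEngine0002.contoso.com', 'BlogEngine0003.contoso.com'
$sampleResults = 'BlogEngine0001.contoso.com:True', 'BlogEngine0002.contoso.com:False', 'BlogEngine0003.contoso.com:True'

Get-FailedServer -Servers $sampleServers -Results $sampleResults
```

Because the comparison looks for a True entry rather than a False one, a server that is missing from the results entirely (for example, because the workflow call failed) is also treated as failed.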

The Complete Script

For your viewing pleasure, I’ve provided the complete script below. This script will also live on the TechNet Gallery as a downloadable option for those that just want to borrow anything from it to build their own parallel v3 PowerShell script. Download the script from TechNet Gallery PowerShell v3 Parallel Execution Workflow Script for URL WarmUp to ensure you don’t have to deal with “copy / paste” issues.

Param (
    [parameter(Mandatory=$false,Position=0,HelpMessage="Specify a base name to use ex: BlogEngine")] [String] $ServerBaseName=("BlogEngine"),
    [parameter(Mandatory=$false,Position=1,HelpMessage="Specify the number of digits ex: 0000")] [String] $ServerDigits=("0000"),
    [parameter(Mandatory=$false,Position=2,HelpMessage="Specify the domain name to use ex: .foo.com")] [String] $ServerDomain=(".contoso.com"),
    [parameter(Mandatory=$false,Position=4,HelpMessage="Specify the number of the server to start with.")] [Int] $ServerStart=(1),
    [parameter(Mandatory=$false,Position=3,HelpMessage="Specify the number of the server to end with.")] [Int] $ServerEnd=(50)
)

# Workflow that checks every server in the list in parallel
workflow Invoke-URLRequest {
    param([parameter(Mandatory=$True)][String[]] $Serverlist)

    $myoutput=@()

    ForEach -Parallel ($server in $Serverlist) {
        $Result = Invoke-WebRequest -Uri "$server" -UseDefaultCredentials -TimeoutSec 30 -UseBasicParsing
        if (($Result -ne $Null) -and ($Result.links[1].href -match "syndication")) { $Alive = $True } Else { $Alive = $False }
        $Workflow:myoutput += $server + ":" + $Alive
    }
    Return $myoutput
}

# Build the list of server names from the parameters
$Servers=@()
$ServerStart..$ServerEnd | ForEach-Object {$Servers += "$ServerBaseName" + $_.ToString("$ServerDigits") + "$ServerDomain"}

# Run twice: the first pass wakes the sites up, the second records the results
try {
    $WakeUp = Invoke-URLRequest -Serverlist $Servers -ErrorAction SilentlyContinue
    $FinalResults = Invoke-URLRequest -Serverlist $Servers -ErrorAction SilentlyContinue
} catch {}

# Collect every server that did not report True
$Failed=@()
ForEach ($Server in $Servers) {
    if ($FinalResults -notcontains ($Server + ":True")) { $Failed += $Server }
}

$OutputResults = $null
if (!$Failed) { $OutputResults = "None" } else { $OutputResults = $Failed }
$OutputResults

Integration With Orchestrator

Now that I’ve gone through this script in a bit of detail to give you an idea of “what is doing what”, how do we get it integrated into Orchestrator to leverage in your IT process automation?

Basic Runbook Example

To keep this simple, I’ll illustrate 2 activities:

- Simple initiate activity (no parameters, just something to stub out the process)

  Note: This could contain all the published data you want to send to the “Warm Up BlogEngine URL” activity.

- Warm Up BlogEngine URL activity (Run Program), shown next

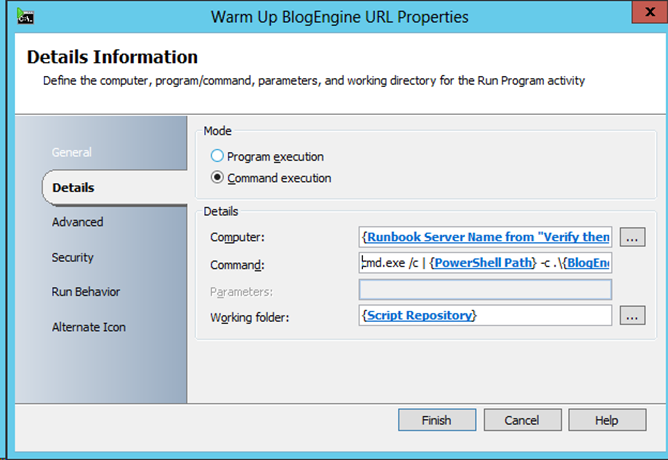

PowerShell and Run Program to allow for PowerShell v3 execution

Below we basically call PowerShell directly via the Command execution option in a Run Program activity using a CMD.EXE /c switch.

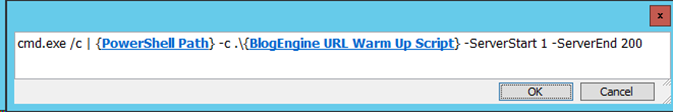

Expanded view (by right clicking on the command window and left clicking Expand)

Breaking this down we are doing the following:

- Computer: We’re using Published Data for the value of the current Runbook Server that is executing this Runbook. This is important since the script needs to exist on this server (and all Runbook Servers that will be leveraged).

- Command: What we are actually executing here

  - CMD.exe /c to execute a command window

  - A “|” or pipe to add a command to run

  - A variable that defines the PowerShell exe location (Example: C:\Windows\system32\WindowsPowerShell\v1.0\powershell.exe)

  - -c .\scriptfile.ps1, where -c is short for -Command and .\scriptfile.ps1 is a path relative to the current working directory (the script is run by path, not dot sourced)

  - The required parameters for our PowerShell script (ServerStart and ServerEnd)

- Working Folder: We are also using a variable here to specify location of scripts (Example: c:\Scripts)

Essentially the above is in the format of the below.

cmd.exe /c | powershell.exe -c .\scriptfile.ps1 -ServerStart 1 -ServerEnd 200
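Since the whole point of this approach is to land in a PowerShell v3, x64 session, it can be handy while testing to have the script report what it is actually running under. The lines below are a sketch you could temporarily place at the top of the script; the variable names are illustrative, and the output would show up in the Pure Output of the Run Program activity alongside the script's normal output.

```powershell
# Sketch: report the engine version and process bitness the script runs under.
# [IntPtr]::Size is 8 in a 64-bit process and 4 in a 32-bit one.
$psVersion = $PSVersionTable.PSVersion.ToString()
$is64Bit   = ([IntPtr]::Size -eq 8)

$runtimeSummary = "PowerShell {0}, 64-bit process: {1}" -f $psVersion, $is64Bit
$runtimeSummary
```

Remove the extra line once you have confirmed the environment, so that the activity output contains only the failure list or None.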

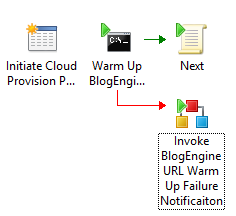

Handling Failures

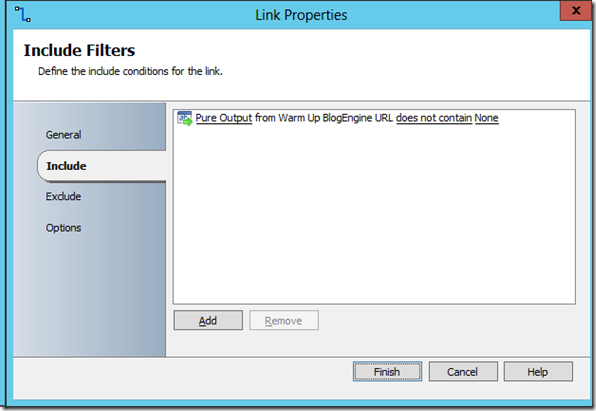

As a final piece, I said above that we capture which machines have a “$False” for “$Alive”. Since we may want to act on that information, one way to control flow is with a Link condition following the activity.

To throw an error and also continue on, we can specify (on the red link shown above) that when Pure Output from the Run Program activity does not contain None, let’s do something special. That something special in our case is sending an email with the names of servers that are having an issue. In your case it could be a different action and set of results of course.

Supporting URLs for PowerShell Workflow

In case you want to read up more on PowerShell Workflow in v3 I’m providing a handful of links below.

Use PowerShell Workflow to Ping Computers in Parallel: https://blogs.technet.com/b/heyscriptingguy/archive/2012/11/20/use-powershell-workflow-to-ping-computers-in-parallel.aspx

PowerShell Workflows: The Basics: https://blogs.technet.com/b/heyscriptingguy/archive/2012/12/26/powershell-workflows-the-basics.aspx

Writing a Script Workflow: https://technet.microsoft.com/en-us/library/jj574157.aspx

TechNet Gallery Location for Script: PowerShell v3 Parallel Execution Workflow Script for URL WarmUp

That does it for this post. If you have any questions, don’t hesitate to comment below. Also, in the spirit of this blog and keeping this as an open discussion, feel free to post other options that have worked for you.

Till next time,

Happy Automating!