Cloud Datacenter Storage Approaches in the Windows Server 2012 era

In our previous blog post, we talked about the network architecture of a Windows Server 2012 datacenter and how it opens the door to new ways of thinking about networking. In this post, we’ll discuss the different conceptual approaches for storage when building cloud infrastructure and see how Windows Server 2012 presents new opportunities for improved datacenter efficiency on the storage front as well.

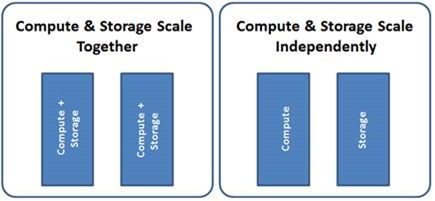

Let’s start at a very high level. When designing a datacenter, you’d probably start by making one important decision: Do you need your storage resources to be managed and scaled separately from your compute resources, or do you want to mix your compute and storage infrastructure? To illustrate this, it means you basically have to choose between these two approaches of how your datacenter “scale units” would look like:

Both approaches are valid. Your choice may depend on who manages the datacenter: do you have separate IT teams managing servers and storage, or one team managing everything? It may depend on whether your compute and storage needs scale uniformly on average, or whether you want the freedom to scale one without the other. You may have specific workloads that require the storage to be physically close to the compute nodes using it, or cost may be the primary factor and one approach may simply be more cost-effective for you. In all of these cases, you end up choosing one approach over the other.
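To make the "uniform versus independent scaling" consideration concrete, here is a minimal sketch of the arithmetic. Everything in it is an illustrative assumption rather than a sizing guideline: the `Demand` type, the function names, and the per-unit capacities (64 cores, 100 TB) are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Demand:
    """Total resources the datacenter must provide (hypothetical figures)."""
    cores: int
    storage_tb: int


def scale_independently(demand, cores_per_compute_unit, tb_per_storage_unit):
    """Compute and storage are separate scale units, sized on their own."""
    return {
        "compute_units": math.ceil(demand.cores / cores_per_compute_unit),
        "storage_units": math.ceil(demand.storage_tb / tb_per_storage_unit),
    }


def scale_together(demand, cores_per_unit, tb_per_unit):
    """Each scale unit carries both compute and storage, so the scarcer
    resource decides how many units are needed and the other sits idle."""
    units = max(
        math.ceil(demand.cores / cores_per_unit),
        math.ceil(demand.storage_tb / tb_per_unit),
    )
    return {
        "units": units,
        "idle_cores": units * cores_per_unit - demand.cores,
        "idle_tb": units * tb_per_unit - demand.storage_tb,
    }


if __name__ == "__main__":
    # A storage-heavy workload: modest compute, lots of data.
    demand = Demand(cores=256, storage_tb=900)
    print(scale_independently(demand, cores_per_compute_unit=64, tb_per_storage_unit=100))
    print(scale_together(demand, cores_per_unit=64, tb_per_unit=100))
```

When compute and storage demand grow in roughly the same proportion, the idle figures stay small and the combined unit is simpler to operate. When they diverge, as in the storage-heavy example, scaling independently avoids paying for capacity you do not use.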

With Windows Server 2008 R2, the way to achieve the scale-independently approach was to deploy SANs in the datacenter and allocate LUNs accessible to the compute nodes over iSCSI or Fibre Channel. If you wanted your compute and storage to scale together, you’d use a DAS approach, with local disks (e.g. SAS or IDE) directly connected to your compute nodes. Note that the DAS-based approach on Windows Server 2008 R2 does not provide reliable storage, and was thus unsuitable for many deployments unless the application software provided storage fault tolerance.

Windows Server 2012 delivers improvements on both scaling approaches, making both of them better and appropriate for cloud deployments.

Scaling Storage and Compute Independently

Windows Server 2012-based continuously available file servers deliver significant benefits in manageability, performance and scale, while satisfying the availability needs of mission-critical workloads. All major datacenter workloads can now be deployed to access data through easily manageable file servers, thereby enabling independent scaling of compute and storage resources. These major improvements to the file server in Windows Server 2012, as described in Thomas Pfenning’s blog post, have made it a suitable choice for building your cloud infrastructure storage.

Using file servers provides the following benefits for cloud deployments:

- Continuous Availability - File servers are now reliable, scalable and highly performant, providing appropriate storage for mission-critical workloads. On top of that, with innovations like the new “Cluster in a Box” (watch John Loveall talk about it here), we’ve also made them easy to deploy, configure and manage.

- Low Cost - File server clusters can use Storage Spaces or clustered PCI RAID controllers and CSV v2.0 to directly connect to lower-cost SAS disks, lowering the overall cost of storage while still maintaining separation between your storage and your compute nodes.

- Use Ethernet-based network fabric - With file servers, as with iSCSI or FCoE, you can standardize on an Ethernet fabric for communication between your compute and storage racks, and avoid the need for a dedicated fabric such as Fibre Channel (FC) or for managing complex FC zones.

- Easy to manage - Most people find it easier to manage file shares and permissions compared to managing SANs and LUNs.

Note that you can still leverage all of your existing storage investments, either by using them side by side with your file servers or by connecting them to the file servers. The latter approach lets you realize the benefits of file servers, and if your SANs require an FC fabric, it helps minimize your non-Ethernet fabric by limiting it to connectivity between the SANs and the file servers, instead of having to make it accessible to all the compute nodes.

Also note that with the new improvements in SMB and RDMA in Windows Server 2012, file server performance really goes through the roof. Just take a look at Jose Barreto’s blog for some preliminary performance information!

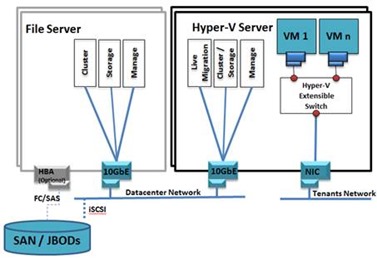

If we go back to the representative configurations we’ve discussed in the past, this approach would mean that your cloud infrastructure logically would look like the following diagram:

As a reminder, this topology is discussed in the following configuration document on TechNet, which has step-by-step instructions for configuring a cloud infrastructure with the file server approach in mind.

Scaling Storage and Compute Together

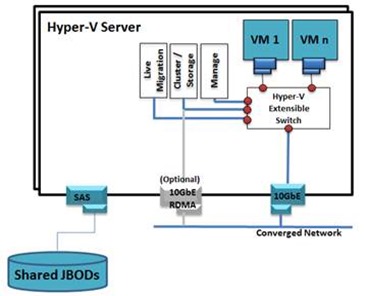

Now, if you’re in the “scale together” camp and you want to take advantage of new Windows Server 2012 capabilities to build that more efficiently, you can now have compute and storage integrated into standard scale units, each comprising 2-4 nodes connected to shared SAS storage. You would use Storage Spaces or PCI RAID controllers on the Hyper-V servers themselves to allow the Hyper-V nodes to talk directly to low-cost SAS disks without going through a dedicated file server, and have the data accessible across your scale units via CSV v2.0, similar to the file server case.

Within each scale unit, compute and storage are connected through shared SAS. Compute nodes are connected to each other (both within a scale unit and across scale units) through high-speed Ethernet (preferably with RDMA), enabling very low-latency and near-uniform data access regardless of where the data resides. A well-designed, highly available scale unit avoids any single point of failure by using, for example, Multi-Path I/O (MPIO) connectivity to shared SAS-based JBODs and teamed NICs for inter-node communication.
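As a rough illustration of the single-point-of-failure reasoning above, the following sketch models a scale unit and flags the obvious gaps: fewer than two nodes, fewer than two SAS paths per node (so MPIO has nothing to fail over to), or fewer than two NICs in a team. The `Node` type, its field names, and the thresholds are assumptions made for this example. The code does not query Windows Server or any real inventory API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    """One Hyper-V node in a scale unit (hypothetical inventory record)."""
    name: str
    sas_paths: int  # independent SAS paths from this node to the shared JBODs
    team_nics: int  # physical NICs in the team used for inter-node traffic


def single_points_of_failure(nodes):
    """Return human-readable findings; an empty list means none were found."""
    findings = []
    if len(nodes) < 2:
        findings.append("scale unit has fewer than 2 nodes")
    for node in nodes:
        if node.sas_paths < 2:
            findings.append(f"{node.name}: {node.sas_paths} SAS path(s), MPIO needs at least 2")
        if node.team_nics < 2:
            findings.append(f"{node.name}: {node.team_nics} NIC(s) in the team, teaming needs at least 2")
    return findings


if __name__ == "__main__":
    unit = [
        Node("hv1", sas_paths=2, team_nics=2),
        Node("hv2", sas_paths=1, team_nics=2),  # only one SAS path
    ]
    for finding in single_points_of_failure(unit):
        print(finding)
```

A real design review would also cover power, switches and the JBOD enclosures themselves. The sketch only captures the two examples the text names.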

We’ve just released the promised new configuration guide that shows you how to make that configuration work. Basically, your cloud infrastructure scale unit could look like the following diagram. Please note that this configuration actually demonstrates two separate decisions: one is to route all of the Hyper-V and VM traffic through the Hyper-V switch using a single NIC or NIC team (which was discussed in the previous blog post), and the other is the use of Storage Spaces on the Hyper-V node, as described in this post.

This approach provides an alternative low-cost solution for cloud deployments, with an appropriate level of availability and large scale. It provides better resource sharing and resiliency than the classical DAS approach. This is a new option that was not possible with Windows Server 2008 R2.

In summary, Windows Server 2012 increases flexibility in datacenter storage design. Combined with the simplification and new options in datacenter network design, it is going to change the way people think about how they architect the cloud infrastructure and open up new possibilities with increased efficiency and lower cost – and without compromising on scale, performance or functionality!

Yigal Edery

Principal Program Manager, Windows Server

On behalf of the Windows Server 8 Cloud Infrastructure team