Linux and Windows Interoperability: On the Metal and On the Wire

by MichaelF on August 13, 2007 03:10pm

I had the opportunity to present at both OSCON in Portland and at LinuxWorld in San Francisco in the last three weeks – both O’Reilly and IDG were gracious enough to grant me a session on the work that Microsoft is doing with Novell, XenSource, and others on Linux and Windows interoperability.

Overall our focus is on three critical technology areas for the next-generation datacenter: virtualization, systems management, and identity. Identity in particular spans enterprise datacenters and web user experiences, so it’s critical that everyone shares a strong commitment to cross-platform cooperation.

Here are the slides as I presented them, with some words about each to give context, but few enough to make this post readable overall. I skipped the intro slides about the Open Source Software Lab since most Port 25 readers know who we are and what we do.

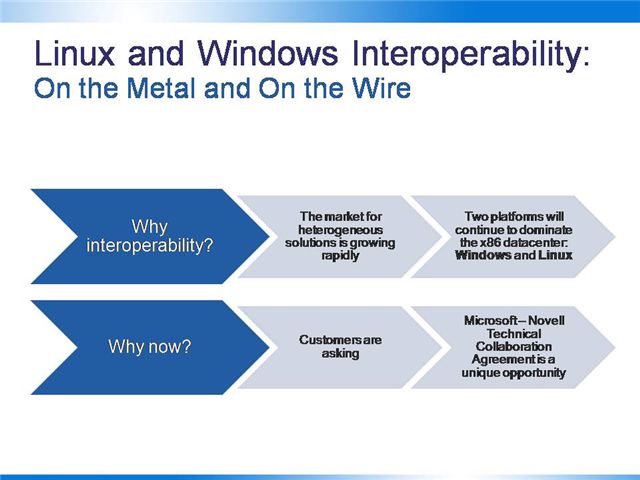

Why interoperability?

The market for heterogeneous solutions is growing rapidly. One visible sign of this is virtualization, an “indicator technology,” which by its nature promotes heterogeneity. Virtualization has become one of the most important trends in the computing industry today. According to leading analysts, enterprise spending on virtualization will reach $15B worldwide by 2009, at which point more than 50% of all servers sold will include virtualization-enabled processors. Most of this investment will manifest itself on production servers running business critical workloads.

Given the ever-improving x86 economics, companies are continuing to migrate off UNIX and specialty hardware down to Windows and Linux on commodity processors.

So, why now?

First, customers are insisting on support for interoperable, heterogeneous solutions. At Microsoft, we run a customer-led product business. One year ago, we established our Interoperability Executive Customer Council, a group of Global CIOs from 30 top global companies and governments – from Goldman Sachs to Aetna to NATO to the UN. On the Microsoft side, this council is run by Bob Muglia, the senior vice president of our server software and developer tools division. The purpose of this is to get consistent input on where customers need us to improve interoperability between our platforms and others – like Linux, Eclipse, and Java. They gave us clear direction: “we are picking both Windows and Linux for our datacenters, and will continue to do so. We need you to make them work better together.”

Second, Microsoft and Novell have established a technical collaboration agreement that allows us to combine our engineering resources to address specific interoperability issues.

As part of this broader interoperability collaboration, Microsoft and Novell technical experts are architecting and testing cross-platform virtualization for Linux and Windows and developing the tools and infrastructure necessary to manage and secure these heterogeneous environments.

I am often asked, “Why is the agreement so long?” as well as “Why is the agreement so short?” The Novell-Microsoft TCA is a 5-year mutual commitment. To put this in context, 5 years from now (2012) is two full releases of Windows Server and more than 20 Linux kernel updates (given the 2.5-month cycle we’ve seen for the last few years). This is an eternity in technology. What’s important to me is that it’s a multi-product commitment to building and improving interoperability between the flagship products of two major technology companies. This means we can build the practices to sustain great interoperable software over the long term as our industry and customer needs continue to evolve.

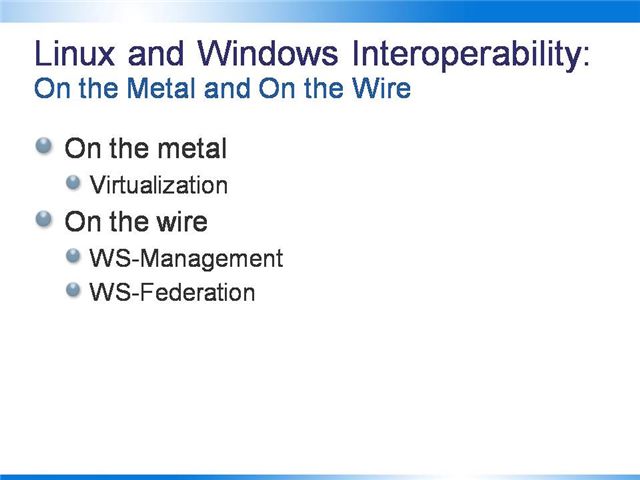

This talk covers two major components of the future of Linux and Windows interoperability: Virtualization and Web Services protocols.

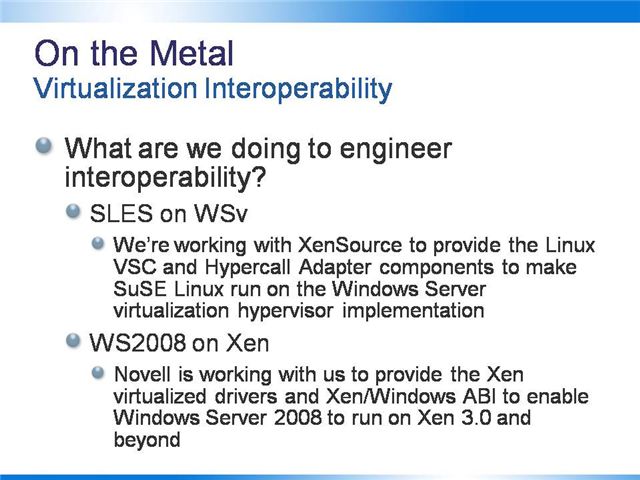

On the Metal focuses on the virtualization interoperability work being done between Windows Server 2008 and Windows Server virtualization, and SUSE Linux Enterprise Server and Xen.

On the Wire covers the details and challenges of implementing standards specifications, such as WS-Federation and WS-Management; and how protocol interoperability will enable effective and secure virtualization deployment and management.

These are the key components required for the next-generation datacenter. We know the datacenters of today are mixtures of Windows, Linux, and Unix, x86, x64 and RISC architectures, and a range of storage and networking gear. Virtualization is required to enable server consolidation and dynamic IT; it must be cross-platform. Once applications from multiple platforms are running on a single server, they need to be managed – ideally from a single console. Finally, they must still meet the demands of security and auditability, so regardless of OS they must be accessible by the right users at the right levels of privilege. Hence, cross-platform virtualization demands cross-platform management and identity.

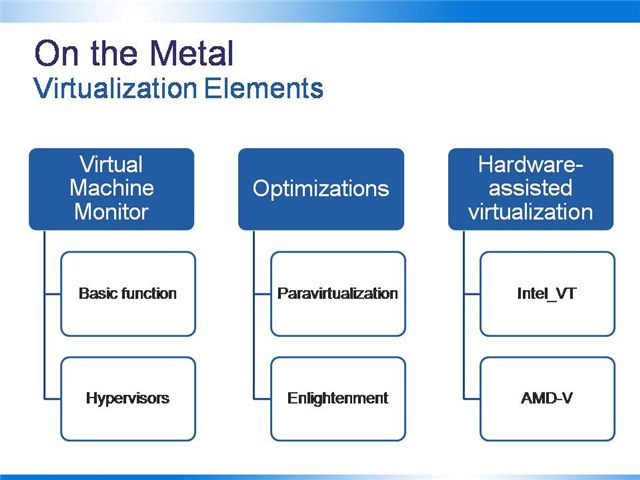

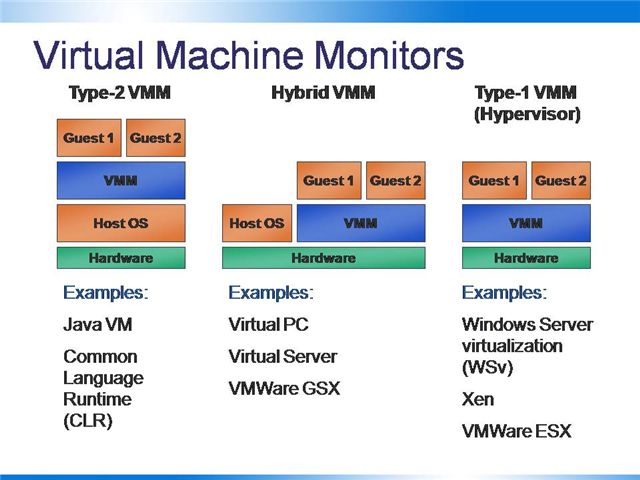

In non-virtualized environments, a single operating system is in direct control of the hardware. In a virtualized environment, a Virtual Machine Monitor manages one or more guest operating systems that are in “virtual” control of the hardware, each independent of the others.

A hypervisor is a special implementation of a Virtual Machine Monitor. It is software that provides a level of abstraction between a system’s hardware and one or more operating systems running on the platform.

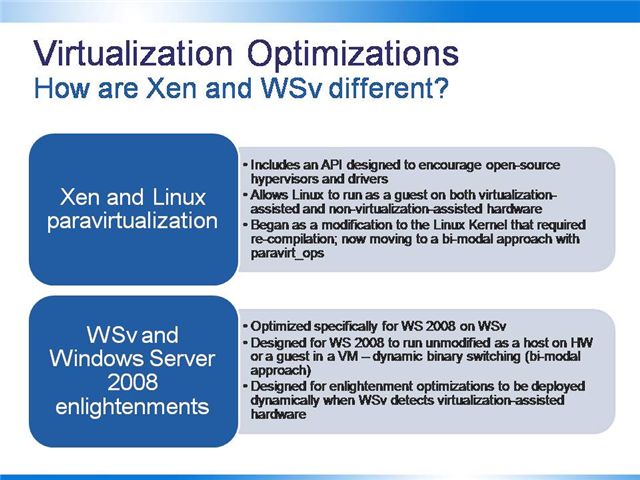

Virtualization optimizations enable better performance by taking advantage of “knowing” when an OS is a host running on hardware or a guest running on a virtual machine.

Paravirtualization, as it applies to Xen and Linux, is an open API between a hypervisor and Linux and a set of optimizations that together, in keeping with the open source philosophy, encourage development of open-source hypervisors and device drivers.

Enlightenment is an API and a set of optimizations designed specifically to enhance the performance of Windows Server in a Windows virtualized environment.

Hardware manufacturers are interested in virtualization as well. Intel and AMD have independently developed virtualization extensions to the x86 architecture. They are not directly compatible with each other, but serve largely the same functions. Either will allow a hypervisor to run an unmodified guest operating system without incurring significant performance penalties.

Intel's virtualization extension for the 32-bit and 64-bit x86 architecture is named IVT (short for Intel Virtualization Technology). The 32-bit or IA-32 IVT extensions are referred to as VT-x. Intel has also published specifications for IVT for the IA-64 (Itanium) processors, which are referred to as VT-i.

AMD's virtualization extension to the 64-bit x86 architecture is named AMD Virtualization, abbreviated AMD-V.

There are three Virtual Machine Monitor models.

A type 2 Virtual Machine Monitor runs within a host operating system. It operates at a level above the host OS and all guest environments operate at a level above that. Examples of these guest environments include the Java Virtual Machine and Microsoft’s Common Language Runtime, which runs as part of the .NET environment and is a “managed execution environment” that allows object-oriented classes to be shared among applications.

The hybrid model, shown in the middle of the diagram, has been used to implement Virtual PC, Virtual Server and VMware GSX. These rely on a host operating system that shares control of the hardware with the virtual machine monitor.

A type 1 Virtual Machine Monitor employs a hypervisor to control the hardware, with all operating systems running at a level above it. Windows Server virtualization (WSv) and Xen are examples of type 1 hypervisor implementations.

Development of Xen and of paravirt_ops, the Linux hypervisor API, began prior to the release of Intel and AMD’s virtualization-assisted hardware; both were designed, in part, to solve the problems inherent in running a virtualized environment on non-virtualization-assisted hardware. They continue to support both virtualization-assisted and non-virtualization-assisted hardware. These approaches are distinct from KVM, the Kernel-based Virtual Machine, which supports only virtualization-assisted hardware; KVM uses the Linux kernel as the hypervisor and QEMU to set up virtual environments for Linux guest OS partitions.

In keeping with the open source community’s philosophy of encouraging development of open source code, the paravirt_ops API is designed to support open-source hypervisors. Earlier this year, VMware’s VMI was added to the kernel, as was Xen. Paravirt_ops is in effect a function table that enables different hypervisors – Xen, VMware, WSv – to provide their own implementations of a standard hypercall interface, and it includes a default set of functions that write to the hardware normally.
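To make the "function table" idea concrete, here is a minimal sketch in Python. It is only a model: the real paravirt_ops is a C structure of function pointers inside the Linux kernel, and every name below (`NATIVE_OPS`, `make_ops`, `hv_write_cr3`, and so on) is a hypothetical stand-in chosen for illustration. The point it shows is that kernel code calls through the table, the default entries act on the hardware directly, and a hypervisor replaces only the entries it cares about.

```python
# Illustrative model only. The real paravirt_ops is a C struct of function
# pointers in the Linux kernel; all names here are hypothetical.

def native_write_cr3(log, value):
    """Default entry: act directly on the (simulated) hardware."""
    log.append(("hardware", "write_cr3", value))


def native_flush_tlb(log):
    """Default entry: flush the (simulated) TLB directly."""
    log.append(("hardware", "flush_tlb", None))


# Default table: every operation goes straight to the hardware.
NATIVE_OPS = {
    "write_cr3": native_write_cr3,
    "flush_tlb": native_flush_tlb,
}


def hv_write_cr3(log, value):
    """Hypervisor-provided entry: issue a (simulated) hypercall instead."""
    log.append(("hypercall", "write_cr3", value))


def make_ops(overrides=None):
    """Start from the native defaults and replace only the given entries."""
    ops = dict(NATIVE_OPS)
    ops.update(overrides or {})
    return ops


# A hypervisor that overrides one operation and inherits the rest.
HYPERVISOR_OPS = make_ops({"write_cr3": hv_write_cr3})


def switch_address_space(ops, log, page_table_base):
    """'Kernel' code: it calls through the table and never asks who is below."""
    ops["write_cr3"](log, page_table_base)
    ops["flush_tlb"](log)
```

Calling `switch_address_space(NATIVE_OPS, log, ...)` records two direct hardware operations, while the same call with `HYPERVISOR_OPS` routes the first operation through the hypervisor's entry and leaves the second on the default path. The calling code is identical in both cases, which is the property the table exists to provide.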

Windows Server 2008 enlightenments have been designed to allow WS 2008 to run in either a virtualized or non-virtualized environment *unmodified*. WS 2008 recognizes when it is running as a guest on top of WSv and dynamically applies the enlightenment optimizations in such instances.

In addition to a hypercall interface and a synthetic device model, memory management and the WS 2008 scheduler are designed with optimizations for when the OS runs as a virtual machine.

The WSv architecture is designed so that a parent partition provides services to the child partitions that run as guests in the virtual environment. From left to right:

Native WSv Components:

- VMBus – Virtual Machine Bus – Serves as a synthetic bus for the system, enabling child partitions to access native drivers.

- VSP – Virtual Service Provider – Serves as an interface between the VMBus and a physical device

- HCL Drivers – “Hardware Compatibility List” Drivers (standard native Windows drivers that have passed WHQL certification)

- VSC – Virtual Service Consumer – Functions as a synthetic device. For example, a filesystem will talk to the VSC controller instead of an IDE controller. This in turn communicates with the VSP to dispatch requests through the native driver.

Interoperability Components:

- Linux VSC – Interoperability component that serves as a synthetic Linux driver. Functions like the VSC in a Windows partition. Developed by XenSource and published under a BSD-style license.

- Hypercall Adapter – Adapts Linux paravirt_ops hypercalls to WSv
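The request path through the native components listed above (VSC to VMBus to VSP to native driver) can be sketched as a chain of small objects. This is a toy model under my own assumptions, not the WSv implementation: the class and method names, the dictionary-shaped requests, and the `"storage"` device class are all hypothetical, and the real VMBus is a kernel-level transport rather than a Python method call.

```python
# Toy model of the I/O path; all names and message shapes are hypothetical.

class NativeDriver:
    """Stands in for an HCL driver running in the parent partition."""

    def __init__(self):
        self.blocks = {}

    def write(self, block, data):
        self.blocks[block] = data
        return "ok"

    def read(self, block):
        return self.blocks.get(block)


class VSP:
    """Virtual Service Provider: interface between the bus and a physical device."""

    def __init__(self, driver):
        self.driver = driver

    def handle(self, request):
        op = request["op"]
        if op == "write":
            return self.driver.write(request["block"], request["data"])
        if op == "read":
            return self.driver.read(request["block"])
        raise ValueError(f"unsupported operation: {op}")


class VMBus:
    """Synthetic bus: routes child-partition requests to the matching VSP."""

    def __init__(self):
        self.providers = {}
        self.trace = []  # record of (device_class, op) for inspection

    def register(self, device_class, vsp):
        self.providers[device_class] = vsp

    def send(self, device_class, request):
        self.trace.append((device_class, request["op"]))
        return self.providers[device_class].handle(request)


class VSC:
    """Virtual Service Consumer: the synthetic device the guest talks to."""

    def __init__(self, bus, device_class):
        self.bus = bus
        self.device_class = device_class

    def write(self, block, data):
        request = {"op": "write", "block": block, "data": data}
        return self.bus.send(self.device_class, request)

    def read(self, block):
        return self.bus.send(self.device_class, {"op": "read", "block": block})
```

In this model a guest filesystem would hold a `VSC(bus, "storage")` and never see the native driver. A Linux VSC would sit in the same position as the Windows one, which is why it can be swapped in without changing the parent partition's side of the bus.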

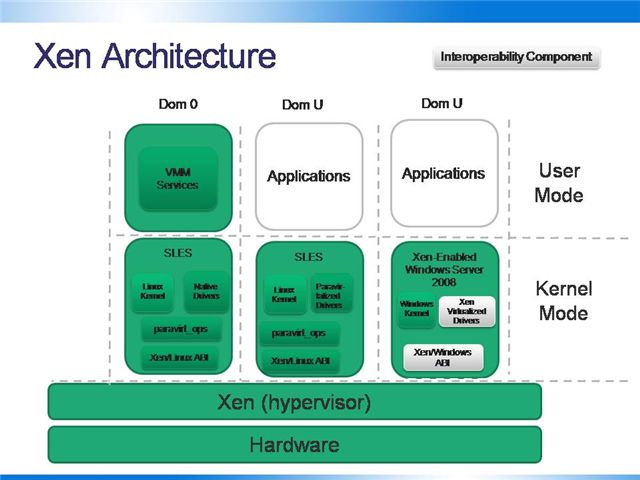

Like the WSv architecture, the Xen architecture is designed so that a special partition, in this case Dom 0, provides services to guest partitions that run in a virtual environment.

Native Xen Components:

- paravirt_ops is a Linux-kernel-internal function table that is designed to support hypervisor-specific function calls. The default function pointers from paravirt_ops support running as a host on bare metal. Xen provides its own set of functions that implement paravirtualization.

- Native Drivers – standard set of drivers in the Linux kernel

- Xen/Linux ABI – having a consistent ABI enables long-term compatibility between guest operating systems and the Xen hypervisor

Interoperability Components:

- Xen Virtualized Drivers – Windows synthetic device drivers must be converted to Xen-virtualized drivers. These are developed using the Windows DDK and will be distributed as binary only per the DDK license.

- Xen/Windows ABI – The binary interface that integrates Windows with Xen, enabling Windows hypercalls to be executed through Xen instead of WSv. This will be licensed under the GPL and made available when the WSv top-level functional specification is made public.

The slide says it all… I couldn’t figure out a way to put this one in a graphic. ;)

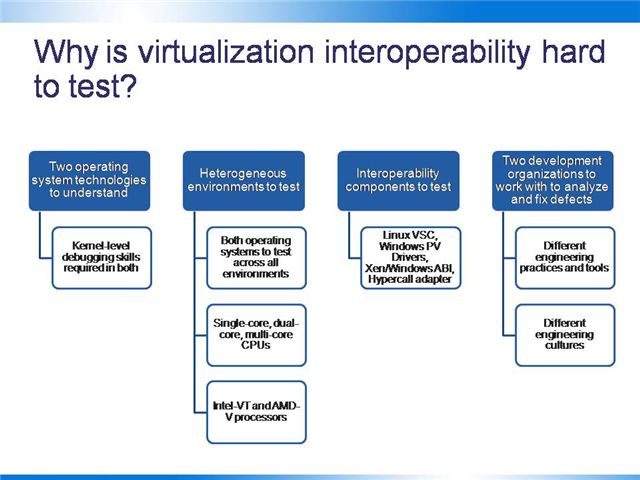

Virtualization interoperability testing is very challenging. While the architecture may look similar at a high level, the devil is in the details – down at the API and ABI level, the technologies are quite different.

From a personnel standpoint, the expertise required to debug OS kernels is hard to find, let alone software engineers with these skills who are focused on writing test code. Microsoft has established a role known as “Software Design Engineer in Test” or “SDE/T” which describes the combination of skills and attitude required to test large-scale complex software rigorously through automated white-box test development.

The problem of testing Linux and Windows OSes across WSv and Xen requires these kernel-level skills, but on both operating systems. It’s a non-trivial challenge.

Next is the technical issue of the test matrix:

- Two full operating systems to test (Windows Server 2008 and SUSE Linux Enterprise Server 10)

- Single-core, dual-core, and quad-core CPUs

- Single-processor, dual-processor, and quad-processor boards

- Intel VT and AMD-V chips

- Basic device configuration (NIC, HD, etc.)

To put this in context, we need a minimum of 40 server chassis to test this matrix – for each operating system.
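To give a feel for how quickly the matrix grows, here is a small sketch that enumerates only the dimensions listed above that have explicit values. The variable and function names are mine, and the sketch deliberately leaves out device configurations (NIC, HD, etc.) because the list does not enumerate them, so it is a lower bound and does not try to reproduce the chassis count quoted above.

```python
from itertools import product

# Dimensions taken from the list above; device configurations are omitted
# because the post does not enumerate them.
CORES_PER_CPU = ["single-core", "dual-core", "quad-core"]
SOCKETS = ["single-processor", "dual-processor", "quad-processor"]
VIRT_EXTENSIONS = ["Intel VT", "AMD-V"]
OPERATING_SYSTEMS = ["Windows Server 2008", "SUSE Linux Enterprise Server 10"]


def hardware_configs():
    """Every combination of core count, socket count, and CPU extension."""
    return list(product(CORES_PER_CPU, SOCKETS, VIRT_EXTENSIONS))


def test_matrix():
    """Each operating system paired with each hardware configuration."""
    return [
        (os_name, *hw)
        for os_name, hw in product(OPERATING_SYSTEMS, hardware_configs())
    ]
```

Here `len(hardware_configs())` is 3 × 3 × 2 = 18 and `len(test_matrix())` is 36, before any device variations, workloads, or the four software components described next are layered on top.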

On top of this, the software components that must be tested include:

- Linux VSC

- Windows PV hardware drivers

- Xen/Windows ABI

- Linux/WSv hypercall adapter

Since Windows and Linux are general-purpose operating systems, these components must be tested across a range of workloads which will guarantee consistent, high-performance operation regardless of usage (file serving, web serving, compute-intensive operations, networking, etc.).

Finally – and no less a challenge than the skills and technology aspects – is that of building a shared culture between two very different and mature engineering cultures. What is the definition of a “Severity 1” or “Priority 1” designation for a defect? How do these defects compete for the core product engineering teams’ attention? How are defects tracked, escalated, processed, and closed across two different test organizations’ software tools? Most importantly, what is the quality of the professional relationships between engineers and engineering management of the two organizations? These are the critical issues to make the work happen at high quality and with consistency over the long term.

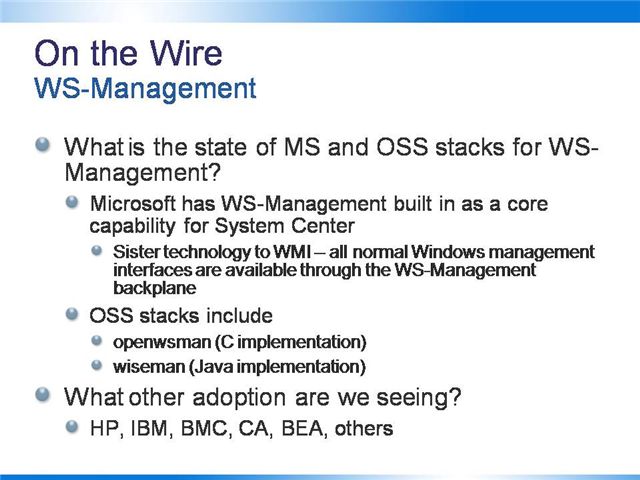

WS-Management is an industry standard protocol managed by the DMTF (Distributed Management Task Force), whose working group members include HP, IBM, Sun, BEA, CA, Intel, and Microsoft among others. The purpose is to bring a unified cross-platform management backplane to the industry, enabling customers to implement heterogeneous datacenters without having separate management systems for each platform.

All Microsoft server products ship with extensive instrumentation, known as WMI. A great way to see the breadth of this management surface is to download Hyperic (an open source management tool) and attach it to a Windows server – all of the different events and instrumentation will show up in the interface, typically several screen pages long.

It is not surprising that the management tools vendors are collaborating on this work – and it’s essential to have not just hardware, OS, and management providers but application layer vendors like BEA as well – but to me the most important aspect of the work is the open source interoperability.

In the Microsoft-Novell Joint Interoperability Lab, we are testing the Microsoft implementation of WS-Management (WinRM) against the openwsman and wiseman open source stacks. This matters because the availability of proven, interoperable open source implementations will make it relatively easy for all types of providers of both management software and managed endpoints to adopt a technology that works together with existing systems out of the box. Regardless of development or licensing model, commercial and community software will be able to connect and be well-managed in customer environments.
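For readers who have not seen WS-Management on the wire, here is a rough sketch of what one of the simplest requests looks like: a SOAP envelope carrying an Identify element, which asks an endpoint to describe its WS-Management implementation. The namespace URIs are the SOAP 1.2 and WS-Management identity namespaces as I understand them from the published specifications, so please check them against the DMTF documents before relying on them. The function name is my own, and the sketch only builds the XML; it does not show transport, authentication, or how WinRM, openwsman, or wiseman process the message.

```python
import xml.etree.ElementTree as ET

# Namespace URIs as I understand them from the specifications; verify
# against the DMTF WS-Management documents before depending on them.
SOAP_NS = "http://www.w3.org/2003/05/soap-envelope"
WSMID_NS = "http://schemas.dmtf.org/wbem/wsman/identity/1/wsmanidentity.xsd"


def build_identify_request():
    """Return a SOAP envelope whose body holds a single Identify element."""
    # Prefixes are cosmetic; they only make the serialized XML easier to read.
    ET.register_namespace("s", SOAP_NS)
    ET.register_namespace("wsmid", WSMID_NS)

    envelope = ET.Element(f"{{{SOAP_NS}}}Envelope")
    ET.SubElement(envelope, f"{{{SOAP_NS}}}Header")
    body = ET.SubElement(envelope, f"{{{SOAP_NS}}}Body")
    ET.SubElement(body, f"{{{WSMID_NS}}}Identify")
    return ET.tostring(envelope, encoding="unicode")
```

The message is only a few lines of XML, which is part of why interoperability testing matters: the envelope is easy to produce, and the differences between stacks show up in how each one handles headers, faults, and encodings around it.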

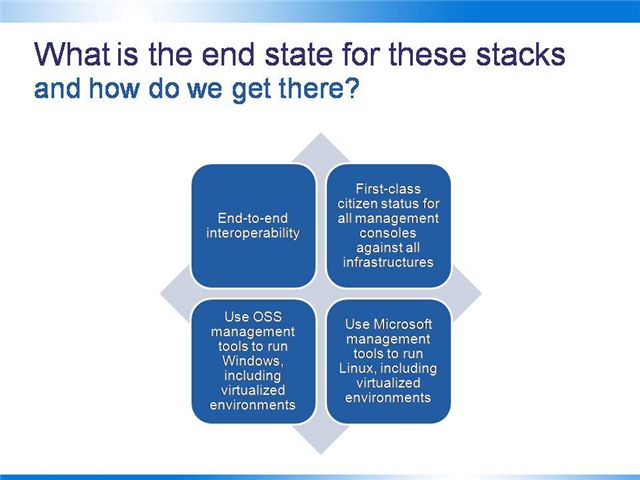

So what does this all mean? We’ll see end-to-end interoperability, where any compliant console can manage any conforming infrastructure – and since the specification and the code are open, the barriers to entry are very low. It’s important that this capability extends to virtualized environments (which is non-trivial) so that customers can get the full potential of the benefits of virtualization – not just reducing servers at the cost of increased management effort.

Sometimes people challenge me with the statement “if you would just build software to the specification, you wouldn’t need to do all this interoperability engineering!” This is in fact a mistaken understanding of interoperability engineering. Once you’ve read through a specification – tens to hundreds of pages of technical detail – and written an implementation that matches the specification, then the real work begins. Real-world interoperability is not about matching what’s on paper, but what’s on the wire. This is why it’s essential to have dedicated engineering, comprehensive automated testing, and multiple products and projects working together. A good example of this is the engineering process for Microsoft’s Web Services stack. The specifications (all 36 of them) are open, and licensed under the OSP (Open Specification Promise). In the engineering process, Microsoft tests the Windows Web Services implementation against the IBM and the Apache Axis implementations according to the WS-I Basic Profile. A successful pass against all these tests is “ship criteria” for Microsoft, meaning we won’t ship our implementation unless it passes.

In the messy world of systems management, where multiple generations of technologies coexist at a wide range of ontological levels (devices, motherboards, networking gear, operating systems, databases, middleware, applications, event aggregators, and so on), testing is complex. Adding virtualization into this mix adds another layer of complexity, necessitating methodical and disciplined testing.

OpenID is a distributed single sign-on system, primarily for websites. It’s supported by a range of technology providers including AOL, LiveJournal, and Microsoft.

WS-Federation is the identity federation web services standard which allows different identity providers to work together to exchange or negotiate information about user identity. It is layered on top of other Web Services specifications including WS-Trust, WS-Security, and WS-SecurityPolicy – many of which are lacking an open source implementation today.

ADFS is Active Directory Federation Services, a mechanism for identity federation built into Microsoft Active Directory.

CardSpace is an identity metasystem, used to secure user information and unify identity management across any internet site.

Project Higgins is an Eclipse project intended to develop open source implementations of the WS-Federation protocol stack as well as other identity technologies including OpenID and SAML.

Samba is a Linux/Unix implementation of Microsoft’s SMB/CIFS protocols for file sharing and access control information. It is widely deployed in Linux-based appliances and devices, and ships in every popular distribution of Linux as well as with Apple’s OS X.

This work is still in early phases, and you can expect more details here in the future. Mike Milinkovich of Eclipse has been a champion for improving the interoperability of Eclipse and Microsoft technologies, especially Higgins. Separately the Bandit Project has made significant progress in building technologies which support CardSpace. I appreciate the work of these teams and look forward to more progress here.

The slide says it all here. We’re committed to long term development and delivery of customer-grade interoperability solutions for Windows and Linux, and we’ll do it in a transparent manner. Tom Hanrahan, the Director of the Microsoft-Novell Joint Interoperability Lab, brings many years of experience in running projects where the open source community is a primary participant. I and my colleagues at Microsoft are excited to learn from him as he puts his experiences at the OSDL/Linux Foundation and at IBM’s Linux Technology Center into practice guiding the work of the lab.

You can expect regular updates from us on the progress and plans for our technical work, and I expect you to hold me and Tom accountable for this promise.

I hope you found the presentation valuable. I felt it was important to get this material out broadly since it will impact many people, and essential to be clear about what we are building together with Novell, XenSource, and the open source community.