Layers

by billhilf on April 26, 2007 06:56pm

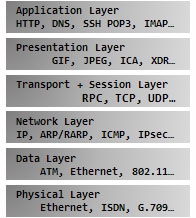

When I started programming, it helped me a lot to think about the OSI model (Open Systems Interconnection Basic Reference Model). On the right is a simplified five-layer version of the model (the full OSI model has seven layers). This type of model can help when coding or administering a system so you can effectively debug at the right ‘layer’. I’ve found that I use this same logic now in all sorts of other areas, as it helps me parse out the details of an issue. I was also reminded of this while reading one of Cory Doctorow’s new short stories, ‘When Sysadmins Ruled the Earth’ in ‘Overclocked’.
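To make the ‘debug at the right layer’ idea concrete, here is a minimal Python sketch. The layer names and the check functions are hypothetical placeholders of my choosing, not part of any real tool, and the diagram may label its layers differently. The only point is the habit: walk the stack from the bottom and stop at the first layer that fails.

```python
from typing import Callable, List, Optional, Tuple

# A check is a (layer name, test function) pair. The test returns True
# when that layer looks healthy. Both are illustrative assumptions.
Check = Tuple[str, Callable[[], bool]]


def first_failing_layer(checks: List[Check]) -> Optional[str]:
    """Run the checks bottom-up and return the first layer that fails.

    Returns None when every layer passes.
    """
    for layer, check in checks:
        if not check():
            return layer
    return None


# Stand-in checks. Real ones might test link status, ping a gateway,
# open a TCP connection, and so on.
example_checks: List[Check] = [
    ("physical", lambda: True),
    ("data link", lambda: True),
    ("network", lambda: False),  # pretend routing is broken
    ("transport", lambda: True),
    ("application", lambda: True),
]

print(first_failing_layer(example_checks))
```

With the stand-in checks above, the sketch reports the network layer, so there is no reason to start by staring at application logs.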

I’ve recently been looking at broadband statistics and, as usual, working on various business model issues. So let me parlay the OSI framework concept into a topic around mixed models and the Web. I often hear others try to simplify open source by comparing it to the Web or the Internet. This description is often used disingenuously, but it did get me thinking about the relationship, and it’s a fun thought experiment. So let’s break the totality of the Web down for a minute to prove the point, using an OSI-like model from the bottom up (including, but not limited to, protocols).

Physical, Data Link, and Network Layers

For the Internet this would be not only Ethernet standards but also electrical specifications, bridges, switches, host adapters, and signals operating over copper and fiber. ATM, Frame Relay, IPv4/v6, IPSec, RIP, X.25, and other protocols also live at these layers. But the Internet isn’t just protocols. Companies such as AT&T, Qwest and Level 3 have laid hundreds of thousands of miles of fiber-optic cable at the physical layer, and infrastructure providers such as Foundry, Juniper Networks and Cisco build technologies that allow Internet exchange points and ISPs to interconnect.

Transport+Session, Presentation and Application Layers

Here we have the layers that move the data between end users and programs. Fundamental to the Internet is TCP/IP, of course (and UDP for you gamers). TCP/IP is over 25 years old, and its being an open standard was critical for its dissemination and success. Other important protocols and services at these layers are POP3, SMTP, SSH, HTTP, DNS, instant messaging protocols, and many more. These protocols have been implemented in both open source and non-open source software; the key was having standard protocols for communication. Also at these layers are other infrastructure-like providers such as Akamai, VitalStream, BitTorrent, Amazon’s S3 and other caching and content delivery networks.
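For anyone who likes to see the grouping written down, here is a rough Python sketch of the two buckets used in this post. The names `LAYER_GROUPS`, `lower`, `upper`, and `group_of` are made up for illustration, and the split follows this post’s loose OSI-like grouping rather than a strict reading of the OSI model.

```python
from typing import Dict, List, Optional

# Protocols named in this post, bucketed the way the post buckets them.
# "lower" = physical, data link, and network layers.
# "upper" = transport+session, presentation, and application layers.
LAYER_GROUPS: Dict[str, List[str]] = {
    "lower": ["Ethernet", "ATM", "Frame Relay", "IPv4", "IPv6",
              "IPSec", "RIP", "X.25"],
    "upper": ["TCP", "UDP", "POP3", "SMTP", "SSH", "HTTP", "DNS"],
}


def group_of(protocol: str) -> Optional[str]:
    """Return the group a protocol falls in, ignoring case.

    Returns None for protocols this post does not mention.
    """
    wanted = protocol.casefold()
    for group, protocols in LAYER_GROUPS.items():
        if any(p.casefold() == wanted for p in protocols):
            return group
    return None


print(group_of("http"))         # upper
print(group_of("Frame Relay"))  # lower
print(group_of("Token Ring"))   # None
```

The lookup is deliberately dumb. Its only job is to show that a question like ‘where does this protocol live?’ has a quick answer once the layers are drawn.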

It is important to note that all of the layers above once held other technologies that were relevant in their day and have since faded or expired altogether. When I worked for IBM I used to carry a Token Ring adapter for my ThinkPad, as many IBM offices didn’t have Ethernet, only Token Ring (this was true just four years ago). Anyone use much Token Ring today? Or RUDP? Or FDDI? Or even telnet? These have each diminished or disappeared, IMHO, because 1) something better came along and/or 2) they lacked relevance or value to consumers, users and/or businesses. These are positive market forces: we want better, higher value, more relevant technologies and standards to replace lesser, lower value, irrelevant versions of the same.

There is an important, non-OSI layer above all of this, and that’s the content that is driving the growth of the Web and broadband (the global number of broadband connections rose 33% last year). My highly subjective distillation of ‘content’ is the YouTube, MySpace, Yahoo, MSN, Google conglomeration of data that pumps across those layers above every day in all its data hungry glory. Oh, and all that advertising too. Certainly, there is a supply-demand correlation between the infrastructures at all levels of the stack and the content users are demanding (and supplying back). These are also positive market forces. Companies such as Level 3 (which was almost itself leveled in the late 90s) are seeing growth in traffic on their fiber lines and also in their revenue – and they are buying more. Comcast has signed up over 12 million homes for cable-based broadband connectivity. Western Europe’s broadband penetration is growing faster than that of the U.S., and Japan now has 7.9 million fibre-to-the-home subscribers. The home media and phone technologies will also be tapping into these bigger pipes, from TiVo to the iPhone to Windows Mobile devices. And all sorts of amazing applications are sprouting up to take advantage of this broadband growth – for a test, think back to how many videos you watched online just three years ago compared to today.

So what is the relationship between all of this? Certainly, without useful and relevant standards like TCP/IP and HTTP we wouldn’t be very far. But we also wouldn’t have today’s Web without the physical fiber and backbone providers, IXPs/ISPs, and router manufacturers that provide the infrastructure. And without software such as Apache, IIS, Firefox, Internet Explorer, etc., we wouldn’t be using the Internet like we do today. And last but not least, without something to do on the Web, from reading news on Yahoo to auctions on eBay to Skype phone calls to videos on YouTube or social networking such as MySpace or doing business online, the Internet would have just been a neat technology experiment (or, minimally, as one of my favorite BBC columnists Bill Thompson points out, a tool for ‘computer scientists to find ways to share time on expensive mainframe computers’). Open source, proprietary, infrastructure, protocols and standards… and lots of hard work and innovation. It’s all in there – that’s the Web we have today. Just like there is a mix of content that makes up the Web, mixed software, hardware, infrastructure, and a community are all necessary parts of the body Internet.

If someone needs proof that open source and commercial models/software/hardware/etc. can be and are compatible, just look at the Web. Not only are they compatible, they have proven to be an amazingly powerful combination. The challenge for the OSS pundits is to dig deep and not be superficial. I like how Stephen Walli challenges a lot of ‘stack’ thinking by explaining how the application is a network, and the network isn’t simple. It’s a good analogy, and although the OSI layer-thinking helps draw some lines, the network model is more realistic – which is why I am using the Web as subject matter here.

When I was a kid, my oldest brother used to sell me gravity insurance for $1 (for the record, I only bought one policy, when I was six). It was his lesson that I shouldn’t forget about reality. He tried to sell me another policy when I finished graduate school – likely worried I was getting lost in theoretical thinking. In reality, there are powerful combinations of mixed models in software design/development, licensing, and businesses. We can bury our heads in the sand, or in the clouds, and believe there are only two camps, two separate and foreign tribes – open source and commercial. It might even make us feel better to believe this. Or we can see that, in the real world, there is no spoon.

-Bill