Opalis 6.3: VM Checkpoint Orchestration with Opalis and System Center Virtual Machine Manager

The great thing about virtual machines is that their state is captured as data on a drive. System Center Virtual Machine Manager lets you record this state at any time in a checkpoint. Checkpointing a VM gives you a point-in-time revision of the virtual machine that you can revert to. Using Opalis 6.3, you can now orchestrate checkpoint processes so that they happen consistently every time.

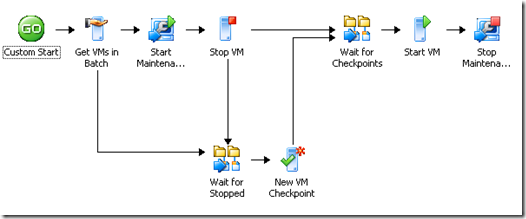

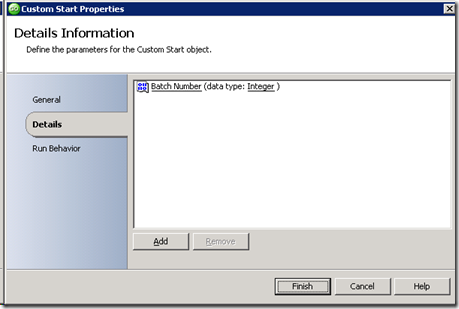

The Custom Start activity kicks off the workflow by requiring a Batch Number parameter. The Batch Number corresponds to a number assigned to the virtual machines. Each virtual machine has a custom property that contains the batch number.

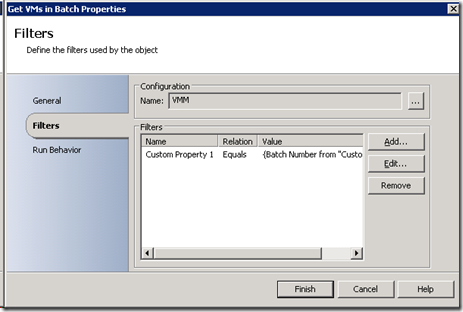

The Get VMs in Batch activity then retrieves all the VMs whose Custom Property 1 is set to the same Batch Number that was passed to the Custom Start activity.

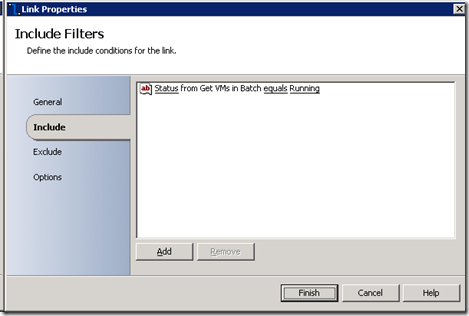

The link between Get VMs in Batch and Start Maintenance Mode is then configured to pass through only the VMs that have a status of Running.
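The selection and the link condition can be modeled outside Opalis as two simple filters. The following is a minimal Python sketch of that logic only, not of any Opalis or Virtual Machine Manager API; the `VM` class, its field names, and the sample inventory are hypothetical and exist purely for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VM:
    # Hypothetical stand-in for a VMM virtual machine record.
    vm_id: str
    custom_property_1: str  # assumed to hold the batch number as text
    status: str


def get_vms_in_batch(inventory, batch_number):
    """Model of Get VMs in Batch: keep VMs whose Custom Property 1 matches."""
    return [vm for vm in inventory if vm.custom_property_1 == str(batch_number)]


def running_only(vms):
    """Model of the link condition: pass through only VMs with status Running."""
    return [vm for vm in vms if vm.status == "Running"]


# Illustrative data only.
inventory = [
    VM("vm-01", "7", "Running"),
    VM("vm-02", "7", "Stopped"),
    VM("vm-03", "8", "Running"),
]

batch = get_vms_in_batch(inventory, 7)  # every VM in batch 7
to_stop = running_only(batch)           # only the running ones continue down the branch
```

Here `batch` holds both VMs from batch 7, while `to_stop` holds only the one that is running. That difference between the two sets is what matters later in the workflow.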

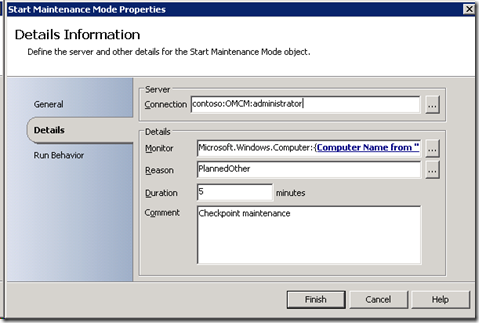

The Start Maintenance Mode activity then tells System Center Operations Manager to suppress all alerts related to the running virtual machines. This means that those machines will not throw alerts while they are shut off. It also lets operators see that a machine is in a maintenance window, and why. In this way the virtualization administration team can communicate using tools that the operations team already understands.

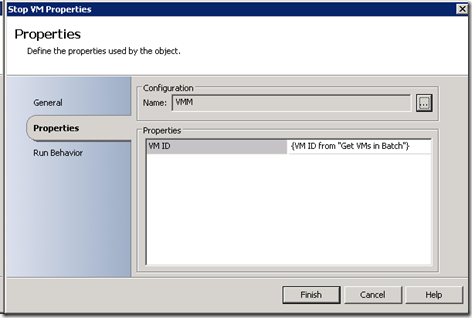

Creating a checkpoint of a stopped virtual machine means that the system will power on from a known state. This is helpful when performing recovery-related activities. Now that maintenance mode is turned on for the running virtual machines, the Stop VM activity stops them. You will notice that the VM ID property subscribes to the VM ID of Get VMs in Batch. Stop VM only receives the VMs that are currently running, because the link condition between Get VMs in Batch and Start Maintenance Mode filters out all the VMs that are not. This filter carries through the rest of this branch of the workflow.

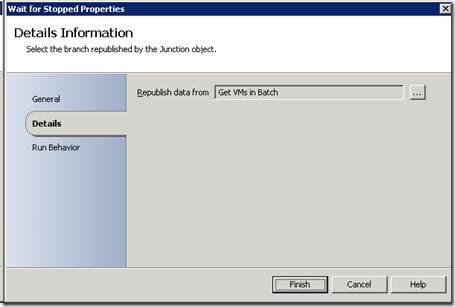

Now we need to do something tricky (that is, advanced). We want to checkpoint ALL the VMs, but Stop VM has a filtered set of VMs. Only the VMs that were previously running and were stopped by the Stop VM activity are available after it runs. The Junction activity saves the day here. It allows us to synchronize branches of a workflow and continue with the data from one of the branches. By linking both the Get VMs in Batch and the Stop VM activities to the Wait for Stopped (Junction) activity, we are guaranteed that all the running VMs have been stopped, and we have all the data from the Get VMs in Batch activity available for the next activity. To set this up, tell the Wait for Stopped activity to republish the data from Get VMs in Batch and leave the link between them at the default values.
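The Junction behavior described here, waiting for every incoming branch and then republishing the data of just one, can be illustrated with a small Python sketch. This models the semantics only and says nothing about how Opalis implements the activity; the `junction` function, the branch functions, and the VM names are all hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def junction(branches, republish_from):
    """Wait for every branch to finish, then return only one branch's data."""
    with ThreadPoolExecutor(max_workers=len(branches)) as pool:
        futures = {name: pool.submit(fn) for name, fn in branches.items()}
        # .result() blocks, so this line completes only when all branches have.
        results = {name: future.result() for name, future in futures.items()}
    return results[republish_from]


# Illustrative data only.
all_vms = ["vm-01", "vm-02"]  # everything Get VMs in Batch returned
running = ["vm-01"]           # the subset that passed the Running filter
stopped = []                  # filled in by the stop branch


def stop_branch():
    # Stand-in for Start Maintenance Mode followed by Stop VM.
    for vm in running:
        stopped.append(vm)
    return list(stopped)


def batch_branch():
    # Stand-in for the direct link from Get VMs in Batch.
    return all_vms


to_checkpoint = junction(
    {"Get VMs in Batch": batch_branch, "Stop VM": stop_branch},
    republish_from="Get VMs in Batch",
)
```

After the call, `stopped` contains the previously running VM, and `to_checkpoint` contains the full batch. This mirrors the guarantee the workflow relies on: stopping has finished, and the unfiltered list is what flows onward.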

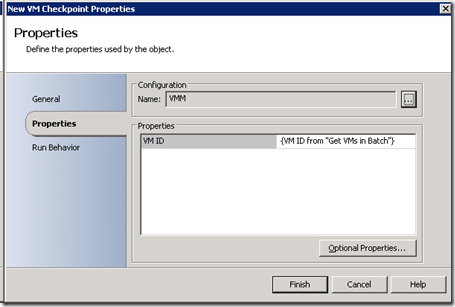

Now that all the data has arrived at the New VM Checkpoint activity, it creates a checkpoint for each VM from Get VMs in Batch.

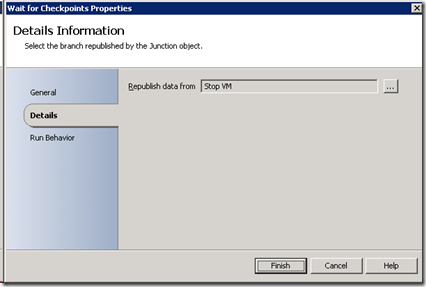

After checkpointing the VMs we face a similar problem: we need to restart the VMs that were stopped and take them out of maintenance mode. However, we can only do that once the checkpoints have completed. The Wait for Checkpoints activity synchronizes the two paths and then republishes the data from the Stop VM branch.

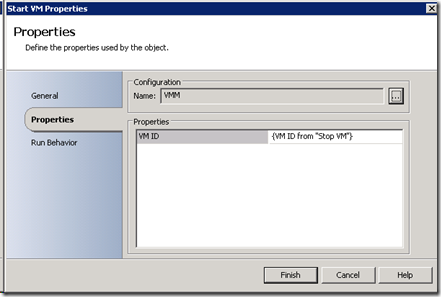

Now the Start VM activity starts the VMs that were stopped.

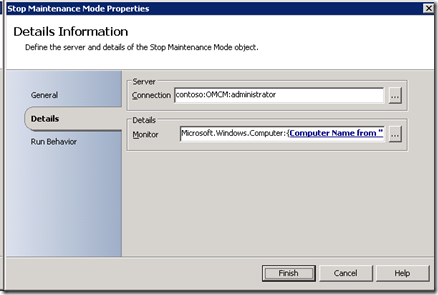

Finally, the virtual machines can be taken out of maintenance mode.
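To summarize the ordering the workflow enforces, here is a rough Python sketch that records each step in a log. It is an assumption-laden model rather than an export of the Opalis workflow: the function name, the step labels, and the sample VMs are hypothetical, and the exact per-VM interleaving inside Opalis may differ. Only the ordering constraints at the two junctions are meant to be faithful.

```python
def checkpoint_batch(vms):
    """vms maps a VM ID to its status; returns an ordered list of (step, vm_id)."""
    log = []
    # Link condition: only running VMs enter the maintenance/stop branch.
    running = [vm for vm, status in vms.items() if status == "Running"]

    for vm in running:
        log.append(("start_maintenance_mode", vm))
    for vm in running:
        log.append(("stop_vm", vm))

    # First junction (Wait for Stopped): all stops are done,
    # and the full batch is republished.
    for vm in vms:
        log.append(("new_checkpoint", vm))

    # Second junction (Wait for Checkpoints): all checkpoints are done,
    # and the Stop VM branch data is republished.
    for vm in running:
        log.append(("start_vm", vm))
    for vm in running:
        log.append(("stop_maintenance_mode", vm))
    return log


# Illustrative data only.
steps = checkpoint_batch({"vm-01": "Running", "vm-02": "Stopped"})
```

In this example both VMs are checkpointed, but only `vm-01`, which was running at the start, is stopped, restarted, and moved in and out of maintenance mode.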

Now that this workflow has been created, you can set it to run on a schedule or have operations personnel run it ad hoc. You can be sure that all your VMs are ready to be restored when something unforeseen happens.