MS SQL Server Dump / Copy / Load

| Caution |

| Test the script(s), processes and/or data file(s) thoroughly in a test environment, and customize them to meet the requirements of your organization before attempting to use it in a production capacity. (See the legal notice here) |

Note: The workflow sample mentioned in this article can be downloaded from the Opalis project on CodePlex: https://opalis.codeplex.com |

Overview

The MS SQL Server Dump/Copy/Load workflow has been built as an example of how to automate a common workload for an MS SQL Server DBA. The workflow takes a target database, creates an MS SQL Server “dump”, copies this dump file to the file system of another MS SQL Server, and restores the backup. This sample has the following features:

**

Request Driven Provisioning – The workflow is designed to be called from an external system such as an email message or a Service Desk. The input request is parameterized such that the acceptance of the request is separate from the actual processing of the request. This means the workflow can be leveraged in a wider range of scenarios.

Fully Functional – The sample has been built such that it can actually run in a real-world environment, possibly with some very minor tweaking. This would include scenarios where a database would be transferred between two different systems that are remote with respect to the Opalis Action Server where the workflow is run.

Modular – The child workflows offer example of how to solve simple problems with Opalis such as making a backup of a MS SQL Server, restoring a backup (and renaming data files so they don’t conflict with existing data files), etc.

Demo Mode – The sample provides a simple and effective demonstration of process orchestration in action. It’s easy to set up a single-system instance of Opalis and get the sample running. There is a trigger provided that allows three separate database dump/copy/load requests to be processed in parallel for demonstration purposes. The sample provides the best demo when run from the Opalis Operator Console.

The MS SQL Server Dump/Copy/Load sample consists of three sub-folders of Opalis workflows:

“0. Setup” – This folder contains the basic workflows needed to configure the sample to work in a typical install of Opalis. This includes the creation of sample databases for using the sample in demo mode. Also, there is a workflow to generate a “sample” request that can be used as demo/test data or as a sample of how one could inject a request from an external system. The request shows the transfer of three databases (three requests running in parallel). Finally, there is a workflow that “resets” the demo by dropping the copied databases.

“1. MS SQL Server Dump-Copy-Load” – This is the primary top-line. It orchestrates Child Workflows into a process that results in the requested database transfer.

“2. Child Workflows” – These are the secondary modular workflows. These are fully-functional workflows, each of which performs a basic task (example: Dump a database, restore a database, copy a database backup, etc). While these workflows are fully functional, there is no reason why a workflow author couldn’t replace the workflow activities with a different set unique to their environment. For example, if one wanted to use FTP rather than Windows UNC for copying the database dump file, all one would have to do is edit the appropriate child workflow. Because the “copy” functionality is abstracted from the top line workflow, such changes are transparent to the overall process.

Setup

For most environments, the sample is “ready to run” out of the box. Naturally, it is possible the sample might need to be edited. For example, by default the sample assumes Windows authentication will permit access to the MS SQL Server databases and source/target services via the Opalis Integration Server service account.

The sample consists of a “Custom Start” that could be integrated with a request management system or triggered from another Opalis Workflow.

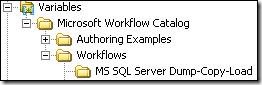

The sample can be run as a demo. There is a workflow that creates three “sample” databases (empty MS SQL Server database) and lets an author submit three database transfer requests. One can watch the workflow run in the Designer or (often a better demo) the Opalis Operator console. The required variables to configure the demo can be found in the Opalis Designer under “Variables:Microsoft Workflow Catalog:MS SQL Server Dump-Copy-Load”. There are four variables that must be configured if you wan to run the demo. Note: These are only used by the demo and are not needed for normal use of the sample.

“Source Demo Directory” – This is the location of the temp space used by the sample when the source database is “dumped” to a backup file. Note that this is temp space and once the sample finishes it cleans up after itself and deletes the files it creates.

“Source Demo Server” – This is name of the MS SQL Server host where the source database resides. The Opalis Action Server service must have rights to access the database being transferred. If one wishes, one may edit the sample and change workflow activities associated with accessing the MS SQL Server to use a different authentication mechanism.

“Destination Demo Directory” – This is the location of the temp space used by the sample when the source database is “restored” from a backup file. Note that this is temp space and once the sample finishes it cleans up after itself and deletes the files it creates.

“Destination Demo Server” – This is name of the MS SQL Server host where the database is restored. The Opalis Action Server service must have rights to access the database being transferred. If one wishes, one may edit the sample and change workflow activities associated with accessing the MS SQL Server to use a different authentication mechanism.

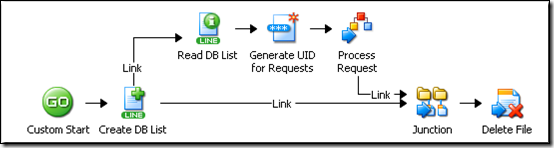

Once the four Variables have been configured, one can run the “3. Create 3 Sample Requests” workflow found in the MS SQL Server Dump/Copy/Load workflow folder. This workflow can be run from the Opalis Designer by selecting the Workflow and clicking the  button or by running it from the Opalis Operator Console (this generally provides for a better looking demonstration).

button or by running it from the Opalis Operator Console (this generally provides for a better looking demonstration).

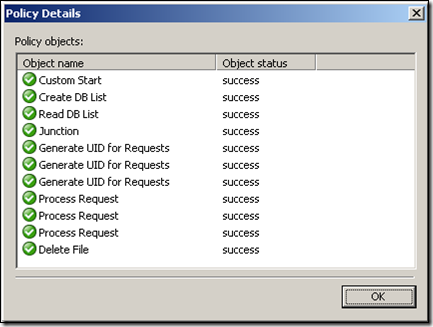

Verify the workflow ran properly by looking at the Log History that will eventually appear below the workflow itself. Double-clicking on the log entry will bring up the activity-by-activity status. Successful execution of each stop will show a series of  icons next to the workflow activity names.

icons next to the workflow activity names.

Solution Reset and Demo Data

Contained in the “0. Setup” folder is a workflows designed to support the Sample. “2. Drop Copied Databases (Reset Demo)” drops the database that are copied as a result of running the demo. After this workflow is run the demo is “reset” and the “3. Create 3 Sample Requests” can be run once again.

These workflows can be run from the Opalis Designer by selecting the Workflow and clicking the ![clip_image008[1] clip_image008[1]](https://msdntnarchive.blob.core.windows.net/media/TNBlogsFS/BlogFileStorage/blogs_technet/opalis/WindowsLiveWriter/MSSQLServerDumpCopyLoad_BF97/clip_image008%5B1%5D_thumb.jpg) button. Verify they ran properly by looking at the Log History that will eventually appear below the workflow itself. Double-clicking on the log entry will bring up the activity-by-activity status. Successful execution of each stop will show a series of

button. Verify they ran properly by looking at the Log History that will eventually appear below the workflow itself. Double-clicking on the log entry will bring up the activity-by-activity status. Successful execution of each stop will show a series of ![clip_image012[1] clip_image012[1]](https://msdntnarchive.blob.core.windows.net/media/TNBlogsFS/BlogFileStorage/blogs_technet/opalis/WindowsLiveWriter/MSSQLServerDumpCopyLoad_BF97/clip_image012%5B1%5D_thumb.jpg) icons next to the workflow activity names.

icons next to the workflow activity names.

Top-Level Workflows

There is only one top-level workflows that can be found in the “1. MS SQL Server Dump/Copy/Load” folder.

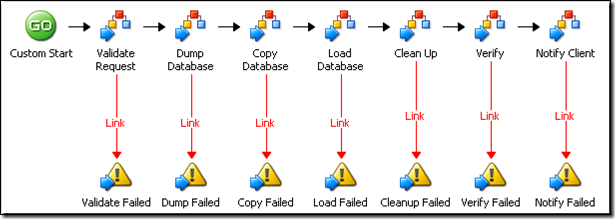

“1. Process Requests”

This is the top-level process that orchestrates the database dump/copy/load. It calls in sequence the child workflows that perform the key tasks associated with the automation. Exceptions are deal t with in the form of Opalis Platform Events (red links to the warning icons shown in the workflow). This workflow can be edited as needed to support changes in the process that may be desired or different exception handling processes.

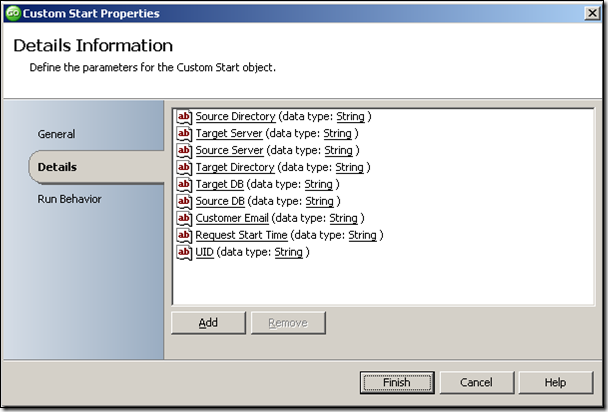

The sample is designed to run through any number of possible mechanisms. When run as a demo, the “3. Create 3 Sample Requests” (located in the “0. Setup” folder) creates a list of databases to be run through this process and then generates a Trigger for each request. For more generic use, one would trigger this workflow using the Custom Start parameters:

**

Source Directory – This is the temp directory on the source server where the database “dump” (an MS SQL Server Backup file) will be created. The files are deleted after they are created. The directory must exist prior to running the sample.

Target Server – This is the host name or IP address of the system hosting the MS SQL Server where the database is to be restored.

Source Server – This is the host name or IP address of the system hosting the MS SQL Server where the database to be copied resides.

Target Directory – This is the temp directory on the target server where the database will be restored. The database “dump” is copied into this folder. The files are deleted after they are restored. The directory must exist prior to running the sample.

Target DB – The name of the database once it is restored. The database name must NOT already be in use on the Target Server.

Source DB – This is the name of the database on the Source Server that is to be dumped. The database must exist prior to running the sample.

Customer Email – This is the email address where notification is to be sent once the process finishes the dump/copy/load.

Request Start Time – This is the time stamp to be used for evaluation of the length of time it takes to run the entire sample. The workflow allows this information to be provided from an external source so different ways of measuring the time to complete the request can be calculated. For example, if this time stamp was the time the policy was called then the workflow would report the total time to run the sample. One may wish to use the time the customer requested the transfer (which might include approval times, etc) such that the time evaluated was the total time from request to fulfillment. The total time is reported in the email message sent to the Customer Email Address at the end of the sample.

UID – A unique identifier for a given request. This can be pretty much anything (random string, etc) as long as it’s unique for each execution of the workflow. This would generally be a request ID or a tracking number originating from a Service Catalog or Service Desk. When run in “demo” mode, the UID is nothing more than a random 12-digit number.

Child Workflows

There are 7 child workflows that support the top-line processes. These are small workflows called from the top-line workflow as needed to complete the transaction. They all perform some small but useful task and could possibly be re-used in other workflows. It is possible that these workflows might need to be edited to deal with local variations in the environment where the sample is run:

Clean Up

This workflow knows how to delete the target and source files once the parent workflow finishes. It also drops the dump device created for the purpose of creating the database backup.

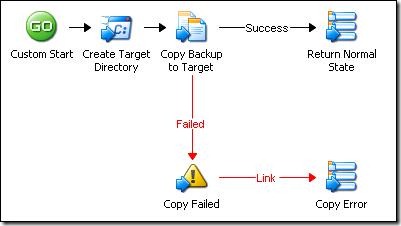

Copy Database

This is a workflow stub takes the database backup from the Target Directory and copies it to the Source Directory. It uses a Windows file copy, however the workflow could be edited to support other mechanisms (such as FTP, etc).

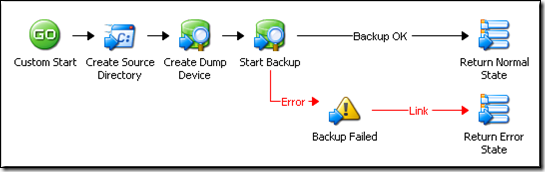

Dump Database

This workflow takes the Source Database on the Source Server and dumps it to the Target Directory. It creates an MS SQL dump device and then issues a MSSQL backup command to create the backup. It looks at the output of the SQL statement to verify the backup was successful. This workflow could be modified to use a different backup mechanism as long as the end result was restorable backup placed in the Target Directory folder.

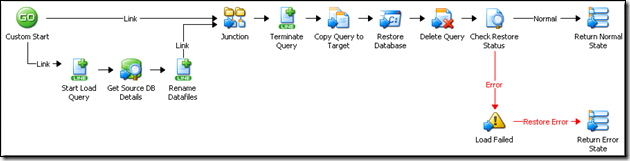

Restore Database

This workflow knows how to restore an MS SQL Server 2005 backup. It composes the MS SQL query required for this purpose. It looks at the data files in the backup and makes certain the files have a unique name on the system where the backup is being restored. Once the query is composed, the restore is run. The output of the SQL restore is checked for success. This workflow could be modified to restore a different type of backup as long as the type of backup being restored (and the steps to perform the restore) matched the type of backup that was created in the “Dump Database” child workflow.

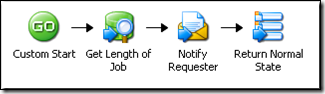

Notify Requester

This is a very simple workflow that no doubt will have to be edited for any given use of the sample. The “Notify Requester” activity contains information on connecting to an SMTP server that will need to be configured. That said, really this workflow is a stub into which one could put any sort of notification that the request was finished. This could include updating a Service Desk case, updating a Service Catalog, etc.

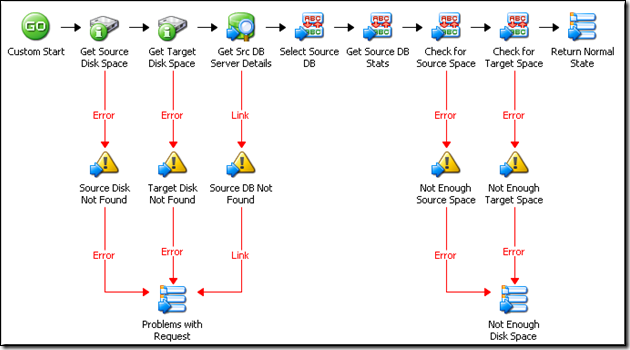

Validate Request

This workflow is used to validate that the request is likely to be successful prior to running the transaction. By putting the exception handling up front in a single workflow, the resulting child workflows are made much cleaner and easier to read. In this example, the exceptions dealt with involve checking that there is enough disk space on the source and target systems, that the source and target temp folders exist and also that the source database exists on the source MS SQL Server.

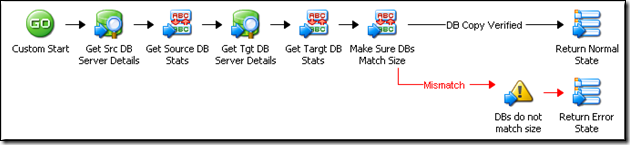

Verify

This workflow double-checks that the database is ready to hand-off to the customer. It gets the details of the source and target database after the restore finishes and makes sure the sizes of the database match. If they don’t match (or there are problems connecting to the target database) then an error condition is flagged.

| Share this post : |  |