Ensuring your Office 365 network connection isn’t throttled by your Proxy

One of the things I regularly run into when looking at performance issues with Office 365 customers is a network restricted by a lack of TCP window scaling at the network egress point, such as the proxy used to connect to Office 365. This affects all connections through the device, be it to Azure, bbc.co.uk or Bing. We'll concentrate on Office 365 here, but the advice applies regardless of where you're connecting to.

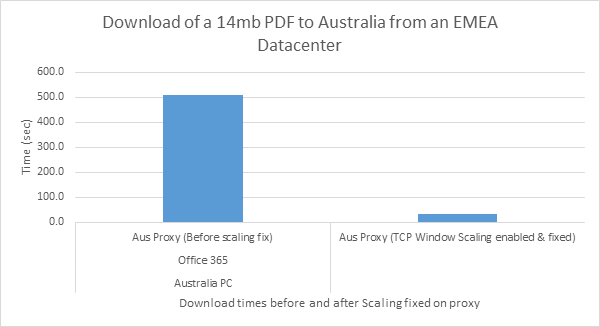

I'll explain shortly what this is and why it's so important but to give you an idea of its impact, one customer I worked with recently saw the download time of a 14mb PDF from an EMEA tenant to an Australia (Sydney) based client improve from 500 seconds before, to 32 seconds after properly enabling this setting. Imagine that kind of impact on the performance of your SharePoint pages or pulling large emails in from Exchange online, it's a noticeable improvement which can change Office 365 in some circumstances from being frustrating to use, to being comparable to on-prem.

Below is a visual representation of a real world example of the impact of TCP Window scaling on Office 365 use.

The premise is quite complicated, hence the very wordy blog for those of you interested in the detail. The resolution is easy, though: it's normally a single setting on a proxy or NAT device, so just skip to the end if you want the solution.

What is TCP Window scaling?

When TCP was first designed, a 16-bit field in the TCP header was reserved for the TCP window. This is essentially a receive buffer: you can send data to another machine up to the limit of this buffer without waiting for an acknowledgement from the receiver to say it has received the data.

Because the field is 16 bits wide, its maximum value is 2^16 - 1, or 65535 bytes. I'm sure when this was envisaged, it was thought it would be difficult to send such an amount of data so quickly that we could saturate the buffer.

However, as we now know, computing and networks have developed at such a pace that this value is now relatively tiny and it's entirely feasible to fill this buffer in a matter of milliseconds. When this occurs it causes the sender to back off sending until it receives an acknowledgement from the receiving machine which has the obvious effect of causing slow throughput.

A solution was provided in RFC 1323, which describes the TCP window scaling mechanism. This allows us to send a number in a 3-byte TCP option which is, in essence, a multiplier of the TCP window size described above.

It's referred to as the shift count (as we're essentially shifting the window value's bits to the left) and has a valid value of 0-14. With this value we can theoretically increase the TCP window size to around 1 GB.

The way we get to the actual TCP window size is (2^scaling factor) * TCP window size, so if we take the maximum possible values it would be (2^14) * 65535 = 1073725440 bytes, or roughly 1 GB.

| TCP Window Scaling enabled? | Maximum TCP receive buffer (bytes) |
| --- | --- |
| No | 65535 (64k) |
| Yes | 1073725440 (1 GB) |
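As a quick way to check the arithmetic above, here is a minimal Python sketch. The function and constant names are my own for illustration; the only facts it relies on are the 16-bit window field and the 0-14 shift count described above.

```python
MAX_WINDOW_FIELD = 0xFFFF  # largest value the 16-bit window field can hold (65535)
MAX_SHIFT_COUNT = 14       # largest shift count the window scale option allows


def effective_window(window_field: int, shift_count: int) -> int:
    """Return the real receive window in bytes for a header value and shift count."""
    if not 0 <= window_field <= MAX_WINDOW_FIELD:
        raise ValueError("window field must fit in 16 bits")
    if not 0 <= shift_count <= MAX_SHIFT_COUNT:
        raise ValueError("shift count must be between 0 and 14")
    # Shifting left by n bits is the same as multiplying by 2^n.
    return window_field << shift_count


print(effective_window(65535, 0))   # scaling disabled: 65535
print(effective_window(65535, 14))  # maximum possible: 1073725440
```

A shift count of 0 leaves the window exactly as advertised in the header, which is why a device that offers 0 is stuck at 64k.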

What is the impact of having this disabled?

The obvious issue is that if we fill this buffer, the sender has to back off sending any more data until it receives an acknowledgement from the receiving node, so we end up with a stop-start data transfer which will be slow. However, there is a bigger issue, and it's most apparent on high bandwidth, high latency connections such as an intercontinental link (from Australia to an EMEA datacentre, for example) or a satellite link.

To use a high bandwidth link efficiently we want to fill the connection with as much data as possible as quickly as possible. With the TCP window limited to 64k because window scaling is disabled, we can't get anywhere near filling this pipe, and so we can't use all the bandwidth available.

With a couple of bits of data we can work out exactly what the mathematical maximum throughput can be on the link.

Let's assume we've got a round trip time (RTT) from Australia to the EMEA datacentre of 300ms (RTT being the time it takes to get a packet to the other end of the link and back) and we've got TCP Window Scaling disabled so we've got a maximum TCP window of 65535 bytes.

So with these two figures, and an assumption the link is 1000Mbit/sec we can work out the maximum throughput by using the following calculation:

Throughput = TCP maximum receive windowsize / RTT

There are various calculators available online to help you check your maths with this. A good example, which I use, is https://www.switch.ch/network/tools/tcp_throughput/

- TCP buffer required to reach 1000 Mbps with RTT of 300.0 ms >= 38400.0 KByte

- Maximum throughput with a TCP window of 64 KByte and RTT of 300.0 ms <= 1.71 Mbit/sec.

So we need a TCP Window size of 38400 Kbyte to saturate the pipe and use the full bandwidth, instead we're limited to <=1.71 Mbit/sec on this link due to the 64k window, which is a fraction of the possible throughput.
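If you'd rather check the maths yourself than use an online calculator, the calculation can be sketched in a few lines of Python. The function names are hypothetical, and I've assumed decimal units throughout (1 Mbit = 1,000,000 bits), so the results differ slightly from the calculator output quoted above, which appears to use a different kilobyte convention.

```python
def max_throughput_mbps(window_bytes: int, rtt_ms: float) -> float:
    """Upper bound on throughput when one full window is sent per round trip."""
    bits_per_round_trip = window_bytes * 8
    return bits_per_round_trip / (rtt_ms / 1000) / 1_000_000


def window_needed_bytes(link_mbps: float, rtt_ms: float) -> float:
    """Window size required to keep the link full for a whole round trip."""
    return link_mbps * 1_000_000 * (rtt_ms / 1000) / 8


# 64k window over a 300 ms round trip: well under 2 Mbit/sec
print(round(max_throughput_mbps(65535, 300), 2))

# Window needed to fill a 1000 Mbit/sec link at 300 ms: roughly 37.5 million bytes
print(round(window_needed_bytes(1000, 300)))
```

The second function is just the first one rearranged, and its result is far larger than the 65535 bytes available without scaling.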

The higher the round trip time, the more obvious this problem becomes, but as the table below shows, even with an RTT of 1 ms (which is extremely good and unlikely to occur other than on the local network segment) we cannot fully utilise the 1000 Mbps on the link.

Presuming a 1000 Mbps link here is the maximum throughput we can get with TCP window scaling disabled:

| RTT (ms) | Maximum Throughput (Mbit/sec) |
| --- | --- |
| 300 | 1.71 |
| 200 | 2.56 |
| 100 | 5.12 |
| 50 | 10.24 |
| 25 | 20.48 |
| 10 | 51.20 |
| 5 | 102.40 |
| 1 | 512.00 |

So it's clear from this table that even with an extremely low RTT of 1ms, we simply cannot use this link to full capacity due to having window scaling disabled.

What is the impact of enabling this setting?

We used to see the occasional issue caused by this setting when older networking equipment didn't understand the Scaling Factor and this caused issues with connectivity. However it's very rare to see issues like this nowadays and thus it's extremely safe to have the setting enabled. Windows has this enabled by default since Windows 2000 (if my memory serves).

As we're increasing the window size beyond 64k the sending machine can therefore push more data onto the network before having to stop and wait for an acknowledgement from the receiver.

To compare the maximum throughput with the table above, the table below uses a modest scale factor of 8 applied to the maximum 64k TCP window value.

(2^8)*65535 = Maximum window size of 16776960 bytes

The following table shows we can saturate the 1000 Mbps link at a 100ms RTT when we have window scaling enabled. Even on a 300ms RTT we're still able to achieve a massively greater throughput than with window scaling disabled.

| RTT (ms) | Maximum Throughput (Mbit/sec) |
| --- | --- |
| 300 | 447.36 |
| 200 | 671.04 |
| 100 | 1342.08 |
| 50 | 2684.16 |
| 25 | 5368.32 |
| 10 | 13420.80 |
| 5 | 26841.60 |
| 1 | 134208.00 |
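Turning the table around, you can also ask what the smallest scale factor is that would let a given link be saturated at a given RTT. The sketch below is only an illustration under the same simple window / RTT model used above; the function name is my own, and it assumes the full 65535 window value is advertised.

```python
def min_shift_count(link_mbps: float, rtt_ms: float, window_field: int = 65535):
    """Smallest shift count (0-14) whose scaled window covers the link for one RTT.

    Returns None if even the maximum shift count of 14 is not enough.
    """
    # Bytes that must be in flight to keep the link busy for one round trip.
    bytes_in_flight = link_mbps * 1_000_000 * rtt_ms / 1000 / 8
    for shift in range(15):
        if (window_field << shift) >= bytes_in_flight:
            return shift
    return None


for rtt in (300, 100, 1):
    print(rtt, "ms ->", min_shift_count(1000, rtt))
```

For a 1000 Mbit/sec link this gives 10 at 300 ms, 8 at 100 ms and 1 at 1 ms, which lines up with the two tables: a scale factor of 8 is just enough at 100 ms, and without any scaling the link can't be filled even at 1 ms.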

In reality you'll probably not see such high window sizes, as we generally increase the window slowly as the transfer progresses; it's not good behaviour to instantly flood the link. However, with window scaling enabled you should see the window quickly rise above that 64k mark, and the throughput rate rises with it. Windows (from Vista onwards) has very complicated algorithms which work out the optimum TCP window size on a per link basis.

For example Windows might start with an 8k (multiplied by the scale factor) window and slowly increase it over the length of the transfer all being well.

How do I check if it's enabled?

So we've seen how essential this setting is, but how do you know if it's disabled in your environment? Well, there are a number of ways.

Windows should have this enabled by default but you can check by running (on a modern OS version)

Netsh int tcp show global

"Receive Window Auto-Tuning level" should be "normal" by default.
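If you need to check this on a lot of machines, the output can be parsed with a small script. The following Python is a rough sketch only: the helper names are hypothetical, the sample text is a trimmed illustration of the output rather than a capture from a real machine, and it assumes an English-language Windows install where the label reads as shown.

```python
import re
import subprocess


def autotuning_level(netsh_output: str):
    """Extract the auto-tuning level from 'netsh int tcp show global' output."""
    match = re.search(
        r"Receive Window Auto-Tuning Level\s*:\s*(\w+)",
        netsh_output,
        re.IGNORECASE,
    )
    return match.group(1).lower() if match else None


def query_autotuning_level():
    """Run netsh on the local machine (Windows only) and parse the result."""
    result = subprocess.run(
        ["netsh", "int", "tcp", "show", "global"],
        capture_output=True,
        text=True,
        check=True,
    )
    return autotuning_level(result.stdout)


# Illustrative, trimmed sample of the output (not a real capture).
sample = """
TCP Global Parameters
----------------------------------------------
Receive-Side Scaling State          : enabled
Receive Window Auto-Tuning Level    : normal
"""
print(autotuning_level(sample))
```

On a Windows machine you would call query_autotuning_level() instead of parsing the sample text. Anything other than "normal" is worth a closer look.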

On a proxy, the name of this setting varies by device, but it may be referred to as RFC1323, as is the case with Bluecoat.

https://kb.bluecoat.com/index?page=content&id=FAQ1006

This article suggests Bluecoat needs the TCP window value set above 64k and the RFC1323 setting enabled for it to use window scaling. A lot of the older Bluecoat devices I've seen at customer sites have the window set at 64k, which means scaling isn't used.

However, we can quickly check that it is being used by taking a packet trace on the proxy itself. Bluecoat and Cisco proxies have built in mechanisms to take a packet capture. You can then open the trace in Netmon or Wireshark and look for the following.

Firstly you need to find the session you are interested in and look at the TCP three-way handshake. The scaling factor is negotiated in the TCP options during the handshake, and Netmon also shows it in the description field, as in the example below.

Here you can see the proxy connecting to Office 365 and offering a scaling factor of zero, meaning we have a 64k window for Office 365 to send us data.

7692 12:28:03 14/03/2014 12:28:03.8450000 0.0000000 100.8450000 MyProxy contoso.sharepointonline.com TCP TCP: [Bad CheckSum]Flags=......S., SrcPort=43210, DstPort=HTTPS(443), PayloadLen=0, Seq=3807440828, Ack=0, Win=65535 ( Negotiating scale factor 0x0 ) = 65535

7740 12:28:04 14/03/2014 12:28:04.1440000 0.2990000 101.1440000 contoso.sharepointonline.com MyProxy TCP TCP:Flags=...A..S., SrcPort=HTTPS(443), DstPort=43210, PayloadLen=0, Seq=3293427307, Ack=3807440829, Win=4380 ( Negotiated scale factor 0x2 ) = 17520

In the TCP options of the packet you'll see this.

- TCPOptions:

+ MaxSegmentSize: 1

+ NoOption:

+ WindowsScaleFactor: ShiftCount: 0

+ NoOption:

+ NoOption:

+ TimeStamp:

+ SACKPermitted:

+ EndofOptionList:

If you're using Wireshark then it'll look something like this.

1748 2014-03-14 12:56:40.277292 My Proxy contoso.sharepointonline.com TCP 78 39747 > https [SYN] Seq=0 Win=65535 Len=0 MSS=1370 WS=1 TSval=653970932 TSecr=0 SACK_PERM=1

In the TCP options of the syn/synack packet the following is shown if window scaling is disabled.

Window scale: 0 (multiply by 1)
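If you have the raw bytes of the TCP options from a SYN packet, the shift count can also be pulled out programmatically. This is a minimal Python sketch with a hypothetical function name; it relies on the standard option layout (kind 0 is end of list, kind 1 is a no-op, kind 3 with length 3 is window scale), and the sample bytes are hand-built for illustration rather than taken from the trace above.

```python
def window_scale_from_options(options: bytes):
    """Return the shift count from raw TCP option bytes, or None if not offered."""
    i = 0
    while i < len(options):
        kind = options[i]
        if kind == 0:        # End of option list
            break
        if kind == 1:        # No-operation, a single padding byte
            i += 1
            continue
        if i + 1 >= len(options):
            break            # truncated option, stop parsing
        length = options[i + 1]
        if length < 2 or i + length > len(options):
            break            # malformed length, stop parsing
        if kind == 3 and length == 3:
            return options[i + 2]  # window scale: the shift count byte
        i += length
    return None


# Hand-built example: MSS 1370, NOP, window scale 0, NOP, NOP,
# timestamps (arbitrary values), SACK permitted, end of list.
syn_options = bytes.fromhex(
    "0204055a" "01" "030300" "0101" "080a" "26facdf4" "00000000" "0402" "0000"
)
print(window_scale_from_options(syn_options))  # 0: scaling offered, but no multiplier
```

Note the difference between a result of 0 (the option is present but the window is multiplied by 1) and None (the option isn't offered at all). Either way the window is capped at 64k.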

If you can't take a trace on the proxy itself, try taking one on a client connecting to the proxy and look at the syn/ack coming back from the proxy. If it is disabled there, then it's highly likely the proxy is disabling the setting on its outbound connections.

It's worth pointing out here that the scaling factor is negotiated in the three-way handshake and is then fixed for the lifetime of the TCP session. The window size in the header, however, is variable and can be increased (to a maximum of 65535) or decreased during the lifetime of the session, which is how we manage the autotuning in Windows.

Summary:

As we've seen here, TCP Window Scaling is an important feature and it's highly recommended that you ensure it is used on your network perimeter devices if you want to fully utilise the available bandwidth and have optimum performance for network traffic. Enabling the setting is normally very simple but the method varies by device. Sometimes it'll be referred to as TCP1323 and sometimes TCP Window Scaling but refer to the vendor supplying the device for specific instructions.

Having this disabled can have quite a performance impact on Office 365 and also any other traffic flowing through the device. The impact will become greater the larger the bandwidth and larger the RTT, such as a high speed, long distance WAN link.

https://technet.microsoft.com/en-us/magazine/2007.01.cableguy.aspx is a good article with a bit more detail on areas of this subject I haven't covered here.

This setting is generally safe to use and it's rare that we see issues with it nowadays. Just keep an eye on the traffic after enabling it and look out for dropped TCP sessions or poor performance, which may indicate there is a device on the route which doesn't understand or deal with window scaling well.

Ensuring this setting is enabled should be one of the first steps you take when assessing whether your network is optimised for Office 365. Having it disabled can have an enormous impact on the performance of the service, and it can be a very tricky problem to track down until you know what to look for and how to look for it. And now you do!