Know your content - Part 2

In a previous post I showed a script to analyze your SharePoint content. That script came from a customer engagement where I was able to run it on the SharePoint server. I am currently on an engagement with the same requirements, but this time I will not have any access to the server.

I was wondering how to get the same metrics without server access, and the only solution that came to mind was to use the SharePoint web services. I will mainly use the following two web services:

- https://msdn.microsoft.com/en-us/library/webs.webs(v=office.12).aspx - Methods for working with sites and subsites

- https://msdn.microsoft.com/en-us/library/lists.lists(v=office.12).aspx - Methods for working with lists and items

With these two web services I can gather information about my content from the client side, provided that I have access to the content. At the end of this post you will find the entire PowerShell code to get the content information.
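As a rough illustration of the client-side approach, the sketch below builds the endpoint address of a web service from a site URL and then filters a list collection the same way the full script does. The helper name `Get-ServiceUri`, the server name and the sample XML are assumptions made for this example only; a real `GetListCollection` response carries many more attributes per list.

```powershell
# Hypothetical helper (not part of the original script): build the WSDL
# address of a SharePoint web service such as "Webs" or "Lists".
function Get-ServiceUri {
    param ([string]$WebUrl, [string]$Service)
    # drop a trailing page name and slash so _vti_bin is appended to the site itself
    $base = $WebUrl.Replace("/default.aspx", "").TrimEnd("/")
    return ("{0}/_vti_bin/{1}.asmx?WSDL" -f $base, $Service)
}

# Made-up XML shaped roughly like a GetListCollection result
[xml]$sample = @'
<Lists>
  <List Title="Documents" ServerTemplate="101" ItemCount="42" Hidden="False" />
  <List Title="Tasks" ServerTemplate="107" ItemCount="7" Hidden="False" />
  <List Title="Style Library" ServerTemplate="101" ItemCount="120" Hidden="False" />
  <List Title="Master Page Gallery" ServerTemplate="116" ItemCount="15" Hidden="True" />
</Lists>
'@

# lists we are not interested in (shortened version of the script's noise list)
$noiseLists = @("Style Library", "Form Templates")

# keep only visible lists that are not on the noise list
$visible = $sample.Lists.List | Where-Object {
    $_.Hidden -eq "False" -and $noiseLists -notcontains $_.Title
}

# server template 101 marks a document library, everything else counts as a list
$numLibs  = @($visible | Where-Object { $_.ServerTemplate -eq "101" }).Count
$numLists = @($visible).Count - $numLibs

$listsUri = Get-ServiceUri "http://server/sites/demo/default.aspx" "Lists"
Write-Host ("Endpoint: {0}" -f $listsUri)
Write-Host ("Libraries: {0}, Lists: {1}" -f $numLibs, $numLists)
```

Against a real site, the address returned by the helper would be passed to `New-WebServiceProxy`, as the full script does further down.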

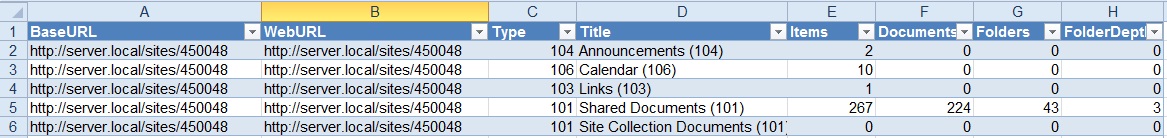

The code will create a .csv-file that you can then analyze with Excel. The following screenshot will show you an example of such an analysis:

PowerShell code:

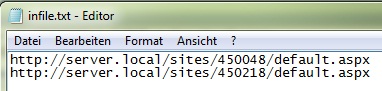

The infile for this code is simply a notepad file that contains the URLs that should be analyzed. The following screenshot will show you an example:

# THIS SCRIPT WILL:

# ------------------------------------------

# This script analyzes the content of a given list of SharePoint sites.

# In particular, the lists and libraries are analyzed to get an impression of how much information

# is stored in the site and how complex the structure of the site is

clear-host

# activate/deactivate debug mode

$DEBUG = $false

# URLs to be analyzed will be fetched from this file

$inFile = "infile.txt"

# Parameters for this script

$noiseLists = @("Content and Structure Reports", "Form Templates", "Reusable Content", "Style Library", "Workflow Tasks")

$fileInfo = "AnalysisResults.csv"

$errorFile = "error.txt"

# *************************************

# S C R I P T L O G I C

# Do not edit anything from now on

# *************************************

# to speed up execution we keep a list of all processed URLs so that they are not analyzed multiple times

$global:processedURLs = @()

# clean the error variable

$error.clear()

# *************************************

# F U N C T I O N S

# *************************************

function AnalyzeWeb {

param ($siteUrl, $webURL)

# initialize variable that will capture our needed data

$numLists = 0

$numLibs = 0

$numItems = 0

$numFolders = 0

Write-Host ("Analyzing web with URL: {0}" -f $webURL)

# set the URI of the webservice

$uriLists = ("{0}/_vti_bin/Lists.asmx?WSDL" -f $webURL)

# create the web service handles

$wsLists = New-WebServiceProxy -Uri $uriLists -Namespace SpWs -UseDefaultCredential

# call the needed web service method

$xmlListCollectionResult = $wsLists.GetListCollection()

foreach ($oneList in $xmlListCollectionResult.List) {

if ($oneList.Hidden -eq $false) {

if (!($noiseLists -contains $oneList.Title) ) {

$xmlListResult = $wsLists.GetList($oneList.Title)

Write-Host (" List '{0}' ({1}, {2}) has '{3}' items." -f $xmlListResult.Title, $xmlListResult.ID, $xmlListResult.ServerTemplate, $xmlListResult.ItemCount)

# capture data about the list/library

if ($xmlListResult.ServerTemplate -eq "101") {

$numLibs++

} else {

$numLists++

} # if..else

# Analyze the library / list content

$x = New-Object "System.Xml.XmlDocument"

$q = $x.CreateElement("Query")

$v = $x.CreateElement("ViewFields")

$qo = $x.CreateElement("QueryOptions")

# empty query: return all items
$q.set_InnerXml("")

$qo.set_InnerXml("<Folder></Folder>")

# clear capture data

$numFolders = 0

$numItems = 0

$maxFolderDepthLocal = 0

# Call the web service. Details about parameterization: https://msdn.microsoft.com/en-us/library/lists.lists.getlistitems(v=office.12).aspx

$xmlListItemResult = $wsLists.GetListItems($xmlListResult.ID, $null, $q, $v, "5000", $qo, $null)

foreach ($oneListItem in $xmlListItemResult.data.row) {

if ($oneListItem.ows_FSObjType -ne $null) {

if ($DEBUG) { Write-Host (" ID: '{0}', Title: '{1}', FSObjType: '{2}'" -f $oneListItem.ows_ID, $oneListItem.ows_Title, $oneListItem.ows_FSObjType) }

# ows_FSObjType has the form "<ID>;#<type>", where type 1 marks a folder

$temp = $oneListItem.ows_FSObjType.Split(";#")

if ($temp[-1] -eq "1") {

if ($DEBUG) { Write-Host ("*-*-* FOUND the folder '{0}' *-*-*" -f $oneListItem.ows_Title) }

# calculate folder depth

if ($xmlListResult.ServerTemplate -eq "101") {

# $tempDepth = $oneListItem.ows_ServerUrl.length - ($oneListItem.ows_ServerUrl.Replace("/","")).Length - 3

$tempDepth = $oneListItem.ows_EncodedAbsUrl.replace($webUrl,"").length - $oneListItem.ows_EncodedAbsUrl.replace($webUrl,"").replace("/","").length - 1

if ($maxFolderDepthLocal -lt $tempDepth) {

$maxFolderDepthLocal = $tempDepth

if ($DEBUG) { Write-Host (" Setting new local folder depth: {0}" -f $maxFolderDepthLocal) }

} # if

$numFolders++

} # if ServerTemplate -eq 101

} # if

} # if

if ($oneListItem.ows_DocIcon -ne $null) {

if ($DEBUG) { Write-Host " ======> Found a document <====== " }

$numItems++

} # if

} # foreach $oneListItem

# dump info to lib log file

# ("BaseURL`tWebURL`tType`tTitle`tItems`tDocuments`tFolders`tFolderDepth") | Out-File $fileInfo

("{0}`t{1}`t{2}`t{3}`t{4}`t{5}`t{6}`t{7}" -f $siteUrl, $webURL, $xmlListResult.ServerTemplate, ("{0} ({1})" -f $xmlListResult.Title, $xmlListResult.ServerTemplate), $xmlListResult.ItemCount,$numItems, $numFolders, $maxFolderDepthLocal) | Out-File $fileInfo -append

} # if

} # if

} # foreach $oneList

} # function AnalyzeWeb

function startAnalysis {

param ($url)

# clean up the URL

$url = $url.Replace("/default.aspx", "")

# set the URI of the webservice

$uri = ("{0}/_vti_bin/Webs.asmx?WSDL" -f $url)

# create the service handle

$wsWebCollection = New-WebServiceProxy -Uri $uri -Namespace SpWs -UseDefaultCredential

# check if an error occurred and log the info to the error file

if ($error.count -ne 0) {

("Error with URL: '{0}'" -f $uri) | Out-File $errorFile -append

$error.clear()

}

# call the needed web service method

$xmlWebCollectionResult = $null

$xmlWebCollectionResult = $wsWebCollection.GetAllSubWebCollection()

if ($xmlWebCollectionResult -ne $null) {

# analyze the sites within site collection

foreach($oneWeb in $xmlWebCollectionResult.Web) {

if ($global:processedURLs -contains $oneWeb.Url) {

if ($DEBUG) { Write-Host ("Skipping URL '{0}' since it has already been processed" -f $oneWeb.Url) }

} else {

if ($DEBUG) { Write-Host ("Adding URL '{0}' to the processed list" -f $oneWeb.Url) }

$global:processedURLs += $oneWeb.Url

# run analysis

AnalyzeWeb $url $oneWeb.Url

} # if..else

} # foreach

} else {

Write-Host ("Cannot access site at URL: {0}" -f $url) -foregroundcolor red

} # if..else

} # function startAnalysis

# *************************************

# M A I N

# *************************************

# Create the lib output file

$timeStamp = (Get-Date -format "yyyyMMdd_HHmmss")

$fileInfo = $fileInfo.Replace(".csv", ("_{0}_{1}.csv" -f $timeStamp, $inFile.Replace(".txt","")))

$errorFile = $errorFile.Replace(".txt", ("_{0}_{1}.txt" -f $timeStamp, $inFile.Replace(".txt","")))

if ($DEBUG) {

Write-Host ("fileInfo = {0}" -f $fileInfo)

}

# Create the header information of log files

("BaseURL`tWebURL`tType`tTitle`tItems`tDocuments`tFolders`tFolderDepth") | Out-File $fileInfo

measure-command {

$i = 1

# wrap in @() so that a file with a single URL still yields an array

$inFileContent = @(Get-Content $inFile)

foreach ($oneUrl in $inFileContent) {

Write-Host ("[{0}/{1}] {2}" -f $i, $inFileContent.Length, $oneUrl) -backgroundcolor blue -foregroundcolor yellow

startAnalysis $oneUrl

$i++

} # foreach

} # measure-command

Write-Host "Finished."
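If Excel is not at hand, the result file can also be summarized directly in PowerShell. The following is only a sketch: the sample rows and the temporary file name are made up for illustration, and only the column names are taken from the header line the script writes.

```powershell
# Made-up sample rows in the same tab-separated layout as the result file
$sampleFile = Join-Path ([System.IO.Path]::GetTempPath()) "AnalysisResults_sample.csv"

@(
    "BaseURL`tWebURL`tType`tTitle`tItems`tDocuments`tFolders`tFolderDepth",
    "http://server/sites/a`thttp://server/sites/a`t101`tDocuments (101)`t42`t40`t2`t1",
    "http://server/sites/a`thttp://server/sites/a`t107`tTasks (107)`t7`t0`t0`t0",
    "http://server/sites/a`thttp://server/sites/a/sub`t101`tDocuments (101)`t10`t7`t3`t3"
) | Out-File $sampleFile

# read the file back using the tab character as delimiter
$rows = Import-Csv -Path $sampleFile -Delimiter "`t"

# one summary row per web: number of lists, total items, deepest folder level
$summary = $rows | Group-Object WebURL | ForEach-Object {
    [pscustomobject]@{
        WebURL         = $_.Name
        Lists          = $_.Count
        Items          = ($_.Group | ForEach-Object { [int]$_.Items } | Measure-Object -Sum).Sum
        MaxFolderDepth = ($_.Group | ForEach-Object { [int]$_.FolderDepth } | Measure-Object -Maximum).Maximum
    }
}

$summary | Format-Table -AutoSize

# remove the temporary sample file again
Remove-Item $sampleFile
```

To run this against a real result, point `Import-Csv` at the generated `AnalysisResults_...csv` file instead of the sample file.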