Using SCOM to Detect Failed Pass the Hash attacks (Part 2)

Disclaimer: Due to changes in the MSFT corporate blogging policy, I’m moving all of my content to the following location. Please reference all future content from that location. Thanks.

A couple of weeks back, I wrote a piece on creating some rules to potentially detect pass the hash attacks in your environment. This is the second article in the series and, if time permits, one of many more I hope to write over the next year or so on using SCOM to detect active threats in an environment. To start, I want to apologize for the delay; my lab crashed on me and I had to spend way too much time fixing it.

Today we will look at failed attempts. When an attacker compromises a machine, they can use Windows Credential Editor or mimikatz to enumerate the users that are logged on as well as the hashes stored by the LSA. What they do not necessarily know is what permissions those accounts have; as such, the only thing they can do is attempt to log in with these stored credentials until they find one that works, giving them complete access to the victim’s environment. They accomplish this by moving laterally throughout the organization, desktop to desktop or server to server, until they finally steal the credentials of a domain admin account, giving them the keys to the kingdom. That reason alone is why domain admin accounts should never sign on to a desktop, nor should they sign on to a server or run as a service. On average, it takes an attacker approximately 2 days to go from infiltrating a desktop to stealing the keys to the kingdom. It also takes the victim the better part of a year to realize that they’ve been owned.

As a reminder, the goal here is to avoid noise generated by normal events. I’ve seen several implementations of security monitoring in SCOM which do nothing but generate thousands of alerts that no one will look at. Alert management is the most difficult aspect of a SCOM environment, and even with good processes in place, it is not realistic to ask a person or team to sift through hundreds, if not thousands, of alerts generated by standard, everyday activity. This accomplishes nothing but reinforcing the check-the-monitoring-box mentality that so many organizations already have.

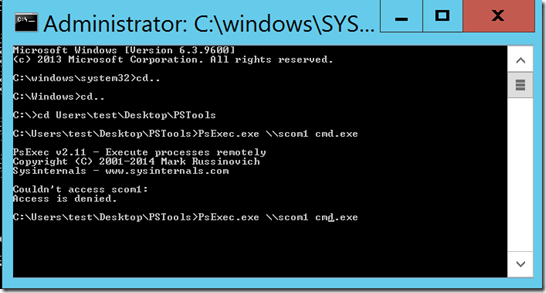

As for the experiment, I’m going to use my compromised system to steal accounts, much like I did in part 1. The big difference is that instead of the account having the domain admin rights that the attacker desires, it will be nothing more than a standard account which has no access to other machines in the environment. The process is pretty much the same as what I did in part 1, and as such the rule I created to look for a credential swap flagged immediately. That’s good. And, as expected, I see the following result when launching a remote PsExec session to my server.

Essentially, I could not move laterally in this scenario, but how this looks surprised me. I expected this to lead to some failures (4625 events) that could be tracked. However, this turns out not to be the case. For one, the tier 1 monitor that I set up in part 1 of this series flagged. I was not expecting that, and sure enough, I see 4624 events on my SCOM server for the user account whose credentials I used. Each was followed immediately by a 4634 event indicating the session was destroyed. There were no 4625 events on this server, nor were there any on my domain controller. I’m not quite sure why authentication is handled this way, but then again, I’ve never really looked at it this closely. Unfortunately, the 4624/4634 pair does me little good on its own, as it is a very common sequence of events, but since my other rules still alerted in this scenario, I have visibility into this attempt. I attempted a few other means with similar results. I’m still generating a 4624, with the only difference being the 4634 that follows immediately after it. Attacking a DC with a non-DA account yielded the same results. There was a 4624 followed immediately by a 4634 event, and the 4624 was picked up by my previous rules, so there’s no need to create another rule.
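If you want to confirm the same 4624-then-4634 pattern in your own lab, something like the following Python sketch can pair the two events from an exported log. To be clear, this is not a SCOM rule and not something I would alert on, since the sequence is far too common; it is only a way to eyeball short-lived sessions. The dictionary keys (`event_id`, `time`, `logon_id`, `user`) and the two-second threshold are assumptions made for illustration, not fields from any particular export format.

```python
from datetime import datetime, timedelta


def short_lived_sessions(events, max_lifetime=timedelta(seconds=2)):
    """Pair each 4624 (logon) with the 4634 (logoff) sharing its logon ID.

    `events` is an iterable of dicts sorted by time. The key names are
    hypothetical; adapt them to however you export your Security log.
    Returns (logon_id, user, lifetime) for sessions that were destroyed
    within `max_lifetime` of being created.
    """
    open_logons = {}
    results = []
    for ev in events:
        if ev["event_id"] == 4624:
            # Remember the logon until we see its logoff.
            open_logons[ev["logon_id"]] = ev
        elif ev["event_id"] == 4634:
            start = open_logons.pop(ev["logon_id"], None)
            if start is None:
                continue  # logoff without a logon in this export
            lifetime = ev["time"] - start["time"]
            if lifetime <= max_lifetime:
                results.append((ev["logon_id"], start["user"], lifetime))
    return results


# Made-up sample data: one session torn down immediately, one that lasts.
t0 = datetime(2016, 1, 1, 12, 0, 0)
sample = [
    {"event_id": 4624, "time": t0, "logon_id": "0x1a2b", "user": "LAB\\stduser"},
    {"event_id": 4624, "time": t0, "logon_id": "0x9f00", "user": "LAB\\svc_sql"},
    {"event_id": 4634, "time": t0 + timedelta(seconds=1), "logon_id": "0x1a2b", "user": "LAB\\stduser"},
    {"event_id": 4634, "time": t0 + timedelta(minutes=30), "logon_id": "0x9f00", "user": "LAB\\svc_sql"},
]
hits = short_lived_sessions(sample)
```

In the made-up sample, only the `LAB\stduser` session is reported, because its logoff arrives one second after the logon.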

I suspect that Rule 2 in my previous post will likely be the noisiest. I had to turn it off in my lab for SQL servers and DCs due to standard traffic. That’s fine, as it still has some value, but I suspect that some types of normal front end to back end communication will trigger it. That doesn’t do any favors for the person or team responsible for security monitoring. An occasional false positive is acceptable, but at the end of the day, I prefer my alerts to be actionable every time. If they are not, SCOM quickly turns into that tool that no one uses because everything that comes out of it is worthless. This brings me to another point. If you’re having problems with Rule 2 in particular (though Rule 3 may come into play here too), then look at Parameter 19 a bit more closely. That parameter contains the source IP address. While I wouldn’t consider it a best practice, a tier 1 environment may have service accounts and applications that connect to other systems within tier 1. Lots of that is probably not a good thing, but it is somewhat normal for a front end/back end configuration. Any type of web server/DB pairing is likely to trigger false positives. However, the anatomy of an attack is a bit different.

Attackers rarely get to start at the server level or the DC level. They almost always start in tier 2. Security professionals refer to this as “assumed breach.” Simply put, no matter how much you train people, roughly 10% of your environment is not going to verify the source but will instead click on the crazy cat link or whatever the popular meme of the day is. Security teams unfortunately cannot stop this. That said, this is also your attacker’s entry point into the environment. Chances are good that someone is sitting in your tier 2 environment right now, because getting there through one of the myriad Flash or Java vulnerabilities is pretty easy to do. But that also gives us a unique way to search for attackers, because SCOM can use wildcards. Since Parameter 19 contains an IP address, you can use wildcards in these rules to filter down the results so that you only get alerts when these anomalies are detected from a tier 2 IP address accessing a tier 1 or tier 0 system. Other than for your IT staff, this shouldn’t be happening at all. This would have to be customized to each environment, but it is not something that would be terribly difficult to do.
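To make the wildcard idea concrete, here is a rough Python sketch of the filtering logic, written outside of SCOM purely for illustration. The subnet patterns and the admin workstation address are hypothetical placeholders; you would substitute whatever address ranges your tier 2 desktops and IT staff actually use. The only thing taken from the rules themselves is that Parameter 19 of the event carries the source IP address.

```python
from fnmatch import fnmatchcase

# Hypothetical wildcard patterns describing tier 2 (desktop) address space.
TIER2_PATTERNS = ["10.20.*", "192.168.50.*"]

# Hypothetical IT staff workstations that legitimately reach tier 0/1 systems.
ADMIN_WORKSTATIONS = {"10.20.5.10"}


def is_tier2_source(source_ip, patterns=TIER2_PATTERNS):
    """True if the address matches any tier 2 wildcard pattern."""
    return any(fnmatchcase(source_ip, pattern) for pattern in patterns)


def should_alert(event_params):
    """Decide whether a logon event seen on a tier 0/1 system is worth an alert.

    `event_params` is the list of event parameters in order, so
    Parameter 19 (the source IP address) sits at index 18.
    """
    if len(event_params) < 19:
        return False  # not the event shape we expect
    source_ip = event_params[18].strip()
    if source_ip in ADMIN_WORKSTATIONS:
        return False  # expected traffic from IT staff
    return is_tier2_source(source_ip)


# Made-up events: only Parameter 19 is filled in.
from_desktop = [""] * 18 + ["10.20.7.33"]
from_server = [""] * 18 + ["10.10.1.5"]
from_admin = [""] * 18 + ["10.20.5.10"]
```

With these made-up values, `should_alert(from_desktop)` is true while the server and admin workstation examples are not. Note the trailing dot before the asterisk in each pattern: `10.20.*` does not match `10.200.1.5`, whereas a sloppier `10.20*` would.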

One final note: I’m working on a management pack that contains these rules, along with additional security rules and other security-related items that SCOM can provide. My only test environment is my lab, which is hardly a production-grade environment, so I do welcome feedback. While I cannot support this management pack, I can provide it if you are interested in trying it out. My main goal is to keep the noise to a minimum so that each alert is actionable. While that is not always easy to do, trying this out in other environments will go a long way toward getting it to that point. If this is something you are interested in testing, please hit me up on LinkedIn.
