Using SCOM to Detect Successful Pass the Hash attacks (Part 1)

Disclaimer: Due to changes in the MSFT corporate blogging policy, I’m moving all of my content to the following location. Please reference all future content from that location. Thanks.

Those who know me know I’ve been using my free time to explore whether SCOM can help identify when an advanced persistent threat is active in your environment. Most IT organizations have this problem, given that the average attacker isn’t discovered until more than 250 days after they have owned the environment. Many are never found. Part of the problem is the massive amount of log information that needs to be parsed in order to spot an active presence in the environment. There are products you can buy, such as Microsoft ATA or Splunk, or you can forward log information to Azure and use OMS. Products like these can be expensive, but by the same token they are much better at log analytics than a tool like SCOM. That said, my goal is to create a poor man’s solution for identifying a possible PtH attack in progress. I’ll do so by seeing what is generated when I reproduce an attack in my lab. This entry covers successful elevation attempts. My next entry will cover my attempt to detect an attacker who is attempting to elevate with a non-DA account.

To start, I downloaded all the necessary tools to a machine. On that machine, I made a standard domain account a local admin. This is because a pass the hash attack requires local admin rights on the machine in order to read from the LSA. To be clear, for the average attacker, getting local access to a machine, any machine, is easy. Typically this starts at tier 2 with a targeted phishing attack, and despite the fact that we try to educate users never to open that email from a non-trusted source, they do it anyway, to the tune of roughly 11% of users. I’m not bothering with this piece, as I’m assuming that an attacker can get to this point fairly easily… reality is they can.

Step 1 – switching credentials:

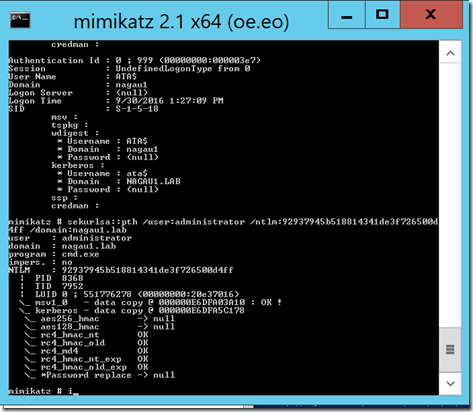

The first thing I’ve done is simply execute mimikatz and launch a local command shell under a different set of creds than the ones I’m running under. The user account I’m signed in with is “test”. I have a domain admin on another session, and unbeknownst to this DA, my test account is compromised. This is straightforward:

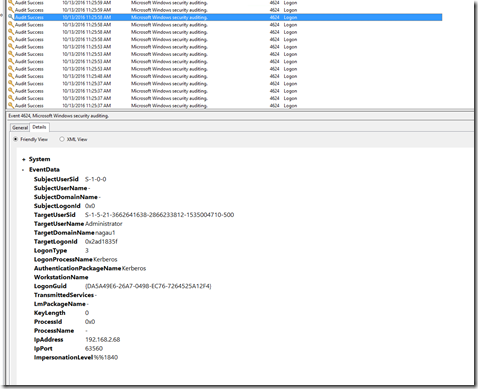

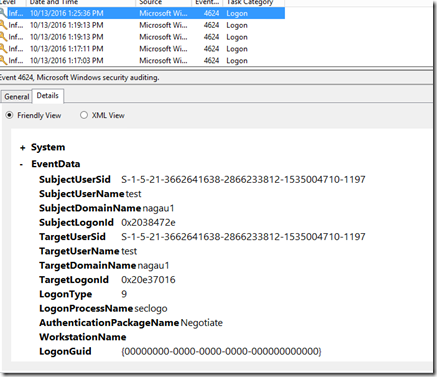

I grabbed the hash and launched a command shell. This does leave a trace. Using mimikatz to launch a command line under a domain admin’s credentials generates this:

Each of these items is parameterized, which makes it somewhat easy to craft a rule in SCOM. The trick is making sure that the events in question are unique to this type of attack. If they aren’t, all I’ve done is create a whole bunch of noise that will be ignored. As luck would have it, the LogonProcessName and LogonType fields are distinctly different from the average 4624 event in my environment. Let’s hold on to that thought for the moment.
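To make the parameter numbering concrete, here is a minimal Python sketch that numbers the `Data` elements of an event's XML in order, starting at 1, which is how I understand the SCOM parameter positions to be assigned. The sample event is made up and trimmed to the first eleven fields; the values are placeholders, not data captured from my lab, and the field order should be checked against your own 4624 events.

```python
import xml.etree.ElementTree as ET

# Namespace used by Windows event XML.
NS = {"e": "http://schemas.microsoft.com/win/2004/08/events/event"}

# Trimmed, made-up 4624 event. Values are placeholders, not captured lab data.
SAMPLE_EVENT_XML = """
<Event xmlns="http://schemas.microsoft.com/win/2004/08/events/event">
  <System><EventID>4624</EventID></System>
  <EventData>
    <Data Name="SubjectUserSid">S-1-0-0</Data>
    <Data Name="SubjectUserName">-</Data>
    <Data Name="SubjectDomainName">-</Data>
    <Data Name="SubjectLogonId">0x0</Data>
    <Data Name="TargetUserSid">S-1-5-21-0-0-0-1000</Data>
    <Data Name="TargetUserName">example-user</Data>
    <Data Name="TargetDomainName">EXAMPLE</Data>
    <Data Name="TargetLogonId">0x1</Data>
    <Data Name="LogonType">3</Data>
    <Data Name="LogonProcessName">ExampleProc</Data>
    <Data Name="AuthenticationPackageName">ExamplePkg</Data>
  </EventData>
</Event>
"""


def event_params(event_xml: str) -> dict[int, tuple[str, str]]:
    """Map 1-based parameter numbers to (field name, value) pairs."""
    root = ET.fromstring(event_xml.strip())
    data = root.findall("e:EventData/e:Data", NS)
    return {
        number: (item.get("Name", ""), (item.text or "").strip())
        for number, item in enumerate(data, start=1)
    }


params = event_params(SAMPLE_EVENT_XML)
for number in (6, 9, 10, 11):
    name, value = params[number]
    print(f"Param {number}: {name} = {value}")
```

Running the same function against an event exported from your own security log is a quick way to confirm which parameter number carries which field before building a rule on it.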

Step 2 – Lateral Movement:

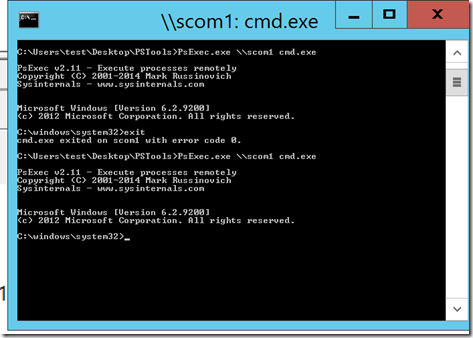

Now I’m going to use those credentials to hit another machine. This is why PtH attacks are of such concern: it is easy. From the command prompt that opened, I simply ran psexec against a remote system. My logged-on user has no rights to this system. However, I’m in.

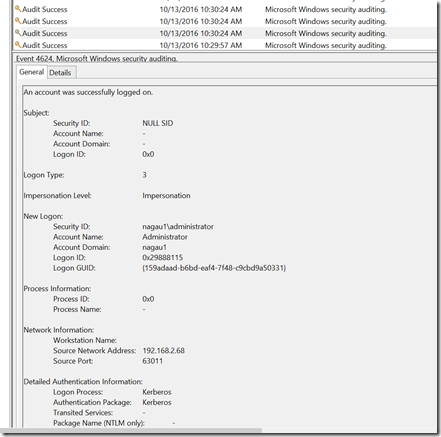

I’d note that I’m trying this on my SCOM server, but moving to my DC in step 3 was just as easy, though it did kick off my logged-on user as an unexpected side effect. Same command, different machine. My generic user account now owns my environment. I found this event in my SCOM machine’s security log:

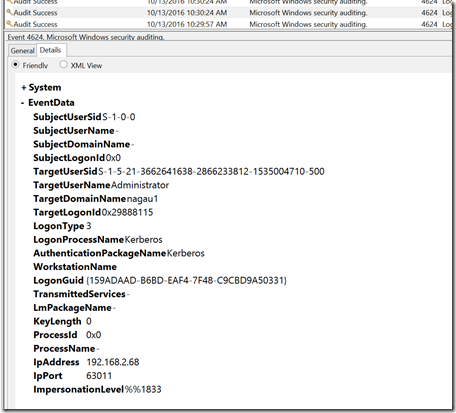

The XML view is a bit more complex as the impersonation level for whatever reason doesn’t translate properly. Instead of seeing “Impersonation” in the XML, I simply see a code (%%1833).

That’s fine. Unfortunately, that code is not unique to this type of movement.
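Since the XML carries the code rather than the friendly name, a small lookup helps when reading raw events. This is a sketch only: the %%1833 entry comes from the event above, while the other two entries are my recollection of Microsoft's 4624 documentation and should be verified before you rely on them.

```python
# Message codes seen in the ImpersonationLevel field of the event XML.
IMPERSONATION_LEVELS = {
    "%%1832": "Identification",  # assumption: from the 4624 documentation
    "%%1833": "Impersonation",   # observed in the event described above
    "%%1840": "Delegation",      # assumption: from the 4624 documentation
}


def impersonation_name(raw: str) -> str:
    """Return the friendly name for a code, or the raw value if unknown."""
    return IMPERSONATION_LEVELS.get(raw.strip(), raw)


print(impersonation_name("%%1833"))
```

Unknown codes are passed through unchanged so that nothing is silently mislabeled.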

Step 3 – The DC:

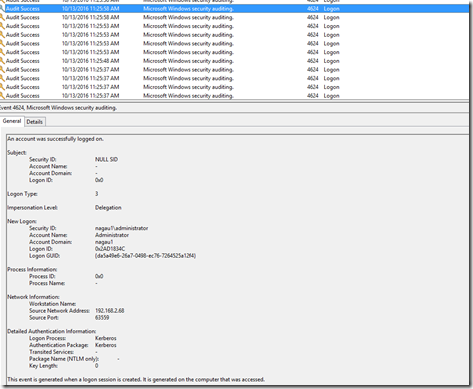

On the DC, I see the events pictured below. The problem is that I see a bunch of these, so at this point I’m going to have to configure some sort of alert flood protection. The other odd behavior here is that the impersonation level on the DC is set to Delegation, whereas on the member server it was simply Impersonation.

The other part is that there isn’t much in the way of breadcrumbs. The impersonation level is “Delegation”, but this is hardly uncommon for 4624 events. It does, however, at least in my small and limited sample, appear to be unusual for a domain admin to sign on to a machine with an impersonation level of Delegation. I could be wrong, and that’s part of why I’m publishing this. Computer accounts commonly have this type of impersonation, but user accounts do not. This will (hopefully) give me something unique to key on in SCOM.

Now that we can see the behavior of this attack, we can potentially monitor for it. DISCLAIMER: This is me in my lab. I’m writing this in part for my own benefit and in part because my lab is not a production environment. My goal here is to be able to monitor for an attack in progress, but to do so in a way that does not generate noise. I cannot emphasize enough that your organization will need a good alert management process in order to actually respond properly to these alerts. I’m hoping that some people with some better lab environments as well as a security background can potentially reproduce this as well as verify that the noise level in a quiet state is low.

So on to the rules. I want to test this in some environments other than my lab to see if this holds up. It’s quite possible that it does not and instead either generates a lot of noise or doesn’t fire in certain circumstances. Feel free to add comments with your own results. The rule is straightforward.

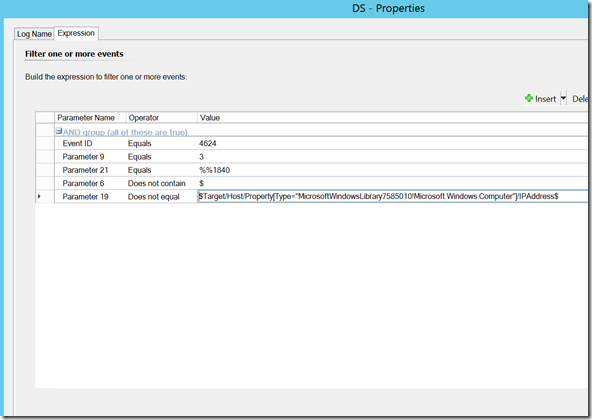

Rule 1: Monitoring the DC for Step 3 related events:

Target: Active Directory 2008 DC Computers

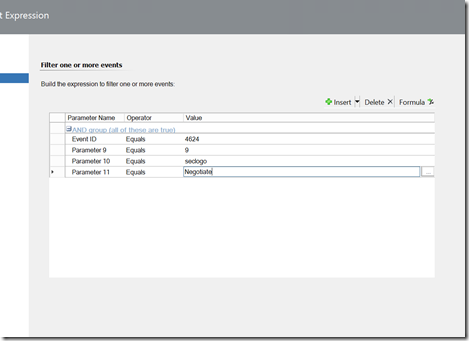

The rule type is NT Event. Here’s a screenshot of the parameters:

Parameter 9 is the logon type, parameter 21 is the impersonation level, and the condition on parameter 6 ignores these events if there’s a $ symbol in the value (which is true in the case of a machine account doing impersonation). I configure alert flood protection via the Source Network Address (parameter 19), along with a filter to make sure it’s not catching any local authentication. I’m not sure if that’s the right answer or not, but this should keep this to one alert per IP address. If someone is hopping from destination to destination, this would show as multiple alerts. The flip side is that if they sit on one system and hit many, it shows only one alert. I’m not sure there’s an easy way to configure alert flood protection for this, given that this event shows up multiple times on a DC with only one login attempt.
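The filter and the per-source suppression described above can be sketched in Python as follows. This is not the management pack itself, only an illustration of the logic. The logon type and impersonation values are passed in by the caller because they depend on what your own events show, and `LOCAL_SOURCES` is a hypothetical stand-in for the local authentication filter.

```python
from collections import Counter

# Hypothetical values that indicate local authentication; adjust to your events.
LOCAL_SOURCES = {"-", "::1", "127.0.0.1"}


def rule1_matches(params: dict[int, str], logon_type: str, impersonation: str) -> bool:
    """Apply the Rule 1 conditions to one event's numbered parameters."""
    return (
        params.get(9) == logon_type                   # parameter 9: logon type
        and params.get(21) == impersonation           # parameter 21: impersonation level
        and "$" not in params.get(6, "")              # parameter 6: skip machine accounts
        and params.get(19, "-") not in LOCAL_SOURCES  # parameter 19: skip local auth
    )


def alerts_by_source(events, logon_type: str, impersonation: str) -> Counter:
    """Collapse matching events into one alert per source address, with a repeat count."""
    return Counter(
        p[19] for p in events if rule1_matches(p, logon_type, impersonation)
    )


# Made-up events; "3" and "%%1840" are placeholders, not values from the lab.
events = [
    {6: "admin", 9: "3", 19: "10.0.0.5", 21: "%%1840"},
    {6: "admin", 9: "3", 19: "10.0.0.5", 21: "%%1840"},
    {6: "DC01$", 9: "3", 19: "10.0.0.7", 21: "%%1840"},
    {6: "admin", 9: "3", 19: "::1", 21: "%%1840"},
    {6: "admin", 9: "3", 19: "10.0.0.9", 21: "%%1840"},
]
alerts = alerts_by_source(events, logon_type="3", impersonation="%%1840")
print(alerts)
```

Keying the suppression on the source address shows the trade-off in miniature: repeated events from one source collapse into a single alert with a count, while each new source address raises its own alert.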

By the same token, we can configure something similar against the server OS to capture the events seen when an account moves laterally within a tier. If you’re forwarding security events to an event collector, we should be able to create a similar rule there.

One observation in my lab is that domain admin logons via RDP generate this alert, while standard user logons via RDP do not. As a rule, you probably shouldn’t be using a DA account for much of anything, but this can potentially generate false positives. I’d love additional feedback on this particular rule.

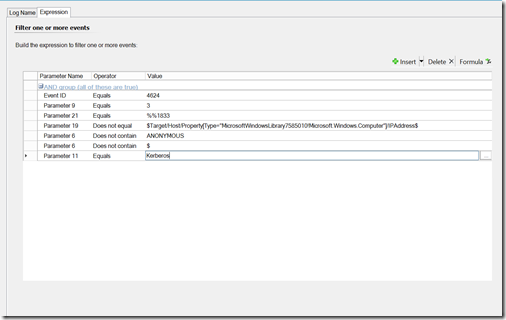

Rule 2: Monitoring the Member Servers for Lateral Walk (step 2):

Target: Windows Server Operating System

Like the other rule, it is an alert-generating NT event rule targeting the security log.

Parameter 9 is the logon type and parameter 21 is the impersonation code. Parameter 19 is filtering out the local IP address. Due to noise, I had to filter out a few additional things. I excluded anything with ANONYMOUS in it, as DCs see this type of logon event for the SYSTEM account under normal conditions. I also filtered on the $ character, as local machine accounts authenticate in this manner. My SQL server also lit this one up due to normal traffic, so I created an override to turn the rule off for the SQL computers group that is created by the SQL management pack. You must have the SQL MP installed in order to use this override. Unfortunately, this means you cannot detect this condition on a SQL server. However, we have plenty of other events to target. I also had to disable this against domain controllers for the same reason, though this wasn’t nearly as noisy. I needed to include a Kerberos condition, as RDP sessions will generate this event under an NTLM connection. As well, I configured alert suppression for this rule via parameter 19, since this event appears more than once on a targeted system.
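Putting the Rule 2 conditions and overrides side by side, a rough Python sketch looks like this. The group names are hypothetical labels for the SQL and DC overrides. I'm assuming the ANONYMOUS and $ checks apply to the account name in parameter 6 and that the Kerberos condition is on parameter 11, which matches the numbering used in the other rules. The logon type is left to the caller.

```python
# Hypothetical group names standing in for the overrides described above.
EXCLUDED_GROUPS = {"SQL Computers", "Domain Controllers"}


def rule2_enabled(computer_groups: set[str]) -> bool:
    """The rule is overridden (disabled) for computers in any excluded group."""
    return not (computer_groups & EXCLUDED_GROUPS)


def rule2_matches(
    params: dict[int, str],
    logon_type: str,
    local_ip: str,
    impersonation: str = "%%1833",  # Impersonation, as seen on the member server
) -> bool:
    """Apply the Rule 2 conditions to one event's numbered parameters."""
    account = params.get(6, "")
    return (
        params.get(9) == logon_type               # parameter 9: logon type
        and params.get(21) == impersonation       # parameter 21: impersonation code
        and params.get(11) == "Kerberos"          # assumed: auth package in parameter 11
        and params.get(19) != local_ip            # parameter 19: not the local address
        and "ANONYMOUS" not in account.upper()    # skip anonymous logons
        and "$" not in account                    # skip machine accounts
    )


# Made-up event; the logon type "3" is a placeholder, not a value from the lab.
event = {6: "admin", 9: "3", 11: "Kerberos", 19: "10.0.0.5", 21: "%%1833"}
print(rule2_enabled({"Windows Servers"}) and rule2_matches(event, "3", "10.0.0.20"))
```

Keeping the override check separate from the event filter mirrors how SCOM handles it: the override decides whether the rule runs on a computer at all, and the filter only sees events on computers where it does.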

Rule 3: Monitoring for a credential swap (step 1):

Target: Windows Server Operating System.

As with the other rules, we are targeting the security log.

Parameter 9 is logon type. Parameter 10 is the process name. Parameter 11 is the authentication package.
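A rule like this boils down to a short list of parameter conditions that must all hold. The Python sketch below evaluates such a list generically. The values in `RULE3_CRITERIA` are placeholders drawn from what public write-ups commonly report for this technique, not values read from this post, so compare them with what your own lab produces before using them.

```python
import operator

# Comparison operators available to a criterion.
OPERATORS = {
    "equals": operator.eq,
    "not_equals": operator.ne,
    "contains": lambda value, expected: expected in value,
}

# (parameter number, operator, expected value). Placeholder values, see above.
RULE3_CRITERIA = [
    (9, "equals", "9"),            # parameter 9: logon type
    (10, "equals", "seclogo"),     # parameter 10: process name
    (11, "equals", "Negotiate"),   # parameter 11: authentication package
]


def matches_all(params: dict[int, str], criteria) -> bool:
    """True only when every criterion holds for the event's parameters."""
    return all(
        OPERATORS[op](params.get(number, "").strip(), expected)
        for number, op, expected in criteria
    )


# Made-up events for illustration.
swap_event = {9: "9", 10: "seclogo", 11: "Negotiate"}
normal_event = {9: "3", 10: "Kerberos", 11: "Kerberos"}
print(matches_all(swap_event, RULE3_CRITERIA), matches_all(normal_event, RULE3_CRITERIA))
```

Keeping the criteria as data makes it easy to tighten or loosen the rule while testing for noise without touching the matching logic.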

The end result at this point in my lab is a very quiet set of targeted rules that can detect the crumbs left behind when an attacker penetrates the environment. This test was only in my lab, so please feel free to let me know via the comments if you can replicate this or if your production environments are picking up noise that I’m not seeing in my lab. The goal is to leave a user with alerts that are actionable. I can provide the MP I’m developing (though of note, I’m doing other things in there as well). If this is something you are interested in testing, please hit me up on LinkedIn.
