SQL Server Statistics: Charting Volatile Data And Density Vector Values

Summary: Tony O'Grady, a Microsoft Premier Field Engineer based in the UK, continues with his second post of 5 advanced articles on charting statistical data with SQL Server. In this post he explores how changes to the underlying data are reflected in cardinality estimations, explores some scenarios, and introduces the concept of density vectors. Enjoy!

In the first post in this series I gave an introduction to how the SQL Server optimiser utilises histogram values for cardinality estimations. In this second post we are going to look at how changes to the underlying data are reflected in cardinality estimations. We will look at specific scenarios where actual values are not represented in the histogram. I introduce the concept of the density vector and show both its advantages and limitations depending on the scenario.

In the first post in this series I gave an introduction to how the SQL Server optimiser utilises histogram values for cardinality estimations. In this second post we are going to look at how changes to the underlying data are reflected in cardinality estimations. We will look at specific scenarios where actual values are not represented in the histogram. I introduce the concept of the density vector and show both its advantages and limitations depending on the scenario.

Scenario 1: inserting additional values to [dbo].[SalesOrderDetail_big]

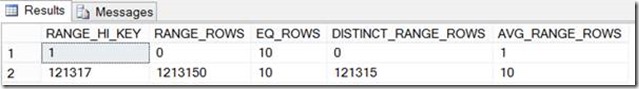

Reviewing the SalesOrderDetailID histogram from the first post, this covered values ranging from 1 – 121317 as we can see in Figure 1:

Figure 1

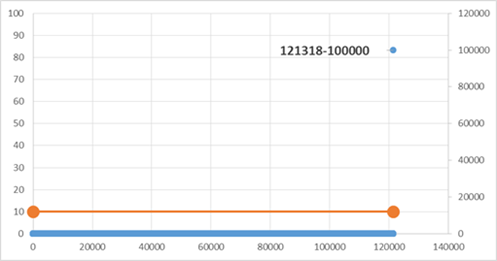

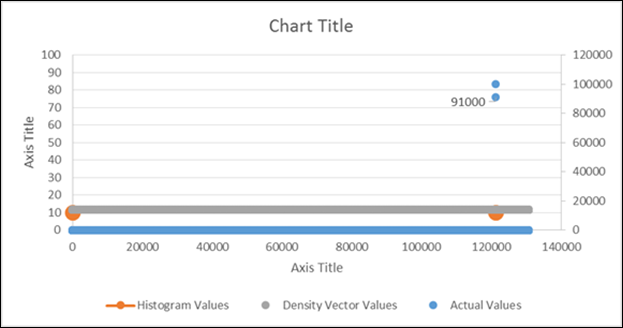

A sample INSERT statement below adds an additional SalesOrderDetailID value of 121318, inserting 100000 rows for this value. Since the insertion is less than the threshold for an automatic statistics update, the value 121318 will not be represented in the histogram. We can visualise this in Figure 2 which plots the histogram to actual values.

INSERT INTO [dbo].[SalesOrderDetail_big]

([SalesOrderID]

,[SalesOrderDetailID]

,[CarrierTrackingNumber]

,[OrderQty]

,[ProductID]

,[SpecialOfferID]

,[UnitPrice]

,[UnitPriceDiscount]

,[LineTotal]

,[rowguid]

,[ModifiedDate])

SELECT TOP 100000

[SalesOrderID]

,121318

,[CarrierTrackingNumber]

,[OrderQty]

,[ProductID]

,[SpecialOfferID]

,[UnitPrice]

,[UnitPriceDiscount]

,[LineTotal]

,[rowguid]

,[ModifiedDate]

FROM [sales].[SalesOrderDetail]

Figure 2

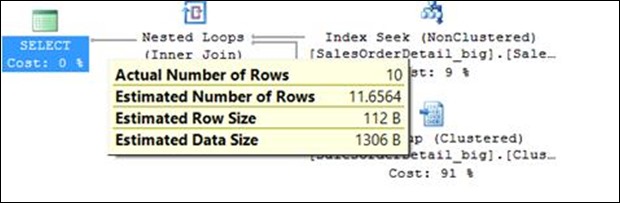

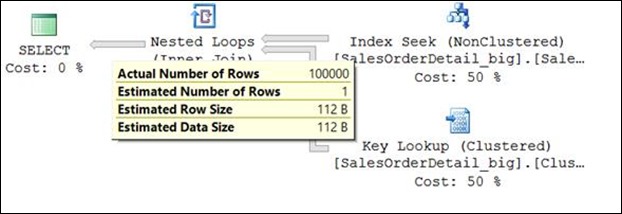

When a sample query uses a WHERE clause value equal to 1213818, SQL Server estimates the number of rows as 1. We can see this in Figure 3 where the query plans for this scenario contains an expensive Key Lookup

SELECT * FROM [dbo].[SalesOrderDetail_Big]

WHERE [SalesOrderDetailID] =121318

OPTION (RECOMPILE)

Figure 3

Scenario 2: inserting additional rows for an existing value in [dbo].[SalesOrderDetail_big]

INSERT INTO [dbo].[SalesOrderDetail_big]

([SalesOrderID]

,[SalesOrderDetailID]

,[CarrierTrackingNumber]

,[OrderQty]

,[ProductID]

,[SpecialOfferID]

,[UnitPrice]

,[UnitPriceDiscount]

,[LineTotal]

,[rowguid]

,[ModifiedDate])

SELECT TOP 91000

[SalesOrderID]

,121317

,[CarrierTrackingNumber]

,[OrderQty]

,[ProductID]

,[SpecialOfferID]

,[UnitPrice]

,[UnitPriceDiscount]

,[LineTotal]

,[rowguid]

,[ModifiedDate]

FROM [sales].[SalesOrderDetail]

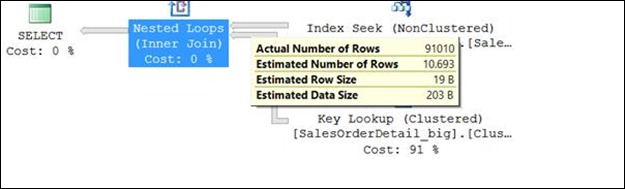

Similar to scenario 1 the addition of 91000 rows for SalesOrderDetailID value 121317 is not sufficient to trigger an automatic statistics update. As can be observed in figure 4 the query plan generated for the sample select statement has estimated the out of date statistics histogram value of 10.693

SELECT * FROM [dbo].[SalesOrderDetail_Big]

WHERE [SalesOrderDetailID] =121318

OPTION (RECOMPILE)

Figure 4

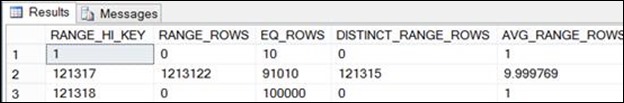

Looking at the histogram in Figure 5, for both scenario 1 and scenario 2 a statistics update can resolve the cardinality estimation errors, but managing and coordinating statistics maintenance on a production environment may be difficult to achieve.

Figure 5

Introducing DENSITY VECTOR Values

As in the sample scenario below, when the WHERE clause value is parameterised the optimiser makes use of statistical ALL DENSITY value in its calculations. This value for a column(s) can be reviewed by running:

DBCC SHOW_STATISTICS ([dbo.SalesOrderDetail_Big],[uststs_SalesOrderDetailID] ) WITH DENSITY_VECTOR

Figure 6

One of the advantages of the all density value is that it can continue to be used by the optimiser for cardinality estimations when an insert continues to add additional ever increasing values to a column, as would be the case for an identity column. In effect, for this scenario the all density calculation “keeps up” with the actual values whereas the histogram values do not.

Figure 7

As an example, if we execute the following query the value 121320 returns 10 rows. But, because 121320 is not represented in the histogram, SQL Server estimates 1 row:

SELECT * FROM [dbo].[SalesOrderDetail_Big]

WHERE [SalesOrderDetailID] = 121320

OPTION (RECOMPILE)

Let’s say we run the same query again without a statistics update, but this time parameterising it. In this scenario, SQL Server uses the ALL DENSITY VECTOR value of 11.6564 in its calculation.

DECLARE @x INT

SET @x = 121320

SELECT * FROM [dbo].[SalesOrderDetail_Big]

WHERE [SalesOrderDetailID] =@x

Figure 8

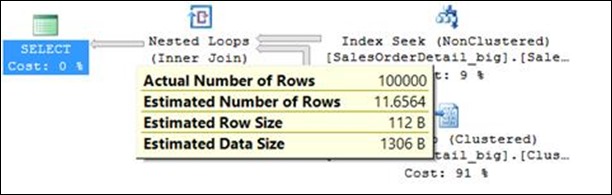

Keep in mind that this approach is not going to benefit all scenarios. Take for example when the WHERE clause value of 121318 from scenario 1 above. In this case the ALL DENSITY VECTOR calculation is 11.6564 and the actual number of rows is 100000. Note this was with fully updated statistics.

DECLARE @x INT

SET @x = 121318

SELECT * FROM [dbo].[SalesOrderDetail_Big]

WHERE [SalesOrderDetailID] =@x

Figure 9

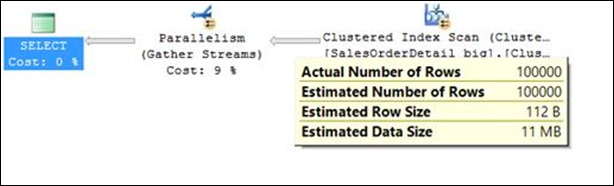

Whereas when we run the same query with a recompile hint this results in an accurate cardinality estimate.

DECLARE @x INT

SET @x = 121318

SELECT * FROM [dbo].[SalesOrderDetail_Big]

WHERE [SalesOrderDetailID] =@x

OPTION (RECOMPILE)

Summary

In this post we looked at how violate data can affect the accuracy of cardinality estimates when the values are not represented in the histogram. We can mitigate for this issue, but the mitigation may not be applicable in all scenarios particularly with outlier values.

In the third post of this series I am going to identify and resolve a scenario where even with a fully updated histogram the values may not be representative resulting in inaccurate cardinality estimations.

Written by Tony O’Grady; Posted by Frank Battiston, MSPFE Editor

![Result of DBCC SHOW_STATISTICS ([dbo.SalesOrderDetail_Big],[uststs_SalesOrderDetailID] ) WITH DENSITY_VECTOR Result of DBCC SHOW_STATISTICS ([dbo.SalesOrderDetail_Big],[uststs_SalesOrderDetailID] ) WITH DENSITY_VECTOR](https://msdntnarchive.blob.core.windows.net/media/TNBlogsFS/prod.evol.blogs.technet.com/CommunityServer.Blogs.Components.WeblogFiles/00/00/00/92/37/metablogapi/7167.clip_image012_thumb_2A7256D6.jpg)

![Result of SELECT * FROM [dbo].[SalesOrderDetail_Big] ... Result of SELECT * FROM [dbo].[SalesOrderDetail_Big] ...](https://msdntnarchive.blob.core.windows.net/media/TNBlogsFS/prod.evol.blogs.technet.com/CommunityServer.Blogs.Components.WeblogFiles/00/00/00/92/37/metablogapi/1256.clip_image016_thumb_7A0A4308.jpg)