Designing and Managing Servers for Cloud-Scale Datacenters

Kushagra Vaid, General Manager

Server Engineering

Microsoft's cloud infrastructure, including datacenters and networks, hosts massive cloud applications such as Windows Azure, Bing, Xbox Live and Office 365 on more than a million servers around the world. Deploying and operating these services requires coordination between the software and hardware components to maximize utilization and computing efficiency and to reduce total cost of ownership (TCO), goals we work hard to achieve.

As the General Manager of Server Engineering for Microsoft's cloud infrastructure, I lead a team of engineers focused on designing servers optimized for our cloud-scale datacenters. Servers represent a significant portion of an infrastructure's capital expenditures, so it is important to drive technological advances that can enable high-value business scenarios for improving TCO. Earlier today, I spoke at the Open Server Summit about designing servers for Microsoft's cloud-scale datacenters, and in this blog I will highlight a few of the key considerations in the design and management of servers in our infrastructure that I shared with the audience.

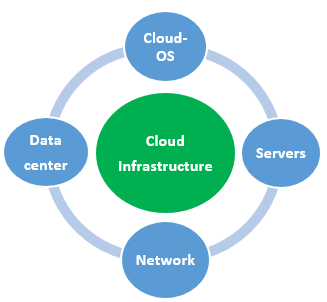

To optimize the infrastructure for Microsoft's 200+ cloud services, it's important to understand the interactions between the workloads, the Cloud OS, and the server and datacenter architecture. We call this vertical integration, and we view these layers collectively as an integrated unit because the combination delivers efficiencies greater than the sum of its parts. Let's consider two examples relating to deploying and operating server clusters at scale where the synergy between the hardware and software components can provide significant TCO benefits.

Power capacity provisioning at scale

Microsoft's cloud-scale datacenters can have several different workloads running at any given time. Some of these may be public-facing services for internet users, while others may be internal services such as business intelligence data analysis for a Microsoft business group. For public-facing services, we deploy server clusters that host a broad set of services, and we make server capacity allocation decisions based on the power consumption of the worst-case workload. Because other workloads hosted on the same server infrastructure, like the internal data analysis mentioned above, may consume less power, we must find a way to use the allocated power headroom to improve overall datacenter TCO rather than leave that power capacity stranded.
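
To make the worst-case sizing problem concrete, here is a minimal Python sketch. The 1 MW budget, the 400 W worst-case server, and the 250 W analytics server are hypothetical figures chosen for illustration, not Microsoft measurements, and `Workload`, `servers_for_budget`, `stranded_watts`, and `extra_servers_if_reclaimed` are hypothetical helper names. The last function assumes some mechanism, such as a per-server power cap, keeps the cluster within its budget; the post does not say how the headroom is reclaimed.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """A workload and its typical per-server power draw (illustrative figures only)."""
    name: str
    watts_per_server: float


def servers_for_budget(budget_watts: float, worst_case_watts: float) -> int:
    """Servers that fit when each one is budgeted at the worst-case workload's power."""
    return int(budget_watts // worst_case_watts)


def stranded_watts(budget_watts: float, worst_case_watts: float, actual: Workload) -> float:
    """Power reserved for the worst case but not drawn by the workload actually running."""
    n = servers_for_budget(budget_watts, worst_case_watts)
    return n * (worst_case_watts - actual.watts_per_server)


def extra_servers_if_reclaimed(budget_watts: float, worst_case_watts: float,
                               actual: Workload) -> int:
    """Additional servers of the lighter workload the stranded headroom could power.

    Assumption: something (e.g. a per-server power cap) keeps total draw within budget.
    """
    headroom = stranded_watts(budget_watts, worst_case_watts, actual)
    return int(headroom // actual.watts_per_server)


if __name__ == "__main__":
    analytics = Workload("internal analytics", 250)  # hypothetical lighter workload
    budget, worst_case = 1_000_000, 400              # hypothetical 1 MW budget, 400 W worst case
    print(servers_for_budget(budget, worst_case))                     # 2500
    print(stranded_watts(budget, worst_case, analytics))              # 375000
    print(extra_servers_if_reclaimed(budget, worst_case, analytics))  # 1500
```

With these illustrative numbers, sizing for the worst case leaves 375 kW of a 1 MW budget unused whenever the cluster runs the lighter workload.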

The cost of power provisioning for datacenters ranges from $5M/MW to $10M/MW, which makes leaving any server power stranded an expensive proposition. This is a common challenge for datacenter operators, and it is why we take a coordinated approach to balancing server power and performance tradeoffs at the datacenter level over the lifetime of the deployed server cluster.
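
A quick back-of-the-envelope calculation shows how fast stranded capacity adds up. The sketch below uses the $5M/MW and $10M/MW bounds quoted above as its defaults; the 20 MW facility and the 25% stranded fraction in the usage example are hypothetical, and `stranded_power_cost` is an illustrative helper name.

```python
def stranded_power_cost(stranded_mw: float,
                        cost_per_mw_low: float = 5e6,
                        cost_per_mw_high: float = 10e6) -> tuple[float, float]:
    """Capital cost range (USD) of power capacity that is provisioned but never used.

    Defaults reflect the $5M/MW to $10M/MW provisioning cost range cited in the post.
    """
    return stranded_mw * cost_per_mw_low, stranded_mw * cost_per_mw_high


if __name__ == "__main__":
    # Hypothetical facility: 20 MW provisioned, 25% of it stranded.
    stranded = 20 * 0.25  # 5.0 MW
    low, high = stranded_power_cost(stranded)
    print(f"${low / 1e6:.1f}M to ${high / 1e6:.1f}M")  # $25.0M to $50.0M
```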

Managing server uptime during critical datacenter events

In another example, once clusters have been deployed, datacenter operators have a better understanding of how important each service is, based on workload priorities and Service Level Agreements (SLAs). Under normal operating conditions, everything runs smoothly and SLAs are met. During a critical event, however, such as an air-cooling unit failure or a power grid outage, we designate priority workloads, such as public-facing services, that must be maintained at full performance. Other internal services, such as our own processing and maintenance tasks, may be throttled or temporarily shut down to reduce the thermal load. It's challenging to handle such real-time events manually given the short response times and the scale at which such policies need to be applied. But if the server, datacenter, and Cloud OS operate as a single integrated entity, it's possible to deal with such critical events without impacting customer SLAs.
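
The priority policy described above can be sketched as a simple greedy load-shedding routine. This is an illustration under stated assumptions, not the actual Cloud OS implementation: the three priority tiers, the service names, the wattages, and the rule of throttling before shutting down are all hypothetical.

```python
from dataclasses import dataclass
from enum import IntEnum


class Priority(IntEnum):
    """Hypothetical priority tiers; a lower value means more important."""
    PUBLIC_FACING = 0
    INTERNAL_PROCESSING = 1
    MAINTENANCE = 2


@dataclass
class Service:
    name: str
    priority: Priority
    full_watts: float    # power draw at full performance
    min_fraction: float  # lowest fraction of full_watts the service can be throttled to


def plan_response(services: list[Service],
                  available_watts: float) -> dict[str, tuple[str, float]]:
    """Map each service name to (action, watts) so the total draw fits available_watts.

    Public-facing services always stay at full performance. Other services are
    throttled first, least important tier first, then shut down in the same order
    if throttling alone is not enough.
    """
    plan = {s.name: ("full", s.full_watts) for s in services}
    total = sum(s.full_watts for s in services)
    # Least important first; sorted() is stable, so ties keep their input order.
    sheddable = sorted(
        (s for s in services if s.priority != Priority.PUBLIC_FACING),
        key=lambda s: s.priority,
        reverse=True,
    )
    # Pass 1: throttle sheddable services one at a time until the draw fits.
    for s in sheddable:
        if total <= available_watts:
            break
        throttled = s.full_watts * s.min_fraction
        total -= s.full_watts - throttled
        plan[s.name] = ("throttle", throttled)
    # Pass 2: if still over budget, shut the throttled services down in the same order.
    for s in sheddable:
        if total <= available_watts:
            break
        total -= plan[s.name][1]
        plan[s.name] = ("off", 0.0)
    if total > available_watts:
        raise RuntimeError("public-facing services alone exceed available power")
    return plan


if __name__ == "__main__":
    fleet = [  # hypothetical services and power draws
        Service("web-frontend", Priority.PUBLIC_FACING, 600, 1.0),
        Service("bi-analytics", Priority.INTERNAL_PROCESSING, 300, 0.5),
        Service("log-compaction", Priority.MAINTENANCE, 200, 0.5),
    ]
    # e.g. a cooling failure cuts the usable envelope from 1100 W to 800 W
    print(plan_response(fleet, 800))
```

Throttling before shutting down keeps lower-priority services partially available for as long as possible. A production controller would also have to account for how quickly servers actually reduce their draw relative to the event's response-time window.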

Operating infrastructure at scale requires rethinking systems architecture and challenging existing conventions for how the various components should be managed. This is essential to enabling new business scenarios that keep cost of ownership under control. We continue to look across the infrastructure for integration opportunities that help us maintain service availability and improve our operations. In a follow-up to this blog, I'll provide a deeper look at how we maximize performance for applications and discuss how challenges across the infrastructure can be solved using a systems-level approach. In the meantime, please download a PDF copy of my presentation from today's Open Server Summit.

More to come on this subject later. Our best practices for cloud datacenters are publicly available in the videos, whitepapers, and blogs that we have been sharing since 2007, and you can access them via our website at www.microsoft.com/datacenters