Using Azure Container Instances to host Azure ML models

There are two documented ways to deploy a container that exposes a model from Azure ML Workbench. The first is to push the container image to an Azure Container Registry and then run it in your local Docker instance. This publishes the container on localhost, but it does not help when you need a model service that is accessible over the internet. In the previous version of Azure ML, this could be easily accomplished by deploying the model as a web service. Since the new ML preview has adopted container-based models, this is no longer an option. Instead, the documentation instructs you to deploy the model to an ACS cluster orchestrated by Kubernetes. The cluster setup is mostly automated by Azure ML Model Management. This is a great way to deploy the model for production, but it may be more heavyweight and expensive (three VMs are set up for you) than is needed, especially for a demo or PoC.

Fortunately, there is a new Azure service called Azure Container Instances. This service lets you run a container in a PaaS-based service that has features of Kubernetes orchestration under the covers, but with payment based on usage (which is low for a PoC or demo). It is a fairly straightforward process to publish Azure ML containers to Container Instances.

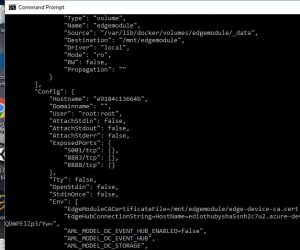

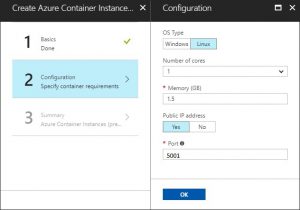

Follow the general instructions here to set up an Azure container instance, and provide the path to your ML container image in your Azure Container Registry. The only tricky part is working out the ports to expose and the URI to use when accessing the ML model. The easiest way to work this out is to run a `docker inspect` command on your ML container. This will tell you which external ports it expects to be mapped to its own internal ports. In the configuration of the Container Instance, specify the main external port in the ports section. For example, for the following port output...

map the TCP 5001 port in the configuration section of the Container Instance setup wizard.
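If you would rather not read the inspect output by eye, the port lookup can be scripted. The following is a minimal Python sketch, assuming the usual `docker inspect` JSON layout in which exposed ports appear under `Config.ExposedPorts` with keys such as `5001/tcp`. The function name `exposed_ports` and the sample JSON are made up for illustration and are not part of the Azure ML tooling.

```python
from __future__ import annotations

import json


def exposed_ports(inspect_output: str) -> list[tuple[int, str]]:
    """Return sorted (port, protocol) pairs found in `docker inspect` JSON output."""
    found = set()
    # `docker inspect` prints a JSON array with one object per inspected item.
    for item in json.loads(inspect_output):
        ports = (item.get("Config") or {}).get("ExposedPorts") or {}
        # Keys look like "5001/tcp"; the values are empty objects.
        for key in ports:
            number, _, protocol = key.partition("/")
            found.add((int(number), protocol or "tcp"))
    return sorted(found)


# Hypothetical, trimmed-down inspect output used only for illustration.
sample = '[{"Config": {"ExposedPorts": {"5001/tcp": {}}}}]'
print(exposed_ports(sample))
```

To run it against a real image, you could pass in the text printed by `docker inspect <your image>`, for example captured with `subprocess.run(..., capture_output=True, text=True).stdout`.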

After you set up your container instance, you can retrieve its IP address from the portal. The resource will be called a "container group". In the overview section for this resource, you will see the IP address listed. You will also need the port and the identifier to use in the URL. The port is simply the one you defined when creating the Container Instance. For the identifier, look in the output from Azure ML when you configured your model. You should see an example of calling the model through REST, which shows the last part of the URL to use when calling your model. For example:

`http://0.0.0.0:5001/score`

Here `0.0.0.0` stands in for the IP address of your container group. Simply POST your data to this URL using your favorite tool (I like Postman), and you should receive the appropriate response from your model.
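If you prefer a script to a GUI client, the same call can be made from Python using only the standard library. This is a rough sketch, not the official Azure ML client: the helper names `score_url` and `post_json` are hypothetical, the default port and path simply mirror the example above, and the JSON payload your model expects depends on how you defined its scoring schema.

```python
from __future__ import annotations

import json
import urllib.request


def score_url(ip_address: str, port: int = 5001, path: str = "score") -> str:
    """Build the scoring URL from the container group's IP, port, and identifier."""
    return f"http://{ip_address}:{port}/{path.lstrip('/')}"


def post_json(url: str, payload: object, timeout: float = 30.0) -> str:
    """POST a JSON payload to the model endpoint and return the response body as text."""
    body = json.dumps(payload).encode("utf-8")
    request = urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")


# 0.0.0.0 is a placeholder; substitute the IP address shown in the portal.
print(score_url("0.0.0.0"))
```

Calling `post_json(score_url("<your IP>"), your_payload)` should then return whatever your model's scoring function produces, assuming the container is running and the port is exposed.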