Inside Hash and Sort Warnings

Recently I dove into Query Performance and troubleshooting topic. I delivered a couple of 400+ Performance and Tuning Workshops as part of my SQL Master Academy Training Initiative. All of those very intensive and incredibly useful workshops focused my attention on several topics. I made an experiment presenting a session named Detecting Problems in Query Plans in our SQL User Group Meeting. The people in the audience were very happy to clarify some topics, and the feedback I received was very positive.

I will try to blog them in next few publications, but let me start with a first one, that I consider as underestimated and not very clear, at the same time very common.

In order to explain what exactly the Hash and Sort warning are and why they should be treated as a signal of a performance problem let me first pay more attention on Expensive Operators in query plans

Expensive Operators

Some operators in query plans have specific resource needs. They need more memory than the others in order to perform their tasks. Such operators are called memory consuming operators and they could make a query more expensive from the resource point of view. Such operators are SORT, SORT DISTINCT, HASH JOIN, HASH AGGREGATE.

At compilation time, based on estimated number of rows that the operator will have as an input, the QP calculates the number of pages in memory that this operator needs in order to execute. When a query has a memory consuming operator the QP calculates the memory needed to execute the query as a Memory Grant. The Memory Grant is a value that contains the sum of all pages needed by all expensive operators in order to execute the query plan. Occasionally, the query can become very expensive, especially when those operators process large numbers of rows. For example the Sort operator is very expensive because it’s “costs” is proportional to number of rows that have to be sorted, respectively the number of pages that those rows fit.

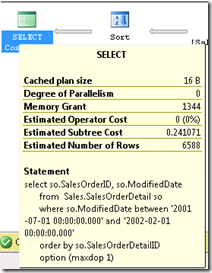

select so.SalesOrderID, so.ModifiedDate

from Sales.SalesOrderDetail so

where so.ModifiedDate between '2001-07-01 00:00:00.000' and '2002-02-01 00:00:00.000'

order by so.SalesOrderDetailID

option (maxdop 1)

We have a SORT operator which requires 1344 pages of memory in order to perform the sorting of data. The overall Query Memory required is 1344*8K=10.5MB

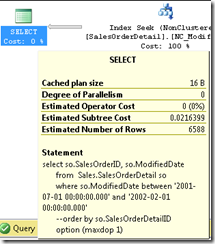

If I remove the need for SORT operator (you can comment ORDER BY clause to test that), then my query plan would be

Note that I don’t have Memory Grant as part of the query plan description of the root operator.

In both cases the execution plan is 16 Bytes in cache.

The key points

- Using Sort and hash operators in the query plan requires additional query memory or workspace memory, outside of the memory needed to store execution plan

- The Memory Grant is a an allocated query memory.

- The QP calculates Query Memory at the compilation time, but the real usage of this Memory is during the query execution.

How a query with expensive operators is executed

Query memory is allocated outside of the buffer pool. The amount of space available for query memory allocation is dynamically managed between 25% and 75% of non-AWE buffer pool (only data pages can use AWE mechanism for page allocation). 5% of the Query Memory is reserved for small queries (queries with low costs). No query can take more than 20% of the Total Query Memory. There are 5 query Memory Grant queues as a mechanism to manage expensive queries and to allow less expensive queries to run. The queues are organized based on query cost. Every query containing hash/sort operators has to pass trough the global grant queue, where it is put in its respective queue based on its costs. Queues are served on first come first serve basis. There is a little probability more than a couple of expensive queries from a large cost queue to execute at the same time, they probably will execute one after another.

After such a query is compiled and the plan is cached, there comes the step of query execution or the moment of truth. At this time the query needs the memory to be allocated. There are couple of scenarios that could happen here. If there is no available memory or there are other expensive queries with similar cost (on the same queue) executing at the same time, then the query waits in the Memory Grant Queue.

If the query reaches the wait time threshold (the Query Wait Option on SQL Server Level), then the query times out. You can start query again later, but obviously this is a signal that you have more queries with expensive operators than your server can perform. So the possible focus for further troubleshooting is to try to optimize queries and reduce their expensive operators trough proper indexing, reconsiderations of using ORDER BY clause, , increase server memory could be also an option (that is too broad topic to discuss here)

If the query gains the memory the query execution starts. So far so good. But the real moment of truth comes here, because this is the moment of test if the QP calculations and the Memory Grant is enough. What are the possible scenarios then?

The Memory Grant is not enough for the query to execute smoothly.

This is when the Hash and Sort Warnings appear. Why would this memory grant be not enough? Because there is a misunderstanding and respectively a miscalculation of the memory needs at compilation time and at query execution time. Note that I don’t mention that the problem is in memory shortage/misconfiguration or that you have to add more memory to your server. Well, that could be the case, but the experience of Hash and Sort warnings alone doesn’t necessary mean than there is a performance problem and resource shortage. Why there could be miscalculations and when?

Imagine that you have a query plan cached with a specific parameter value that QP get at the compilation time, and actually based on that value it creates the plan. You execute the query (trough stored procedure, sp_executesql with parameters for example) and you provide different parameters value leading to differences in selectivity and the result set (a lot more rows for the sort operator input for example).

Using the cached plan and respectively the same Memory Grant is not enough for the memory consuming operators to perform their tasks.

To illustrate exactly what happens, let’s focus on some examples. Lets create a very simple procedure called SalesOrderSelect. I am going to rely on procedure plan caching that is provided in SQL Server, that is why I am using a stored procedure here rather than ad-hoc select statement.

create proc SalesOrderSelect

@ModifiedDateFrom datetime,

@ModifiedDateTo datetime as

begin

declare @SalesOrderID int,

@ProductID int,

@ModifiedDate datetime

select so.SalesOrderID, so.ModifiedDate

from Sales.SalesOrderDetail so

where so.ModifiedDate between @ModifiedDateFrom and @ModifiedDateTo

order by so.SalesOrderDetailID

option (maxdop 1)

end

go

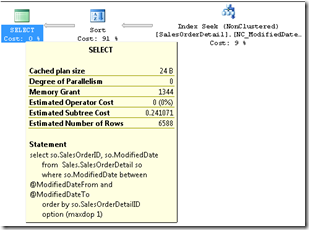

No lets execute the procedure for the first time with the parameters defining the interval of 6588 rows, which is about 5% of the rows in the table. The first execution generates the procedure execution plan for this specific parameter values, and this plan is cached in memory.

exec SalesOrderSelect '2001-07-01 00:00:00.000', '2002-02-01 00:00:00.000'

Now lets execute the stored procedure again, but defining a larger result set. Let’s also profiler the query, including the Hash and Sort Warning events from the Errors and Warning Event group

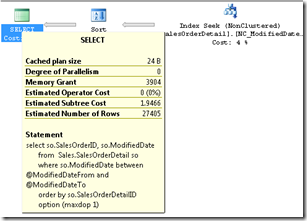

exec SalesOrderSelect '2001-07-01 00:00:00.000', '2003-02-01 00:00:00.000'

The query returns 27405 rows, about 4 times more that in the first execution. And because it is using the same execution plan that is cached in memory, the resources for the query are the same, including the Memory Grant parameter. This time, the SORT operator must perform the sorting on 4 times more rows than in the first execution, using the same amount of Memory (10.5MB). Because of that the Engine raises the Sort Warnings Event.

The sort operation doesn’t fit in memory and continue sorting using the tempdb database and performing multiple passes over the data that are required to be sorted. This is the reason of performance degradation. The same applies to hash operators when the memory grant is not enough – the hash warning indicates that the hash recursion has occured , which is the build input doesn’t fit in the memory granted, resulting in the split of input into multiple partitions that are processed separately. If any of these partitions still do not fit into available memory, it is split into subpartitions, which are also processed separately. This splitting process continues until each partition fits into available memory or until the maximum recursion level is reached (displayed in the IntegerData data column). Hash bailout occurs when a hashing operation reaches its maximum recursion level and shifts to an alternate plan to process the remaining partitioned data. You can imagine that a query could be very slow once reaching that case.

The Memory Grant is more than it is needed

What if we have an opposite case, where we execute the query with a cached plan using more restrictive clause, such ending up with more memory allocated then it is actually needed.

In order to do that I am recompiling the stored procedure and executing it after that

exec sp_recompile SalesOrderSelect

go

exec SalesOrderSelect '2001-07-01 00:00:00.000', '2003-02-01 00:00:00.000'

go

Note that the Memory Grant is calculated as 3904 pages or approx 31MB. If I execute the query using the smaller interval, I am going to need 10.5MB of memory. The query will not raise sort warning, but I am going to waste approx 20MB of the memory grant, my query will going to use 3 times more memory that it actually needs, and probably my query will go to inappropriate queue. .

What are the cases when Hash and Sort Warnings can occur

All the Cardinality errors cases - https://msdn.microsoft.com/en-us/library/ms181034.aspx – look at the cases when QP cannot accurately calculate cardinalities, I am not going to copy them here. If the QP cannot accurately calculate cardinalities of query plan operators, then it makes errors in calculating the query memory, sometimes even choosing the appropriate operator. When the QP have no idea what is the cardinality , for example if you have user-defined function whose argument is not a constant value in the where clause, then the QP has to guess and makes assumptions depending on the comparison operator (9-30% selectivity of the clause), which could be totally wrong in your case. (that is a good candidate for a next post)

Parameterized queries and stored procedures when the parameter values are very different leading to different selectivity of the query. This was the case I use in this post as an example.

The Solution

Track events first. I am using the RML tools for monitoring, and readtrace after that to process traces, or SQL Nexus. You can run RML to capture the detailed trace for some period of time depending on your workload or periods of slow system response. Don’t use the Profiler, it will harm your performance. Beside that the RML tools can and will surprise you with how many other thinks you can find happening with your database and workload that could be optimized. Sort and Hash warning appears in the Interesting Event Reports with statements where they occur. Sometimes I work directly with PerfAnalysis database to find out more info that do not appear in the graphical reports. When you find out the statements when the sort/hash warnings occur try to perform the following optimizations:

- make sure statistics are maintained properly on database and object level

- use compile hint, sometimes it is cheaper to recompile on every execution than to pay for sort/hash warnings, you can use plan guides if you don’t want to change your code

- don’t confuse parameters and variables and make sure you have the right values at the compile time provided to the QP

- make sure to eliminate/reduce cardinality error cases mentioned in the above link. Most of them need rewriting the query

- reduce ODRER BY if you can