SQL Server Unplugged Follow Up

As promised, I am following up on some questions from the MS Days 2010 SQL Server Unplugged session.

Q: RBS and FILESTREAM usage: why is the use of the FILESTREAM/RBS functionality in SharePoint delayed?

A: FILESTREAM and RBS are complementary approaches for storage and management of BLOBs and we see them both continuing to evolve.

- FILESTREAM provides a storage option that allows storage, efficient streaming, and integrated management of large BLOBs in a SQL database by using the underlying NTFS file system for BLOB storage and streaming. It offers fully transactional access and operations compatible with varbinary(max). FILESTREAM support is built into the SQL Server 2008 Database Engine.

- RBS is a set of standardized APIs that allow storage and retrieval of BLOBs outside of your main SQL database where a dedicated BLOB store is desirable for various reasons. It uses a provider model for plugging in any dedicated BLOB store that implements these RBS APIs. We have also built an out-of-the-box RBS FILESTREAM provider that allows a deployment to use a SQL database (local or remote) as a dedicated BLOB store. This provider uses FILESTREAM as the BLOB storage mechanism and ties the two technologies together. SQL Server Remote BLOB Store (RBS) is available as part of the Feature Pack RC0 for Microsoft SQL Server 2008. The download includes a client-side DLL that you can use in your applications and some stored procedures to install on the SQL Server itself. The RBS RC0 setup program is available for x86, x64, and IA64 computers. It requires Windows Installer 4.5.

Download the RBS RC0 here: https://www.microsoft.com/downloads/details.aspx?displaylang=en&FamilyID=089a9dad-e2df-43e9-9cd8-c06320520b40

The RBS team also released a sample application showing the many ways to use this new feature, including:

- Basic store / fetch

- Specifying collections / blob stores for store calls

- Push and pull streaming APIs

- Using the System.IO.Stream APIs to do asynchronous reads from the blob store

This package also includes a sample file provider, in case you do not have an RBS store to test your application. Be sure to install the sample provider (the instructions are available at the CodePlex site) before you try storing and reading blobs.

Download the sample and leave comments at https://www.codeplex.com/sqlrbs

Check the recently created RBS team blog at https://blogs.msdn.com/sqlrbs

Q: How do you decide between FILESTREAM and in-data-page storage, and what are the advantages of FILESTREAM?

A: According to Books Online (BOL), use FILESTREAM when:

- You are storing BLOBs with an average size of 1 megabyte or more

- Fast read access is important

- You want to access BLOBs from your application's middle tier code

The advantages of using FILESTREAM are:

- You store and retrieve your BLOBs together with your relational data in a single data store

- The BLOBs are included in database backups and restores

- Inserting, updating and deleting BLOBs and your relational data happens in the same database transaction

- The 2 GB max size for a varbinary(max) column doesn't apply; you are only limited by the available space on the NTFS file system

- The SQL Server buffer pool memory is not used to operate on BLOBs; in previous versions large BLOBs could consume a lot of this memory

- All BLOB access can be performed from .NET code, allowing your middle tier code to easily work with these objects

- The NTFS file system can save and retrieve large BLOBs faster than SQL Server

Q: How should the disk parameters be set up for the disks where the transaction log resides?

A: To answer the question, let's first clarify some terms so that we are on the same page.

Sector Size

The sector size is the smallest physical storage unit on the disk, the minimum chunk of data that can be written to disk. It is a fixed size set by the disk manufacturer. Historically, sector size has been fixed at 512 bytes. (Newer drives make 1 KB, 2 KB, or 4 KB sectors available.)

Stripe Size

The stripe size refers to the unit of data that is written to and read from a single disk in a RAID system. This is a configurable value that is set when designing the storage array. A smaller stripe size allows data to be distributed across more disks and increases I/O parallelism. Note that the size of a single SQL Server extent (64 KB) is the lower limit for the stripe size. For the same data, a larger stripe size means the data is stored on fewer disks, which decreases the I/O distribution and the degree of parallelism.

File Allocation Unit Size

When hard drives are formatted, the size of the file allocation unit defined by the OS (or the user) determines the number of sectors per file allocation unit. The file allocation unit size is also known as the cluster size. For example, if the sectors of a hard drive are 512 bytes and a 64 KB file allocation unit is defined, the cluster comprises 128 sectors.
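The cluster arithmetic above can be sketched in a few lines of Python. This is only an illustration; the function name `sectors_per_cluster` is made up for this post and is not part of any tool.

```python
def sectors_per_cluster(cluster_bytes: int, sector_bytes: int) -> int:
    """Return how many sectors make up one file allocation unit (cluster)."""
    if cluster_bytes % sector_bytes != 0:
        # A cluster is always built from whole sectors.
        raise ValueError("cluster size must be a whole multiple of the sector size")
    return cluster_bytes // sector_bytes


KB = 1024
# 64 KB cluster on a 512-byte-sector drive, as in the example above.
print(sectors_per_cluster(64 * KB, 512))
# The same cluster size on a 4 KB-sector drive.
print(sectors_per_cluster(64 * KB, 4 * KB))
```

The first call reproduces the 128 sectors mentioned above; on a 4 KB-sector drive the same cluster spans 16 sectors.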

Partition Alignment

Disk array hardware reports 63 reserved (hidden) sectors, and Windows obediently implements this information, reserving these sectors at the beginning of the first partition of a disk. The master boot record (MBR) resides within these hidden sectors. The compliance by Windows with 63 hidden sectors reported by disk hardware results in misalignment with stripe units, disk controllers, and cache segment lines. In all versions of Windows earlier than and including Windows XP and Windows Server 2003, these reserved sectors do not coincide with fundamental physical boundaries. The result is that single clusters of user data are written across multiple stripe units of the RAID. Every nth operation is affected (n depends on file allocation unit (cluster) size and stripe unit size). Fundamental physical boundaries in disk controllers and other hardware are also violated. (Disk Partition Alignment Best Practices SQL CAT Team Article)

Correlations: Partition Offset, File Allocation Unit Size, & Stripe Unit Size

There are two correlations which, when satisfied, are a fundamental precondition for optimal disk I/O performance. Each of the following calculations must yield an integer value:

Partition_Offset ÷ Stripe_Unit_Size

Stripe_Unit_Size ÷ File_Allocation_Unit_Size

Of the two, the first is by far the most important for optimal performance.
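As a rough sketch, the two correlations can be checked with simple modulo arithmetic. The helper names below are hypothetical, and the offsets are the ones discussed in this post (63 hidden sectors of 512 bytes, 64 KB, and the Windows Server 2008 default of 1024 KB).

```python
KB = 1024


def offset_matches_stripe(partition_offset: int, stripe_unit: int) -> bool:
    """First correlation: Partition_Offset / Stripe_Unit_Size must be an integer."""
    return partition_offset % stripe_unit == 0


def stripe_matches_cluster(stripe_unit: int, cluster: int) -> bool:
    """Second correlation: Stripe_Unit_Size / File_Allocation_Unit_Size must be an integer."""
    return stripe_unit % cluster == 0


legacy_offset = 63 * 512  # 63 hidden sectors of 512 bytes = 32,256 bytes

# All sizes are in bytes.
print(offset_matches_stripe(legacy_offset, 64 * KB))   # False: misaligned
print(offset_matches_stripe(64 * KB, 64 * KB))         # True
print(offset_matches_stripe(64 * KB, 256 * KB))        # False: 64 / 256 = 0.25
print(offset_matches_stripe(1024 * KB, 256 * KB))      # True: 1024 / 256 = 4
print(stripe_matches_cluster(64 * KB, 64 * KB))        # True
```

Note that a 64 KB offset satisfies the first correlation for a 64 KB stripe unit but not for a 256 KB one, so the offset has to be checked against the stripe unit size actually configured on the array.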

What is the role of the sector size for the transaction log?

Although SQL Server tries to use the log space as efficiently as possible, certain application patterns cause the log-block fill percentages to remain small. The following extreme example points out the clear difference in the transaction log space requirements as the sector size varies.

-- One transaction (and one log flush) per inserted row

DECLARE @I INT = 0

WHILE (@I < 10000)

BEGIN

BEGIN TRAN

INSERT INTO tblTest VALUES ('A', @I)

COMMIT TRAN

SET @I = @I + 1

END

- vs -

-- A single transaction wrapping all 10,000 inserts

DECLARE @I INT = 0

BEGIN TRAN

WHILE (@I < 10000)

BEGIN

INSERT INTO tblTest VALUES ('A', @I)

SET @I = @I + 1

END

COMMIT TRAN

The first example requires SQL Server to write the log records 10,000 times, as each commit is processed. The second example enables SQL Server to pack log records and write all 10,000 inserts and the single commit at the same time.

Calculating the log usage for the examples results in the following approximations.

- By using a 4-KB sector size for the first example, ~40 MB of disk space is used.

- By using a 512-byte sector size for the first example, ~5 MB of disk space is used.

- By using a 4-KB sector size for the second example, ~1 MB of disk space is used.

- By using a 512-byte sector size for the second example, ~1 MB of disk space is used.

Where do the 40 MB and 5 MB figures come from? They are 10,000 transactions multiplied by 4 KB or 512 bytes respectively, because every commit forces a write of at least one sector. In the first example, with a large number of small transactions, all that matters is the sector size. When you have one large transaction, the 1 MB of disk space is simply the total amount of data that needs to be saved in the log, regardless of the sector size, because SQL Server is able to pack the log records. With a smaller number of larger transactions that wrap several inserts, you get sequential writes in log blocks of up to 60 KB without wasting log space, and because you have fewer transactions you write fewer MB/s to the log and gain performance.
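The approximations can be reproduced with a small calculation. This is a simplified model, not how SQL Server actually accounts for log space: it assumes every flush is padded to a whole number of sectors, and the 100-byte log record size is a hypothetical value picked so that the packed case lands near 1 MB.

```python
def flush_bytes(payload_bytes: int, sector_bytes: int) -> int:
    """Round one log flush up to a whole number of sectors."""
    sectors = -(-payload_bytes // sector_bytes)  # ceiling division
    return sectors * sector_bytes


TRANSACTIONS = 10_000
RECORD_BYTES = 100  # hypothetical size of the log records for one insert


def log_usage(sector_bytes: int) -> tuple:
    """Return (bytes for one commit per insert, bytes for one big transaction)."""
    per_row_commits = TRANSACTIONS * flush_bytes(RECORD_BYTES, sector_bytes)
    single_commit = flush_bytes(TRANSACTIONS * RECORD_BYTES, sector_bytes)
    return per_row_commits, single_commit


for sector in (4096, 512):
    many, one = log_usage(sector)
    print(f"{sector}-byte sectors: {many / 1e6:.2f} MB vs {one / 1e6:.2f} MB")
```

Under these assumptions the first pattern costs about 41 MB with 4 KB sectors and about 5 MB with 512-byte sectors, while the single large transaction stays at roughly 1 MB in both cases.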

Note that the example shows how different sector sizes affect the log size in those particular cases. We do not have much choice over the sector size, because it is set by the hardware vendor. We do, however, control our transaction size and our data access layer, and we should prefer to batch this kind of data modification instead of sending the changes as separate small transactions.

Transaction Log structures and how they are written

The typical transaction log workload pattern in OLTP systems is sequential log writes of up to 60 KB and sequential log reads of up to 60 KB. Operations inside the database generate transaction log records. A log record contains information about data modifications (previous/next data page LSNs, modified data values, transaction information). At the beginning of a transaction a begin record is created, and the end of a typical transaction is marked by a commit record, which indicates that the transaction must be reflected in the database's data files or redone if necessary. You can easily find all the records for a transaction using the undocumented function fn_dblog.

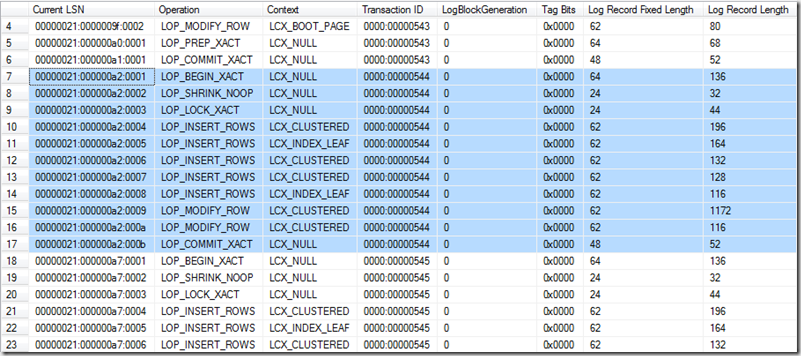

For example, executing SELECT * FROM fn_dblog(NULL, NULL) in the current database returns a result set in which you can easily see the records for transaction 544 (rows 7 to 17), the begin transaction record (LOP_BEGIN_XACT), the commit transaction record (LOP_COMMIT_XACT), their sizes, and a lot more information.

As you probably know, at least from my previous blog posts on log fragmentation, the transaction log is a sequential file made up of virtual log files (VLFs). The VLF is the smallest unit of log management: parts of the log are marked active, and the log is truncated, in whole VLFs. VLFs are split internally into dynamically sized log blocks, ranging from 512 bytes to 60 KB in 512-byte increments, as needed.

When a transaction commits, all of its transaction log records must be written to disk, which forces an actual physical I/O operation. Not every transaction log record is written to disk as a separate I/O operation. Log records are grouped into log blocks, which are the unit of a write I/O operation in the transaction log. Log blocks are dynamically sized according to specific internal algorithms, but their size is always a multiple of the sector size (usually 512 bytes) and their maximum size is 60 KB. A change of log block can be seen in the Current LSN column of the fn_dblog result set as a change in the middle number (000000a2 for transaction 544). Note that log blocks have different sizes depending on the transaction (the sum of the Log Record Length column, in bytes). Paul has a good post showing the importance of transaction size and of batching data modifications for log block sizes and the resulting transaction log I/O optimizations. Again, both a large number of very small transactions and very large transactions can hurt performance.
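To illustrate how the middle part of the LSN identifies the log block, here is a minimal Python sketch that groups log records by block and sums their lengths. The rows are made-up sample values in the shape of the Current LSN and Log Record Length columns, not real fn_dblog output, and the function names are hypothetical.

```python
def log_block_of(lsn: str) -> tuple:
    """Split a 'vlf:block:slot' LSN (hex parts) and return (vlf, block) as integers."""
    vlf, block, _slot = (int(part, 16) for part in lsn.split(":"))
    return vlf, block


def bytes_per_log_block(rows) -> dict:
    """Sum the log record lengths for every distinct log block."""
    totals = {}
    for lsn, record_length in rows:
        key = log_block_of(lsn)
        totals[key] = totals.get(key, 0) + record_length
    return totals


# Hypothetical sample rows: (Current LSN, Log Record Length in bytes).
sample_rows = [
    ("00000021:000000a2:0001", 96),
    ("00000021:000000a2:0002", 112),
    ("00000021:000000a2:0003", 52),
    ("00000021:000000a5:0001", 96),
    ("00000021:000000a5:0002", 52),
]

for (vlf, block), total in bytes_per_log_block(sample_rows).items():
    print(f"VLF {vlf:08x}, block {block:08x}: {total} bytes of log records")
```

In this sample the records fall into two log blocks (000000a2 and 000000a5), carrying 260 and 148 bytes of log records respectively.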

What we can do and what are the configurable values?

We can configure the stripe size and the file allocation unit size, and we have to make sure the partitions are aligned (on operating systems prior to Windows Server 2008).

We recommend a 64 KB or 256 KB stripe size for most workloads. When the workload includes table and index range scans on tables that are larger than 100 MB, a stripe size of 256 KB allows for more efficient read-ahead.

It is very hard to know the workload in detail and to configure these values in the best possible way. That is why the recommendation covers the average workload type and mentions the exceptions.

Small stripe sizes work better for small I/O requests because you can process lots of them in parallel using most of your spindles. With a small stripe size, a large I/O request pays the seek time on many spindles to read only a few kilobytes from each, so if your I/O requests are large, increasing the stripe size can help you get more throughput by reading a lot of data at a small cost. Large stripe sizes usually work better in scenarios with few parallel requests.

If your I/O requests are small and your stripe size is large, the load can end up poorly distributed among the disks, and you cannot evenly utilize the spindles you have. You cannot get the maximum throughput because your I/O operations are not parallelized. The smallest addressable unit of the array is the stripe, so very large stripes can hurt performance when storing small files or writing transaction log streams.
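A simple way to reason about this is to count how many stripe units a single I/O request touches. The sketch below is a simplified model that ignores caching and controller behavior; the function name is hypothetical.

```python
KB = 1024


def stripe_units_touched(offset: int, length: int, stripe_unit: int) -> int:
    """Count the stripe units covered by a request of `length` bytes at byte `offset`."""
    first_unit = offset // stripe_unit
    last_unit = (offset + length - 1) // stripe_unit
    return last_unit - first_unit + 1


# A small 8 KB request fits in a single stripe unit.
print(stripe_units_touched(0, 8 * KB, 64 * KB))
# A 256 KB request is spread over several 64 KB stripe units...
print(stripe_units_touched(0, 256 * KB, 64 * KB))
# ...but lands on a single 256 KB stripe unit.
print(stripe_units_touched(0, 256 * KB, 256 * KB))
# A 64 KB request starting at the misaligned 63-sector offset spans two units.
print(stripe_units_touched(63 * 512, 64 * KB, 64 * KB))
```

The last call also shows the cost of a misaligned partition: a request that should fit exactly in one stripe unit ends up touching two.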

The file allocation unit size (cluster size) recommended for SQL Server is 64 KB.

An appropriate value for most installations should be 65,536 bytes (that is, 64 KB) for partitions on which SQL Server data or log files reside. In many cases, this is the same size for Analysis Services data or log files, but there are times where 32 KB provides better performance. To determine the right size, you will need to do performance testing with your workload comparing the two different block sizes.

In the absence of an explicit vendor recommendation, use a partition offset that complies with the correlation discussed in the section “Correlations: Partition Offset, File Allocation Unit Size, and Stripe Unit Size”. 64 KB is a common, valid starting partition offset because it correlates well with fundamental physical boundaries in disks, controllers, and cache. Other valid starting partition offsets exist. The Windows Server 2008 default is 1024 KB.

The most important thing to consider, however, is not these configurations. Even when those settings are a given that we cannot change (shared SAN, storage admin decisions, and so on), we can live with that. What really matters are the initial file size and autogrowth settings of our database and transaction log files. If we do not set them correctly, or leave them at their defaults, we risk ending up with heavy disk and file fragmentation that hurts I/O performance. (See my previous blog posts addressing fragmentation.)