ICYMI: Recent Microsoft AI Platform Updates, Including in ONNX, Deep Learning, Video Indexer & More

Four recent Microsoft posts about AI developments, just in case you missed them.

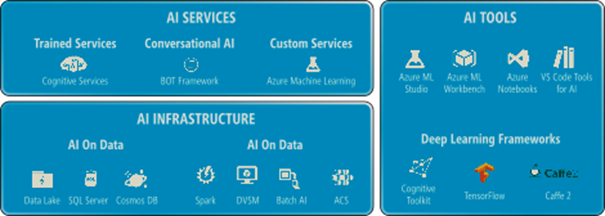

1. Getting Started with Microsoft AI – MSDN Article

This MSDN article, co-authored by Joseph Sirosh and Wee Hyong Tok, summarizes the capabilities offered by the Microsoft AI platform and how you can get started today. From Cognitive Services that help you build intelligent apps, to customizing state-of-the-art computer vision deep learning models, to building deep learning models of your own with Azure Machine Learning, the Microsoft AI platform is open and flexible, and gives developers the tools best suited to their wide range of scenarios and skill levels. Click here or on the image below to read the original article on MSDN.

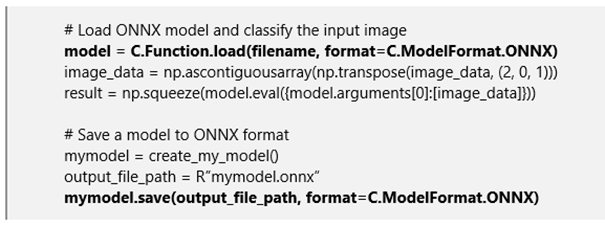

2. Announcing ONNX 1.0 – An Open Ecosystem for AI

Microsoft firmly believes in bringing AI advances to all developers, on any platform, using any language, and with an open AI ecosystem that helps us ensure that the fruits of AI are broadly accessible. In December, we announced that Open Neural Network Exchange (ONNX), an open-source model representation for interoperability and innovation in the AI ecosystem co-developed by Microsoft, is production-ready. The ONNX format is the basis of an open ecosystem that makes AI more accessible and valuable to all – developers can choose the right framework for their task; framework authors can focus on innovative enhancements; and hardware vendors can streamline their optimizations. ONNX provides a stable specification that developers can implement against, and we have incorporated many enhancements based on community feedback. We also announced Microsoft Cognitive Toolkit support for ONNX. Click here or on the image below to read the original article.

3. Auto-Scaling Deep Learning Training with Kubernetes

Microsoft recently partnered with a Bay Area startup, Litbit, on a project to auto-scale deep learning training. Litbit helps customers turn their "Internet of Things" into conscious personas that can learn, think and do helpful things. Customers can train their AI personas using sight, sound, touch and other sensors to recognize specific situations.

Since different customers may be training different AI personas at different times, the training load tends to be bursty and unpredictable. Some of these training jobs (e.g., Spark ML) make heavy use of CPUs, while others (e.g., TensorFlow) make heavy use of GPUs. Among the GPU jobs, some retrain a single layer of the neural net and finish very quickly, while others need to train an entirely new neural net and can take several hours or even days. To meet these diverse training needs in a cost-efficient way, Litbit needed a system that could scale different types of VM pools (e.g., CPU-only, light GPU, heavy GPU) up or down, based on demand.

This article shares the lessons learned from this scenario. While there are many options for running containerized distributed deep learning at scale, we selected Kubernetes due to its strong cluster management technology and the large developer community around it. We start by creating a Kubernetes cluster with GPU support on Azure to run different types of machine learning loads. Then we add auto-scaling capability to the Kubernetes cluster to meet bursty demands in a cost-efficient manner. Learn more at this link or by clicking on the image below.
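To make the idea of demand-based scaling across several VM pools a little more concrete, here is a minimal Python sketch. It is not the approach from the original article, which relies on Kubernetes and Azure. The `Pool` class, the pool names, the capacity figures and the sizing rule (queued jobs divided by jobs per node, clamped to the pool's limits) are all illustrative assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class Pool:
    """A hypothetical VM pool; every field here is an illustrative assumption."""
    name: str
    min_nodes: int      # never scale below this many nodes
    max_nodes: int      # never scale above this many nodes
    jobs_per_node: int  # assumed number of jobs one node can run at once


def desired_nodes(pool: Pool, queued_jobs: int) -> int:
    """Return the node count needed for the queued jobs, clamped to pool limits."""
    needed = math.ceil(queued_jobs / pool.jobs_per_node)
    return max(pool.min_nodes, min(pool.max_nodes, needed))


def plan_scaling(pools, queued, current):
    """Return, per pool, how many nodes to add (positive) or remove (negative)."""
    plan = {}
    for pool in pools:
        target = desired_nodes(pool, queued.get(pool.name, 0))
        plan[pool.name] = target - current.get(pool.name, 0)
    return plan


if __name__ == "__main__":
    # Hypothetical pools mirroring the CPU-only / light GPU / heavy GPU split.
    pools = [
        Pool("cpu-only", min_nodes=1, max_nodes=10, jobs_per_node=4),
        Pool("light-gpu", min_nodes=0, max_nodes=4, jobs_per_node=2),
        Pool("heavy-gpu", min_nodes=0, max_nodes=2, jobs_per_node=1),
    ]
    queued = {"cpu-only": 9, "light-gpu": 0, "heavy-gpu": 5}
    current = {"cpu-only": 1, "light-gpu": 2, "heavy-gpu": 0}
    print(plan_scaling(pools, queued, current))
```

Keeping a separate minimum and maximum per pool is one simple way to let idle GPU pools shrink to zero while capping the cost of a sudden burst. A real autoscaler would also need to account for node start-up time and jobs that are already running.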

4. Bring Your Own Vocabulary to Microsoft Video Indexer

Many of us who recall our first day at a new job can relate to the feeling of being overwhelmed by all the new jargon – new words, product names and cryptic acronyms, not to mention the right context in which to use them. After a few days, however, we likely began to understand several of those new words and acronyms and started adapting to all the new lingo that was thrown at us. While automatic speech recognition (ASR) systems are great, when it comes to recognizing a specialized vocabulary, these systems are just like humans – they need to adapt as well. We were delighted, therefore, to announce Microsoft Video Indexer (VI) support for a customization layer for speech recognition, allowing you to teach the ASR engine new words and acronyms, and how they're used in your context. VI supports industry- and business-specific customizations for ASR via integration with the Microsoft Custom Speech Service.

Speech recognition systems represent AI at its best, mimicking the human cognitive ability to extract words from audio. By customizing ASR in VI, you can tailor things to your specialized needs. Learn more at this link or by clicking on the image below.

Merry Christmas & happy holidays!

ML Blog Team