How to Develop a Currency Detection Model using Azure Machine Learning

This post is authored by Xiaoyong Zhu, Anirudh Koul and Wee Hyong Tok of Microsoft.

Introduction

How does one teach a machine to see?

Seeing AI is an exciting Microsoft research project that harnesses the power of Artificial Intelligence to open the visual world and describe nearby people, objects, text, colors and more using spoken audio. Designed for the blind and low vision community, it helps users understand more about their environment, including who and what is around them. Today, our iOS app has empowered users to complete over 5 million tasks unassisted, including many "first in a lifetime" experiences for the blind community, such as taking and posting photos of their friends on Facebook, independently identifying products when shopping at a store, reading homework to kids, and much more. To learn more about Seeing AI, visit our web page.

One of the most common needs of the blind community is the ability to recognize paper currency. Currency notes are usually inaccessible, being hard to recognize purely by touch. To address this need, the Seeing AI team built a real-time currency recognizer that uses spoken audio to identify the currency currently in view, with high precision and low latency (generally under 25 milliseconds). Since our target users often cannot perceive whether a currency note is in the camera's view, real-time spoken feedback helps them hold the note until it is clearly visible at the right distance and in the right lighting conditions.

In this blog post, we are excited to be able to share with you the secrets behind building and training such a currency prediction model, as well as deploying it to the intelligent cloud and intelligent edge. More specifically, you will learn how to:

- Build a deep learning model on small data using transfer learning. We will develop the model using Keras, the Deep Learning Virtual Machine (DLVM), and Visual Studio Tools for AI.

- Create a scalable API with just one line of code, using Azure Machine Learning.

- Export a mobile optimized model using CoreML.

Boost AI Productivity with the Right Tools

When developing deep learning models, using the right AI tools can boost your productivity – specifically, a VM that is pre-configured for deep learning development, and a familiar IDE that integrates deeply with such a deep learning environment.

Deep Learning Virtual Machine (DLVM)

The Deep Learning Virtual Machine (DLVM) enables users to jumpstart their deep learning projects. DLVM is pre-packaged with lots of useful tools such as pre-installed GPU drivers, popular deep learning frameworks and more, and it can facilitate any deep learning project. Using DLVM, data scientists can become productive in a matter of minutes.

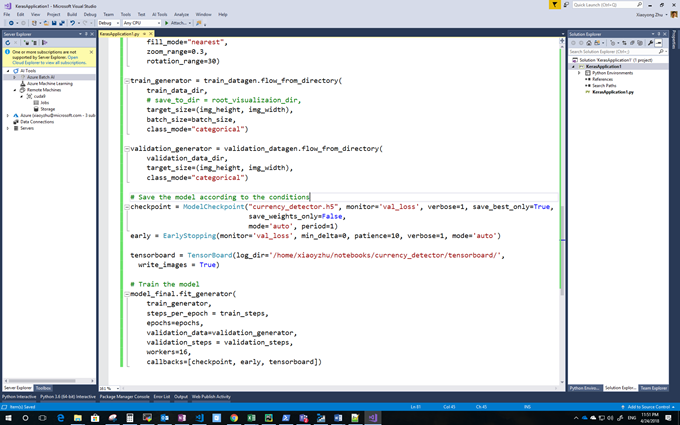

Visual Studio Tools for AI

Visual Studio Tools for AI is an extension that supports deep learning frameworks including Microsoft Cognitive Toolkit (CNTK), TensorFlow, Keras, Caffe2 and more. It provides nice language features such as IntelliSense, as well as debugging capabilities such as TensorBoard integration. These features make it an ideal choice for cloud-based AI development.


Building the Currency Detection Model

In this section we describe how to build and train a currency detection model and deploy it to Azure and the intelligent edge.

Dataset Preparation and Pre-Processing

Let's first look at how to create the dataset needed for training the model. In our case, the dataset consists of 15 classes. These include 14 classes denoting the different denominations (including both the front and back of each currency note), and an additional class denoting "background". Each class has around 250 images, with notes placed in various locations and at different angles (see below). You can easily create the dataset needed for training in half an hour with your phone.

For the "background" class, you can use images from ImageNet Samples. We put 5 times more images in the background class than the other classes, to make sure the deep learning algorithm does not learn a pattern.
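Before training, it can help to check that the dataset on disk matches these proportions. The sketch below assumes a one-folder-per-class layout (for example `dataset/background/`, `dataset/<denomination>_front/`), which is an assumption for illustration and not something the post specifies; the function names and the folder name `background` are hypothetical.

```python
from pathlib import Path

# File extensions treated as images (an assumption; extend as needed).
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def count_images_per_class(root):
    """Return {class_name: image_count} for each sub-folder of `root`."""
    counts = {}
    for class_dir in sorted(Path(root).iterdir()):
        if class_dir.is_dir():
            counts[class_dir.name] = sum(
                1
                for path in class_dir.iterdir()
                if path.is_file() and path.suffix.lower() in IMAGE_SUFFIXES
            )
    return counts


def background_ratio(counts, background="background"):
    """Background image count divided by the average size of the other classes."""
    others = [n for name, n in counts.items() if name != background]
    return counts[background] / (sum(others) / len(others))
```

With roughly 250 images per denomination class and 5 times as many background images, `background_ratio(count_images_per_class("dataset"))` should come out close to 5.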

Once you have created the dataset, and trained the model, you should be able to get an accuracy of approximately 85% using the transfer learning and data augmentation techniques mentioned below. If you want to improve the performance, refer to the "Further Discussion" section below.

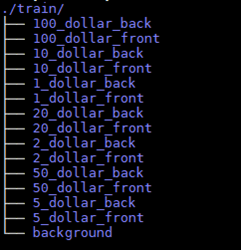

Data Organization Structure

Below is an illustration for one of the samples in the training dataset. We experimented with different options and found that the training data should contain a few images of the kind described below, so that the model can get decent performance when applied in real life scenarios.

- The currency note should occupy at least 1/6 of the whole image.

- The currency note should be displayed at different angles.

- The currency note should be present in various locations in the image (e.g. top left corner, bottom right corner, and so forth).

- There should be some foreground objects covering a portion of the currency (no more than 40% though).

- The backgrounds should be as diverse a set as possible.

One of the pictures in the dataset

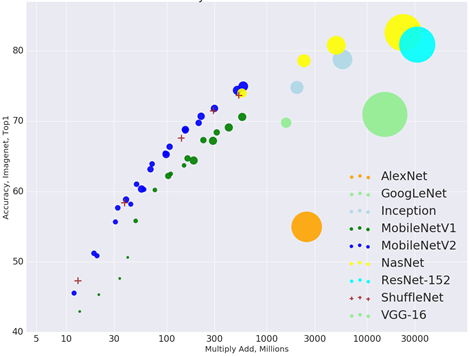

Choosing the Right Model

Tuning deep neural architectures to strike an optimal balance between accuracy and performance has been an area of active research for the last few years. This becomes even more challenging when you need to deploy the model to mobile devices and still ensure it is high-performing. For example, when building the Seeing AI applications, the currency detector model needs to run locally on the cell phone. Inference on the phone therefore needs to have low latency to ensure that the best user experience is being delivered but without sacrificing accuracy.

One of the most important metrics for measuring the number of operations performed during model inference is called multiply-adds, abbreviated as MAdd. The trade-offs between speed and accuracy across different models are shown in the figure below.

Accuracy vs time, the size of each blob represents the number of parameters

(Source: https://github.com/tensorflow/models/tree/master/research/slim/nets/mobilenet)

In Seeing AI, we choose to use MobileNet because it's fast enough on cell phones and provides decent performance, based on our empirical experiments.
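To make the multiply-add metric concrete, here is a small sketch that counts MAdds for a single convolutional layer. It compares a standard convolution with the depthwise separable convolution that MobileNet is built on. This is background from the MobileNet design rather than something stated in this post, and the function names are hypothetical.

```python
def standard_conv_madds(kernel, in_channels, out_channels, out_size):
    """Multiply-adds for a standard kernel x kernel convolution.

    `out_size` is the width (= height) of the square output feature map.
    """
    return kernel * kernel * in_channels * out_channels * out_size * out_size


def depthwise_separable_madds(kernel, in_channels, out_channels, out_size):
    """Multiply-adds for a depthwise convolution followed by a 1x1 pointwise one."""
    depthwise = kernel * kernel * in_channels * out_size * out_size
    pointwise = in_channels * out_channels * out_size * out_size
    return depthwise + pointwise


def cost_ratio(kernel, in_channels, out_channels, out_size):
    """Separable cost as a fraction of the standard cost."""
    return depthwise_separable_madds(
        kernel, in_channels, out_channels, out_size
    ) / standard_conv_madds(kernel, in_channels, out_channels, out_size)
```

The ratio simplifies to 1/out_channels + 1/kernel², so for 3x3 kernels a separable layer needs roughly 8 to 9 times fewer multiply-adds than a standard one, which is a large part of why this family of models runs quickly on phones.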

Build and Train the Model

Since the data set is small (only 250 images per class), we use two techniques to solve the problem:

- Doing transfer learning with pre-trained models on large datasets.

- Using data augmentation techniques.

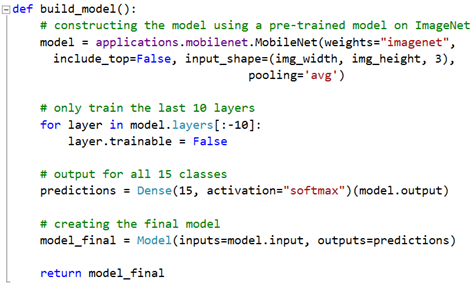

Transfer Learning

Transfer learning is a machine learning method where you start off using a pre-trained model and adapt and fine-tune it for other domains. For use cases such as Seeing AI, since the dataset is not large enough, starting with a pre-trained model and further fine-tuning the model can reduce training time and alleviate possible overfitting.

In practice, transfer learning often involves "freezing" the weights of some layers of a pre-trained model (typically the early layers, closest to the input) and letting the remaining layers train normally, so that back-propagation can still change their weights. Doing this in Keras is quite easy: set the trainable attribute of each layer you want to freeze to False, and Keras will stop updating the parameters of those layers while still updating the weights of the remaining layers through back-propagation:

Transfer learning in Keras

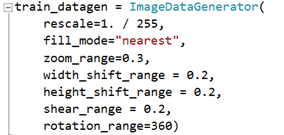
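As a rough sketch of the freezing step described above (not necessarily the exact code used in Seeing AI), the following builds a MobileNet-based classifier and freezes its first layers. The number of frozen layers, the input size, the optimizer and the function names are assumptions chosen for illustration; only the 15-class output comes from the dataset described earlier.

```python
def freeze_layers(model, num_frozen):
    """Freeze the first `num_frozen` layers and leave the rest trainable."""
    for layer in model.layers[:num_frozen]:
        layer.trainable = False
    for layer in model.layers[num_frozen:]:
        layer.trainable = True
    return model


def build_currency_model(num_classes=15, num_frozen=60):
    """MobileNet pre-trained on ImageNet with a new classification head.

    `num_frozen=60` is a hypothetical value; tune it for your own data.
    """
    # Imported here so freeze_layers() can be used without TensorFlow installed.
    from tensorflow import keras

    base = keras.applications.MobileNet(
        input_shape=(224, 224, 3),
        include_top=False,   # drop the 1000-class ImageNet classifier
        weights="imagenet",
        pooling="avg",       # global average pooling over the last feature map
    )
    outputs = keras.layers.Dense(num_classes, activation="softmax")(base.output)
    model = keras.Model(inputs=base.input, outputs=outputs)

    freeze_layers(model, num_frozen)
    # Compile after changing `trainable` so that the change takes effect.
    model.compile(
        optimizer="adam", loss="categorical_crossentropy", metrics=["accuracy"]
    )
    return model
```

Freezing fewer layers lets the model adapt more to currency images but raises the risk of overfitting on a small dataset, so the cut-off point is worth treating as a hyperparameter.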

Data Augmentation

Since we don't have enough input data, another approach is to reuse existing data as much as possible. For images, common techniques include shifting the images, zooming in or out of the images, rotating the images, etc., which could be easily done in Keras:

Data Augmentation
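A minimal sketch of such an augmentation setup, using the Keras ImageDataGenerator class, might look like the following. The specific ranges, the image size and the one-folder-per-class directory layout are assumptions for illustration, not the values used by the authors.

```python
# Hypothetical augmentation settings; tune the ranges for your own data.
AUGMENTATION = {
    "rescale": 1.0 / 255,        # scale pixel values to [0, 1]
    "rotation_range": 30,        # rotate by up to +/-30 degrees
    "width_shift_range": 0.2,    # shift horizontally by up to 20% of the width
    "height_shift_range": 0.2,   # shift vertically by up to 20% of the height
    "zoom_range": 0.2,           # zoom in or out by up to 20%
    "fill_mode": "nearest",      # fill newly exposed pixels from the nearest edge
}


def make_training_generator(data_dir, image_size=(224, 224), batch_size=32):
    """Yield batches of randomly augmented images read from `data_dir`.

    Assumes `data_dir` contains one sub-folder per class.
    """
    # Imported here so the settings above can be inspected without TensorFlow.
    from tensorflow.keras.preprocessing.image import ImageDataGenerator

    datagen = ImageDataGenerator(**AUGMENTATION)
    return datagen.flow_from_directory(
        data_dir,
        target_size=image_size,
        batch_size=batch_size,
        class_mode="categorical",  # one-hot labels, one per class folder
    )
```

Horizontal and vertical flips are deliberately left out here, on the assumption that a mirrored currency note never occurs in real use.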

Deploy the Model

Deploying to the Intelligent Edge

For applications such as Seeing AI, we want to run the models locally, so that the application can always be used even when the internet connection is poor. Exporting a Keras model to CoreML, which can be consumed by an iOS application, can be achieved with coremltools:

model_coreml = coremltools.converters.keras.convert("currency_detector.h5", image_scale = 1./255)

Deploying to Azure as a REST API

In other cases, data scientists want to deploy a model and expose an API that the developer team can then use. Releasing a model as a REST API is often challenging in enterprise scenarios, and Azure Machine Learning services enable data scientists to deploy their models to the cloud easily, in a secure and scalable way. To operationalize your model using Azure Machine Learning, you can use the Azure Machine Learning command line interface and specify the required configurations using the AML operationalization module, as shown below:

az ml service create realtime -f score.py --model-file currency_detector.pkl -s service_schema.json -n currency_detector_api -r python --collect-model-data true -c aml_config\conda_dependencies.yml

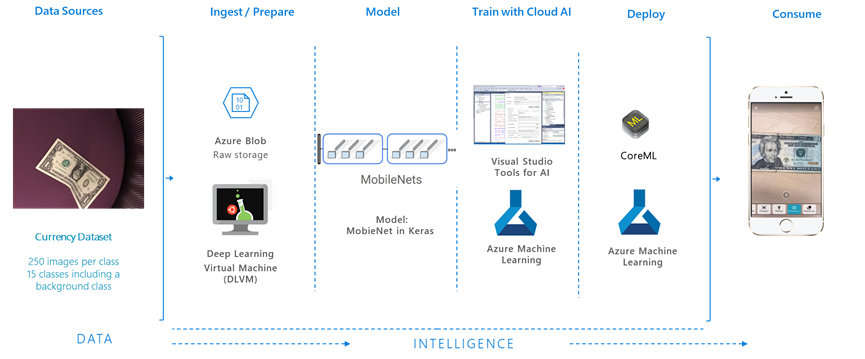

The end to end architecture is as below:

End to end architecture for developing the currency detection model and deploying to the cloud and intelligent edge devices

Further Discussion

Even Faster Models

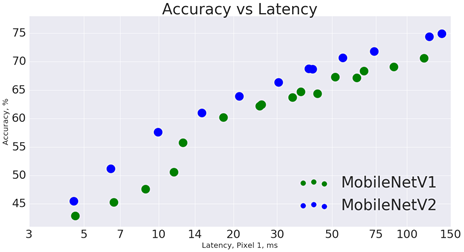

Recently, a newer version of MobileNet called MobileNetV2 was released. Tests done by the authors show that the newer version is 35% faster than the V1 version when running on a Google Pixel phone using the CPU (200ms vs. 270ms) at the same accuracy. This enables a more pleasant user experience.

Accuracy vs Latency between MobileNet V1 and V2

(Source: https://github.com/tensorflow/models/tree/master/research/slim/nets/mobilenet)

Synthesizing More Training Data

To improve the performance of the models, getting more data is the key. While we can collect more real-life data, we should also investigate ways to synthesize more data.

A simple approach is to use images that are of sufficient resolution for various currencies, such as the image search results returned by Bing, transform these images, and overlay them on a diverse set of images, such as a small sample of ImageNet. OpenCV provides several transformation techniques. These include scaling, rotation, affine and perspective transformations.
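To illustrate the rotation and scaling step, the sketch below builds the 2x3 affine matrix for rotating and scaling a note image about its center, using the same matrix layout as OpenCV's `cv2.getRotationMatrix2D`. It is written in plain Python so the arithmetic is visible; in a real pipeline the matrix would typically be passed to `cv2.warpAffine` before the note is overlaid on a background. The helper names are hypothetical.

```python
import math


def rotation_matrix_2d(center, angle_deg, scale):
    """2x3 matrix that rotates by `angle_deg` and scales about `center`.

    Follows the layout of cv2.getRotationMatrix2D: positive angles rotate
    counter-clockwise in image coordinates (origin at the top-left corner).
    """
    cx, cy = center
    a = scale * math.cos(math.radians(angle_deg))
    b = scale * math.sin(math.radians(angle_deg))
    return [
        [a, b, (1 - a) * cx - b * cy],
        [-b, a, b * cx + (1 - a) * cy],
    ]


def apply_affine(matrix, point):
    """Map a single (x, y) point through a 2x3 affine matrix."""
    x, y = point
    return (
        matrix[0][0] * x + matrix[0][1] * y + matrix[0][2],
        matrix[1][0] * x + matrix[1][1] * y + matrix[1][2],
    )


def transform_corners(width, height, angle_deg, scale):
    """Where the four corners of a width x height note image end up."""
    matrix = rotation_matrix_2d((width / 2, height / 2), angle_deg, scale)
    corners = [(0, 0), (width, 0), (width, height), (0, height)]
    return [apply_affine(matrix, corner) for corner in corners]
```

Knowing where the corners land makes it possible to check that a transformed note still fits inside the chosen background and covers enough of it before the composite image is written out.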

In practice, we train the algorithm on synthetic data and validate the algorithm on the real-world dataset we collected.

Conclusion

In this blog post, we described how you can build your own currency detector quite easily using Deep Learning Virtual Machines and Azure. We showed you how to build, train and deploy powerful deep learning models using a small dataset. We were also able to export the model to CoreML with just a single line of code, enabling you to build innovative mobile apps on iOS.

Our code is open source on GitHub. Feel free to contact us with any questions or comments.

Xiaoyong, Anirudh & Wee Hyong

(Xiaoyong can be reached via email at xiaoyzhu@microsoft.com)