Deep Neural Networks in Azure

This post is authored by Anusua Trivedi, Data Scientist, and Jamie F Olson, Analytics Solutions Architect, of Microsoft.

This post extends Part 2 of our blog series. In our earlier post, we showed how to train a Deep Convolutional Neural Network (DCNN) to classify medical images to detect Diabetic Retinopathy. To train the DCNN, we used an NC24 VM running Ubuntu 16.04. N-Series VM sizes are currently in preview and available to select users at https://gpu.azure.com. In this post, we show you how to operationalize an Azure GPU-trained DCNN model using Azure ML and Azure ML Studio.

Before you get started, you'll need the Anaconda Python distribution installed either on your local Windows machine or on a Windows Data Science Virtual Machine. For this post, we retrained an ImageNet-pretrained GoogleNet model on the Kaggle Diabetic Retinopathy dataset and saved the retrained model weights to a pickle file.

To deploy a DCNN model in Azure ML, you'll need to upload any Python packages you're using, along with your pickle file, into Azure ML Studio as a zip file Script Bundle. Although the Azure ML Python client library identifies local variables and uploads them automatically, it does not currently support additional third-party packages.

There are many ways to prepare your Script Bundle. This blog shows some neat tricks for doing so, but we'll keep things simple here.

We'll just use pip install:

> mkdir bundle\lib
> pip install --no-deps --target=bundle\lib theano
> pip install --no-deps --target=bundle\lib lasagne
> pip install --no-deps --target=bundle\lib six
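If you'd rather script this step, here is a minimal sketch that runs the same pip commands from Python. The function name `vendor_packages` is hypothetical, and the version pins are an assumption read off the `dist-info` folders in the directory listing below; run it with the same Python version as your Azure ML runtime.

```python
import subprocess
import sys

# Hypothetical pins, assumed from the Theano-0.8.2, Lasagne-0.1 and
# six-1.10.0 dist-info folders in the bundle listing.
PACKAGES = ["theano==0.8.2", "lasagne==0.1", "six==1.10.0"]


def vendor_packages(lib_dir, packages=PACKAGES, run=subprocess.check_call):
    """Install each package, without its dependencies, into lib_dir.

    `run` receives the full command list, so it can be swapped out
    (for example, to do a dry run that only records the commands).
    """
    commands = []
    for pkg in packages:
        cmd = [sys.executable, "-m", "pip", "install",
               "--no-deps", "--target=" + lib_dir, pkg]
        run(cmd)
        commands.append(cmd)
    return commands
```

For example, `vendor_packages(os.path.join("bundle", "lib"))` mirrors the three commands above; passing `run=print` shows the commands without installing anything.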

In addition to these packages, we're using a slightly modified version of the Lasagne implementation of GoogleNet. We'll put that in the lib directory too, and put our pickle file in the bundle directory:

> dir bundle\

    Directory: xyz\bundle

Mode          LastWriteTime   Length Name
----          -------------   ------ ----
d-----   10/5/2016 12:25 PM          lib
-a----   6/30/2016 12:19 AM 28006279 trained_googlenet.pkl

> dir bundle\lib

    Directory: xyz\bundle\lib

Mode          LastWriteTime   Length Name
----          -------------   ------ ----
d-----   10/5/2016 12:21 PM          lasagne
d-----   10/5/2016 12:21 PM          Lasagne-0.1.dist-info
d-----   10/5/2016 12:25 PM          six-1.10.0.dist-info
d-----   10/5/2016 12:11 PM          theano
d-----   10/5/2016 12:11 PM          Theano-0.8.2.dist-info
-a----   10/5/2016 12:53 PM     4888 googlenet.py
-a----    9/9/2016  1:27 PM     3456 googlenet.pyc
-a----   10/5/2016 12:25 PM    30098 six.py
-a----   10/5/2016 12:25 PM    31933 six.pyc
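Before zipping, it can help to confirm that the bundle matches this layout. A minimal sketch, assuming the file and folder names shown in the listings above; `missing_from_bundle` and `EXPECTED` are hypothetical names:

```python
import os

# Hypothetical checklist, taken from the directory listings above.
EXPECTED = [
    "trained_googlenet.pkl",
    os.path.join("lib", "googlenet.py"),
    os.path.join("lib", "six.py"),
    os.path.join("lib", "theano"),
    os.path.join("lib", "lasagne"),
]


def missing_from_bundle(bundle_dir, expected=EXPECTED):
    """Return the expected entries that do not exist under bundle_dir."""
    return [rel for rel in expected
            if not os.path.exists(os.path.join(bundle_dir, rel))]
```

An empty list from `missing_from_bundle("bundle")` means every expected entry is in place.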

Now we're ready to zip it up:

> zip bundle.zip -r bundle\

Now upload the zip file to Azure ML Studio as a dataset so that we can use it as a Script Bundle. The zip file contains a single directory (named "bundle"), which is extracted as "Script Bundle\bundle" inside the Azure ML working directory.
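A `zip` command may not be available on a stock Windows install, so here is a minimal cross-platform sketch using Python's standard `zipfile` module instead. It keeps `bundle/` as the single top-level folder described above; `zip_bundle` is a hypothetical helper name.

```python
import os
import zipfile


def zip_bundle(bundle_dir, zip_path):
    """Zip bundle_dir so that every entry sits under one top-level folder.

    With bundle_dir = "bundle", entries look like "bundle/lib/six.py",
    which Azure ML then extracts under "Script Bundle".
    """
    bundle_dir = os.path.abspath(bundle_dir)
    parent = os.path.dirname(bundle_dir)
    names = []
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as zf:
        for root, _dirs, files in os.walk(bundle_dir):
            for name in files:
                full = os.path.join(root, name)
                # Archive names are relative to the bundle's parent folder,
                # so they keep the "bundle/" prefix.
                arcname = os.path.relpath(full, parent)
                zf.write(full, arcname)
                names.append(arcname.replace(os.sep, "/"))
    return names
```

Call it as `zip_bundle("bundle", "bundle.zip")`, writing the archive outside the bundle folder so the zip does not try to include itself.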

Next, set up your Execute Python Script module. You'll probably want to start by adding the packages you uploaded to the Python path:

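import os
import pkg_resources

BUNDLE_DIR = os.path.join(os.getcwd(), 'Script Bundle', 'bundle')
LIB_DIR = os.path.join(BUNDLE_DIR, 'lib')

# Add our custom library location to the Python path: find every
# distribution (*.dist-info) in LIB_DIR and add it to the global working
# set, which also puts its location on sys.path
env = pkg_resources.Environment([LIB_DIR])
packageList, errorList = pkg_resources.working_set.find_plugins(env)
for package in packageList:
    print("Adding %s" % package)
    pkg_resources.working_set.add(package)
# errorList maps each distribution that could not be loaded to its error
for package, error in errorList.items():
    print("Error %s: %s" % (package, error))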
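Because the bundle's `lib` directory holds plain package folders and modules, a simpler alternative (our assumption, not the approach used above) is to put that directory on `sys.path` directly. The pkg_resources route also registers each distribution's metadata, which only matters if some code looks packages up through pkg_resources; note that pkg_resources is deprecated in recent setuptools releases. `add_bundle_lib` is a hypothetical name.

```python
import os
import sys


def add_bundle_lib(lib_dir):
    """Put lib_dir at the front of sys.path (only once) and return it.

    Every package and module copied into lib_dir (theano/, lasagne/,
    six.py, googlenet.py) then becomes importable by name.
    """
    lib_dir = os.path.abspath(lib_dir)
    if lib_dir not in sys.path:
        sys.path.insert(0, lib_dir)
    return lib_dir
```

With `LIB_DIR` defined as above, `add_bundle_lib(LIB_DIR)` followed by `import googlenet` would load the bundled model definition.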

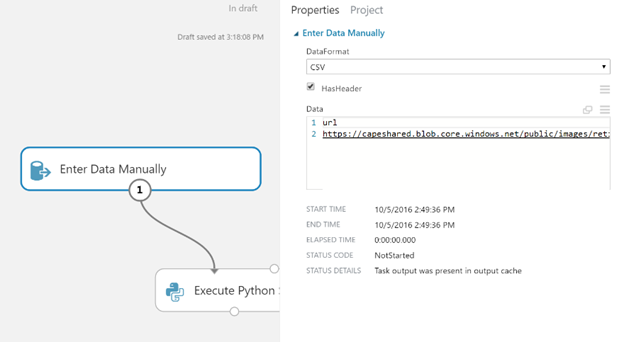

Now you need to write the model prediction (scoring) logic inside Azure ML Studio. For this example, we download the images from Azure Blob URLs, but you may be able to use the Import Images module if your images are compatible. For simplicity, this example uses publicly accessible URLs, but you can also access private Blob containers from Azure ML. The web service accepts a single column of URLs referencing the images to score; we entered a sample URL manually while building the experiment.
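A minimal sketch of the download step, written for Python 3's `urllib.request` (under Python 2 the equivalent call is `urllib2.urlopen`). The helper names are hypothetical. Inside Execute Python Script the entry point is `azureml_main(dataframe1, dataframe2)`, so the URL column would arrive as the first column of `dataframe1`. For a private container, one option is a Blob URL that carries a shared access signature (SAS) token in its query string.

```python
from urllib.request import urlopen


def fetch_image_bytes(url, timeout=30):
    """Download one image and return its raw bytes.

    Works for publicly readable Blob URLs of the form
    https://<account>.blob.core.windows.net/<container>/<name>
    (a placeholder pattern, not a real endpoint).
    """
    with urlopen(url, timeout=timeout) as resp:
        return resp.read()


def fetch_all(urls):
    """Download every URL in the input column, preserving order."""
    return [fetch_image_bytes(u) for u in urls]
```

Inside `azureml_main`, something like `images = fetch_all(dataframe1.iloc[:, 0])` would collect the raw image bytes for decoding and preprocessing.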

Before you can run your model in Azure ML, you may need to change how your model is built and executed: the Azure ML environment has no GPU resources, and no compilers or other development tools.

We had to change the GoogleNet model definition (bundle/lib/googlenet.py):

# The layers.dnn module requires a GPU on import, but Azure ML doesn't
# provide a GPU, so we need to use the non-GPU versions
#from lasagne.layers.dnn import Conv2DDNNLayer as ConvLayer
#from lasagne.layers.dnn import MaxPool2DDNNLayer as PoolLayerDNN
# Keep the original aliases so the rest of googlenet.py is unchanged
from lasagne.layers import Conv2DLayer as ConvLayer
from lasagne.layers import MaxPool2DLayer as PoolLayerDNN
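If you want a single googlenet.py that runs both on the GPU VM and in Azure ML, one sketch is to try the cuDNN layer first and fall back to the CPU layer. This assumes, as in Lasagne 0.1, that importing `lasagne.layers.dnn` raises `ImportError` when cuDNN isn't usable; `import_first` is a hypothetical helper.

```python
import importlib


def import_first(candidates):
    """Return the attribute from the first (module, name) pair that imports.

    Intended use: prefer a GPU-only layer class, else fall back to the
    CPU one. A missing attribute still raises AttributeError.
    """
    errors = []
    for module_name, attr in candidates:
        try:
            module = importlib.import_module(module_name)
        except ImportError as exc:
            errors.append("%s: %s" % (module_name, exc))
            continue
        return getattr(module, attr)
    raise ImportError("no candidate importable: " + "; ".join(errors))


# Example use in googlenet.py (requires Lasagne, so left commented out):
# ConvLayer = import_first([("lasagne.layers.dnn", "Conv2DDNNLayer"),
#                           ("lasagne.layers", "Conv2DLayer")])
# PoolLayerDNN = import_first([("lasagne.layers.dnn", "MaxPool2DDNNLayer"),
#                              ("lasagne.layers", "MaxPool2DLayer")])
```

It is worth checking that the fallback reproduces the GPU-trained model's scores: Lasagne's cuDNN convolution layer defaults to `flip_filters=False`, and the plain convolution layer may not use the same default in every version.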

We also had to change the Theano configuration:

import theano

# We have to use 'None' since we have neither a GPU nor a compiler
theano.config.optimizer = 'None'
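Theano also reads settings from the `THEANO_FLAGS` environment variable when it is first imported, so an alternative sketch (our assumption, not what we did above) is to set CPU-only flags before `import theano`. `cpu_only_theano_flags` is a hypothetical helper; `device=cpu` and `optimizer=None` are standard Theano config options.

```python
import os


def cpu_only_theano_flags(existing=""):
    """Merge CPU-only settings into a THEANO_FLAGS string.

    THEANO_FLAGS is a comma-separated list of key=value pairs; the keys
    set here replace any earlier value for the same key.
    """
    flags = {}
    for item in existing.split(","):
        if "=" in item:
            key, value = item.split("=", 1)
            flags[key.strip()] = value.strip()
    flags["device"] = "cpu"      # Azure ML provides no GPU
    flags["optimizer"] = "None"  # same setting as theano.config.optimizer
    return ",".join("%s=%s" % kv for kv in flags.items())


# Must run before the first "import theano" to take effect.
os.environ["THEANO_FLAGS"] = cpu_only_theano_flags(
    os.environ.get("THEANO_FLAGS", ""))
```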

Once that's done, load the pickle file into your model and apply any image processing needed to prepare the images for scoring. For more details, see this example in the Cortana Intelligence Gallery.
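The format of trained_googlenet.pkl isn't described here, so this loading sketch assumes, hypothetically, the layout used by the Lasagne Model Zoo pickles: a dict whose `"param values"` entry lists the weight arrays. `load_param_values` is a hypothetical name, and `encoding="latin1"` lets Python 3 read NumPy arrays that were pickled under Python 2.

```python
import pickle


def load_param_values(pkl_path, key="param values"):
    """Load the list of parameter arrays from a pickled model file.

    Assumes (hypothetically) a dict holding the weights under `key`;
    if the pickle is already a plain list, it is returned as-is.
    """
    with open(pkl_path, "rb") as f:
        # Python 3 only: latin1 decodes Python 2 pickles of NumPy arrays
        model = pickle.load(f, encoding="latin1")
    return model[key] if isinstance(model, dict) else model
```

If your copy of googlenet.py follows the Lasagne Recipes version (where `build_model()` returns a dict of layers ending in `net['prob']`), the weights could then be assigned with `lasagne.layers.set_all_param_values(net['prob'], load_param_values(path))`.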

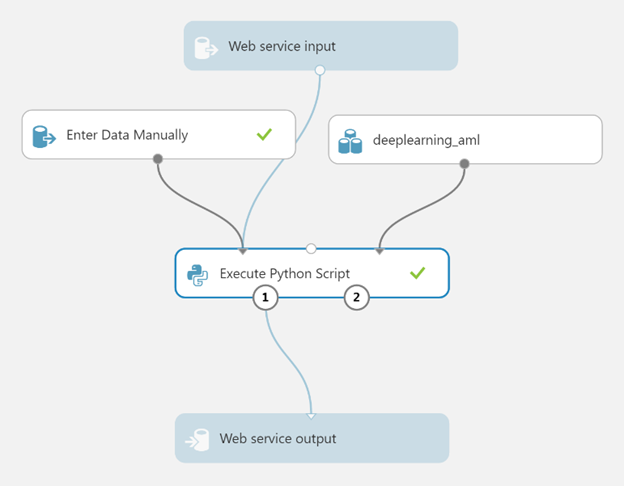

Our final experiment looks like this:

There you have it: a state-of-the-art deep convolutional neural network model, built with open source tools in Python and deployed as an Azure ML web service!

Anusua & Jamie

Contact Anusua at antriv@microsoft.com or via Twitter @anurive

Contact Jamie at jamieo@microsoft.com

Resources:

- Visit the Machine Learning Center and get started with Machine Learning.

- Watch the Predictive Modelling with Azure ML studio video.

- Sign up for a free trial.