Cortana Analytics Suite Helps Diebold Predict ATM Failures

This post is co-authored by Katherine Lin, Data Scientist, and Tao Wu, Principal Data Scientist Manager at Microsoft.

Unplanned machine downtime is a serious disruption to businesses across many industries, resulting in lost revenue, high unscheduled repair costs and customer dissatisfaction. With the advent of the Internet of Things (IoT), it is now possible to tackle this problem with predictive maintenance, using either periodic or continuous monitoring of equipment condition. With a quantitative understanding of machine health, we can predict when a machine is likely to break down and even identify the underlying causes. The ultimate goal of predictive maintenance is to perform maintenance tasks in the most cost-effective manner while ensuring optimal equipment performance.

So how is predictive maintenance being done these days, and how does it relate to data analytics? Simply put, machine learning algorithms are first trained on large amounts of data collected from the equipment to identify hidden patterns. The trained models are then used to predict future equipment failures that show similar patterns or signatures.
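The train-then-predict loop described above can be illustrated with a deliberately small sketch. It is not Diebold's model. It fits a plain logistic regression by gradient descent to a handful of made-up historical records and then scores new machines against the learned pattern. The feature names `vibration_level` and `recent_error_count` are hypothetical.

```python
import math
from typing import List, Sequence


def sigmoid(z: float) -> float:
    """Logistic function, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(w: List[float], x: Sequence[float]) -> float:
    """Failure probability for one feature vector; w[0] is the bias term."""
    return sigmoid(w[0] + sum(wj * xj for wj, xj in zip(w[1:], x)))


def train_logistic(X: List[Sequence[float]], y: List[int],
                   lr: float = 0.1, epochs: int = 2000) -> List[float]:
    """Fit logistic-regression weights by batch gradient descent on log-loss."""
    n, d = len(X), len(X[0])
    w = [0.0] * (d + 1)
    for _ in range(epochs):
        grad = [0.0] * (d + 1)
        for xi, yi in zip(X, y):
            err = predict_proba(w, xi) - yi  # gradient of log-loss w.r.t. the logit
            grad[0] += err
            for j, xj in enumerate(xi):
                grad[j + 1] += err * xj
        w = [wj - lr * gj / n for wj, gj in zip(w, grad)]
    return w


# Hypothetical history: [vibration_level, recent_error_count] -> failed (1) or not (0)
X_hist = [[0.1, 0], [0.2, 1], [0.3, 0], [0.8, 4], [0.9, 5], [0.7, 3]]
y_hist = [0, 0, 0, 1, 1, 1]

w = train_logistic(X_hist, y_hist)        # training: learn the pattern from history
p_risky = predict_proba(w, [0.85, 4])     # a machine resembling past failures
p_healthy = predict_proba(w, [0.15, 0])   # a machine resembling healthy history
print(f"risky={p_risky:.2f} healthy={p_healthy:.2f}")
```

A real solution would use far more data, richer features and a proper train/test split. The two phases are still the same: fit on history, then score new observations.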

Diebold Incorporated, the largest US manufacturer of ATMs, identified predictive maintenance as a key technology that could help its service teams monitor the health of their machines in real time and predict when, where and why failures are likely to occur. In the long term, this technology is expected to help Diebold cut maintenance costs, increase ATM availability and achieve higher customer satisfaction. Given the volume of data involved and the sheer number of ATM terminals, it was important for Diebold to develop machine learning (ML) models quickly and at scale, and to deploy them as highly available, scalable services.

Diebold and Microsoft jointly developed and operationalized an end-to-end data pipeline that uses several key components of Cortana Analytics Suite (CAS). CAS is a fully integrated big data and advanced analytics suite designed to transform data into actionable intelligence. Its Azure Machine Learning (Azure ML) component provides cloud-based predictive analytics. Working with Azure ML and Microsoft’s data science team, Diebold quickly developed and operationalized ML algorithms to predict ATM failures.

“On just one ATM module, we can collect over 250 data points – and an ATM might have 10-15 modules with hundreds of sensors operating at any given time,” explains Jamie Meek, Senior Director in the New Technology Incubation & Design group at Diebold, a team that is focused on ML and data analytics. “With Azure and Microsoft’s data scientists, we’re able to start identifying relationships between all these moving parts that no human could ever find on their own. Through this collaboration, we have the flexibility and elasticity of compute, storage and machine learning, all under one umbrella.”

The data used in the Diebold predictive maintenance solution includes the following:

- Sensor readings.

- Machine configurations.

- Error codes and detailed descriptions associated with each failure.

- Repair and maintenance logs.
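The sketch below shows one way these four sources might be turned into model inputs. It aggregates them into one feature row per machine over a trailing time window. The record schemas, the seven-day window, the error codes and the feature names are all illustrative assumptions, not the fields Diebold actually used.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean
from typing import Dict, List, Optional


@dataclass
class SensorReading:        # hypothetical schema
    machine_id: str
    ts: datetime
    value: float


@dataclass
class ErrorEvent:           # hypothetical schema
    machine_id: str
    ts: datetime
    code: str


@dataclass
class MaintenanceRecord:    # hypothetical schema
    machine_id: str
    ts: datetime


def build_features(machine_id: str, as_of: datetime,
                   readings: List[SensorReading], errors: List[ErrorEvent],
                   maintenance: List[MaintenanceRecord],
                   config: Optional[Dict[str, float]] = None,
                   window: timedelta = timedelta(days=7)) -> Dict[str, float]:
    """Aggregate raw records into one feature row for machine_id at time as_of.

    Only records strictly before as_of are used, so no future information
    leaks into the features.
    """
    start = as_of - window
    vals = [r.value for r in readings
            if r.machine_id == machine_id and start <= r.ts < as_of]
    errs = [e for e in errors
            if e.machine_id == machine_id and start <= e.ts < as_of]
    past = [m.ts for m in maintenance
            if m.machine_id == machine_id and m.ts < as_of]

    features = {
        "sensor_mean": mean(vals) if vals else 0.0,
        "sensor_max": max(vals) if vals else 0.0,
        "error_count": float(len(errs)),
        "distinct_error_codes": float(len({e.code for e in errs})),
        # Days since last maintenance; infinity if the machine was never serviced
        "days_since_maintenance": ((as_of - max(past)).total_seconds() / 86400
                                   if past else float("inf")),
    }
    # Static machine-configuration attributes are passed through unchanged
    features.update({f"cfg_{k}": v for k, v in (config or {}).items()})
    return features


# Hypothetical records for a single ATM
as_of = datetime(2016, 1, 8)
readings = [
    SensorReading("atm-1", datetime(2016, 1, 2), 0.4),
    SensorReading("atm-1", datetime(2016, 1, 5), 0.6),
    SensorReading("atm-1", datetime(2015, 12, 20), 9.9),  # outside the 7-day window
]
errors = [
    ErrorEvent("atm-1", datetime(2016, 1, 3), "E101"),
    ErrorEvent("atm-1", datetime(2016, 1, 6), "E101"),
    ErrorEvent("atm-1", datetime(2016, 1, 7), "E205"),
]
maintenance = [MaintenanceRecord("atm-1", datetime(2015, 12, 29))]
row = build_features("atm-1", as_of, readings, errors, maintenance,
                     config={"module_count": 12.0})
print(row)
```

Every aggregate is restricted to records before `as_of`. This matters when the rows are later paired with failure labels: a feature computed from data after the prediction point would make offline accuracy look better than it will be in production.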

Within Azure ML Studio, a series of data preprocessing and feature engineering steps was first performed on the raw input data. A binary classification model was then developed to determine whether or not a failure would occur while the machine dispenses the next X notes. Azure ML was used to train and compare the performance of multiple algorithms in parallel. The best-performing model was deployed as a web service that is called programmatically from an automated data pipeline.
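The target for this binary classifier can be built from machine history. The sketch below makes a hypothetical assumption: both feature snapshots and failures are logged against a cumulative notes-dispensed counter. A snapshot is labeled 1 if a failure is logged within the next `x` notes. The post leaves X unspecified, so `x=1000` here is purely illustrative.

```python
from bisect import bisect_right
from typing import List


def label_next_x_notes(snapshot_counts: List[int], failure_counts: List[int],
                       x: int) -> List[int]:
    """Binary labels for 'does a failure occur within the next x notes?'.

    Both inputs are positions on one machine's cumulative notes-dispensed
    counter: snapshot_counts are where features were captured, and
    failure_counts are the counter values at which failures were logged.
    """
    failures = sorted(failure_counts)
    labels = []
    for c in snapshot_counts:
        i = bisect_right(failures, c)  # first failure strictly after this snapshot
        labels.append(1 if i < len(failures) and failures[i] <= c + x else 0)
    return labels


# Hypothetical machine: snapshots every 500 notes, one failure logged at note 1,800
labels = label_next_x_notes([0, 500, 1000, 1500, 2000], [1800], x=1000)
print(labels)  # [0, 0, 1, 1, 0]
```

This sketch measures the horizon in notes dispensed rather than calendar time. That ties the label to machine usage, so busy and quiet ATMs are compared on the same footing.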

With Azure ML models, Diebold is able to address the following questions:

- What's the failure probability for each of the five sub-modules of the device when the next X notes are dispensed?

- What are the top features that contribute the most towards the prediction of failure?

- Can you rank machines by health score to help identify the high-risk population?
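The sketch below shows one simple way to collapse per-sub-module failure probabilities into a single health score for ranking. It treats the health score as the probability that none of the five sub-modules fails in the next X notes, assuming sub-module failures are independent. This definition, the sub-module names `m1` to `m5`, the probabilities and the 0.8 cutoff are all hypothetical. None of them come from Diebold's solution.

```python
from math import prod
from typing import Dict, List, Tuple


def health_score(submodule_probs: Dict[str, float]) -> float:
    """Probability that no sub-module fails in the next X notes.

    Assumes sub-module failures are independent, which is a simplification.
    """
    return prod(1.0 - p for p in submodule_probs.values())


def rank_by_health(fleet: Dict[str, Dict[str, float]]) -> List[Tuple[str, float]]:
    """Return (machine_id, health_score) pairs, least healthy first."""
    scores = [(machine, health_score(probs)) for machine, probs in fleet.items()]
    return sorted(scores, key=lambda pair: pair[1])


# Hypothetical per-sub-module failure probabilities for three ATMs
fleet = {
    "atm-A": {"m1": 0.01, "m2": 0.02, "m3": 0.00, "m4": 0.01, "m5": 0.00},
    "atm-B": {"m1": 0.30, "m2": 0.05, "m3": 0.10, "m4": 0.00, "m5": 0.02},
    "atm-C": {"m1": 0.05, "m2": 0.05, "m3": 0.05, "m4": 0.05, "m5": 0.05},
}
ranking = rank_by_health(fleet)
high_risk = [machine for machine, score in ranking if score < 0.8]  # hypothetical cutoff
for machine, score in ranking:
    print(f"{machine}: {score:.3f}")
```

If sub-module failures are positively correlated, the product tends to underestimate health. Ranking by the single worst sub-module, `max(probs.values())`, is a simple alternative that avoids the independence assumption.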

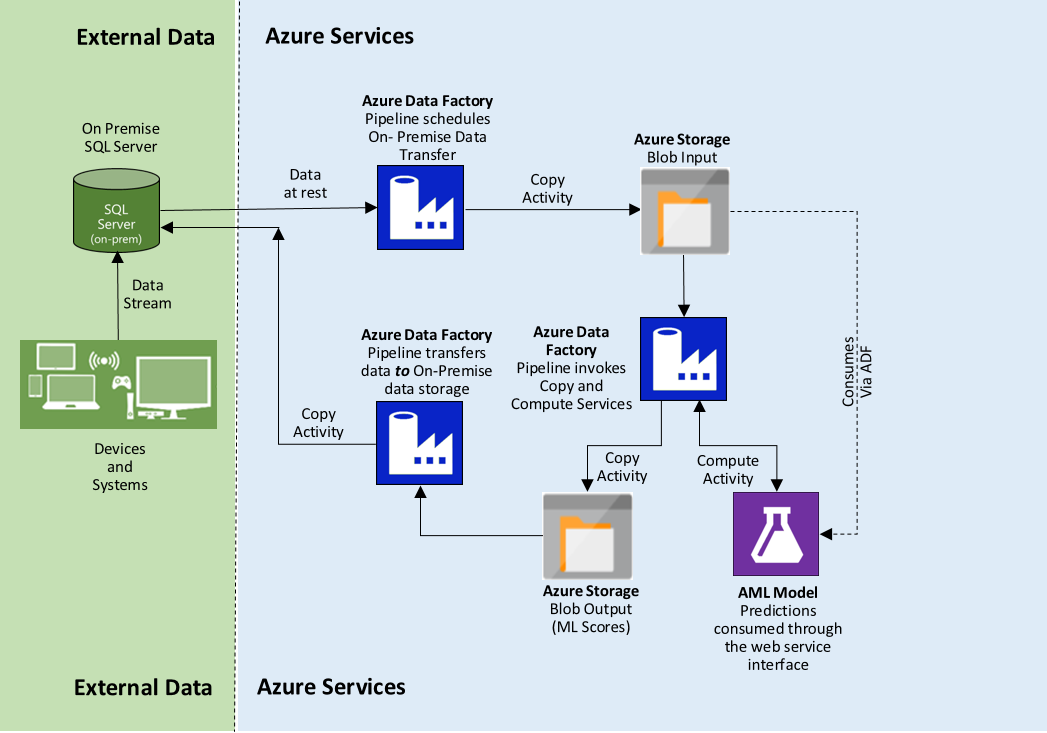

The figure below illustrates the architecture of the hybrid cloud data pipeline.

Automated data movement pipeline using Azure Data Factory

The input data is stored in Diebold’s on-premises SQL Server database. Another CAS component, Azure Data Factory (ADF), schedules periodic data transfers between the SQL Server database and Azure cloud storage. When new data becomes available, a second ADF pipeline calls the Azure ML web service endpoint to obtain batch predictions on a daily basis. The predicted results are then copied back to Diebold’s on-premises data store for downstream dashboard display and decision making.
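In production, ADF orchestrates the daily batch-scoring step described above. The sketch below does not reproduce ADF. It only illustrates one common way to score each new row exactly once: keep a high-water mark of the last scored row ID. This watermark mechanism is an assumption about how "new data" might be detected. An in-memory SQLite database stands in for the on-premises SQL Server tables. The hypothetical `stub_score` function stands in for the call to the Azure ML web service. Table and column names are illustrative.

```python
import sqlite3
from typing import Callable, Dict

Scorer = Callable[[Dict[str, float]], float]


def run_daily_batch(conn: sqlite3.Connection, score: Scorer) -> int:
    """Score only feature rows added since the last run, then advance the watermark."""
    cur = conn.cursor()
    (last_id,) = cur.execute("SELECT last_id FROM watermark").fetchone()
    rows = cur.execute(
        "SELECT id, machine_id, error_count, sensor_mean FROM features "
        "WHERE id > ? ORDER BY id",
        (last_id,),
    ).fetchall()
    for row_id, machine_id, error_count, sensor_mean in rows:
        prob = score({"error_count": error_count, "sensor_mean": sensor_mean})
        cur.execute(
            "INSERT INTO predictions (feature_id, machine_id, failure_prob) VALUES (?, ?, ?)",
            (row_id, machine_id, prob),
        )
    if rows:
        cur.execute("UPDATE watermark SET last_id = ?", (rows[-1][0],))
    conn.commit()  # predictions and the new watermark are committed together
    return len(rows)


def stub_score(features: Dict[str, float]) -> float:
    # Hypothetical stand-in for the Azure ML web service call
    return min(1.0, 0.2 * features["error_count"])


# In-memory SQLite database standing in for the on-premises SQL Server tables
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE features (id INTEGER PRIMARY KEY, machine_id TEXT,
                           error_count REAL, sensor_mean REAL);
    CREATE TABLE predictions (feature_id INTEGER, machine_id TEXT, failure_prob REAL);
    CREATE TABLE watermark (last_id INTEGER);
    INSERT INTO watermark VALUES (0);
""")
conn.executemany(
    "INSERT INTO features (machine_id, error_count, sensor_mean) VALUES (?, ?, ?)",
    [("atm-1", 0, 0.2), ("atm-2", 4, 0.9)],
)
conn.commit()

day1 = run_daily_batch(conn, stub_score)   # scores both existing rows
conn.execute("INSERT INTO features (machine_id, error_count, sensor_mean) "
             "VALUES ('atm-1', 1, 0.3)")
conn.commit()
day2 = run_daily_batch(conn, stub_score)   # scores only the newly added row
day3 = run_daily_batch(conn, stub_score)   # nothing new, nothing scored
print(day1, day2, day3)
```

The predictions and the advanced watermark are written in the same transaction. A failed run therefore leaves the watermark unchanged, and the same rows are picked up again on the next run.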

With Cortana Analytics Suite, Diebold was able to build an end-to-end, production-quality predictive maintenance pipeline using advanced analytics capabilities, and to do so in record time.

Katherine & Tao

Contact Tao at tao.wu@microsoft.com