Programmatic Retraining of Azure Machine Learning Models

This post is by Raymond Laghaeian, a Senior Program Manager on Microsoft Azure Machine Learning.

Azure ML lets users publish trained machine learning models as web services, which makes it quick to operationalize their experiments. With the latest release of the Retraining APIs, Azure ML now also supports programmatic retraining of these models with new data. This post provides a high-level overview of that process. For a detailed example of using the Retraining APIs, see this document.

Setting up for Retraining

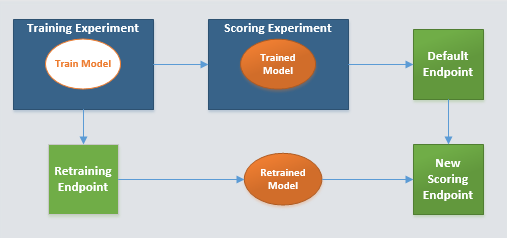

The first step is to create a Training experiment that trains a model. The trained model is then published as a web service using a Scoring experiment. The end result of this process is a “default endpoint”.

The user then needs to publish the Training experiment itself as a web service whose output is a trained model. This will be the common endpoint for all subsequent retraining calls.

To complete the setup, create a new endpoint from the “default endpoint”. This new endpoint is the one that the user, or their customer, can update with a retrained model.
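The setup above leaves a client app with three endpoints to keep track of. A minimal sketch of how that might be recorded follows; the class names, field names, URLs, and keys are all placeholders invented for illustration and are not part of the Azure ML API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    """One web service endpoint and the API key that authenticates calls to it."""
    name: str
    url: str
    api_key: str


@dataclass(frozen=True)
class RetrainingSetup:
    default_scoring: Endpoint  # created when the Scoring experiment is published
    retraining: Endpoint       # created when the Training experiment is published
    new_scoring: Endpoint      # added from the default endpoint; receives retrained models


# Placeholder values only; these are not real service addresses or keys.
setup = RetrainingSetup(
    default_scoring=Endpoint("default", "https://example.invalid/scoring/default", "KEY-A"),
    retraining=Endpoint("retraining", "https://example.invalid/training", "KEY-B"),
    new_scoring=Endpoint("new-scoring", "https://example.invalid/scoring/new", "KEY-C"),
)
```

Keeping the three endpoints separate in the client makes it harder to send a model update to the default endpoint by mistake.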

Retraining

To retrain a model with new data, the user calls the web service endpoint published from the Training experiment (the “Retraining endpoint”), providing its API key for authentication. Retraining runs as a batch operation whose input is the new data. When the operation completes, the system returns the URL of the retrained model.
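The submit-then-wait pattern of this batch operation can be sketched as below. This is an illustration under stated assumptions, not the actual Retraining API: the `send` transport, the JSON field names (`input`, `job_id`, `status`, `model_url`), the status values, and the status URL layout are all hypothetical, and the bearer-token header is assumed as the way the API key is passed.

```python
import time
from typing import Callable, Dict

# Hypothetical transport: takes (method, url, headers, body) and returns parsed JSON.
Transport = Callable[[str, str, Dict[str, str], dict], dict]


def retrain(send: Transport, retraining_url: str, api_key: str,
            new_data_location: str, poll_seconds: float = 5.0,
            max_polls: int = 120) -> str:
    """Submit a retraining job, wait for it to finish, and return the model URL."""
    headers = {"Authorization": f"Bearer {api_key}"}  # assumed auth scheme
    # Field names below are illustrative only, not the real request schema.
    job = send("POST", retraining_url, headers, {"input": new_data_location})
    status_url = f"{retraining_url}/{job['job_id']}"
    for _ in range(max_polls):
        state = send("GET", status_url, headers, {})
        if state["status"] == "Finished":
            return state["model_url"]
        if state["status"] == "Failed":
            raise RuntimeError(f"retraining job {job['job_id']} failed")
        time.sleep(poll_seconds)
    raise TimeoutError("retraining job did not finish in time")
```

Passing the transport in as a function keeps the polling logic testable without a live service; a real client would supply a function that performs the HTTP calls.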

The final step is to call the API that replaces the model used by the “new scoring endpoint” (the model initially saved as part of the Training experiment) with the retrained one, passing in its URL. From then on, the “new scoring endpoint” uses the retrained model.
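As a rough sketch of this update call, the snippet below builds (but does not send) an HTTP request carrying the retrained model's URL. The use of PATCH, the bearer-token header, and the `model_url` body field are assumptions made for illustration; the real request schema should be taken from the endpoint's API help page.

```python
import json
import urllib.request


def build_model_update_request(endpoint_url: str, api_key: str,
                               model_url: str) -> urllib.request.Request:
    """Build the request that points a scoring endpoint at a retrained model."""
    # Placeholder body layout; the real service defines its own schema.
    body = json.dumps({"model_url": model_url}).encode("utf-8")
    return urllib.request.Request(
        endpoint_url,
        data=body,
        method="PATCH",  # assumed verb for an in-place update
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
    )


# Placeholder URLs and key, for illustration only.
req = build_model_update_request(
    "https://example.invalid/scoring/new",
    "KEY-C",
    "https://example.invalid/models/retrained.ilearner",
)
```

The request could then be sent with `urllib.request.urlopen(req)`; separating construction from sending makes it easy to inspect or log the call first.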

Updates

The web service owner can update the experiments and modules of the web service as a result of model enhancements or module upgrades. Whenever the web service is updated, the update propagates only to the “default endpoint”, which allows the owner to test its impact. The other endpoints created from the same web service (added endpoints) can then be updated individually as needed.

Model Evaluation

For model evaluation, the user can either add the Evaluate module to the Training experiment or create a separate experiment altogether. In the former case, the evaluation output is returned along with the URL of the trained model after retraining. In the latter case, the user can update the evaluation endpoint with the new version of the model and run the evaluation by executing that experiment.
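Once evaluation output is available, a client can use it to decide whether the retrained model should replace the current one. The helper below is one possible sketch of that decision; the metric name, the dictionary layout, and the assumption that a higher value is better are illustrative choices and are not defined by the Evaluate module.

```python
from typing import Mapping


def should_promote(candidate: Mapping[str, float], current: Mapping[str, float],
                   metric: str = "accuracy", min_gain: float = 0.0) -> bool:
    """Return True when the retrained model beats the deployed one on `metric`.

    Assumes a higher metric value is better and that both mappings contain `metric`.
    """
    return candidate[metric] - current[metric] > min_gain
```

Setting `min_gain` above zero avoids swapping models over differences too small to matter.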

Summary

With the new Programmatic Retraining APIs, you can now programmatically retrain models, evaluate their performance, and update the published APIs that consume them, all without having to access the Studio. Using this capability, you can build client apps that automate this process and run it regularly or as new data arrives.
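A client app of this kind might be organized around a single retrain, evaluate, update pass. In the sketch below the three callables are hypothetical stand-ins for whatever code calls the Retraining endpoint, reads the evaluation result, and updates the scoring endpoint; none of them is part of the Azure ML API, and a single higher-is-better score is assumed.

```python
from typing import Callable, Optional


def retrain_cycle(retrain: Callable[[str], str],
                  evaluate: Callable[[str], float],
                  update_endpoint: Callable[[str], None],
                  new_data: str, baseline_score: float) -> Optional[str]:
    """Run one retrain -> evaluate -> update pass.

    Returns the URL of the newly deployed model, or None if the
    retrained model did not beat the baseline and was not deployed.
    """
    model_url = retrain(new_data)
    if evaluate(model_url) <= baseline_score:
        return None  # keep serving the current model
    update_endpoint(model_url)
    return model_url
```

A scheduler or a new-data trigger would call `retrain_cycle` with the location of the latest data and the score of the model currently in service.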

Raymond