Online Learning and Sub-Linear Debugging

This blog post is authored by Paul Mineiro , Research Software Developer at Microsoft.

Online learning algorithms are a class of machine learning (ML) techniques that consume the input as a stream and adapt as they consume input. They are often used for their computational desirability, e.g., for speed, the ability to consume large data sets, and the ability to handle non-convex objectives. However, they come with another useful benefit, namely “sub-linear debugging”.

The prototypical supervised online learning algorithm receives an example, makes a prediction, receives a label and experiences a loss, and then makes an update to the model. If the examples are independent samples from the evaluation distribution, then the instantaneous loss experienced by the algorithm is an unbiased estimate of the generalization error. By keeping track of this progressive validation loss, a practitioner can assess the impact of a proposed model change prior to consuming all the training input, hence sub-linear (in the training set size) debugging.

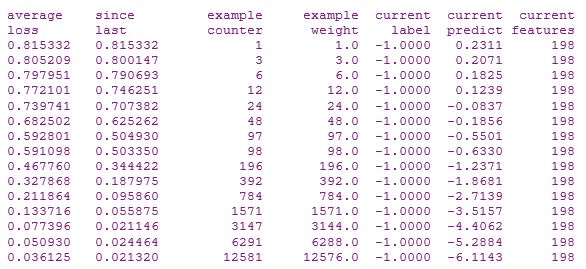

For example, when using the online learning software Vowpal Wabbit at a terminal, the output might look something like this:

The second column is the average progressive loss since the previous print.

Sub-linear debugging is an important technique to master because one of the greatest enemies of the ML practitioner is a slow experimental cycle. Most ideas, sadly, are not good ones, and if it takes an hour to rule out a bad idea you will only eliminate a handful of bad ideas a day. If, however, you take 5 minutes to rule out a bad idea, you are clearly a whole lot more productive.

As a real-world example, consider evaluating a new feature engineering strategy. The new training data are prepared, but progressive validation loss on this new input is much worse than previous results after less than a minute of processing. A likely culprit is a software defect introduced during the modification of the feature pipeline.

Going beyond detecting obvious large errors requires some intuition about different components of generalization error. Here's one fruitful way of thinking about it. The Bayes error rate is the best error rate possible from any prediction rule, and is a measure of the amount of noise inherent in the problem. The bias is the best error rate possible from a prediction rule that can actually be rendered by the learning algorithm. Roughly speaking, it is how well the learning algorithm would do given infinite data. The variance is how much error is caused by the learning algorithm picking a prediction rule which differs from the best learning rule available to the algorithm. Roughly speaking, it is due the algorithm having to filter out all the poorly performing available models using finite training data, and therefore variance tends to increase whenever the model class is made more powerful without easing the search problem for the learning algorithm. For an excellent introduction to these concepts, I refer you to master lecturer Abu-Mostafa.

With these concepts in mind, let's consider some common scenarios:

New features get introduced, and progressive validation loss is worse over the entire training run. This is the most common case: the progressive validation loss startsoff worse and stays worse. What’s going on? Adding new features cannot increase theBayes error rate, as the set of possible prediction rules includes thosethat ignore the new features. For similar reasons, with many learning algorithms adding new features cannot increase the bias, because the learning algorithm could choose not to use them (e.g., assign them zero weight in a linear model). However if the previous features were corrupted by virtue of introducing new features, then bias could go up. This corresponds to the software defect scenario outlined above.

Ruling out corruption of previous features, the remaining possibility is that variance has increased while the bias is unaffected (or even reduced). The hallmark of this effect is that the progressive loss starts to catch up to that of a previous good run as training proceeds. This is because variance is reduced with more training data. You might be tempted, under these conditions, to let the algorithm run for a long time in order to ultimately exceed previous performance, but this is a dangerous habit, as it defeats the purpose of sub-linear debugging. A better question to ask is how to achieve the (putative) bias decrease that the new features provide while avoiding much of the variance increase. Think “regularization”. Possibilities for the new features might include vector quantization, projection onto (a small number of) principal components, or application of a compressing nonlinearity.

If none of these things help, the new features might be counterproductive. Unless you are certain that these features reduce the Bayes error rate (e.g., from human experimentation), it is probably best to look elsewhere for model improvement.

Feature preprocessing gets changed, and progressive validation loss is initially better, but then is worse with more training data. This sometimes happens: new preprocessing is introduced and progressive validation loss starts off better, sometimes dramatically better. Feeling satisfied, you let the algorithm run while you get some lunch, and, when you return, you discover that with more data the progressive loss improvement slowed and performance is now worse than under the old preprocessing.

This scenario is consistent with the new preprocessing decreasing variance in exchange for increasing bias. With less data resources, this tradeoff can lower generalization error, but as data resources increase the tradeoff is no longer beneficial. Under these conditions some interpolation between the original and new preprocessing might yield best results, as the regularization effect of the new preprocessing is beneficial but too strong.

As an example I worked on a text problem where I tried limiting the input to the first 6 characters of each word. Initially progressive validation loss was much better but after an hour of training it fell behind using the complete word. Ultimately I discovered that using the first 8 characters of each word gave a mild lift on the complete training set. Presumably, if my training set had been larger, the right amount of prefix to use would have been larger (e.g., 9 or 10). The idea was right (“reduce variance by treating long words the same as their shorter prefixes”) but the strength had to be adjusted to fit the data resources (“with enough data, the best strategy is to treat words as different from their shorter prefixes”).

New features or preprocessing get introduced, and progressive validation loss starts better and stays better. Congratulations, you should consider buying some lottery tickets today! More seriously, just make sure you aren't looking at the previous scenario. That means enduring a full training run. As Reagan famously said, “Trust (sub-linear debugging) , but verify (on the complete data set) ”.

To get going on ML, a good place to begin is the Machine Learning Center where we have several resources available.

Paul Mineiro

Follow my personal blog here. Follow me on twitter.