Why R2? Software Defined Networking with Hyper-V Network Virtualization

This month, my team of fellow IT Pro Technical Evangelists is publishing a series of articles on Why Windows Server 2012 R2? that highlights the key enhancements and new capabilities that we're seeing drive particular interest in the field for this latest release of our Windows Server operating system. This article has been updated now that Windows Server 2012 R2 is generally available. After reading this article, be sure to catch the full series at:

Hyper-V Network Virtualization (HNV) is part of our Software Defined Networking (SDN) portfolio that is included inbox with Windows Server 2012 and Windows Server 2012 R2. HNV provides the ability to present virtualized network architectures and IP routing domains to virtual machines (VMs) in a manner that is abstracted from the existing underlying physical network architecture. By virtualizing the network layer with HNV, several important benefits can be realized around reduced networking complexity, improved configuration agility, enhanced routing performance in highly virtualized datacenters, cross-subnet Live Migration of VMs, IP address space portability, and network layer isolation.

Hyper-V Network Virtualization (HNV) – Virtualizing the Network Layer

Windows Server 2012 R2 and System Center 2012 R2 Virtual Machine Manager (VMM) also enhance the inbox HNV capabilities through a number of important additions. In this article, we’ll discuss the benefits of HNV that organizations are leveraging today, the new features present in the “R2” product releases and Step-by-Step resources that you can leverage to begin implementing HNV in your own lab environment.

Hyper-V Network Virtualization – Real-World Benefits

Much more than simply creating virtual network switches (vSwitches) to connect VMs to various physical subnets within a datacenter, HNV provides the ability to virtualize entire network address spaces and routing domains, independent of the underlying physical network architecture. Virtualizing the network layer with HNV can provide some very real benefits, including:

- Reduced complexity / Improved agility

- Enhanced routing performance

- Cross-subnet Live Migration of VMs

- IP address space portability

- Network layer isolation

Let’s look at each of these areas in greater detail …

Reduced complexity / Improved agility

HNV doesn’t require the use of Virtual LANs (VLANs) in the physical network layer to segment traffic onto separate subnets. As any network engineer will admit, managing dozens of VLANs in modern enterprises can be quite challenging: VLAN adds and changes often require touching many different physical network devices and, as a result, can demand significant time investments from network administrators. Instead of leveraging VLANs for traffic segmentation and isolation, HNV uses Network Virtualization over GRE (NVGRE) to securely isolate virtualized Layer-3 IP networks and then segment these virtualized networks into one or more IP subnets. Since physical network devices widely support the GRE transport for the NVGRE overlay protocol today, virtualized networks can be easily deployed incrementally without disrupting the existing physical network configuration.
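To make the NVGRE tagging a little more concrete, here is a minimal Python sketch of the 8-byte GRE header that NVGRE places in front of each encapsulated Ethernet frame. It follows the layout in the published NVGRE specification (RFC 7637): a flags word with only the key bit set, protocol type 0x6558, and a key field that carries a 24-bit Virtual Subnet ID (VSID) plus an 8-bit FlowID. The function names and the sample VSID are illustrative assumptions, not part of any Windows API, and the outer Ethernet and IP headers are left out.

```python
import struct

GRE_KEY_PRESENT = 0x2000  # GRE flags word with only the K (key present) bit set
PROTO_TEB = 0x6558        # Transparent Ethernet Bridging: the payload is an Ethernet frame


def build_nvgre_header(vsid: int, flow_id: int = 0) -> bytes:
    """Pack the GRE header used by NVGRE: flags, protocol type, VSID + FlowID."""
    if not 0 <= vsid < 2**24:
        raise ValueError("VSID must fit in 24 bits")
    if not 0 <= flow_id < 2**8:
        raise ValueError("FlowID must fit in 8 bits")
    key = (vsid << 8) | flow_id  # upper 24 bits: VSID, lower 8 bits: FlowID
    return struct.pack("!HHI", GRE_KEY_PRESENT, PROTO_TEB, key)


def parse_vsid(header: bytes) -> int:
    """Recover the VSID from a header produced by build_nvgre_header."""
    flags, proto, key = struct.unpack("!HHI", header[:8])
    if not flags & GRE_KEY_PRESENT or proto != PROTO_TEB:
        raise ValueError("not an NVGRE header")
    return key >> 8


header = build_nvgre_header(vsid=5001)  # 5001 is an arbitrary example VSID
```

Because the virtual subnet identity travels inside the GRE key rather than in an 802.1Q tag, the physical switches only need to forward ordinary GRE traffic between hosts.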

Enhanced routing performance

Many organizations that are managing highly virtualized datacenters have seen a huge increase in East-to-West network traffic between IP subnets, that is, the traffic moving “horizontally” between virtualization hosts and virtual machines running in the datacenter. This is in contrast to traditional North-to-South hierarchical network architectures, where routing between subnets had been handled by dedicated core routers. In fact, as organizations increase virtualization densities per-host by moving to larger “scaled-up” virtualization hosts, they have seen East-to-West traffic between IP subnets further flatten out and have experienced higher volumes of cross-subnet traffic between VMs on the same virtualization host. Some organizations using high VM densities and scaled-up host server architectures have reported as much as 75% to 80% of their cross-subnet traffic within the datacenter being between VMs located on the same virtualization host!

With this increasing trend towards routing between VMs on the same virtualization host, or on virtualization hosts co-located in the same datacenter racks, it makes sense to look for new ways to bring Layer-3 network routing intelligence into the hypervisor itself. Doing so allows virtual switches running locally on each hypervisor host to act as distributed Layer-3 routers, so that cross-subnet traffic between VMs on the same host never needs to leave that host and can occur at system bus speeds instead of physical wire speeds.

HNV addresses distributed Layer-3 routing between virtualized subnets by including a network virtualization routing extension natively inside the Hyper-V Virtual Switch running on each Hyper-V host. This distributed router can make cross-subnet routing decisions locally within the vSwitch to directly forward traffic between VMs on different virtualized subnets within the same virtual network, or routing domain. To manage and distribute the appropriate routing policies to each Hyper-V host, System Center 2012 R2 VMM acts as the routing policy server, so that the configuration of distributed routers across many Hyper-V hosts can be easily coordinated from a single centralized point of administration.
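The forwarding decision described above can be sketched as a simple policy lookup. This is only a conceptual model in Python, not how the vSwitch extension is implemented: the `POLICY` table, the host names, the addresses, and the `forward` function are all hypothetical, standing in for the policy that a central server such as VMM would push to each host.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    host: str  # Hyper-V host currently running the VM (hypothetical name)
    vsid: int  # virtual subnet the VM is attached to


# Hypothetical policy as distributed to every host:
# routing domain -> {virtualized VM address: where that VM lives}
POLICY = {
    "tenant-a": {
        "10.0.1.10": Endpoint("hv01", 5001),
        "10.0.2.20": Endpoint("hv01", 5002),
        "10.0.2.30": Endpoint("hv02", 5002),
    }
}


def forward(local_host: str, routing_domain: str, dst_ip: str) -> str:
    """Decide, on the sending host, what to do with a packet for dst_ip."""
    endpoint = POLICY.get(routing_domain, {}).get(dst_ip)
    if endpoint is None:
        return "drop"  # destination is not part of this routing domain
    if endpoint.host == local_host:
        # Cross-subnet traffic between co-located VMs never touches the wire.
        return f"deliver locally on VSID {endpoint.vsid}"
    return f"encapsulate to {endpoint.host} on VSID {endpoint.vsid}"
```

In this model a packet from 10.0.1.10 to 10.0.2.20 crosses virtual subnets but stays on `hv01`, while a packet to 10.0.2.30 is encapsulated and sent to `hv02`.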

Cross-subnet Live Migration of VMs

HNV provides the ability, via the NVGRE overlay protocol, to easily extend consistent virtualized networks and their associated IP address spaces across multiple Hyper-V hosts, physical subnets and physical network locations. As a result, these virtualized networks can be transparently “stretched” across typical physical network boundaries. Since VMs in this configuration receive their IP addressing based on the address spaces associated with these virtualized networks, and not the underlying physical network, this makes it super-easy to move VMs across multiple physical subnets while preserving their virtualized IP addresses. As a result, VMs running on virtualized networks can be easily Live Migrated to new Hyper-V hosts, regardless of the physical subnet to which the destination Hyper-V host is attached.
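One way to picture why the VM keeps its address: a migration only changes which physical host address the virtualized address maps to. The short Python sketch below models that with a plain dictionary. The table, the addresses, and the `live_migrate` helper are hypothetical and exist only for illustration.

```python
# Hypothetical policy table: virtualized VM address -> physical address of its Hyper-V host.
policy = {
    "10.0.1.10": "192.168.1.11",  # host in physical subnet 192.168.1.0/24
    "10.0.1.20": "192.168.1.11",
}


def live_migrate(policy: dict, vm_ip: str, new_host_ip: str) -> dict:
    """Return an updated policy in which only the VM's physical location changes."""
    if vm_ip not in policy:
        raise KeyError(f"unknown VM address {vm_ip}")
    updated = dict(policy)  # copy, so the old policy stays intact until it is replaced
    updated[vm_ip] = new_host_ip
    return updated


# Move one VM to a host in a different physical subnet.
after = live_migrate(policy, "10.0.1.10", "172.16.5.21")
```

The keys of the table, which are the addresses the VMs and their peers actually use, are identical before and after the move.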

IP address space portability

The abstraction of virtualized network IP address spaces from the underlying physical network architecture provides a higher level of portability that is important as organizations consider hybrid cloud strategies. In many cases, virtualized applications can be complex multi-tier applications that stretch across multiple virtual machines. Being able to extend the perimeter of portability beyond a single VM to include an entire virtualized network of several VMs gives organizations the ability to easily relocate where these more complex applications are physically hosted, without the need to change the IP address scheme that the application uses to communicate between VMs. Just pick up that entire virtualized network and move it to a new physical location. HNV provides the agility to extend the virtualized network across the physical network on demand.

Network layer isolation

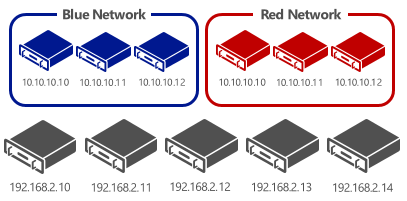

HNV securely isolates each virtualized network in its own routing domain at the physical network layer by dynamically tagging the NVGRE packets that are used to encapsulate the virtualized network traffic. Because virtualized networks are separated by routing domain, they are each independent and cannot communicate with one another without going through an intermediary network virtualization gateway. Because they are completely isolated, each virtualized network can use whatever virtualized IP address space is needed. Separate virtual networks can even be configured with the same or overlapping virtualized IP address spaces!
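The overlapping-address case is easy to show in a few lines of Python. In this sketch, two routing domains use the identical 10.0.0.0/24 address space, and every lookup is scoped by routing domain, so the same VM address resolves to a different physical host for each tenant. The tenant names, addresses, and the `resolve` helper are made up for illustration.

```python
from ipaddress import ip_network

# Hypothetical data: routing domain -> (address space, {VM address: physical host address})
NETWORKS = {
    "contoso": (ip_network("10.0.0.0/24"), {"10.0.0.5": "192.168.1.11"}),
    "fabrikam": (ip_network("10.0.0.0/24"), {"10.0.0.5": "192.168.1.12"}),
}


def resolve(routing_domain: str, vm_ip: str):
    """Look up a VM address inside one routing domain only; None if it is not there."""
    _, mappings = NETWORKS[routing_domain]
    return mappings.get(vm_ip)


# The two address spaces overlap completely, yet lookups never collide.
spaces_overlap = NETWORKS["contoso"][0].overlaps(NETWORKS["fabrikam"][0])
```

Because no lookup ever spans two routing domains, there is nothing in this model that lets one tenant's 10.0.0.5 reach the other's without an explicit gateway in between.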

This level of network-layer isolation can be a real benefit when organizations are preparing isolated lab networks, establishing internal network security perimeters or performing a business merger / acquisition. In these cases, the ability to consolidate multiple overlapping IP address spaces on a common physical network architecture while keeping them completely isolated from one another can help to accelerate configuration processes and reduce overall infrastructure complexity. Organizations that are hosting shared infrastructure across several customer organizations, such as hosting partners, can also benefit from this network layer isolation, which ensures that each customer tenant is operating in its own secure virtualized network even if all customers are ultimately using the same physical network fabric.

What’s New in R2?

As mentioned earlier in this article, HNV was originally introduced as an inbox Hyper-V capability in Windows Server 2012. In the new “R2” release, several important enhancements have been made to HNV to improve performance and configuration flexibility. Some of the noteworthy enhancements that I’ve seen positively impact customer organizations using HNV include:

- Inbox HNV Network Gateway: Having each virtualized network operating in complete isolation is great, but, at some point, these virtualized networks need to communicate with “real world” users – perhaps physical network clients or Internet-connected users. The inbox HNV gateway allows a Windows Server 2012 R2 Hyper-V host to be leveraged as an HNV-to-physical network gateway device, for Private Cloud connectivity, cross-premises Hybrid Cloud connectivity, or Internet connectivity. Each HNV gateway can support up to 100 virtualized networks when using NAT and HNV gateways can be clustered for high availability. Of course, you can also leverage a dedicated hardware gateway device from one of our networking partners, such as F5 or Iron Networks, but having inbox support provides the added flexibility to perform gateway functions without using 3rd party devices.

- Increased compatibility with Hyper-V Virtual Switch Extensions: In R2, we’ve also changed the manner in which HNV “plugs into” the Hyper-V Virtual Switch to provide improved compatibility with existing 3rd party Virtual Switch forwarding extensions, such as the Cisco Nexus 1000V. Prior to R2, these 3rd party extensions could only see the virtualized IP address space when using HNV, but now these extensions can also make forwarding decisions based on physical IP addresses, which is useful when you are using a combination of virtualized and physical addressing across your various VMs.

- NVGRE Encapsulated Task Offload: Of course, adding network virtualization also brings about the additional network overhead of processing NVGRE packet headers when forwarding traffic. To optimize performance, R2 provides improved support for network adapter manufacturers to implement hardware offload functions for NVGRE, improving performance by as much as 60% to 65% when using HNV. To date, NVGRE offload capable adapters are available from Emulex and Mellanox.

Want to learn more?

HNV, Network Virtualization, and Software Defined Networking are ushering in a new, more agile era of network architectures that can provide real-world benefits today, as well as prepare datacenters for an easier transition towards leveraging hybrid cloud scenarios. Learn more about evaluating and implementing HNV with these great additional resources:

- Read! Hyper-V Network Virtualization Technical Details

- Watch! Deep-dive on HNV in Windows Server 2012 R2

- Do! Step-by-Step: Building Hybrid Clouds with NVGRE

Note: Special thanks to the MVP Authors and Technical Reviewers of the great Step-by-Step guide listed above! Be sure to follow them all on Twitter …

- Kristian Nese, Cloud & Datacenter Management MVP

- Flemming Riis, Cloud & Datacenter Management MVP

- Daniel Neumann, Cloud & Datacenter Management MVP

- Stanislav Zhelyazkov, Cloud & Datacenter Management MVP