Adding Storage Performance to the Test-StorageHealth.ps1 script

A few weeks ago, I published a script to the TechNet Script Center that gathers health and capacity information for a Windows Server 2012 R2 storage cluster. This script checks a number of components, including clustering, storage spaces, SMB file shares and core storage. You can get more details at https://blogs.technet.com/b/josebda/archive/2014/07/27/powershell-script-for-storage-cluster-health-test-published-to-the-technet-script-center.aspx

From the beginning, I wanted to add the ability to look at storage performance in addition to health and capacity. This was added yesterday in version 1.7 of the script. This blog post describes the decisions made along the way and the details of how it was implemented.

Layers and objects

The first decision was the layer we should use to monitor storage performance. You can look at the core storage, storage spaces, cluster, SMB server, SMB client or Hyper-V. The last two would actually come from the client side, outside the storage cluster itself. Ideally I would gather storage performance from all these layers, but honestly that would be just too much to capture and also to review later.

We also have lots of objects, like physical disks, pools, tiers, virtual disks, partitions, volumes and file shares. Most people would agree that looking at volumes would be the most useful, if you had to choose only one. It helps that most deployments will use a single file share per volume and a single partition and volume per virtual disk. In these deployments, a share matches a single volume that matches a single virtual disk. Looking at pools (which typically host multiple virtual disks) would also be nice.

In the end, I decided to go with the view from the CSV file system, which looks at volumes (CSVs, really) and offers the metrics we need. It is also nice that it reports a consistent volume ID across the many nodes of the cluster. That made it easier to consolidate data and then correlate to the right volume, SMB share and pool.

Metrics

The other important decision is which of the many metrics we would show. There is plenty of information you can capture, including number of IOs, latency, bandwidth, queue depth and several others. These can also be captured as current values, total value since the system was started or an average for a given time period. In addition, you can capture those for read operations, write operations, metadata operations or all together.

After much debating with the team on this topic, we concluded that the single most important metric to find out if a system is reaching its performance limit would be latency. IOs per second (IOPS) was also important to get an idea of how much work is currently being handled by the cluster. Differentiating read and write IOs would also be desirable, since they usually show different behavior.

To keep it reasonable, I made the call to gather read IOPS (average number of read operations per second), write IOPS, read latency (average latency of read operations, as measured by the CSV file system) and write latency. These 4 performance counters were captured per volume on every node of the cluster. Since it was easy and also useful, the script also shows total IOPS (sum of reads and writes) and total latency (average of reads and writes).
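To make the two derived values concrete, here is a minimal sketch of how they relate to the four counters. The function name Get-VolumeTotal and its parameter names are hypothetical (they are not taken from the script), and total latency is computed as the plain average of the read and write values, following the description above.

```powershell
# Hypothetical helper: derives the two extra values from the four captured ones.
function Get-VolumeTotal {
    param(
        [double]$ReadIOPS,
        [double]$WriteIOPS,
        [double]$ReadLatency,
        [double]$WriteLatency
    )
    [pscustomobject]@{
        # Total IOPS is the sum of reads and writes.
        TotalIOPS    = $ReadIOPS + $WriteIOPS
        # Total latency is the simple (unweighted) average of the two latencies.
        TotalLatency = ($ReadLatency + $WriteLatency) / 2
    }
}

# Example values in IOPS and milliseconds.
$Total = Get-VolumeTotal -ReadIOPS 162 -WriteIOPS 6 -ReadLatency 33.771 -WriteLatency 52.893
```

An unweighted average treats reads and writes equally even when one of them dominates the workload. Weighting each latency by its IOPS would be an alternative, but that is not what is described here.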

Samples

The other important decision was how many samples we would take. Ideally we would constantly monitor the storage performance and would have a full history of everything that ever happened to a storage cluster by the millisecond. That would be a lot of data, though. We would need another storage cluster just to store the performance information for a storage cluster :-) (just half joking here).

The other problem is that the storage health script aims to be as nonintrusive as possible. To constantly gather performance information, we would need some sort of agent or service running on every node, and we definitely did not want to go there.

The decision was to take a few samples only during the execution of the script. It gathers 60 samples, 1 second apart. During those 60 seconds the script simply waits and does nothing else. I considered starting the capture in the background (as a PowerShell job) and letting it run while we’re gathering other health information, but I was afraid that the results would be impacted. I figured that waiting for one minute would be reasonable.

Capture

There are a few different ways to capture performance data. Using the performance counter infrastructure seems like the way to go, but even there you have a few different options. We could save the raw performance information to a file and parse it later. We could also use Win32 APIs to gather counters.

Since this is a PowerShell script, I decided to go with the Get-Counter cmdlet. It provides a simple way to get a specified list of counters, including multiple servers and multiple samples. The script uses a single command to gather all the relevant data, which is kept in memory and processed later.

Here’s some sample code:

    $Samples = 60
    $Nodes = "Cluster123N17", "Cluster123N18", "Cluster123N19", "Cluster123N20"
    $Names = "reads/sec", "writes/sec", "read latency", "write latency"
    $Items = $Nodes | ForEach-Object { $Node = $_; $Names | ForEach-Object { "\\" + $Node + "\Cluster CSV File System(*)\" + $_ } }
    $RawCounters = Get-Counter -Counter $Items -SampleInterval 1 -MaxSamples $Samples

The script then goes on to massage the data a bit. For the raw data, I wanted to fill in some related information (like pool and share) for every line. This would make it a proper fact table for Excel pivot tables, once it's exported to a comma-separated file. The other processing needed is summarizing the raw data into per-volume totals and averages.
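The flattening step could look roughly like the sketch below. It does not reproduce the script's actual code. To run without a cluster, it builds a small stand-in for the Get-Counter output (real sample sets expose Timestamp and CounterSamples, and each sample has Path, InstanceName and CookedValue). The $VolumeInfo lookup, the instance names, the values and the output column names are all made up for illustration.

```powershell
# Stand-in for $RawCounters so the sketch runs anywhere; all values are made up.
$RawCounters = @(
    [pscustomobject]@{
        Timestamp      = [datetime]'2014-08-01T10:00:00'
        CounterSamples = @(
            [pscustomobject]@{ Path = '\\cluster123n17\cluster csv file system(volume15)\reads/sec'; InstanceName = 'volume15'; CookedValue = 162 }
            [pscustomobject]@{ Path = '\\cluster123n17\cluster csv file system(volume15)\read latency'; InstanceName = 'volume15'; CookedValue = 0.033771 }
        )
    }
)

# Hypothetical lookup from volume to pool and share (the real script discovers these).
$VolumeInfo = @{ 'volume15' = @{ Pool = 'Pool2'; Share = 'TShare8' } }

# Emit one flat row per counter sample, with pool and share filled in on every line.
$Rows = foreach ($Set in $RawCounters) {
    foreach ($Sample in $Set.CounterSamples) {
        # A counter path looks like \\node\counter set(instance)\counter name
        $Parts = $Sample.Path.TrimStart('\').Split('\')
        $Info  = $VolumeInfo[$Sample.InstanceName]
        [pscustomobject]@{
            Time    = $Set.Timestamp
            Node    = $Parts[0]
            Pool    = $Info.Pool
            Share   = $Info.Share
            Volume  = $Sample.InstanceName
            Counter = $Parts[-1]
            Value   = $Sample.CookedValue
        }
    }
}

# Comma-separated text, ready to be written to a file for Excel.
$Csv = $Rows | ConvertTo-Csv -NoTypeInformation
```

Repeating the pool and share on every row is redundant, but it lets a pivot table slice by any of those columns without extra lookups.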

Summary

I spent some time figuring out the best way to show a summary of the 60 seconds of performance data from the many nodes and volumes. The goal was to have something that would fit into a single screen for a typical configuration with a couple of dozen volumes.

The script shows one line per volume, but also includes the associated pool name and file share name. For each line you get read/write/total IOPS and also read/write/total latency. IOPS are shown as an integer and latency is shown in milliseconds with 3 decimals. The data is sorted in descending order by average latency, which should show the busiest volume/share on top.
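The summarization can be sketched as below. It is an illustration and not the script's own implementation. The input rows and the Get-CounterAverage helper are hypothetical, the latency values are assumed to be reported in seconds (hence the multiplication by 1000), and the multi-node aspect is ignored by assuming all rows for a volume come from a single node.

```powershell
# Made-up flattened samples: two samples per counter for each of two volumes.
$Rows = @'
Volume,Counter,Value
volume8,reads/sec,550
volume8,reads/sec,554
volume8,writes/sec,420
volume8,writes/sec,416
volume8,read latency,0.005
volume8,read latency,0.005
volume8,write latency,0.004
volume8,write latency,0.006
volume15,reads/sec,160
volume15,reads/sec,164
volume15,writes/sec,6
volume15,writes/sec,6
volume15,read latency,0.030
volume15,read latency,0.038
volume15,write latency,0.050
volume15,write latency,0.054
'@ | ConvertFrom-Csv

# Hypothetical helper: average of one counter within a group of rows.
function Get-CounterAverage {
    param($Items, [string]$Counter)
    $Match = $Items | Where-Object { $_.Counter -eq $Counter }
    [double]($Match | Measure-Object -Property Value -Average).Average
}

# One line per volume, sorted so the highest average latency comes first.
$Summary = $Rows | Group-Object -Property Volume | ForEach-Object {
    $ReadIOPS  = Get-CounterAverage $_.Group 'reads/sec'
    $WriteIOPS = Get-CounterAverage $_.Group 'writes/sec'
    $ReadMs    = 1000 * (Get-CounterAverage $_.Group 'read latency')
    $WriteMs   = 1000 * (Get-CounterAverage $_.Group 'write latency')
    [pscustomobject]@{
        Volume         = $_.Name
        ReadIOPS       = [int]$ReadIOPS
        WriteIOPS      = [int]$WriteIOPS
        TotalIOPS      = [int]($ReadIOPS + $WriteIOPS)
        ReadLatencyMs  = [math]::Round($ReadMs, 3)
        WriteLatencyMs = [math]::Round($WriteMs, 3)
        TotalLatencyMs = [math]::Round(($ReadMs + $WriteMs) / 2, 3)
    }
} | Sort-Object -Property TotalLatencyMs -Descending
```

Piping $Summary to Format-Table -AutoSize would print it as a compact table, one volume per line.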

Here’s a sample output:

    Pool  Volume   Share    ReadIOPS WriteIOPS TotalIOPS ReadLatency (ms) WriteLatency (ms)
    ----  ------   -----    -------- --------- --------- ---------------- -----------------
    Pool2 volume15 TShare8       162         6       168           33.771            52.893
    Pool2 volume16 TShare9        38       858       896           37.241             17.12
    Pool2 volume10 TShare11        0         9         9                0             6.749
    Pool2 volume17 TShare10       20        19        39            4.128              8.95
    Pool2 volume13 HShare         13       243       256            3.845             8.424
    Pool2 volume11 TShare12        0         7         7            0.339             5.959
    Pool1 volume8  TShare6       552       418       970            5.041             4.977
    Pool2 volume14 TShare7         3        12        15            2.988             5.814
    Pool3 volume28 BShare28        0        11        11                0             4.955
    Pool1 volume6  TShare4       232         3       235            1.626             5.838
    Pool1 volume7  TShare5        62       156       218            1.807             4.241
    Pool1 volume3  TShare1         0         0         0                0                 0
    Pool3 volume30 BShare30        0         0         0                0                 0
    …

Excel

Another way to look at the data is to get the raw output and use Excel. You can find that data saved as comma-separated values in the output folder (C:\HealthTest by default), in a file named VolumePerformanceDetails.TXT.

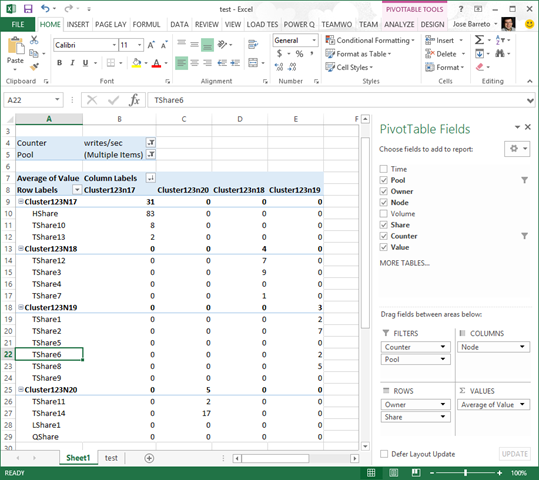
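If you would rather stay in PowerShell, the same kind of file can be sliced with Import-Csv and Group-Object. The sketch below is only an illustration. It writes a tiny made-up file to a temporary path, and the column names (Node, Volume, Sample, ReadLatencyMs) are hypothetical, so check the header of the real file before adapting it.

```powershell
# Made-up sample file with hypothetical column names, written to a temporary
# path so the sketch does not depend on C:\HealthTest existing.
$Path = Join-Path ([System.IO.Path]::GetTempPath()) 'VolumePerformanceDetails-sample.txt'
@'
Node,Volume,Sample,ReadLatencyMs
N17,volume15,1,30.5
N17,volume15,2,41.0
N18,volume16,1,36.0
N18,volume16,2,38.5
'@ | Set-Content -Path $Path

# Per volume: number of samples, average and peak read latency.
$Peaks = Import-Csv -Path $Path | Group-Object -Property Volume | ForEach-Object {
    $Group = $_
    # Import-Csv returns strings, so convert to numbers before measuring.
    $Stats = $Group.Group | ForEach-Object { [double]$_.ReadLatencyMs } |
        Measure-Object -Average -Maximum
    [pscustomobject]@{
        Volume  = $Group.Name
        Samples = $Stats.Count
        AvgMs   = [math]::Round($Stats.Average, 3)
        PeakMs  = $Stats.Maximum
    }
}

# Clean up the temporary sample file.
Remove-Item -Path $Path
```

Looking at the peak next to the average can help spot a volume that is fine most of the time but has occasional slow samples.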

If you know your way around Excel and pivot tables, you can extract more details. You have access to all 60 samples and to the data for each of the nodes. The data also includes pool name, share name and owner node, which do not come with a simple Get-Counter call. Here is another example of a pivot table in Excel (using a different data set from the one shown above):

Conclusion

I hope you liked the new storage performance section of the Test-StorageHealth script. As with the rest of the script (on health and capacity), the idea is to provide a simple way to get a useful summary and collect additional data you can dig into.

Let me know how it works for you. I welcome feedback on the specific choices made (layer, metrics, samples, summary) and further ideas on how to make it more useful.