MMS 2013 Demo: Hyper-V over SMB at high throughput with SMB Direct and SMB Multichannel

Overview

I delivered a demo of Hyper-V over SMB this week at MMS 2013. It's an evolution of a demo I did at the Windows Server 2012 launch and also in a TechNet Radio session.

Back then I showed two physical servers running a SQLIO simulation. One played the role of the File Server and the other acted as the SQL Server.

This time around, I used 12 VMs accessing a File Server at the same time. So instead of showing SQL Server running directly over SMB, this demo shows SQL running in VMs that use Hyper-V over SMB.

Hardware

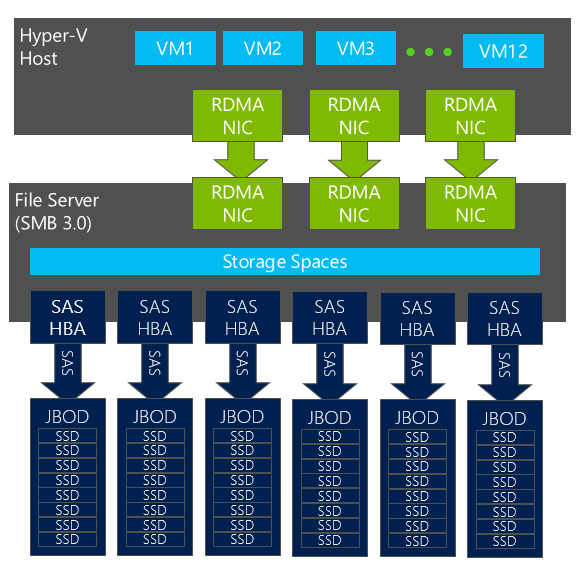

The diagram above shows the details of the configuration.

The File Server is an EchoStreams FlacheSAN2 with 2 Intel CPUs at 2.40 GHz and 64GB of RAM. It includes 6 LSI SAS adapters and 48 Intel SSDs attached directly to the server. This is an impressively packed 2U unit.

The Hyper-V Server is a Dell PowerEdge R720 with 2 Intel CPUs at 2.70 GHz and 128GB of RAM. There are 12 VMs configured in the Hyper-V host, each with 4 virtual processors and 8GB of RAM.

Both the File Server and the Hyper-V host use three 54 Gbps Mellanox ConnectX-3 network interfaces sitting on PCIe Gen3 x8 slots.
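As a rough sanity check on the network side, the sketch below converts the NIC rate into bytes per second and compares it with the nominal bandwidth of a PCIe Gen3 x8 slot (8 GT/s per lane with 128b/130b encoding). It assumes 54 Gbps is the usable data rate per NIC and ignores SMB and RDMA protocol overhead, so the results are upper bounds, not measurements. The function names are hypothetical.

```python
# Rough bandwidth budget for the three RDMA NICs (a sketch, not a measurement).
# Assumptions: 54 Gbps is the usable data rate per NIC, and protocol
# overhead (SMB, RDMA headers) is ignored, so these are upper bounds.

NIC_COUNT = 3
NIC_GBPS = 54.0  # per-NIC rate quoted in the configuration


def pcie_gen3_gbytes_per_sec(lanes: int) -> float:
    """Nominal one-direction PCIe Gen3 bandwidth in GB/s (10**9 bytes).

    Gen3 signals at 8 GT/s per lane and uses 128b/130b encoding.
    """
    bits_per_sec = 8e9 * lanes * 128 / 130
    return bits_per_sec / 8 / 1e9


def gbps_to_gbytes(gbps: float) -> float:
    """Convert gigabits per second to gigabytes per second."""
    return gbps / 8


per_nic = gbps_to_gbytes(NIC_GBPS)   # 6.75 GB/s
aggregate = NIC_COUNT * per_nic      # 20.25 GB/s
slot = pcie_gen3_gbytes_per_sec(8)   # about 7.88 GB/s for an x8 slot

print(f"Per NIC:       {per_nic:.2f} GB/s")
print(f"All NICs:      {aggregate:.2f} GB/s")
print(f"PCIe Gen3 x8:  {slot:.2f} GB/s")
print(f"Slot fits NIC: {slot >= per_nic}")
```

Under these assumptions, each x8 slot has headroom over a single NIC, and the three NICs together top out at roughly 20 GB/s, which puts the throughput results below in context.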

Results

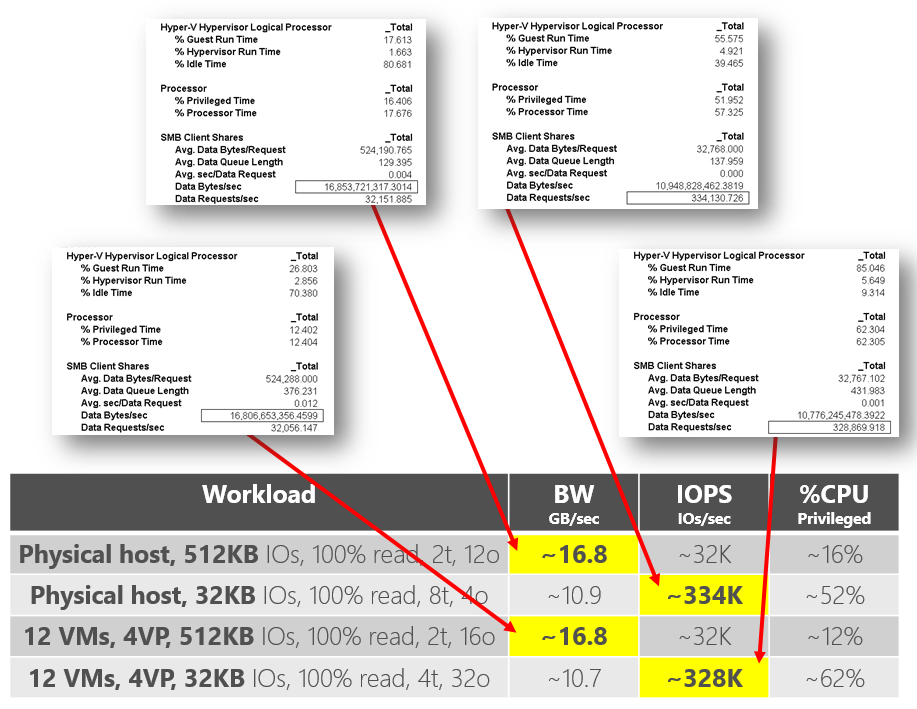

The demo shows two workloads: SQLIO with 512KB IOs and SQLIO with 32KB IOs. For each one, results are shown both for a physical host (a single instance of SQLIO running over SMB, without Hyper-V) and with virtualization (12 Hyper-V VMs running simultaneously over SMB). See the details below.

The first workload (512KB IOs) shows very high throughput from the VMs: around 16.8 GBytes/sec combined across all 12 VMs. That's roughly the equivalent of fifteen 10Gbps Ethernet ports or around twenty 8Gbps Fibre Channel ports combined. And look at that low CPU utilization...
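The port comparison comes down to simple division. The per-port figures below are assumptions, not values from the original setup: about 1.1 GB/s of usable throughput for a 10Gbps Ethernet port after protocol overhead, and 0.8 GB/s for an 8Gbps Fibre Channel port. Dividing by the nominal rate (label rate / 8) instead gives smaller port counts, so the sketch shows both.

```python
# Sketch: how many ports would it take to carry 16.8 GB/s?
# The "usable" per-port rates (GB/s) are assumptions, not measurements.

OBSERVED_GBYTES_PER_SEC = 16.8

PORTS = {
    "10Gbps Ethernet": {"nominal": 10 / 8, "usable": 1.1},
    "8Gbps Fibre Channel": {"nominal": 8 / 8, "usable": 0.8},
}


def ports_needed(throughput: float, per_port: float) -> float:
    """Fractional number of ports needed to carry `throughput` GB/s."""
    return throughput / per_port


for name, rates in PORTS.items():
    at_nominal = ports_needed(OBSERVED_GBYTES_PER_SEC, rates["nominal"])
    at_usable = ports_needed(OBSERVED_GBYTES_PER_SEC, rates["usable"])
    print(f"{name}: {at_nominal:.1f} ports at nominal rate, "
          f"{at_usable:.1f} ports at assumed usable rate")
```

With these assumptions, the usable-rate counts land close to the "fifteen" and "around twenty" figures; the exact number depends on how much per-port overhead you assume.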

The second workload shows high IOPS (over 300,000 IOPS of 32KB each). That IO size is larger than in most high-IOPS demos you've seen before. This workload also delivers throughput of over 10 GBytes/sec. It's important to note that this demo achieves this on 2-socket/16-core servers, even though this specific workload is fairly CPU-intensive.
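Throughput, IOPS, and IO size are tied together by throughput = IOPS × IO size. The sketch below applies that relationship to both workloads. It assumes 1 KB = 1,024 bytes for IO sizes and 1 GB = 10^9 bytes for throughput; with other unit conventions the numbers shift by a few percent. The function names are hypothetical.

```python
# Sketch of throughput = IOPS x IO size, applied to the two SQLIO workloads.
# Unit assumptions: 1 KB = 1024 bytes (IO size), 1 GB = 10**9 bytes (throughput).

KB = 1024
GB = 10**9


def throughput_gbytes(iops: float, io_size_kb: int) -> float:
    """Throughput in GB/s for a given IOPS rate and IO size in KB."""
    return iops * io_size_kb * KB / GB


def iops_for_throughput(gbytes_per_sec: float, io_size_kb: int) -> float:
    """IOPS needed to sustain a given throughput at a given IO size."""
    return gbytes_per_sec * GB / (io_size_kb * KB)


# Second workload: throughput at exactly 300,000 IOPS of 32KB.
print(f"300,000 x 32KB IOs = {throughput_gbytes(300_000, 32):.2f} GB/s")
# IOPS needed to reach 10 GB/s with 32KB IOs.
print(f"10 GB/s at 32KB    = {iops_for_throughput(10, 32):,.0f} IOPS")
# First workload: IOPS implied by 16.8 GB/s with 512KB IOs.
print(f"16.8 GB/s at 512KB = {iops_for_throughput(16.8, 512):,.0f} IOPS")
```

Under these unit assumptions, exactly 300,000 IOPS of 32KB works out to just under 10 GB/s, so "over 10 GBytes/sec" corresponds to a rate somewhat above 300,000 IOPS, which fits the "over 300,000" wording.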

Notes:

- The screenshots above show a point-in-time snapshot of a running workload in Performance Monitor. I also ran each workload for only 20 seconds. Ideally, you would run each workload multiple times, for a longer duration, and average the results.

- Some of the 6 SAS HBAs on the File Server sit in x4 PCIe slots, since not all of the server's 9 slots are x8. For this reason, some of the HBAs perform better than others.

- Using 4 virtual processors for each of the 12 VMs appears to be less than ideal. I'm planning to experiment with more virtual processors per VM to see whether that improves performance a bit.
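The x4 versus x8 slot note can be quantified from the PCIe Gen3 signaling rate (8 GT/s per lane, 128b/130b encoding). The sketch below compares the nominal ceilings of the two slot widths against the demand from one HBA. It assumes the 48 SSDs are split evenly across the 6 HBAs (8 per HBA) and uses a hypothetical per-SSD throughput of 0.5 GB/s; neither value is stated in the original setup, so substitute your own.

```python
# Sketch: does an x4 PCIe Gen3 slot limit a SAS HBA with 8 SSDs behind it?
# Assumptions (not from the original setup): an even 8-SSD split per HBA
# and a hypothetical 0.5 GB/s throughput per SSD.

SSDS = 48
HBAS = 6
SSD_GBYTES_PER_SEC = 0.5  # hypothetical per-SSD throughput


def pcie_gen3_gbytes_per_sec(lanes: int) -> float:
    """Nominal one-direction PCIe Gen3 bandwidth in GB/s (10**9 bytes)."""
    return 8e9 * lanes * (128 / 130) / 8 / 1e9


ssds_per_hba = SSDS // HBAS
hba_demand = ssds_per_hba * SSD_GBYTES_PER_SEC

for lanes in (4, 8):
    ceiling = pcie_gen3_gbytes_per_sec(lanes)
    verdict = "slot-limited" if hba_demand > ceiling else "not slot-limited"
    print(f"x{lanes}: ceiling {ceiling:.2f} GB/s vs demand "
          f"{hba_demand:.2f} GB/s -> {verdict}")
```

Under these assumptions, an x4 slot caps each HBA at roughly 3.9 GB/s while the eight SSDs behind it could deliver about 4 GB/s. That is one plausible explanation for the uneven HBA performance; real SSD throughput and HBA overhead will move these numbers.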

Conclusion

This is yet another example of how SMB Direct and SMB Multichannel can be combined to produce a high-performance File Server for Hyper-V storage.

This specific configuration pushes the limits of this box, with all 9 of its PCIe Gen3 slots in use (six for SAS HBAs and three for RDMA NICs).

I showcased this setup in my presentation at the MMS 2013 conference this week.

P.S.: Some of the steps used for setting up a configuration similar to this one using PowerShell are available at https://blogs.technet.com/b/josebda/archive/2013/01/26/sample-scripts-for-storage-spaces-standalone-hyper-v-over-smb-and-sqlio-testing.aspx

P.P.S.: For further details on how to run SQLIO, you might want to read this post: https://blogs.technet.com/b/josebda/archive/2013/03/28/sqlio-powershell-and-storage-performance-measuring-iops-throughput-and-latency-for-both-local-disks-and-smb-file-shares.aspx